Photo illustration: Facebook Community Standards vs Mastodon Guidelines

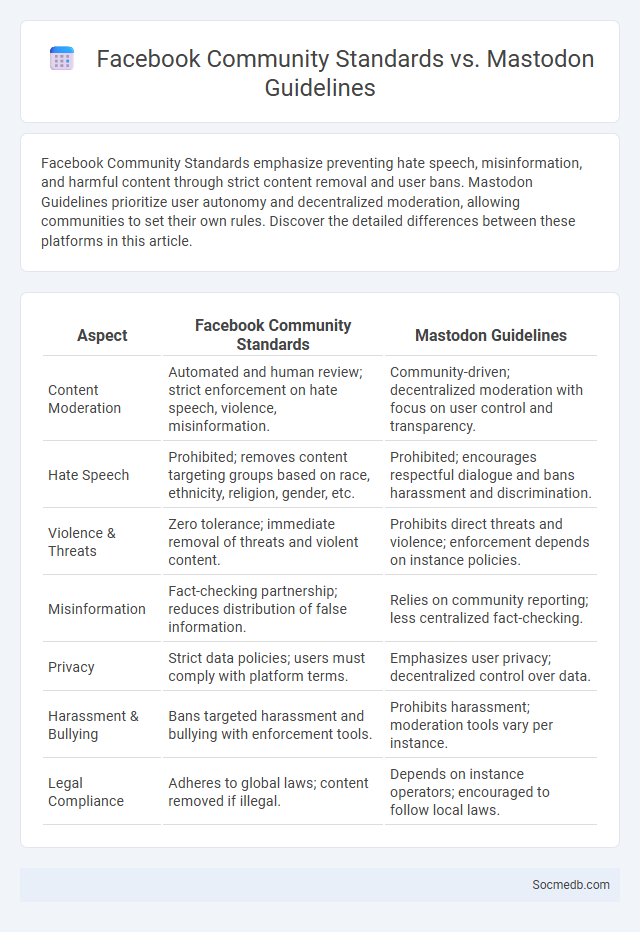

Facebook Community Standards emphasize preventing hate speech, misinformation, and harmful content through strict content removal and user bans. Mastodon Guidelines prioritize user autonomy and decentralized moderation, allowing communities to set their own rules. Discover the detailed differences between these platforms in this article.

Table of Comparison

| Aspect | Facebook Community Standards | Mastodon Guidelines |
|---|---|---|
| Content Moderation | Automated and human review; strict enforcement on hate speech, violence, misinformation. | Community-driven; decentralized moderation with focus on user control and transparency. |
| Hate Speech | Prohibited; removes content targeting groups based on race, ethnicity, religion, gender, etc. | Prohibited; encourages respectful dialogue and bans harassment and discrimination. |
| Violence & Threats | Zero tolerance; immediate removal of threats and violent content. | Prohibits direct threats and violence; enforcement depends on instance policies. |
| Misinformation | Fact-checking partnership; reduces distribution of false information. | Relies on community reporting; less centralized fact-checking. |
| Privacy | Strict data policies; users must comply with platform terms. | Emphasizes user privacy; decentralized control over data. |
| Harassment & Bullying | Bans targeted harassment and bullying with enforcement tools. | Prohibits harassment; moderation tools vary per instance. |
| Legal Compliance | Adheres to global laws; content removed if illegal. | Depends on instance operators; encouraged to follow local laws. |

Overview of Facebook Community Standards

Facebook Community Standards outline the rules that govern user behavior and content on the platform, with the aim of keeping it safe and respectful. These standards address issues such as hate speech, harassment, misinformation, and graphic content, balancing freedom of expression with user protection. Complying with them helps maintain a trustworthy community and reduces your risk of content removal or account suspension.

Introduction to Mastodon Guidelines

Mastodon is a decentralized social media platform emphasizing user control and community moderation through clear and transparent guidelines. These guidelines prioritize respectful interaction, privacy protection, and content moderation tailored by individual server administrators. Understanding Mastodon's community standards is essential for fostering a safe and inclusive environment across its federated network.
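Because each server sets its own rules, it can help to read a server's published rules before joining. The Python sketch below shows one way to do that, assuming the server exposes Mastodon's public `/api/v1/instance/rules` endpoint and returns a JSON list whose entries carry a `text` field. The function names and the `mastodon.example` domain are illustrative placeholders, not part of any official client.

```python
import json
from urllib.request import urlopen


def rules_url(instance_domain: str) -> str:
    """Build the URL of the public rules endpoint for one Mastodon server."""
    return f"https://{instance_domain}/api/v1/instance/rules"


def parse_rules(payload: str) -> list[str]:
    """Extract the human-readable rule text from the endpoint's JSON array."""
    return [rule["text"] for rule in json.loads(payload)]


def fetch_rules(instance_domain: str, timeout: float = 10.0) -> list[str]:
    """Download and parse the rules published by a single server.

    Assumes the server is reachable and has not disabled this endpoint.
    """
    with urlopen(rules_url(instance_domain), timeout=timeout) as response:
        return parse_rules(response.read().decode("utf-8"))
```

Calling `fetch_rules("mastodon.example")` with a real server domain in place of the placeholder should return that server's rules as a list of strings, which makes it easy to compare two servers side by side.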

Defining Community Standards Across Platforms

Ensuring consistent community standards across social media platforms safeguards user experience and promotes respectful interactions. Your content must comply with each platform's guidelines, which typically address hate speech, misinformation, and harassment to maintain a safe digital environment. Clear rules and proactive moderation help foster trust and accountability within online communities.

Key Differences in Content Moderation

Social media content moderation varies significantly in approach, scope, and enforcement policies across platforms such as Facebook, Twitter, and TikTok. Facebook employs AI-driven algorithms combined with human reviewers to detect hate speech and misinformation, while Twitter prioritizes real-time moderation and public transparency through content labels and user appeals. TikTok focuses on community guidelines that emphasize young user protection, using automated filters and a dedicated moderation team to swiftly remove harmful or deceptive content.

Privacy and Data Protection Policies

Social media platforms implement stringent privacy and data protection policies to safeguard your personal information from unauthorized access and misuse. These policies regulate data collection, storage, and sharing practices, ensuring compliance with regulations such as GDPR and CCPA. Understanding these protections helps you maintain control over your digital footprint and enhance online security.

Approaches to Hate Speech and Harassment

Effective approaches to hate speech and harassment on social media involve a combination of advanced AI algorithms for content detection and robust community guidelines enforcement. Platforms implement real-time monitoring systems that analyze text, images, and videos to identify harmful behavior and remove or flag inappropriate content swiftly. Your online safety is enhanced through user reporting tools and proactive moderation, fostering healthier digital interactions.

Handling Misinformation and Fake News

Handling misinformation and fake news on social media requires critical evaluation of sources and verification of facts before sharing content. You should utilize fact-checking tools and rely on credible news outlets to discern truth from falsehoods online. Encouraging media literacy and reporting suspicious posts helps reduce the spread of deceptive information across digital platforms.

User Empowerment and Self-Governance

Social media platforms enhance user empowerment by providing tools for content creation, privacy control, and direct interaction with communities, fostering a sense of agency and personal expression. Self-governance mechanisms, such as user-led moderation, community guidelines, and decentralized decision-making processes, promote accountability and trust within digital networks. These features collectively support a participatory ecosystem where users can shape platform norms and influence online behavior effectively.

Appeals and Enforcement Mechanisms

Social media platforms use enforcement and appeals mechanisms to maintain community standards. Enforcement relies on content moderation tools, automated filters, and user reporting systems to address violations, while appeals let users contest a decision. Understanding how a platform's appeals process works can help you navigate disputes and protect your online presence.

Implications for Online Communities

Social media platforms significantly impact online communities by shaping collective identities and facilitating real-time interactions among diverse members. The proliferation of user-generated content fosters knowledge sharing, but also raises challenges related to misinformation, privacy breaches, and digital harassment. Algorithms that prioritize engagement often create echo chambers, influencing social dynamics and community cohesion within digital environments.

socmedb.com
