Photo illustration: Facebook Community Standards vs Medium Rules

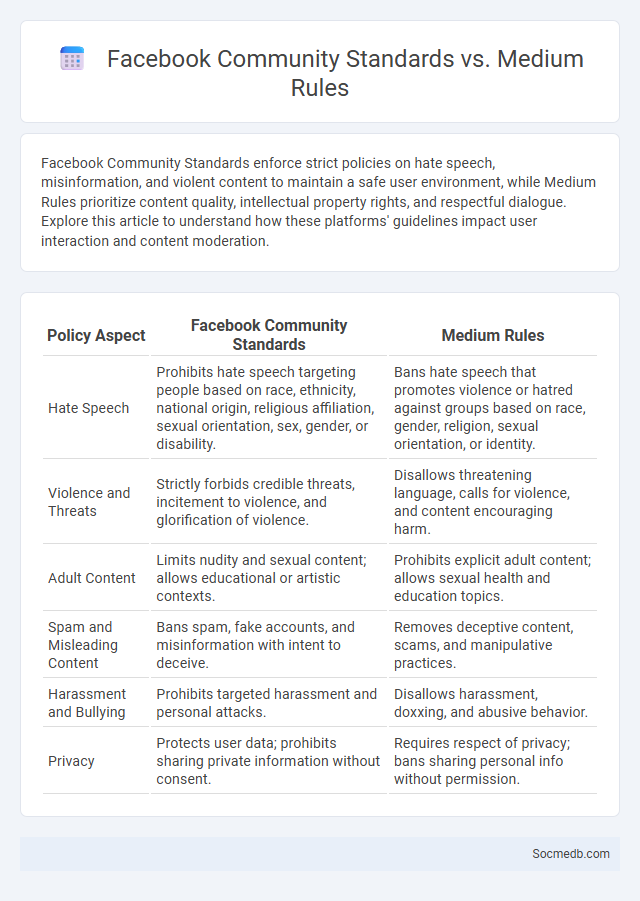

Facebook's Community Standards set strict policies on hate speech, misinformation, and violent content to keep users safe, while the Medium Rules prioritize content quality, intellectual property rights, and respectful dialogue. This article compares how each platform's guidelines shape user interaction and content moderation.

Table of Comparison

| Policy Aspect | Facebook Community Standards | Medium Rules |
|---|---|---|
| Hate Speech | Prohibits hate speech targeting people based on race, ethnicity, national origin, religious affiliation, sexual orientation, sex, gender, or disability. | Bans hate speech that promotes violence or hatred against groups based on race, gender, religion, sexual orientation, or identity. |
| Violence and Threats | Strictly forbids credible threats, incitement to violence, and glorification of violence. | Disallows threatening language, calls for violence, and content encouraging harm. |
| Adult Content | Limits nudity and sexual content; allows educational or artistic contexts. | Prohibits explicit adult content; allows sexual health and education topics. |
| Spam and Misleading Content | Bans spam, fake accounts, and misinformation with intent to deceive. | Removes deceptive content, scams, and manipulative practices. |
| Harassment and Bullying | Prohibits targeted harassment and personal attacks. | Disallows harassment, doxxing, and abusive behavior. |
| Privacy | Protects user data; prohibits sharing private information without consent. | Requires respect for privacy; bans sharing personal information without permission. |

Introduction to Platform Community Guidelines

Platform community guidelines set rules for content, behavior, and interactions in order to maintain a safe, respectful online environment. Understanding them helps you avoid violations that can lead to content removal or account suspension. Complying with these standards also supports constructive engagement and protects your reputation on the platform.

Overview of Facebook Community Standards

Facebook's Community Standards prohibit hate speech, violence, and misinformation in order to keep the platform safe and respectful. They also cover harassment, other harmful behavior, and graphic content, and they are enforced through a combination of automated systems and human review. Facebook updates these rules over time in response to new online threats and to maintain platform integrity.

Key Points of Medium's Rules and Guidelines

Medium's Rules and Guidelines emphasize creating respectful and constructive content, prohibiting harassment, hate speech, and misinformation to maintain a safe community environment. You must ensure your posts adhere to copyright laws and avoid spam or deceptive practices that could undermine trust. Consistently following these key points fosters engagement and preserves the platform's integrity.

Comparison: Facebook vs Medium Policies

Facebook's content policy centers on community standards aimed at preventing hate speech, misinformation, and harmful content across a diverse global user base of over 2.9 billion monthly active users. Medium's content guidelines prioritize quality writing and author accountability, allowing more nuanced discussion of controversial topics while still prohibiting harassment and plagiarism. The key difference is scale and method: Facebook relies on broad, largely algorithm-driven moderation for a very wide audience, while Medium takes an editorial approach that favors thoughtful, long-form writing and enforces its rules more selectively.

Content Moderation Approaches

Effective content moderation on social media combines automated algorithms with human reviewers to identify and remove harmful or inappropriate posts. Machine learning models scan text, images, and videos for likely policy violations, while human moderators supply contextual judgment and handle ambiguous cases. Because online behavior and threats keep changing, platforms must update these techniques continuously to preserve safety and user trust.
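To make the division of labor between automated systems and human reviewers concrete, here is a minimal Python sketch of threshold-based routing. It assumes a hypothetical classifier that outputs a violation score between 0 and 1; the thresholds, the `Post` type, and the `route` function are illustrative assumptions, not Facebook's or Medium's actual systems.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    REMOVE = "remove"
    HUMAN_REVIEW = "human_review"
    ALLOW = "allow"


@dataclass
class Post:
    post_id: str
    violation_score: float  # hypothetical classifier output in [0, 1]


# Hypothetical thresholds; platforms do not publish exact values.
AUTO_REMOVE_THRESHOLD = 0.95
HUMAN_REVIEW_THRESHOLD = 0.60


def route(post: Post) -> Decision:
    """Decide what happens to a post based on its estimated violation score."""
    if not 0.0 <= post.violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if post.violation_score >= AUTO_REMOVE_THRESHOLD:
        return Decision.REMOVE  # confident enough to act automatically
    if post.violation_score >= HUMAN_REVIEW_THRESHOLD:
        return Decision.HUMAN_REVIEW  # ambiguous: needs contextual judgment
    return Decision.ALLOW
```

In this design, high-confidence cases are handled automatically, while the uncertain middle band goes to people, which is where contextual judgment matters most.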

User Behavior Restrictions

User behavior restrictions on social media platforms regulate content sharing, prevent harassment, and protect community safety. Common restrictions include limits on posting frequency, content policies, and automated detection of inappropriate behavior or misinformation. Enforcement mechanisms such as content removal, temporary bans, and account suspensions aim to keep the user experience positive and uphold platform guidelines.
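A posting-frequency limit is one of the simpler restrictions to picture. The sketch below assumes a sliding-window rule (at most `max_posts` posts in any `window_seconds` period); the `PostRateLimiter` class and its parameters are hypothetical, and neither platform's actual limits are implied.

```python
from collections import deque


class PostRateLimiter:
    """Hypothetical sliding-window limit: at most max_posts within any window_seconds span."""

    def __init__(self, max_posts: int, window_seconds: float) -> None:
        self.max_posts = max_posts
        self.window_seconds = window_seconds
        self._timestamps = deque()  # times of accepted posts, oldest first

    def try_post(self, now: float) -> bool:
        # Forget accepted posts that have fallen out of the window.
        while self._timestamps and now - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        if len(self._timestamps) >= self.max_posts:
            # Rejected attempts are not recorded, so they do not extend the restriction.
            return False
        self._timestamps.append(now)
        return True
```

For example, with `PostRateLimiter(3, 60)`, posts at seconds 0, 10, and 20 are accepted, a fourth post at second 30 is rejected, and a post at second 61 is accepted again because the first post has left the window.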

Handling Hate Speech and Harassment

Handling hate speech and harassment on social media requires moderation tools that identify and remove harmful content quickly. Platforms typically combine artificial intelligence with human reviewers to detect offensive language, enforce community guidelines, and act on user reports. Clear policies and effective blocking and muting options give users more control and make the environment safer for everyone.

Consequences of Rule Violations

Violating social media rules can result in account suspension, content removal, and limited reach, significantly impacting your online presence and engagement. Platforms enforce strict policies to maintain community standards, leading to penalties that may include temporary bans or permanent account deletion. Understanding these consequences helps you navigate social media responsibly and protect your digital reputation.

Appeals and Enforcement Mechanisms

Social media platforms use appeals and enforcement mechanisms to uphold community guidelines and manage content violations. Users can challenge content removals or account suspensions through structured appeal processes, which are intended to make enforcement more transparent and fair. Enforcement itself relies on automated detection, human moderation, and graduated sanctions that escalate with repeated violations to deter harmful behavior and protect platform integrity.
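Graduated sanctions and appeals can be modeled as a strike count that maps to escalating penalties, with a successful appeal removing the corresponding strike. The ladder below (`PENALTY_LADDER`), along with `penalty_for` and `Account`, is a hypothetical illustration rather than either platform's real policy; the sketch also ignores severity and treats every violation as one strike.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical escalation ladder: (minimum strikes, penalty).
PENALTY_LADDER = [
    (1, "warning"),
    (2, "24-hour posting restriction"),
    (3, "7-day suspension"),
    (5, "permanent ban"),
]


def penalty_for(strikes: int) -> str:
    """Return the most severe penalty whose strike threshold has been reached."""
    penalty = "none"
    for threshold, name in PENALTY_LADDER:
        if strikes >= threshold:
            penalty = name
    return penalty


@dataclass
class Account:
    strikes: list[str] = field(default_factory=list)  # IDs of upheld violations

    def record_violation(self, violation_id: str) -> str:
        self.strikes.append(violation_id)
        return penalty_for(len(self.strikes))

    def resolve_appeal(self, violation_id: str, upheld: bool) -> str:
        # An overturned decision removes its strike; an upheld one changes nothing.
        if not upheld and violation_id in self.strikes:
            self.strikes.remove(violation_id)
        return penalty_for(len(self.strikes))
```

For instance, a second violation moves an account from a warning to a 24-hour restriction, and overturning that second decision on appeal returns it to the warning level.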

Evolving Community Standards: Future Trends

Community standards on social media are evolving alongside advances in artificial intelligence and in the moderation of user-generated content, with the goal of safer, more inclusive online environments. Your interactions are likely to be shaped by real-time content analysis and adaptive policies that respond to emerging social issues and cultural sensitivities. These trends underline the importance of transparency, accountability, and user empowerment in maintaining digital trust and engagement.

socmedb.com