Photo illustration: Facebook Community Standards vs Threads Community Guidelines

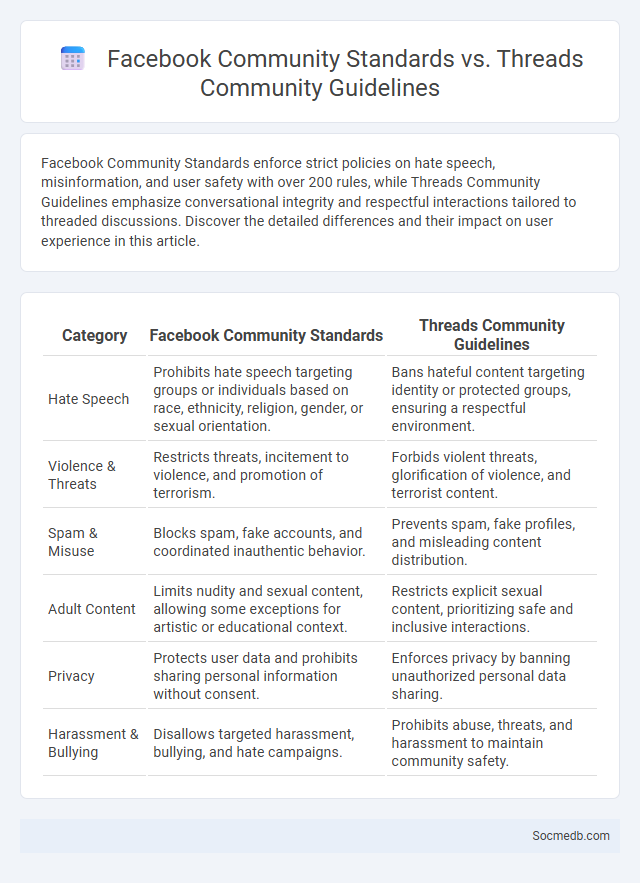

Facebook Community Standards enforce strict policies on hate speech, misinformation, and user safety with over 200 rules, while Threads Community Guidelines emphasize conversational integrity and respectful interactions tailored to threaded discussions. Discover the detailed differences and their impact on user experience in this article.

Table of Comparison

| Category | Facebook Community Standards | Threads Community Guidelines |
|---|---|---|
| Hate Speech | Prohibits hate speech targeting groups or individuals based on race, ethnicity, religion, gender, or sexual orientation. | Bans hateful content targeting identity or protected groups, ensuring a respectful environment. |
| Violence & Threats | Restricts threats, incitement to violence, and promotion of terrorism. | Forbids violent threats, glorification of violence, and terrorist content. |
| Spam & Misuse | Blocks spam, fake accounts, and coordinated inauthentic behavior. | Prevents spam, fake profiles, and misleading content distribution. |
| Adult Content | Limits nudity and sexual content, allowing some exceptions for artistic or educational context. | Restricts explicit sexual content, prioritizing safe and inclusive interactions. |
| Privacy | Protects user data and prohibits sharing personal information without consent. | Enforces privacy by banning unauthorized personal data sharing. |
| Harassment & Bullying | Disallows targeted harassment, bullying, and hate campaigns. | Prohibits abuse, threats, and harassment to maintain community safety. |

Introduction to Social Media Community Guidelines

Social media community guidelines establish the rules and standards to maintain a respectful and safe online environment for all users. These guidelines cover appropriate behavior, content sharing, privacy protection, and consequences of violations, ensuring your interactions remain positive and secure. Understanding and following these policies helps you contribute to a trustworthy digital community.

Overview of Facebook Community Standards

Facebook Community Standards establish comprehensive guidelines designed to maintain a safe and respectful environment across the platform. These standards address areas such as hate speech, violence, harassment, misinformation, and graphic content, ensuring content aligns with legal and ethical norms. Facebook employs advanced AI and human reviewers to enforce these rules, balancing user expression with the protection of the community.

Key Principles of Threads Community Guidelines

Threads Community Guidelines emphasize respectful communication, ensuring that all users engage without harassment, hate speech, or harmful content. Upholding privacy and security is crucial, with strict rules against sharing personal information and promoting misinformation. The platform also encourages authentic interactions, discouraging spam, fake accounts, and content manipulation to maintain a trustworthy community environment.

Definition and Scope of General Community Standards

General Community Standards define the rules and guidelines that govern acceptable behavior and content on social media platforms to ensure a safe and respectful online environment. These standards cover a broad scope, including prohibitions against hate speech, harassment, misinformation, explicit content, and illegal activities. Social media companies enforce these guidelines through content moderation policies, user reporting systems, and automated detection tools to maintain community trust and compliance with legal requirements.

Content Moderation: Differences and Similarities

Content moderation on social media involves reviewing and managing user-generated content to enforce platform policies, ensuring a safe and respectful online environment. Platforms employ varied moderation strategies (automated algorithms, human moderators, or a hybrid of the two), but they all aim to balance freedom of expression with the removal of harmful or inappropriate content. Similarities across platforms include adherence to community guidelines and legal regulations; differences arise in the scale, transparency, and cultural sensitivity of content review processes.

Enforcement Mechanisms Across Platforms

Enforcement mechanisms across social media platforms include automated content moderation tools, user reporting systems, and human review teams to ensure adherence to community guidelines. Platforms like Facebook, Twitter, and Instagram implement AI-driven algorithms to detect and remove harmful content such as hate speech, misinformation, and graphic violence promptly. Collaboration with third-party fact-checkers and regular transparency reports enhance accountability and improve the effectiveness of these enforcement strategies.
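
To make the interplay of automated tools, user reports, and human review more concrete, here is a minimal sketch of how a hybrid pipeline might route a post. It is an illustration only, not how Facebook, Threads, or any other platform actually works: the `Post` fields, the `route` function, and all three thresholds are hypothetical names and values chosen for this example.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
REMOVE_THRESHOLD = 0.9   # classifier is very confident the post is harmful
REVIEW_THRESHOLD = 0.5   # classifier is unsure, so a person should look
REPORTS_FOR_REVIEW = 3   # enough user reports to trigger a human check


@dataclass
class Post:
    text: str
    harm_score: float      # assumed output of an automated classifier, 0.0 to 1.0
    user_reports: int = 0  # number of times users have reported the post


def route(post: Post) -> str:
    """Return 'remove', 'human_review', or 'allow' for a post."""
    # High-confidence automated detections are removed without waiting.
    if post.harm_score >= REMOVE_THRESHOLD:
        return "remove"
    # Uncertain scores or repeated user reports go to human reviewers.
    if post.harm_score >= REVIEW_THRESHOLD or post.user_reports >= REPORTS_FOR_REVIEW:
        return "human_review"
    # Everything else stays up.
    return "allow"
```

In this sketch, a post with a low automated score can still reach a human reviewer once enough users report it, which is one way user reporting can complement automated detection.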

User Rights and Responsibilities

Your social media experience is shaped by a balance between user rights and responsibilities, including privacy protection, content ownership, and respectful interaction. Platforms empower you with tools to control data sharing and manage account security while expecting adherence to community guidelines and legal standards. Understanding these dynamics ensures a safer, more positive online environment for everyone.

Handling Hate Speech and Misinformation

Social media platforms implement advanced algorithms and AI-driven tools to detect and remove hate speech, reducing its spread and impact on users. Fact-checking partnerships and user-reporting mechanisms play a crucial role in identifying and combating misinformation in real time. Policies enforcing content moderation and community guidelines help create safer online environments and promote accurate information dissemination.

Privacy and Data Protection Policies

Social media platforms implement stringent privacy and data protection policies to safeguard your personal information from unauthorized access and misuse. These policies outline how data is collected, stored, and shared, and are designed to comply with regulations like GDPR and CCPA. Understanding these measures empowers you to control your digital footprint and maintain online security effectively.

Future Trends in Community Guidelines Enforcement

Community guidelines enforcement is likely to rely increasingly on advanced AI algorithms and machine learning to detect harmful content more accurately and swiftly. Platforms are expected to combine real-time monitoring with user feedback to maintain safer digital environments and keep your online interactions in line with evolving standards. Transparent reporting and adaptive policies can empower communities while balancing freedom of expression with safety.

socmedb.com
