Photo illustration: Facebook Community Standards vs TikTok Community Guidelines

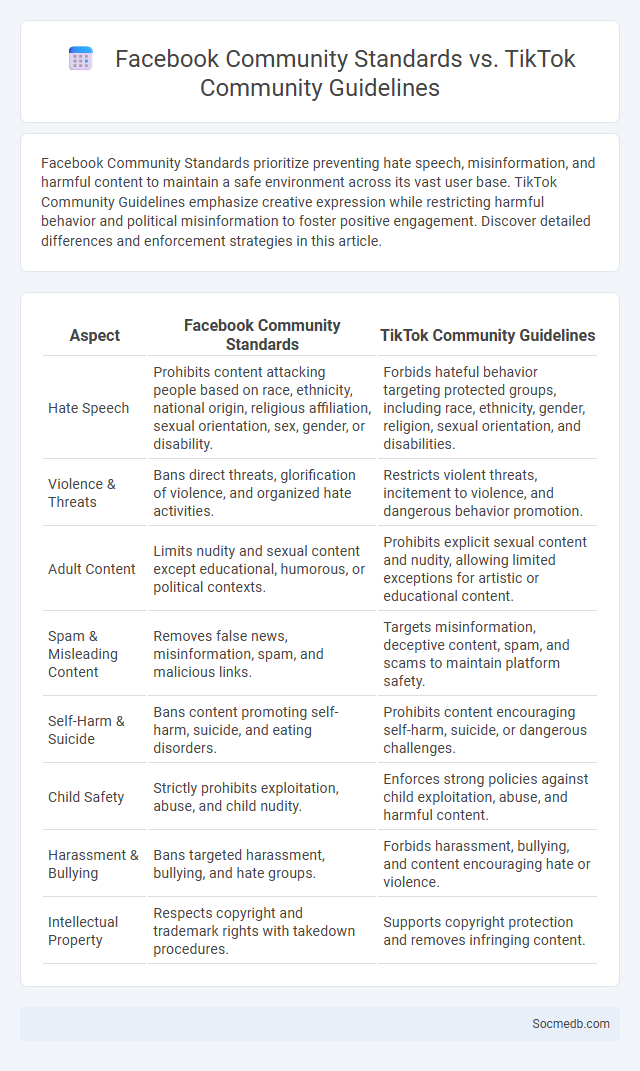

Facebook Community Standards prioritize preventing hate speech, misinformation, and harmful content to maintain a safe environment across its vast user base. TikTok Community Guidelines emphasize creative expression while restricting harmful behavior and political misinformation to foster positive engagement. Discover detailed differences and enforcement strategies in this article.

Table of Comparison

| Aspect | Facebook Community Standards | TikTok Community Guidelines |
|---|---|---|
| Hate Speech | Prohibits content attacking people based on race, ethnicity, national origin, religious affiliation, sexual orientation, sex, gender, or disability. | Forbids hateful behavior targeting protected groups, including race, ethnicity, gender, religion, sexual orientation, and disabilities. |
| Violence & Threats | Bans direct threats, glorification of violence, and organized hate activities. | Restricts violent threats, incitement to violence, and promotion of dangerous behavior. |
| Adult Content | Limits nudity and sexual content except in educational, humorous, or political contexts. | Prohibits explicit sexual content and nudity, with limited exceptions for artistic or educational content. |
| Spam & Misleading Content | Removes spam and malicious links; generally reduces the distribution of false news rather than removing it, except misinformation that risks real-world harm. | Targets misinformation, deceptive content, spam, and scams to maintain platform safety. |
| Self-Harm & Suicide | Bans content promoting self-harm, suicide, and eating disorders. | Prohibits content encouraging self-harm, suicide, or dangerous challenges. |
| Child Safety | Strictly prohibits child exploitation, abuse, and child nudity. | Enforces strong policies against child exploitation, abuse, and harmful content. |
| Harassment & Bullying | Bans targeted harassment, bullying, and hate groups. | Forbids harassment, bullying, and content encouraging hate or violence. |
| Intellectual Property | Respects copyright and trademark rights, with takedown procedures. | Supports copyright protection and removes infringing content. |

Introduction to Community Standards Across Social Platforms

Social media platforms enforce community standards to maintain safe and respectful environments for users. These guidelines regulate content related to hate speech, harassment, misinformation, and explicit material to protect individuals and promote positive interactions. Understanding these standards helps you navigate and contribute responsibly across different social networks.

Overview of Facebook Community Standards

Facebook Community Standards outline rules designed to maintain a safe and respectful environment by prohibiting hate speech, harassment, and explicit content. These guidelines emphasize transparency, aiming to balance free expression with protecting users from harmful behavior. Enforcement mechanisms include content removal, account restrictions, and appeals processes to uphold platform integrity.

TikTok Community Guidelines: Key Principles

TikTok Community Guidelines emphasize maintaining a safe, respectful, and authentic environment by prohibiting hate speech, harassment, and misinformation. The platform sets clear rules against harmful content such as violence, nudity, and illegal activities to protect users of all ages. Algorithmic moderation and community reporting help enforce these rules, with the aim of promoting positive interactions and creative expression across diverse communities.

Understanding Generic Community Standards

Generic community standards on social media platforms establish guidelines for acceptable behavior, including prohibitions against hate speech, harassment, and misinformation to foster a safe online environment. These standards often emphasize respect for user privacy, the importance of authentic content, and mechanisms for reporting violations. Understanding these norms enables users to navigate platforms responsibly while maintaining a positive digital culture.

Comparing Policy Goals: Facebook vs TikTok

Facebook's content moderation policies focus on combating misinformation and hate speech, and the platform pairs them with privacy settings intended to protect user data. TikTok emphasizes creative expression and youth engagement, addressing digital safety through algorithmic content curation and its community guidelines. Your experience on each platform reflects these distinct policy goals and how each one balances user freedom with platform responsibility.

Enforcement Mechanisms: Facebook vs TikTok

Facebook combines AI-based detection with human moderators to find and remove content that violates its Community Standards. TikTok likewise pairs automated content filtering with human review, paying particular attention to misinformation and to material inappropriate for younger audiences. Because each platform weighs these tools differently, your experience of enforcement can vary considerably between them.

Content Moderation Approach: Similarities and Differences

Content moderation approaches across social media platforms share core strategies, such as automated filtering and human review, to detect and remove harmful content. The platforms differ in how strictly they enforce their policies, how transparent they are about decisions, and how users can appeal, all of which affect how your content is reviewed. Understanding these differences helps you follow platform-specific rules while engaging effectively online.

Impact on User Experience

Social media platforms significantly shape user experience by offering personalized content through advanced algorithms that analyze user behavior and preferences. Interactive features such as real-time comments, shares, and likes enhance engagement and foster a sense of community. However, algorithm-driven content can also create echo chambers, influencing user perception and emotional well-being.

Criticisms and Challenges Faced

Social media platforms face significant criticism over privacy, the spread of misinformation, and their impact on users' mental health. Algorithmic biases can amplify harmful content, complicating content moderation and raising questions of ethical responsibility. Understanding these issues helps you use social media more consciously and protect your digital well-being.

Future Trends in Community Standards

Community standards are likely to rely increasingly on AI-driven content moderation to identify harmful or misleading posts efficiently. Greater user involvement in policy creation could give you more of a voice and make enforcement more transparent. Platforms may also adopt stricter guidelines on misinformation, hate speech, and privacy, with the goal of creating more accountable and inclusive online environments.

socmedb.com