Photo illustration: Facebook vs AI Detection Tools

Facebook's advanced algorithms for content moderation increasingly integrate AI detection tools to identify harmful or misleading posts with higher accuracy. Explore this article to understand how Facebook leverages AI detection technology to enhance user experience and safety.

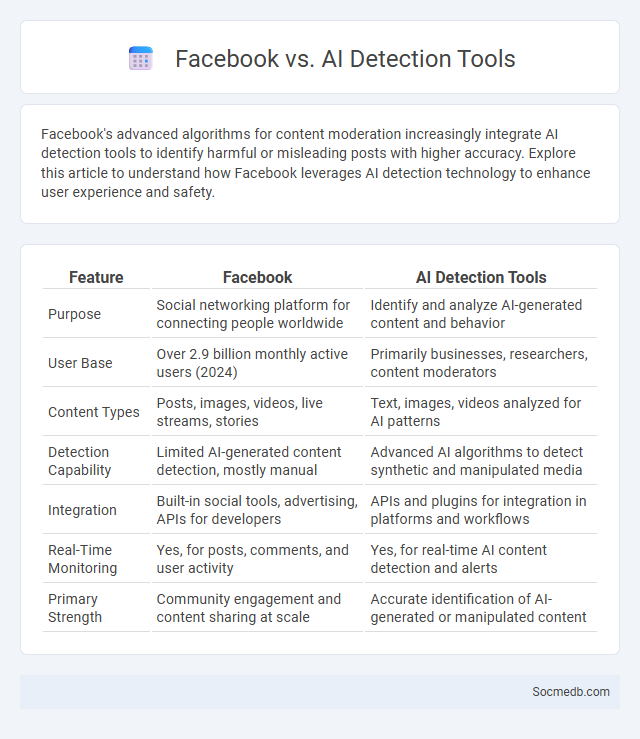

Table of Comparison

| Feature | Facebook | AI Detection Tools |
|---|---|---|
| Purpose | Social networking platform for connecting people worldwide | Identify and analyze AI-generated content and behavior |
| User Base | Over 2.9 billion monthly active users (2024) | Primarily businesses, researchers, content moderators |
| Content Types | Posts, images, videos, live streams, stories | Text, images, videos analyzed for AI patterns |
| Detection Capability | Limited AI-generated content detection, mostly manual | Advanced AI algorithms to detect synthetic and manipulated media |
| Integration | Built-in social tools, advertising, APIs for developers | APIs and plugins for integration in platforms and workflows |
| Real-Time Monitoring | Yes, for posts, comments, and user activity | Yes, for real-time AI content detection and alerts |
| Primary Strength | Community engagement and content sharing at scale | Accurate identification of AI-generated or manipulated content |

Introduction: Understanding Fake News in the Digital Age

Fake news in the digital age refers to intentionally fabricated information designed to mislead audiences on social media platforms like Facebook, Twitter, and Instagram. The rapid dissemination of false content leverages algorithms that prioritize engagement over accuracy, amplifying misinformation across millions of users globally. Recognizing the mechanisms and impact of fake news is crucial for protecting online communities and promoting digital literacy.

Facebook’s Battle Against Online Misinformation

Facebook employs advanced AI algorithms and human fact-checkers to combat the spread of online misinformation, aiming to reduce the reach of false content. The platform prioritizes credible sources and labels disputed information to help protect your news feed from deceptive posts. Ongoing updates to its policies and tools reinforce Facebook's commitment to creating a safer digital environment.

Rise of AI Detection Tools for Fake News

The rise of AI detection tools has significantly enhanced the ability to identify and combat fake news on social media platforms, improving content authenticity and user trust. These algorithms analyze patterns, sources, and linguistic cues to flag misleading or fabricated information. Protecting your digital experience means using these AI technologies to check the accuracy of shared content and limit the harm misinformation causes.

How Facebook Uses AI to Combat Fake News

Facebook leverages advanced artificial intelligence algorithms to detect and remove fake news by analyzing the credibility of sources and flagging misleading content in real time. Machine learning models evaluate patterns in posts, images, and videos to identify misinformation, reducing its spread across the platform. The integration of AI with human fact-checkers enhances accuracy in combating false information and maintaining a trustworthy social media environment.

Comparing AI Detection Tools: Accuracy and Limitations

AI detection tools on social media vary significantly in accuracy, with top performers reportedly achieving up to 95% precision in identifying automated or bot-generated content. Precision measures only how often flagged content is truly automated; it says nothing about how much such content goes undetected. Limitations include difficulties in detecting sophisticated deepfake videos and contextually nuanced misinformation, which often require human review for confirmation. Users and platforms must balance reliance on AI with manual oversight to effectively combat social media manipulation.
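To make the accuracy figures concrete, here is a minimal sketch of how precision and recall are computed for a detection tool. The counts are hypothetical and invented purely for illustration; they are not measurements of any real product.

```python
from dataclasses import dataclass


@dataclass
class DetectionCounts:
    true_positives: int   # automated content correctly flagged
    false_positives: int  # genuine content wrongly flagged
    false_negatives: int  # automated content the tool missed


def precision(c: DetectionCounts) -> float:
    """Share of flagged items that really were automated content."""
    flagged = c.true_positives + c.false_positives
    return c.true_positives / flagged if flagged else 0.0


def recall(c: DetectionCounts) -> float:
    """Share of all automated content that the tool managed to flag."""
    actual = c.true_positives + c.false_negatives
    return c.true_positives / actual if actual else 0.0


# Hypothetical evaluation counts, made up for this example only.
counts = DetectionCounts(true_positives=95, false_positives=5, false_negatives=45)
print(f"precision={precision(counts):.2f} recall={recall(counts):.2f}")
# prints: precision=0.95 recall=0.68
```

In this made-up case the tool is right 95% of the time when it flags something, yet still misses roughly a third of the automated content, which is one reason human review remains part of the process.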

Challenges Faced by Facebook in Misinformation Control

Facebook encounters significant challenges in misinformation control due to the vast volume of content shared daily, making real-time monitoring complex. Algorithms designed to identify false information often struggle with context, leading to either over-censorship or under-detection. If you rely on Facebook for news, be aware of these limitations and critically evaluate the information you encounter on the platform.

Strengths and Weaknesses of AI Solutions

AI solutions in social media enhance user engagement through personalized content recommendations and advanced sentiment analysis, improving overall user experience and targeted advertising efficiency. However, weaknesses include potential algorithmic bias, privacy concerns, and the spread of misinformation due to automated content moderation limitations. Balancing AI-driven automation with human oversight remains crucial to maximizing benefits while minimizing risks on social media platforms.

Human Moderators vs. AI: Finding the Right Balance

Human moderators bring nuanced understanding and empathy to social media content review, effectively handling context-sensitive issues that AI often misinterprets. AI-powered systems excel in processing large volumes of data quickly, identifying patterns, and flagging potentially harmful content with consistency and speed. Combining human judgment with AI scalability creates a balanced approach, enhancing accuracy and efficiency in maintaining safe and engaging online communities.
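One common way to combine the two is to let a model's confidence score decide which posts are handled automatically and which go to a person. The sketch below assumes a hypothetical classifier that outputs a score between 0 and 1; the thresholds and the `route` function are illustrative choices, not Facebook's actual pipeline.

```python
from typing import Literal

Decision = Literal["auto_flag", "human_review", "allow"]

# Hypothetical thresholds; a real system would tune these on labeled data.
AUTO_FLAG_THRESHOLD = 0.90
REVIEW_THRESHOLD = 0.50


def route(score: float) -> Decision:
    """Route a post based on a model's misinformation score in [0, 1]."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= AUTO_FLAG_THRESHOLD:
        return "auto_flag"      # model is confident: act automatically
    if score >= REVIEW_THRESHOLD:
        return "human_review"   # ambiguous: send to a human moderator
    return "allow"              # low risk: no action


# Hypothetical posts and scores, invented for illustration.
for post_id, score in [("post-1", 0.97), ("post-2", 0.62), ("post-3", 0.08)]:
    print(post_id, route(score))
```

Raising `REVIEW_THRESHOLD` reduces moderator workload at the cost of letting more borderline content through, so the two thresholds express the balance between scale and judgment described above.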

The Future of Fake News Prevention: Trends & Innovations

Advancements in artificial intelligence and machine learning enable real-time detection and removal of fake news on social media platforms, enhancing content verification accuracy. Blockchain technology can provide transparent, tamper-evident records of where a news item originated, which may help reduce the spread of misinformation. Collaboration between tech companies, fact-checkers, and governments fosters innovative tools and policies to combat disinformation effectively in evolving digital landscapes.
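The core idea behind blockchain-style verification is a hash chain: each record stores the hash of the previous one, so altering any earlier entry becomes detectable. The following is a simplified, single-machine sketch of that idea only; it is not a distributed ledger, and the field names and the `example-newsroom` source are assumptions made for illustration.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def record_hash(record: dict) -> str:
    """Deterministic SHA-256 hash of a record's JSON form."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(chain: list[dict], source: str, content: str) -> None:
    """Add a provenance record that links back to the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    body = {
        "source": source,
        "content_sha256": hashlib.sha256(content.encode("utf-8")).hexdigest(),
        "prev_hash": prev_hash,
    }
    chain.append({**body, "hash": record_hash(body)})


def verify(chain: list[dict]) -> bool:
    """Return True only if every entry is intact and correctly linked."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or record_hash(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


ledger: list[dict] = []
append_entry(ledger, "example-newsroom", "Original article text")
append_entry(ledger, "example-newsroom", "Follow-up article text")
print(verify(ledger))              # True: chain is intact

ledger[0]["source"] = "impostor"   # tamper with the first record
print(verify(ledger))              # False: stored hash no longer matches
```

A record like this shows that an entry has not been altered since it was logged; it does not, by itself, establish that the original content was accurate.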

Conclusion: Toward Trustworthy Social Media Platforms

Building trustworthy social media platforms requires robust content moderation algorithms, transparent data privacy policies, and user-centric design that prioritizes safety and authenticity. Emerging technologies such as AI-driven fact-checking and decentralized networks can significantly reduce misinformation and enhance accountability. Continuous collaboration among developers, regulators, and users will drive innovation toward platforms that foster genuine engagement and protect digital rights.

socmedb.com
