Photo illustration: Facebook vs TikTok Content Moderation

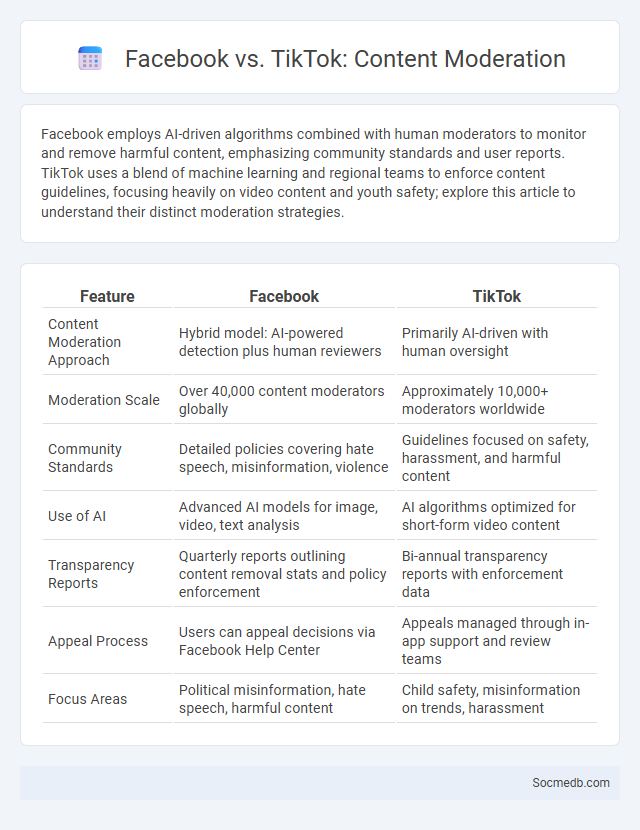

Facebook combines AI-driven detection with human moderators to find and remove harmful content, relying on its community standards and user reports. TikTok pairs machine learning with regional review teams to enforce its content guidelines, with a strong focus on video content and youth safety. This article compares the two platforms' moderation strategies.

Comparison Table

| Feature | Facebook | TikTok |
|---|---|---|
| Content Moderation Approach | Hybrid model: AI-powered detection plus human reviewers | Primarily AI-driven with human oversight |
| Moderation Scale | Over 40,000 content moderators globally | Approximately 10,000+ moderators worldwide |
| Community Standards | Detailed policies covering hate speech, misinformation, violence | Guidelines focused on safety, harassment, and harmful content |
| Use of AI | Advanced AI models for image, video, text analysis | AI algorithms optimized for short-form video content |
| Transparency Reports | Quarterly reports outlining content removal stats and policy enforcement | Bi-annual transparency reports with enforcement data |
| Appeal Process | Users can appeal decisions via Facebook Help Center | Appeals managed through in-app support and review teams |
| Focus Areas | Political misinformation, hate speech, harmful content | Child safety, misinformation on trends, harassment |

Overview of Facebook and TikTok Content Moderation

Facebook employs advanced AI algorithms combined with human reviewers to monitor and remove harmful content, focusing on hate speech, misinformation, and graphic violence. TikTok uses a combination of automated detection and community guidelines enforcement to regulate short-form videos, emphasizing the protection of young users from inappropriate or harmful material. Both platforms continuously update their moderation policies to address emerging challenges in user-generated content.

Core Principles of Content Moderation

Content moderation on social media is guided by core principles such as transparency, consistency, and fairness to ensure user safety and platform integrity. Moderators apply clear community guidelines to identify and remove harmful content, including hate speech, misinformation, and violent extremism. Automated tools combined with human review enhance efficiency, balancing free expression with the need to reduce online harm.

Algorithms vs Human Moderators: A Comparative Analysis

Social media platforms rely heavily on algorithms to filter and prioritize vast amounts of content, both to screen for likely violations and to personalize feeds and recommendations in real time. Human moderators add nuanced judgment: they interpret context, detect subtle violations, and handle sensitive cases that algorithms might overlook or misclassify. The balance between automated systems and human oversight largely determines how safe and relevant your online experience is.
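The split between automated filtering and human judgment described above can be sketched as a simple triage step. This is a minimal illustration, not how Facebook or TikTok actually route content: the `Post` type, the `route` function, the queue names, and both thresholds are assumptions made up for the example.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
AUTO_REMOVE_THRESHOLD = 0.95   # very confident violations are removed automatically
HUMAN_REVIEW_THRESHOLD = 0.60  # uncertain cases are sent to a human moderator


@dataclass
class Post:
    post_id: str
    violation_score: float  # assumed classifier output in the range [0, 1]


def route(post: Post) -> str:
    """Return the name of the queue a post is sent to, based on its score."""
    if post.violation_score >= AUTO_REMOVE_THRESHOLD:
        return "auto_remove"
    if post.violation_score >= HUMAN_REVIEW_THRESHOLD:
        return "human_review"
    return "allow"


if __name__ == "__main__":
    for post in [Post("a", 0.98), Post("b", 0.72), Post("c", 0.10)]:
        print(post.post_id, route(post))
```

Lowering the human-review threshold sends more borderline posts to moderators, trading reviewer workload for fewer missed violations.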

Policies on Hate Speech and Misinformation

Social media platforms enforce strict policies on hate speech and misinformation to create safer online environments and protect users from harmful content. Knowing these policies helps you stay within the rules, interact respectfully, and avoid spreading false information. Monitoring tools and content moderation algorithms play a critical role in detecting and removing violations swiftly.

Handling Political Content and Election Integrity

Handling political content on social media requires robust fact-checking systems and transparent content moderation policies to combat misinformation effectively. Platforms must prioritize election integrity by implementing real-time monitoring tools to detect and flag false claims or coordinated disinformation campaigns. Protecting your access to accurate information supports fair democratic participation and maintains public trust in online environments.

Child Safety and Community Guidelines Enforcement

Social media platforms implement child safety measures and strict community guidelines enforcement to protect minors from harmful content and online predators. These measures include moderation tools, age verification systems, and real-time content filtering algorithms designed to identify and remove inappropriate material quickly. Enforcing these policies consistently fosters a safer digital environment where children can interact responsibly and securely.

Transparency and User Appeals Processes

Transparency in social media platforms enhances user trust by clearly outlining content moderation policies and data usage practices. Your ability to appeal content decisions through structured review processes ensures fair treatment and accountability. Platforms prioritizing transparent communication and accessible user appeals foster a safer and more respectful online community.

Global Challenges in Content Moderation

Social media platforms face significant global challenges in content moderation, including the detection and removal of harmful content such as misinformation, hate speech, and violent extremism. Algorithms and artificial intelligence struggle to accurately interpret cultural nuances and context across diverse languages, leading to inconsistent enforcement and potential censorship. Balancing user safety while protecting freedom of expression remains a critical and complex issue for multinational social networks.

Impact of Moderation on User Engagement

Moderation on social media platforms significantly shapes user engagement by filtering harmful content and fostering safer online communities. Effective content moderation reduces instances of harassment and misinformation, encouraging more active participation and longer session durations. Platforms like Facebook and Twitter report increased user retention rates when robust moderation policies are in place.

Future Trends in Social Media Content Moderation

Future trends in social media content moderation emphasize AI-driven automation combined with advanced machine learning algorithms to detect harmful content more accurately and in real time. Platforms are increasingly implementing multimodal analysis, integrating text, image, and video recognition to effectively identify violations while reducing false positives. Your engagement with social media will benefit from improved safety measures and transparent moderation policies driven by evolving regulatory frameworks and ethical AI standards.
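One way to picture the multimodal analysis mentioned above is as a fusion of per-modality scores into a single value. The sketch below uses a weighted average; the weights, the modality names, and the `fuse_scores` function are hypothetical, and real systems may combine signals very differently.

```python
from typing import Dict

# Hypothetical per-modality weights, chosen only for illustration.
MODALITY_WEIGHTS: Dict[str, float] = {"text": 0.3, "image": 0.3, "video": 0.4}


def fuse_scores(scores: Dict[str, float]) -> float:
    """Combine per-modality violation scores into one weighted average.

    Only modalities listed in MODALITY_WEIGHTS are used, and the weights
    are renormalized over the modalities actually supplied.
    """
    present = {m: s for m, s in scores.items() if m in MODALITY_WEIGHTS}
    if not present:
        raise ValueError("no known modality scores supplied")
    total_weight = sum(MODALITY_WEIGHTS[m] for m in present)
    weighted_sum = sum(MODALITY_WEIGHTS[m] * s for m, s in present.items())
    return weighted_sum / total_weight


if __name__ == "__main__":
    # A post with a mild caption but a strongly flagged image.
    print(fuse_scores({"text": 0.2, "image": 0.8}))
```

Renormalizing the weights lets the same function score a text-only post and a video with a caption without treating a missing modality as a score of zero.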

socmedb.com
