Photo illustration: Muted Account vs Reported Account

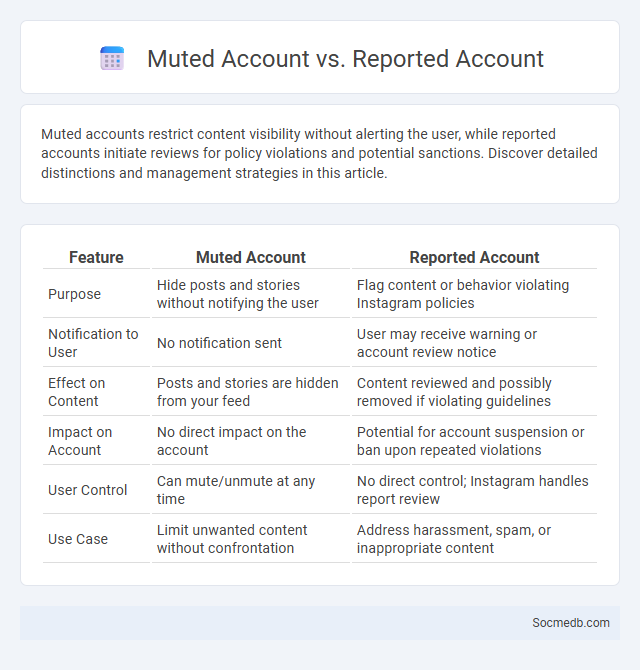

Muting an account hides its content from your feed without alerting its owner, while reporting an account asks the platform to review it for policy violations and possible sanctions. This article covers the distinctions between the two and how to manage each.

Table of Comparison

| Feature | Muted Account | Reported Account |
|---|---|---|
| Purpose | Hide posts and stories without notifying the user | Flag content or behavior violating Instagram policies |
| Notification to User | No notification sent | User may receive warning or account review notice |
| Effect on Content | Posts and stories are hidden from your feed | Content reviewed and possibly removed if violating guidelines |
| Impact on Account | No direct impact on the account | Potential for account suspension or ban upon repeated violations |
| User Control | Can mute/unmute at any time | No direct control; Instagram handles report review |
| Use Case | Limit unwanted content without confrontation | Address harassment, spam, or inappropriate content |

Introduction to Muted and Reported Accounts

Muting lets users hide an account's posts or stories without unfollowing or blocking it, which gives them more control over their feed and reduces exposure to unwanted updates. Reporting flags an account for a suspected violation of platform policies and triggers a review by moderators to keep the community safe and content compliant. Both features help users customize their social media experience and maintain a positive online environment.

What is a Muted Account?

Muting an account on social media hides posts and updates from a specific user without unfollowing or blocking them, so you keep the connection while seeing less unwanted content. This feature helps you control your feed by minimizing distractions or negative interactions. The owner of a muted account is not notified, which makes muting a discreet way to customize your social media experience.

What is a Reported Account?

A reported account on social media refers to a user profile flagged by others for violating platform rules, such as spreading misinformation, engaging in harassment, or posting inappropriate content. When you report an account, the platform's moderation team reviews it to determine if actions like warnings, content removal, or suspension are necessary. This process helps maintain a safer and more respectful online community.

Key Differences: Muted vs Reported Accounts

Muting hides an account's posts and updates from your own feed without alerting the muted user, allowing you to avoid unwanted content discreetly. Reporting actively flags content or behavior for review by the platform's moderation team because it appears to violate community guidelines or terms of service. Muting is a passive, personal content control tool, while reporting initiates a formal review that may lead to account suspension or removal.

How Muting Affects User Experience

Muting improves the user experience by letting users filter out unwanted content without unfollowing or blocking, reducing exposure to negativity and irrelevant posts. It can support mental well-being by limiting stress from contentious or overwhelming interactions while keeping social connections intact. Because muting gives users a more personalized feed, it can also make time on the platform more satisfying.

Consequences of Reporting an Account

Reporting an account can lead to content removal, temporary suspension, or a permanent ban if violations of community guidelines are confirmed. Your report triggers a review process in which the platform examines the account's activity for spam, harassment, misinformation, or other harmful behavior. Knowing these consequences helps you decide when a report is warranted and use it to keep the platform safe.

Privacy and Visibility: Muting vs Reporting

Muting lets users control what they see by hiding content from specific accounts without notifying the muted user, which preserves privacy and minimizes conflict. Reporting, on the other hand, flags content or accounts for violating community guidelines and triggers a review process aimed at enforcing platform safety and protecting users from harmful or abusive behavior. Choosing between muting and reporting depends on the user's goal: personal content management or broader platform safety and compliance.

When to Mute and When to Report

Mute accounts whose posts disrupt your mental well-being but do not violate platform rules; this gives you control over what you see without escalating the situation. Report accounts or posts that involve harassment, hate speech, misinformation, or other violations of community guidelines so the platform can enforce its rules and protect users. Using each function for its intended purpose improves both your own experience and the quality of the shared space.

Platform Policies on Muting and Reporting

Social media platform policies on muting and reporting empower users to control their online experience by filtering unwanted content and flagging harmful behavior. These policies outline clear procedures for muting accounts, managing notifications, and submitting reports for harassment, spam, or misinformation, ensuring a safer digital environment. Enforcement mechanisms often include temporary suspensions, content removal, and permanent bans based on the severity of violations, reinforcing platform community guidelines.

Final Thoughts: Choosing the Right Action

Choosing the right action comes down to whether the content is merely unwanted or actually breaks the rules. Mute when you want to stop seeing an account's posts discreetly and keep the connection; report when the behavior involves harassment, spam, or other violations that the platform should review. Used appropriately, both tools help keep your feed manageable and the community safe.

socmedb.com
