Photo illustration: AutoModerator vs manual moderation

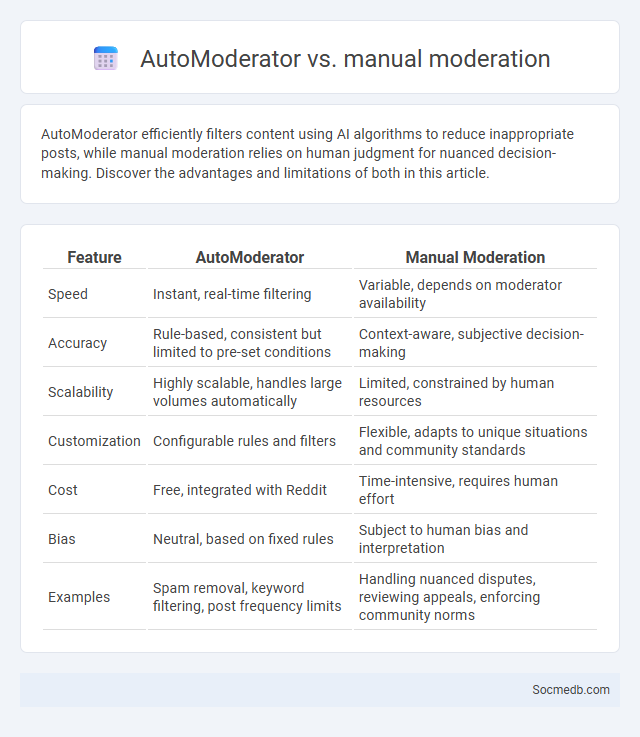

AutoModerator filters content automatically by applying predefined rules, which reduces inappropriate posts, while manual moderation relies on human judgment for nuanced decisions. This article covers the advantages and limitations of both.

Table of Comparison

| Feature | AutoModerator | Manual Moderation |
|---|---|---|
| Speed | Instant, real-time filtering | Variable, depends on moderator availability |
| Accuracy | Rule-based, consistent but limited to pre-set conditions | Context-aware, subjective decision-making |
| Scalability | Highly scalable, handles large volumes automatically | Limited, constrained by human resources |
| Customization | Configurable rules and filters | Flexible, adapts to unique situations and community standards |
| Cost | Free, integrated with Reddit | Time-intensive, requires human effort |
| Bias | Neutral, based on fixed rules | Subject to human bias and interpretation |
| Examples | Spam removal, keyword filtering, post frequency limits | Handling nuanced disputes, reviewing appeals, enforcing community norms |

Introduction to Moderation in Online Communities

Effective moderation in online communities ensures a safe, respectful environment by enforcing guidelines that prevent harmful content and promote positive interactions. By actively managing user behavior and addressing conflicts promptly, moderators protect your community's integrity and encourage meaningful engagement. Tools such as automated filters, user reporting systems, and community feedback mechanisms play a vital role in maintaining balance and fostering trust among members.

What is AutoModerator?

AutoModerator is Reddit's built-in moderation tool. It automatically monitors posts and comments and acts on them according to rules that a community's moderators define in advance. By detecting spam, offensive language, or off-topic content, it helps keep discussions relevant and safe for your audience. It also leaves moderators more time for the cases that need human judgment.
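To make the idea of "predefined rules" concrete, here is a minimal Python sketch of rule-based filtering. It is not AutoModerator's own syntax (on Reddit, rules are written in a YAML-based configuration), and the `Rule` class, the `apply_rules` function, and both example patterns are assumptions invented for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class Rule:
    """A hypothetical moderation rule: a regex pattern and the action to take."""
    name: str
    pattern: str
    action: str  # "remove" or "flag"


# Illustrative rules only; a real community would define its own.
RULES = [
    Rule("spam-link", r"https?://\S*free-prizes\.example", "remove"),
    Rule("all-caps-title", r"^[^a-z]{20,}$", "flag"),
]


def apply_rules(text: str, rules=RULES) -> str:
    """Return the action of the first matching rule, or "approve" if none match."""
    for rule in rules:
        if re.search(rule.pattern, text):
            return rule.action
    return "approve"


if __name__ == "__main__":
    for post in [
        "Win now at http://free-prizes.example/claim",
        "THIS IS A VERY LOUD TITLE!!!",
        "What do you think of the new update?",
    ]:
        print(apply_rules(post), "<-", post)
```

The rules are checked in order and the first match wins, so the ordering of the list is itself a policy decision in this sketch.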

Manual Moderation Explained

Manual moderation involves human reviewers who assess content on social media platforms to ensure it complies with community guidelines and policies. This process helps identify nuanced or context-sensitive issues like hate speech, misinformation, and harassment that automated systems may miss. Manual moderation benefits your community by fostering a safer and more respectful online environment.

Key Differences: AutoModerator vs Manual Moderation

AutoModerator applies predefined rules to detect and filter inappropriate content automatically, which allows real-time moderation even in very large communities. Manual moderation involves human reviewers who assess context, nuance, and subtlety, providing more accurate judgment on complex or borderline cases. While AutoModerator excels in scalability and speed, manual moderation is essential for handling subjective issues like sarcasm, cultural sensitivity, and nuanced community guidelines.

Pros and Cons of AutoModerator

AutoModerator automatically filters spam and enforces community guidelines, which reduces the workload for moderators and helps maintain a positive user experience. However, over-reliance on AutoModerator can lead to false positives, where legitimate posts or comments are mistakenly removed, potentially harming user engagement. Balancing automated moderation with human oversight is crucial for content quality and community health, both on Reddit and on other platforms that offer automated moderation tools, such as Facebook and Discord.
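The false-positive problem is easy to reproduce with a simple keyword filter. The sketch below is a hypothetical Python illustration, not AutoModerator's implementation: the blocklist and both function names are assumptions. It contrasts a naive substring match with a whole-word match.

```python
import re

BLOCKED_WORDS = ["scam"]  # hypothetical blocklist for illustration


def naive_filter(text: str) -> bool:
    """Flag text if a blocked word appears anywhere, even inside another word."""
    lowered = text.lower()
    return any(word in lowered for word in BLOCKED_WORDS)


def word_boundary_filter(text: str) -> bool:
    """Flag text only when a blocked word appears as a whole word."""
    return any(
        re.search(rf"\b{re.escape(word)}\b", text, flags=re.IGNORECASE)
        for word in BLOCKED_WORDS
    )


legit = "The hikers scampered down the trail."
spam = "This giveaway is a scam, do not click."

if __name__ == "__main__":
    print(naive_filter(legit), word_boundary_filter(legit))
    print(naive_filter(spam), word_boundary_filter(spam))
```

The naive version flags the harmless sentence because "scampered" contains the blocked word, while the whole-word version does not. Tighter patterns reduce this kind of error, but they cannot judge intent or context, which is why human review still matters.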

Pros and Cons of Manual Moderation

Manual moderation offers precise, context-aware content review by human moderators, ensuring nuanced understanding of complex or sensitive posts. It can effectively identify harmful content, such as hate speech or misinformation, that automated systems might miss, maintaining community standards. However, manual moderation is time-consuming, costly, and prone to human bias or inconsistencies, potentially limiting scalability on large social media platforms.

Efficiency and Scalability: Automated vs Manual

Automated moderation is far more efficient at volume: rules run on every post and comment as it is submitted, so spam and clear violations are handled in real time without constant manual input. Manual moderation offers contextual judgment and flexibility, but it scales poorly and consumes more time and resources, which limits how much content your team can review. Automating the routine cases lets moderation keep pace as your community grows.

Accuracy and Fairness: Human Touch vs Automation

Social media platforms strive to balance accuracy and fairness by combining human moderation with advanced automated systems. While automation efficiently detects harmful content using AI algorithms and large datasets, human oversight is essential to interpret context, nuance, and cultural sensitivities accurately. This hybrid approach enhances content evaluation, reduces bias, and promotes a fairer user experience across diverse online communities.

Best Practices for Combining AutoModerator and Manual Moderation

Effective community management integrates AutoModerator with manual moderation to improve content quality and user experience. AutoModerator filters spam and enforces community guidelines by automatically flagging or removing inappropriate posts, while manual moderators address nuanced cases that require human judgment. Combining these methods gives faster response times and more consistent rule enforcement, and it fosters a respectful, engaged online community.
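One way to picture this division of labor is a triage step that removes clear violations automatically, sends borderline posts to a human review queue, and approves the rest. The following Python sketch assumes that design; `ModerationQueue`, `triage`, and the two term lists are hypothetical names and values, not part of any real moderation API.

```python
from dataclasses import dataclass, field


@dataclass
class ModerationQueue:
    """Holds the outcome of triage; all three lists start empty."""
    removed: list = field(default_factory=list)
    needs_review: list = field(default_factory=list)
    approved: list = field(default_factory=list)


# Hypothetical term lists: clear violations vs. content a human should look at.
REMOVE_TERMS = {"buy followers", "free-prizes.example"}
REVIEW_TERMS = {"idiot", "fake news"}


def triage(posts, queue=None):
    """Auto-remove clear violations, queue borderline posts, approve the rest."""
    if queue is None:
        queue = ModerationQueue()
    for post in posts:
        lowered = post.lower()
        if any(term in lowered for term in REMOVE_TERMS):
            queue.removed.append(post)
        elif any(term in lowered for term in REVIEW_TERMS):
            queue.needs_review.append(post)
        else:
            queue.approved.append(post)
    return queue


if __name__ == "__main__":
    result = triage([
        "Buy followers cheap today",
        "That referee is an idiot",
        "Great match last night",
    ])
    print(result)
```

Routing borderline matches to review rather than removing them keeps the automated step fast while leaving judgment calls, such as whether an insult is banter or harassment, to a person.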

Conclusion: Choosing the Right Moderation Strategy

Selecting the right social media moderation strategy depends on platform size, user engagement levels, and content sensitivity to maintain a safe online environment. Automated tools combined with human moderators optimize efficiency and accuracy, balancing quick response times and contextual understanding. Clear policies and continuous monitoring ensure community guidelines align with evolving user behaviors and legal requirements.

socmedb.com
