Photo illustration: AutoModerator vs spam filters

AutoModerator applies customizable, moderator-written rules to manage content proactively within a community, whereas spam filters automatically detect and block unsolicited or harmful posts across an entire platform, typically using machine learning and heuristics. Read on to see how these tools differ and how each strengthens social media moderation.

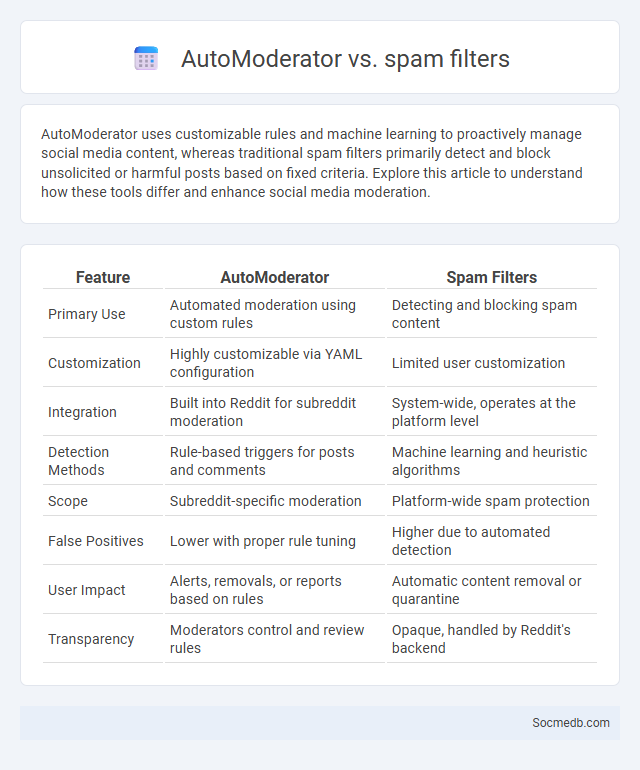

Table of Comparison

| Feature | AutoModerator | Spam Filters |
|---|---|---|
| Primary Use | Automated moderation using custom rules | Detecting and blocking spam content |
| Customization | Highly customizable via YAML configuration | Limited user customization |
| Integration | Built into Reddit for subreddit moderation | System-wide, operates at the platform level |
| Detection Methods | Rule-based triggers for posts and comments | Machine learning and heuristic algorithms |
| Scope | Subreddit-specific moderation | Platform-wide spam protection |
| False Positives | Can be kept low by carefully tuning rules | Possible, and not directly tunable by moderators |
| User Impact | Alerts, removals, or reports based on rules | Automatic content removal or quarantine |
| Transparency | Moderators control and review rules | Opaque, handled by Reddit's backend |

Introduction to Automated Moderation Tools

Automated moderation tools detect and act on inappropriate content on social media platforms, improving user safety and compliance with community standards. Some rely on machine learning models that analyze text, images, and video; others, such as AutoModerator, apply rules that moderators write themselves. Either way, they flag hate speech, spam, and cyberbullying as content is posted, reducing the need for manual review and shortening response times. Learning-based systems can also improve over time as they are retrained on new examples, making your online experience safer.

What is AutoModerator?

AutoModerator is Reddit's built-in automated moderation tool. Moderators configure rules that describe what to look for, such as keywords in a title or body or properties of the author's account, and what to do when a rule matches, such as removing or reporting the item. Because these checks run as content is submitted, AutoModerator catches spam, offensive language, and other rule-breaking posts without manual intervention, keeping discussions safe and on-topic.
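To make the "match a condition, then take an action" idea concrete, here is a minimal Python sketch. Real AutoModerator rules are written in YAML on Reddit, not in Python. The `Rule` and `Item` classes and the `apply_rules` function below are hypothetical illustrations of the rule-evaluation logic, not AutoModerator's actual implementation.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class Rule:
    # Hypothetical rule shape: which item type it checks, the trigger
    # phrases, and the action to take when one of them matches.
    item_type: str          # "submission" or "comment"
    keywords: list[str]
    action: str             # e.g. "remove" or "report"
    reason: str = ""


@dataclass
class Item:
    item_type: str
    body: str


def apply_rules(item: Item, rules: list[Rule]) -> tuple[str, str] | None:
    """Return (action, reason) from the first matching rule, or None."""
    for rule in rules:
        if rule.item_type != item.item_type:
            continue  # this rule does not apply to this kind of item
        for keyword in rule.keywords:
            # Case-insensitive whole-word match on the item body.
            pattern = rf"\b{re.escape(keyword)}\b"
            if re.search(pattern, item.body, re.IGNORECASE):
                return rule.action, rule.reason
    return None


rules = [
    Rule("comment", ["buy followers", "free crypto"], "remove", "Spam keyword"),
    Rule("submission", ["giveaway"], "report", "Possible giveaway spam"),
]
print(apply_rules(Item("comment", "Buy followers cheap!"), rules))
# ('remove', 'Spam keyword')
```

Rules are checked in order and the first match wins; a comment mentioning "giveaway" passes untouched here because that rule is scoped to submissions only.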

Understanding Spam Filters in Online Communities

Understanding spam filters helps you keep interactions in online communities genuine and protect your reputation. These filters typically combine machine learning models with heuristics, weighing signals such as keyword patterns, posting frequency, and user behavior to decide whether to block a post. Avoiding typical spam triggers in your own posts reduces the chance that legitimate content is caught by mistake and strengthens your presence on social platforms.
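The sketch below shows how the three signals named above could feed a single logistic spam score. It is an illustration under stated assumptions: the phrase list, the weights, the bias, and the 7-day "new account" cutoff are all hypothetical values chosen by hand, whereas a trained filter would learn its weights from labeled examples. It does not describe how any particular platform's filter works.

```python
from __future__ import annotations

import math
import re
from dataclasses import dataclass

# Hypothetical spam phrases (keyword patterns).
SPAM_PATTERNS = [r"\bfree\s+crypto\b", r"\bclick\s+here\b", r"\bbuy\s+followers\b"]

# Hypothetical weights; a trained model would fit these to labeled data.
WEIGHTS = {"pattern_hits": 2.0, "posts_last_hour": 0.5, "new_account": 1.5}
BIAS = -4.0


@dataclass
class PostContext:
    text: str
    posts_last_hour: int    # posting frequency
    account_age_days: int   # simple proxy for user history/behavior


def features(ctx: PostContext) -> dict[str, float]:
    hits = sum(1 for p in SPAM_PATTERNS if re.search(p, ctx.text, re.IGNORECASE))
    return {
        "pattern_hits": float(hits),
        "posts_last_hour": float(ctx.posts_last_hour),
        "new_account": 1.0 if ctx.account_age_days < 7 else 0.0,
    }


def spam_probability(ctx: PostContext) -> float:
    """Logistic score: sigmoid(bias + sum of weight * feature value)."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features(ctx).items())
    return 1.0 / (1.0 + math.exp(-z))


def is_spam(ctx: PostContext, threshold: float = 0.5) -> bool:
    return spam_probability(ctx) >= threshold


spammer = PostContext("Click here for FREE crypto!!!", posts_last_hour=10, account_age_days=1)
regular = PostContext("Thanks, this guide helped a lot.", posts_last_hour=1, account_age_days=400)
print(is_spam(spammer), is_spam(regular))  # True False
```

No single signal decides the outcome: a long-standing account posting one message an hour stays well below the threshold, while a new account posting spam phrases in bursts crosses it.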

Key Differences: AutoModerator vs Spam Filters

AutoModerator runs customizable, moderator-defined rules that act on content as it is posted and enforce subreddit-specific guidelines. Spam filters instead use algorithmic detection and machine learning to identify unsolicited or harmful content across the whole platform. AutoModerator excels at community-tailored moderation, targeting posts and comments based on keywords, user properties, and other conditions moderators choose, while spam filters focus on recognizing spam patterns, phishing links, and blocklisted domains wherever they appear. The two complement each other: rule-based intervention covers community-specific norms, and adaptive spam detection covers platform-wide threats.
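One of the spam-filter checks mentioned above, screening links against blocklisted domains, can be sketched in a few lines of Python. The blocklist entries use the reserved `.test` top-level domain and are purely hypothetical; real platforms keep their lists internal. The helper names are illustrative, and only `re` and `urllib.parse` from the standard library are assumed.

```python
from __future__ import annotations

import re
from urllib.parse import urlparse

# Hypothetical blocklist using reserved .test domains.
BLOCKED_DOMAINS = {"spam-example.test", "phish-example.test"}

URL_RE = re.compile(r"https?://[^\s)]+", re.IGNORECASE)


def extract_hosts(text: str) -> list[str]:
    """Pull the hostname (lowercased, without port) out of every URL in text."""
    hosts = []
    for url in URL_RE.findall(text):
        host = urlparse(url).hostname
        if host:
            hosts.append(host)
    return hosts


def is_blocked(host: str, blocklist: set[str]) -> bool:
    # Match the domain itself or any subdomain, but not look-alikes
    # such as "notphish-example.test".
    return any(host == d or host.endswith("." + d) for d in blocklist)


def contains_blocked_link(text: str, blocklist: set[str] = BLOCKED_DOMAINS) -> bool:
    return any(is_blocked(h, blocklist) for h in extract_hosts(text))


print(contains_blocked_link("Verify here: https://login.phish-example.test/account"))  # True
```

Comparing whole hostnames (or a `.`-prefixed suffix) instead of doing a plain substring search avoids flagging unrelated domains that merely contain a blocked name.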

Strengths and Weaknesses of AutoModerator

AutoModerator manages social media communities efficiently by automatically filtering spam, enforcing rules, and reducing the workload on human moderators. Because its rules are fully customizable, moderation can follow your community's own guidelines, improving user experience and engagement. Its main weakness is that keyword and pattern rules have no real understanding of context: they occasionally misclassify legitimate content, which can lead to unnecessary removals and frustrated users.
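A small Python sketch shows how a keyword rule can misfire. The banned term is a hypothetical example, and the two functions are illustrations rather than AutoModerator code. Naive substring matching flags innocent words that merely contain the term. Whole-word matching avoids that, but it still cannot tell whether the term is abusive or simply quoted or discussed, which is the kind of nuance keyword rules lack.

```python
import re

BANNED = ["hell"]  # hypothetical banned term


def substring_match(text: str) -> bool:
    # Naive: flags the term anywhere, even inside longer words.
    lowered = text.lower()
    return any(term in lowered for term in BANNED)


def word_match(text: str) -> bool:
    # Whole-word: only flags the term when it stands on its own.
    return any(
        re.search(rf"\b{re.escape(term)}\b", text, re.IGNORECASE) for term in BANNED
    )


sample = "Hello, the shell script failed."
print(substring_match(sample), word_match(sample))  # True False
```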

Effectiveness of Spam Filters in Moderation

Spam filters make social media moderation more effective by automatically detecting and removing unwanted content such as promotional messages, scams, and malicious links. They analyze text patterns and user behavior with heuristics and machine learning models, reducing the burden on human moderators and improving platform safety. Because spammers keep changing their tactics, these filters stay effective only if they are continuously tuned and retrained.
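A common way to quantify "effectiveness" is to compare a filter's decisions with human labels on a sample of posts and compute precision, recall, and the false positive rate. The Python sketch below does exactly that; the sample labels are made up for illustration and do not come from any real filter.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class FilterMetrics:
    precision: float            # of items flagged as spam, share that really were spam
    recall: float               # of real spam, share the filter caught
    false_positive_rate: float  # of legitimate items, share wrongly flagged


def evaluate(predicted: list[bool], actual: list[bool]) -> FilterMetrics:
    """predicted[i] is the filter's verdict, actual[i] the human label (True = spam)."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    pairs = list(zip(predicted, actual))
    tp = sum(p and a for p, a in pairs)
    fp = sum(p and not a for p, a in pairs)
    fn = sum(not p and a for p, a in pairs)
    tn = sum(not p and not a for p, a in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    return FilterMetrics(precision, recall, fpr)


# Hypothetical sample of five posts.
m = evaluate(predicted=[True, True, False, False, True],
             actual=[True, False, False, True, True])
print(m.false_positive_rate)  # 0.5
```

Tuning a filter is a trade-off between these numbers: lowering the spam threshold raises recall but usually raises the false positive rate as well, which is why legitimate posts sometimes get caught.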

Common Use Cases: When to Use Each Tool

Social media platforms serve distinct purposes based on user goals and audience engagement. For instance, Facebook excels at community building and event promotion, while LinkedIn is ideal for professional networking and B2B marketing. Instagram suits visual storytelling and influencer collaborations thanks to its image-centric design, whereas Twitter enables real-time updates and customer service interactions through concise messaging. Choosing the right tool depends on the demographics, content types, and interaction styles you are targeting. The same reasoning applies to moderation tools: AutoModerator fits subreddit-specific rules that moderators want to write and review themselves, while platform-level spam filters handle spam across every community without per-subreddit configuration.

Integrating AutoModerator with Spam Filters

Running AutoModerator alongside spam filters improves a platform's ability to detect and block unwanted content. Each layer contributes a different technique: spam filters apply machine learning to recognize spam patterns as content arrives, while AutoModerator adds keyword-based rules tailored to the community. Together they reduce manual moderation effort and give your community a cleaner, safer environment that promotes genuine engagement and trust.
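The layering can be sketched as a two-stage pipeline in Python. On Reddit the spam filter runs on the platform side and is not something moderators call directly, so this is a hypothetical model of the idea only. `toy_spam_score` is a crude stand-in for a trained model, and the threshold, the `(regex, action)` rule format, and the `Decision` type are all illustrative assumptions.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    action: str   # "remove", "report", or "allow"
    source: str   # which layer made the decision


def toy_spam_score(text: str) -> float:
    # Hypothetical stand-in for a trained model: share of words on a spam list.
    spam_words = {"free", "crypto", "winner", "click"}
    words = re.findall(r"[a-z]+", text.lower())
    return sum(w in spam_words for w in words) / len(words) if words else 0.0


def moderate(text: str,
             spam_score: Callable[[str], float],
             community_rules: list[tuple[str, str]],
             spam_threshold: float = 0.9) -> Decision:
    # Layer 1: platform-wide spam filter.
    if spam_score(text) >= spam_threshold:
        return Decision("remove", "spam_filter")
    # Layer 2: community-specific (regex, action) rules, first match wins.
    for pattern, action in community_rules:
        if re.search(pattern, text, re.IGNORECASE):
            return Decision(action, "community_rules")
    return Decision("allow", "none")


rules = [(r"\bspoilers?\b", "report"), (r"\bself[- ]promo\b", "remove")]
print(moderate("Spoiler: the finale twist", toy_spam_score, rules))
# Decision(action='report', source='community_rules')
```

Putting the broad spam check first means community rules only see content that already passed platform-wide screening; the ordering is a design choice, and recording which layer acted (`source`) helps moderators review decisions.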

Community Feedback: User Experiences and Insights

Community feedback on social media reveals diverse user experiences and valuable insights that help shape platform improvements and content strategies. Your engagement and shared opinions contribute to identifying trends, enhancing user satisfaction, and fostering authentic connections within online communities. Analyzing this feedback allows brands and developers to tailor features and interactions more effectively to meet the evolving needs of their audiences.

Future Trends in Automated Moderation Tools

Future trends in automated moderation tools emphasize the integration of advanced AI and machine learning algorithms capable of context-aware content analysis to improve accuracy in detecting harmful or inappropriate posts. These tools are expected to leverage natural language processing and image recognition technologies to handle multimedia content effectively, reducing the reliance on human moderators. By adopting these innovations, your social media platforms can maintain safer online communities while enhancing user experience and operational efficiency.

socmedb.com