Photo illustration: AutoModerator vs user reports

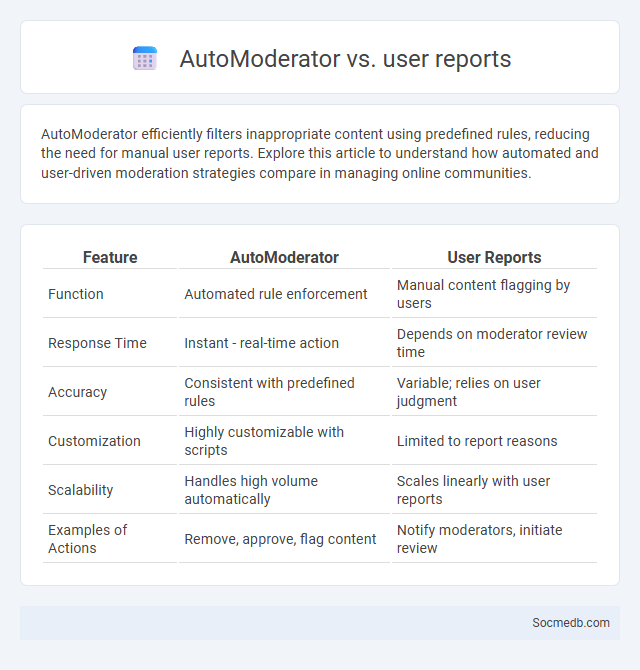

AutoModerator filters inappropriate content automatically using predefined rules, which reduces how much moderators depend on manual user reports. This article compares how automated and user-driven moderation strategies work in managing online communities.

Table of Comparison

| Feature | AutoModerator | User Reports |
|---|---|---|
| Function | Automated rule enforcement | Manual content flagging by users |
| Response Time | Near-instant; acts as soon as content is submitted | Depends on moderator review time |
| Accuracy | Consistent, but limited to what the rules match | Variable; relies on user judgment |
| Customization | Highly customizable via YAML rules | Limited to the available report reasons |
| Scalability | Handles high volume automatically | Review workload grows with report volume |
| Examples of Actions | Remove, approve, filter, or report content | Notify moderators, trigger a review |

Introduction to Reddit Moderation Tools

Reddit moderation tools help you manage and curate communities by automating content review, enforcing community rules, and handling user reports. These tools include AutoModerator for filtering posts and comments, the mod queue for reviewing reported or filtered items, and moderator permissions that control what each moderator on the team can do. Used together, they help create a safer, more engaging environment tailored to your community's values.

What is AutoModerator?

AutoModerator is Reddit's built-in moderation bot. Moderators configure it separately for each subreddit using YAML rules. These rules check posts and comments for conditions such as specific keywords, link domains, or the author's account age. When a rule matches, AutoModerator takes an action such as removing, filtering, or reporting the item. This reduces manual moderation work and keeps rule enforcement consistent, which helps maintain high-quality discussions.
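The keyword-matching idea can be illustrated with a small model. This is only a sketch: real AutoModerator rules are written in YAML and are not Python. The `Rule` class, the `apply_rules` function, and the two example patterns are hypothetical names chosen for illustration. Each rule pairs a regular expression with an action, and every matching rule is reported.

```python
import re
from dataclasses import dataclass


@dataclass
class Rule:
    """A hypothetical, simplified stand-in for one AutoModerator rule."""
    name: str
    pattern: str  # regular expression matched against the post body
    action: str   # e.g. "remove" or "filter"


def apply_rules(body: str, rules: list[Rule]) -> list[tuple[str, str]]:
    """Return (rule name, action) for every rule whose pattern matches the body."""
    hits = []
    for rule in rules:
        # Case-insensitive search anywhere in the text.
        if re.search(rule.pattern, body, flags=re.IGNORECASE):
            hits.append((rule.name, rule.action))
    return hits


# Hypothetical example rules.
RULES = [
    Rule("spam-link", r"\bfree\s+crypto\b", "remove"),
    Rule("shortener", r"\bbit\.ly/", "filter"),
]

print(apply_rules("Get FREE crypto at bit.ly/xyz", RULES))
# [('spam-link', 'remove'), ('shortener', 'filter')]
```

Because every rule is checked on every item, the same input always produces the same result. That consistency is AutoModerator's main strength. It is also why it cannot judge anything its rules do not describe.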

How User Reports Work

User reports let community members flag posts or comments that they believe break subreddit rules or Reddit's sitewide policies, such as spam, harassment, or misinformation. Reported items appear in the subreddit's mod queue. There, moderators decide whether the content violates the rules, sometimes with help from automated rules that act on report counts. Reports of sitewide policy violations may also be reviewed by Reddit's administrators. Timely user reports play a crucial role in maintaining a safe and respectful community.
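The report-to-review flow can be modeled as a queue ordered by report count. This is a minimal sketch under stated assumptions: `ModQueue`, its methods, and the item IDs are hypothetical. The model does not reflect Reddit's API, and it does not describe exactly how Reddit orders its real mod queue.

```python
from collections import Counter, defaultdict


class ModQueue:
    """Hypothetical model of a report-driven review queue (not Reddit's API)."""

    def __init__(self) -> None:
        self.report_counts = Counter()      # item ID -> number of reports
        self.reasons = defaultdict(list)    # item ID -> report reasons given

    def report(self, item_id: str, reason: str) -> None:
        # Each user report increments the item's count and records the reason.
        self.report_counts[item_id] += 1
        self.reasons[item_id].append(reason)

    def pending(self) -> list[str]:
        # Most-reported items first, so moderators see likely problems sooner.
        return [item for item, _ in self.report_counts.most_common()]

    def resolve(self, item_id: str) -> list[str]:
        # A moderator reviews the item; it leaves the queue whatever the decision.
        self.report_counts.pop(item_id, None)
        return self.reasons.pop(item_id, [])


q = ModQueue()
q.report("post_a", "spam")
q.report("post_b", "harassment")
q.report("post_b", "spam")
print(q.pending())          # ['post_b', 'post_a']
print(q.resolve("post_b"))  # ['harassment', 'spam']
```

Nothing leaves this queue until a person calls `resolve`. That is why response time for user reports depends on moderator availability rather than on the reports themselves.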

AutoModerator vs User Reports: Key Differences

AutoModerator does not use AI. It applies predefined rules, such as keyword lists, regular expressions, and account checks, to detect and filter inappropriate content automatically, which keeps your community consistent with its guidelines. User reports rely on community members to flag content manually. This adds a human perspective that can catch nuanced or context-specific issues that no rule anticipated. Combining both methods improves moderation by balancing automated efficiency with human insight.

Strengths of AutoModerator

AutoModerator reduces moderator workload by automatically removing or filtering spam, harmful comments, and other inappropriate content. Its customizable rules let you tailor moderation to your subreddit's specific needs, which saves time and reduces manual oversight. Because it acts as soon as content is submitted, it helps keep discussion quality high before problems spread.

Weaknesses of AutoModerator

AutoModerator's rigid filters cannot understand nuanced language, so they sometimes flag or remove legitimate content by mistake. They also struggle to keep up with evolving slang, deliberate misspellings, and context-specific jargon, which can frustrate community members. Relying too heavily on automation can make moderation feel impersonal and discourage participation.
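The sketch below shows both failure modes with a single hypothetical blocked term. Plain substring matching flags innocent words that merely contain the term. Word-boundary matching avoids that problem, but it still flags the term regardless of context and misses simple respellings. AutoModerator offers comparable matching choices through modifiers such as `includes` and `includes-word`. The Python here is only an illustration, and `BLOCKED`, `naive_flag`, and `word_flag` are made-up names.

```python
import re

BLOCKED = ["hell"]  # hypothetical blocked term


def naive_flag(text: str) -> bool:
    # Plain substring matching: flags any text containing the term anywhere.
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED)


def word_flag(text: str) -> bool:
    # Word-boundary matching: only whole-word occurrences count.
    return any(
        re.search(rf"\b{re.escape(term)}\b", text, re.IGNORECASE)
        for term in BLOCKED
    )


print(naive_flag("hello there"))             # True  (false positive)
print(word_flag("hello there"))              # False (fixed by word boundaries)
print(word_flag("Hell's Kitchen reopened"))  # True  (context ignored)
print(word_flag("h3ll no"))                  # False (respelling slips through)
```

Each refinement removes one class of error and leaves another in place. Human review through user reports remains necessary because of these remaining errors.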

Advantages of User Reports

User reports improve content moderation by letting the community surface harmful or inappropriate posts quickly, including ones that no automated rule was written to catch. By flagging violations, you help keep the community safe and maintain trust among its members. Patterns in reports also show moderators which new or refined AutoModerator rules would be worth adding.

Limitations of User Reports

User reports on social media often face limitations such as inconsistent reporting standards, which hinder effective content moderation. The subjective nature of reports leads to potential bias, affecting the accuracy of identifying harmful or inappropriate content. Platforms struggle with scalability as large volumes of reports overwhelm moderation teams, resulting in delayed responses and unresolved issues.

Combining AutoModerator and User Reports Effectively

Combining AutoModerator's rule-based filtering with user reports lets a community remove clear-cut violations immediately while still catching problems that rules miss. AutoModerator handles common violations with predefined rules. User reports provide crowd-sourced feedback on nuanced or emerging issues. Integrating the two improves moderation efficiency and community trust by balancing automated consistency with human judgment.
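A combined policy can be sketched as a short triage function. Content that clearly breaks a rule is removed automatically. Content that enough users report is held for a moderator, and everything else stays up. Real AutoModerator rules can check an item's report count, for example by filtering after a set number of reports. However, the pattern list, the threshold of 3, and the `triage` function below are hypothetical values chosen only for illustration.

```python
import re

# Hypothetical rule set and threshold; suitable values depend on the community.
REMOVE_PATTERNS = [r"\bfree\s+crypto\b"]
REPORT_THRESHOLD = 3


def triage(body: str, report_count: int) -> str:
    """Decide what happens to an item under a combined rule + report policy."""
    # 1. Clear-cut violations are removed automatically by rules.
    if any(re.search(p, body, re.IGNORECASE) for p in REMOVE_PATTERNS):
        return "remove"
    # 2. Items that enough users report are held for a human moderator.
    if report_count >= REPORT_THRESHOLD:
        return "filter"
    # 3. Otherwise the item stays visible.
    return "keep"


print(triage("Free crypto here", 0))  # remove
print(triage("Nice photo", 3))        # filter
print(triage("Nice photo", 1))        # keep
```

Checking rules before reports means obvious spam never waits for human attention. The report threshold controls how much crowd signal is needed before a moderator gets involved. A lower threshold catches problems sooner but sends more false alarms to the queue.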

Choosing the Right Moderation Strategy for Your Subreddit

Selecting the right moderation strategy for your subreddit involves balancing community engagement with content quality to foster a positive environment. Implement clear, consistent rules that reflect your subreddit's goals and use a combination of automated tools and active moderator involvement to efficiently manage posts and comments. Your approach should adapt to the community's size and activity level, ensuring both scalability and responsiveness to maintain healthy discussions.

socmedb.com