Photo illustration: Downvote vs Ban

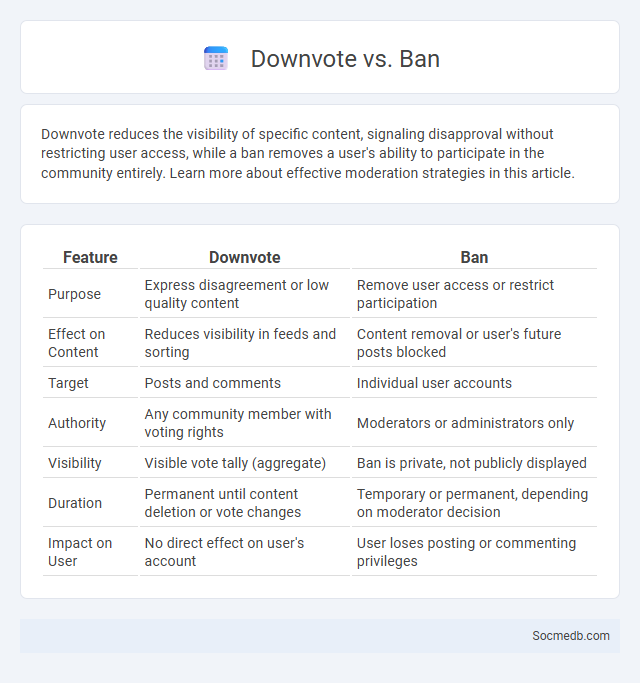

A downvote reduces the visibility of specific content and signals disapproval without restricting the user's access, while a ban removes a user's ability to participate in the community, either temporarily or permanently. This article compares the two and looks at effective moderation strategies.

Table of Comparison

| Feature | Downvote | Ban |
|---|---|---|
| Purpose | Express disagreement or low quality content | Remove user access or restrict participation |
| Effect on Content | Reduces visibility in feeds and sorting | Content removal or user's future posts blocked |
| Target | Posts and comments | Individual user accounts |
| Authority | Any community member with voting rights | Moderators or administrators only |
| Visibility | Visible vote tally (aggregate) | Ban is private, not publicly displayed |
| Duration | Permanent until content deletion or vote changes | Temporary or permanent, depending on moderator decision |
| Impact on User | No direct effect on user's account | User loses posting or commenting privileges |

Understanding Downvotes: Definition and Purpose

Downvotes function as a user-driven feedback mechanism on social media platforms, signaling content that is perceived as irrelevant, misleading, or inappropriate. This negative rating system helps algorithms filter low-quality posts, enhancing overall content curation and user experience. Understanding the role of downvotes reveals their importance in maintaining community standards and promoting valuable interactions.
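As a rough illustration of how downvotes can feed into content filtering, the sketch below ranks posts by net score and drops those that fall to or below a cutoff. The `Post` class, the `visible_feed` function, and the `HIDE_THRESHOLD` value are hypothetical names chosen for this example; real platforms use their own, usually more elaborate, ranking formulas.

```python
from dataclasses import dataclass


@dataclass
class Post:
    """Hypothetical post record holding aggregate vote counts."""
    title: str
    upvotes: int = 0
    downvotes: int = 0

    @property
    def score(self) -> int:
        # Net score: each downvote cancels one upvote.
        return self.upvotes - self.downvotes


# Assumed cutoff for this sketch; not taken from any real platform.
HIDE_THRESHOLD = -5


def visible_feed(posts, hide_threshold=HIDE_THRESHOLD):
    """Return posts above the threshold, highest net score first."""
    kept = [p for p in posts if p.score > hide_threshold]
    return sorted(kept, key=lambda p: p.score, reverse=True)


posts = [
    Post("Helpful guide", upvotes=10, downvotes=2),
    Post("Off-topic rant", upvotes=1, downvotes=9),
    Post("Quick tip", upvotes=3, downvotes=0),
]
feed_titles = [p.title for p in visible_feed(posts)]
```

Note that the downvoted post is only filtered out of the feed. Its author's account is untouched, which matches the "no direct effect on user's account" row in the comparison table.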

What Constitutes a Ban: Key Differences

A social media ban restricts your ability to access or use a platform after a policy violation, and can range from a temporary suspension to permanent account removal. Bans differ in the severity of the infraction that triggers them, in their duration, and in whether posting and interactions are limited or blocked completely. Reading the platform's community guidelines and enforcement policies clarifies which actions trigger a ban and how it affects your online presence.

Downvotes vs. Bans: When and Why They're Used

Downvotes serve as a user-driven feedback mechanism to signal disapproval or low-quality content without removing it from the platform, helping maintain community standards through collective judgment. Bans are enforced by moderators or administrators to permanently or temporarily remove users who violate platform rules, ensuring a safer and more respectful environment. Understanding when to use downvotes versus bans can help you effectively navigate and contribute to healthier online communities.
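The temporary-versus-permanent distinction can be sketched as a ban record with an optional expiry time. This is a minimal model under stated assumptions: the `Ban` class, its fields, and the `can_post` helper are hypothetical and do not reflect any particular platform's implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class Ban:
    """Hypothetical ban record issued by a moderator or administrator."""
    user_id: str
    reason: str
    expires_at: Optional[datetime] = None  # None means the ban is permanent

    def is_active(self, now: datetime) -> bool:
        # A permanent ban never expires; a temporary one ends at expires_at.
        return self.expires_at is None or now < self.expires_at


def can_post(user_id: str, bans: list, now: datetime) -> bool:
    """A user may post only if no ban against them is currently active."""
    return not any(b.user_id == user_id and b.is_active(now) for b in bans)


now = datetime(2024, 1, 1, tzinfo=timezone.utc)
bans = [
    Ban("user_1", "spam", expires_at=now + timedelta(days=7)),  # temporary
    Ban("user_2", "harassment"),                                # permanent
]
```

Unlike a downvote, which any voting member can cast against a piece of content, the check here is keyed on the user account, so it blocks all future posts for as long as the ban is active.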

Community Guidelines and Enforcement Tools

Social media platforms implement comprehensive Community Guidelines to regulate user behavior, ensuring a safe and respectful environment by prohibiting hate speech, harassment, and misinformation. Enforcement tools such as automated content filters, user reporting mechanisms, and account suspension protocols enable timely identification and mitigation of guideline violations. Continuous updates to these policies and technologies help adapt to evolving digital interactions and maintain platform integrity.

Psychological Impact: Downvote vs. Ban

Both tools can affect psychological well-being. Downvotes can trigger feelings of rejection and lower self-esteem, but the negative emotions are often temporary, mainly affecting engagement and the motivation to create content. Bans can have more severe consequences, such as social isolation, identity disruption, prolonged anxiety, and the loss of social support networks, which can amplify existing mental health challenges.

Abuse and Misuse: Challenges of Downvotes and Bans

Social media platforms face significant challenges in managing abuse and misuse related to downvotes and bans, as these tools can be exploited for harassment or silencing dissent. Downvote systems often lead to groupthink or suppression of minority opinions, while bans can be inconsistently applied, raising concerns about censorship and fairness. Effective moderation algorithms and transparent policies are crucial to balance free expression and community safety.

Effectiveness in Moderation: A Comparative Look

Effective social media moderation relies on advanced algorithms combined with human oversight to identify and manage harmful content swiftly. Platforms utilizing AI-driven tools alongside community guidelines enforcement demonstrate higher success rates in reducing misinformation and toxic behavior. Your engagement and reporting within these moderated environments help maintain a safer and more respectful online space.

Transparency and Accountability in Moderation

Effective social media platforms implement transparent moderation policies that clearly outline content guidelines and enforcement procedures, fostering user trust and compliance. Accountability measures include detailed reporting systems and independent review boards to address complaints and reduce bias in content removal decisions. By combining transparency with accountability, social media companies enhance community safety while respecting freedom of expression.

User Experience: Navigating Downvotes and Bans

Social media platforms implement downvotes and bans to enhance user experience by moderating content and maintaining community standards. Effective navigation of these features involves understanding platform guidelines and engaging positively to avoid penalties. Clear feedback mechanisms and transparent policies improve user interactions and foster a respectful online environment.

Striking the Balance: Best Moderation Practices

Effective social media moderation hinges on striking a balance between fostering open communication and maintaining a respectful environment, which reduces harmful content without stifling creativity or expression. Employing clear guidelines, AI-driven content filtering, and human oversight ensures healthy community engagement while protecting users from misinformation and harassment. Your social media platform thrives when moderation enhances user experience by promoting safety and authenticity simultaneously.

socmedb.com
