Photo illustration: Reddit Mods vs Trolls

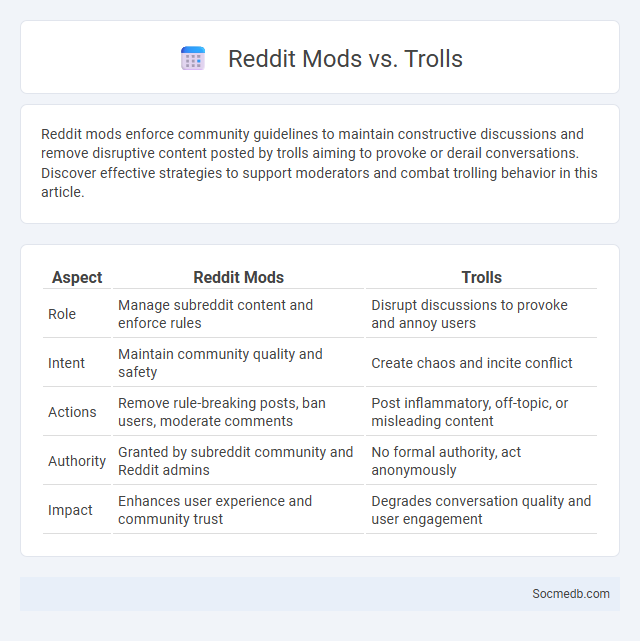

Reddit moderators enforce community guidelines to keep discussions constructive and to remove disruptive content posted by trolls, who aim to provoke users or derail conversations. This article compares the two roles and outlines strategies that help moderators counter trolling.

Comparison Table

| Aspect | Reddit Mods | Trolls |
|---|---|---|
| Role | Manage subreddit content and enforce rules | Disrupt discussions to provoke and annoy users |
| Intent | Maintain community quality and safety | Create chaos and incite conflict |
| Actions | Remove rule-breaking posts and comments, ban users, moderate discussions | Post inflammatory, off-topic, or misleading content |
| Authority | Appointed by a subreddit's existing moderators, within Reddit's sitewide rules | No formal authority; usually act under pseudonymous or throwaway accounts |
| Impact | Improves user experience and community trust | Degrades conversation quality and user engagement |

Introduction to Reddit: A Battlefield of Moderation

Reddit is organized into communities called subreddits, each with its own topic, rules, and volunteer moderators, which makes it a constant battleground over what content belongs where. Your experience on Reddit depends heavily on how moderators enforce those rules and balance free speech against community standards, a balance that often sparks heated debates about what content is appropriate. Effective moderation is crucial for keeping interactions constructive and for limiting the spread of misinformation across Reddit's diverse user base.

Who Are Reddit Mods? Roles and Responsibilities

Reddit moderators, or mods, are volunteer users who manage specific subreddit communities by enforcing rules, removing inappropriate content, and facilitating discussions to maintain a positive environment. Their responsibilities include approving or removing posts and comments, addressing user reports, and setting subreddit guidelines tailored to their community's focus. Effective moderation ensures that subreddits remain engaging, relevant, and safe for all participants.

Understanding Trolls: Motives and Tactics

Trolls on social media often seek attention, disruption, or influence through provocative comments and inflammatory content. Their tactics include spreading misinformation, personal attacks, and manipulating emotions to provoke reactions and sow discord. Understanding these motives and tactics helps you recognize and respond effectively to online trolling behaviors.

Trolling Defined: From Mild Mischief to Malicious Behavior

Trolling is the practice of intentionally provoking or upsetting people online through disruptive comments, false information, or inflammatory content. It ranges from mild mischief, such as harmless pranks, to malicious behavior aimed at causing emotional distress or spreading hate speech. Knowing where a given behavior falls on this spectrum helps you decide whether to ignore it, report it, or push back.

The Daily Struggle: Mods vs Trolls

Moderating Reddit, like any social platform, requires constant vigilance against trolls who disrupt conversations and spread misinformation. Moderators must balance enforcing community guidelines against allowing free expression, and they depend on effective tools and strategies to keep up with disruptive users day after day.

Tools and Strategies Mods Use Against Trolling

Mods combine automated tools with manual review to combat trolling. On Reddit, a widely used tool is AutoModerator, a rule-based bot that each subreddit configures to remove or hold posts and comments matching specified patterns. Automated filters, AI-assisted content analysis, and real-time monitoring help identify and remove harmful comments quickly, making subreddits safer and more respectful for everyone who participates.

How Trolls Evade Moderation and Detection

Trolls evade moderation and detection by exploiting gaps in platform filters, for example by using coded language or deliberate misspellings that automated keyword filters do not recognize. They also create fake or alternate accounts and use bot networks to amplify harmful content, which makes coordinated abuse harder to distinguish from genuine user activity. Countering these tactics requires awareness of how they work and moderation tools that combine automated detection with human oversight.
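To make the misspelling problem concrete, here is a minimal Python sketch of a keyword filter that normalizes text before matching, so that variants like "1D10T" or "s-c-4-m" are still caught. It is not Reddit's or AutoModerator's implementation; the substitution map, the `BLOCKED_TERMS` list, and the function names are hypothetical placeholders chosen for illustration.

```python
import re
import unicodedata

# Hypothetical substitution map for common character swaps ("leetspeak").
# A real filter would need a larger, community-specific map.
LEET_MAP = str.maketrans({
    "0": "o", "1": "i", "3": "e", "4": "a",
    "5": "s", "7": "t", "@": "a", "$": "s",
})

# Placeholder blocklist; a real subreddit would maintain its own terms.
BLOCKED_TERMS = {"idiot", "scam"}


def normalize(text: str) -> str:
    """Fold case, strip accents, undo simple swaps, and drop separators."""
    # Decompose accented characters and drop the combining marks (à -> a).
    text = unicodedata.normalize("NFKD", text)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    # Lowercase first, then map digits/symbols back to letters.
    text = text.lower().translate(LEET_MAP)
    # Remove spaces and punctuation used to split words ("i.d.i.o.t").
    text = re.sub(r"[^a-z]", "", text)
    # Collapse runs of repeated letters ("idiooot" -> "idiot").
    return re.sub(r"(.)\1+", r"\1", text)


def matched_terms(text: str) -> list[str]:
    """Return blocked terms found in the normalized text, for human review."""
    flat = normalize(text)
    # Normalize the terms too, so both sides go through the same rules.
    return sorted(term for term in BLOCKED_TERMS if normalize(term) in flat)


print(matched_terms("You absolute 1D10T"))  # ['idiot']
```

The aggressive normalization cuts both ways: stripping separators lets "i d i o t" match, but it can also flag innocent text that happens to contain a blocked string (for example, "scampi" contains "scam"), a trade-off often called the Scunthorpe problem. That is one reason a match is better treated as a flag for human review than as an automatic removal.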

Impact of Trolling on Reddit Communities

Trolling significantly disrupts Reddit communities by fostering toxic environments that discourage genuine interaction and constructive discussion. Troll behavior often spreads misinformation and provokes emotional responses, which undermines your participation and erodes user engagement and community trust. Effective moderation and user reporting are critical to maintaining healthy, inclusive Reddit spaces where meaningful conversations thrive.

User Reactions: Reporting, Defending, and Participating

Users respond to trolling in three main ways: reporting inappropriate content, defending themselves or others, and continuing to participate in discussions or campaigns. Reporting gives moderators the signals they need to enforce community standards, while defending often means clearing up misunderstandings or correcting misinformation. How you act in each of these roles shapes the wider community and helps make it a more accountable space.
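As a rough illustration of how user reports can feed a moderation queue, the Python sketch below holds an item for moderator review once enough distinct users have reported it. This is not how Reddit itself processes reports; the `ReportQueue` class, the item IDs, and the threshold of 3 are hypothetical values chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical threshold: hold an item once this many distinct users report it.
REPORT_THRESHOLD = 3


@dataclass
class ReportQueue:
    threshold: int = REPORT_THRESHOLD
    # Maps item id -> set of usernames that reported it (one vote per user).
    reporters: dict[str, set[str]] = field(
        default_factory=lambda: defaultdict(set))
    # Items held for moderator review, in the order they crossed the threshold.
    held: list[str] = field(default_factory=list)

    def report(self, item_id: str, username: str) -> bool:
        """Record a report; return True if the item is now (or already) held."""
        self.reporters[item_id].add(username)
        crossed = len(self.reporters[item_id]) >= self.threshold
        if crossed and item_id not in self.held:
            self.held.append(item_id)
        return item_id in self.held


queue = ReportQueue()
for user in ["alice", "bob", "bob", "carol"]:
    held = queue.report("comment_123", user)
print(held, queue.held)  # True ['comment_123']
```

Counting distinct reporters rather than raw reports is a deliberate choice: it stops a single troll from mass-reporting legitimate content to get it hidden. Holding items for review instead of removing them automatically leaves the final decision with a human moderator.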

The Future of Moderation: Balancing Free Speech and Safety

The future of social media moderation will likely depend on AI-driven tools that improve content filtering while preserving freedom of expression. Platforms increasingly use machine learning to detect harmful behavior and misinformation, though tuning these systems to avoid removing legitimate speech remains a challenge. Regulatory frameworks, such as the European Union's Digital Services Act, and transparent platform policies also increasingly shape how moderation protects user safety and promotes responsible communication.

socmedb.com