Photo illustration: vote manipulation vs trolling

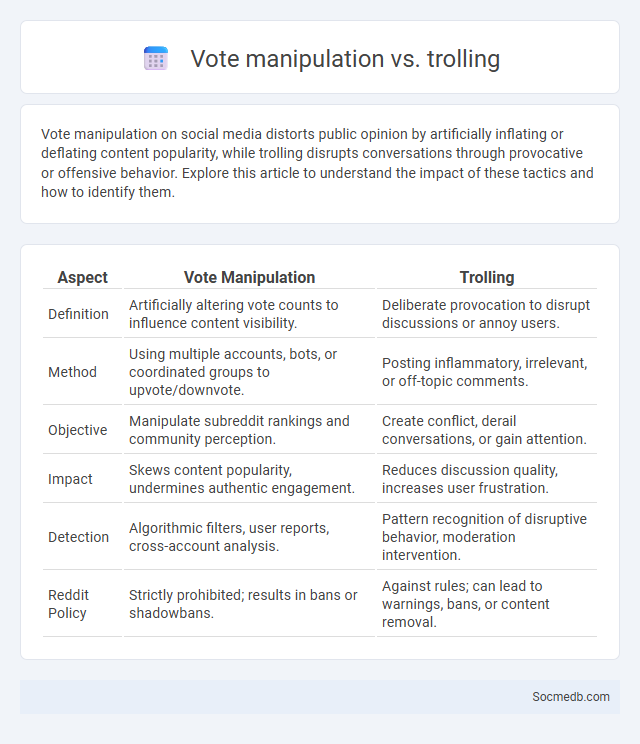

Vote manipulation on social media distorts public opinion by artificially inflating or deflating how popular content appears, while trolling disrupts conversations through provocative or offensive behavior. This article explains the impact of both tactics and how to identify them.

Table of Comparison

| Aspect | Vote Manipulation | Trolling |
|---|---|---|
| Definition | Artificially altering vote counts to influence content visibility. | Deliberate provocation to disrupt discussions or annoy users. |
| Method | Using multiple accounts, bots, or coordinated groups to upvote/downvote. | Posting inflammatory, irrelevant, or off-topic comments. |
| Objective | Manipulate subreddit rankings and community perception. | Create conflict, derail conversations, or gain attention. |
| Impact | Skews content popularity, undermines authentic engagement. | Reduces discussion quality, increases user frustration. |
| Detection | Algorithmic filters, user reports, cross-account analysis. | Pattern recognition of disruptive behavior, moderation intervention. |
| Reddit Policy | Strictly prohibited; results in bans or shadowbans. | Against rules; can lead to warnings, bans, or content removal. |

Understanding Vote Manipulation: Definition and Examples

Vote manipulation is the artificial inflation or deflation of approval metrics such as likes, upvotes, and downvotes, used to skew how popular content appears and, through that, to influence public opinion. Common techniques include bot-generated likes, coordinated downvoting campaigns, and networks of fake accounts that promote or bury content. Recognizing these tactics helps you spot distorted engagement, so that your own social media strategy is based on genuine interactions.

What is Trolling? Unpacking Online Disruption

Trolling refers to the act of deliberately posting provocative, offensive, or misleading content on social media to disrupt conversations and provoke emotional reactions. This behavior often targets individuals or communities with the intent to incite arguments, spread misinformation, or cause distress. Understanding trolling is essential for protecting your online presence and fostering a respectful digital environment.

Key Differences Between Vote Manipulation and Trolling

Vote manipulation artificially inflates or deflates the popularity of content through coordinated actions, often using bots or fake accounts, to skew how widely it is seen and how popular it appears. Trolling, by contrast, is the posting of provocative, disruptive, or inflammatory messages meant to provoke emotional responses or derail conversations; trolls do not necessarily try to alter visibility metrics at all. Put simply, manipulation targets the metrics, while trolling targets the conversation. Understanding this distinction helps you navigate social media interactions and recognize the intent behind negative behavior.

Motivations Behind Vote Manipulation Online

Vote manipulation on social media platforms is driven primarily by the desire to influence public opinion and to increase the visibility of specific content or agendas. Because ranking algorithms prioritize engagement metrics such as likes, shares, and upvotes, actors have an incentive to inflate these signals artificially so their content gains prominence. The result undermines authentic user interaction and skews the perceived popularity of posts, which can mislead users' decisions and erode the credibility of the information they see.
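
To illustrate why inflated engagement pays off, here is a minimal Python sketch of a hypothetical ranking function that scores posts by net votes divided by a power of their age. The `Post` class, `rank_score`, the `gravity` parameter, and the sample numbers are all assumptions made for illustration; real platforms use their own, largely undisclosed formulas.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Post:
    title: str
    upvotes: int
    downvotes: int
    age_hours: float


def rank_score(post: Post, gravity: float = 1.8) -> float:
    """Hypothetical score: net votes, discounted as the post ages."""
    net_votes = post.upvotes - post.downvotes
    return net_votes / (post.age_hours + 2) ** gravity


def rank(posts: list[Post]) -> list[Post]:
    """Order a feed from highest to lowest score."""
    return sorted(posts, key=rank_score, reverse=True)


def add_fake_upvotes(post: Post, count: int) -> Post:
    """Simulate a manipulation campaign adding `count` inauthentic upvotes."""
    return replace(post, upvotes=post.upvotes + count)


organic = Post("Organic discussion", upvotes=120, downvotes=10, age_hours=3)
promoted = Post("Promoted link", upvotes=20, downvotes=5, age_hours=3)

print([p.title for p in rank([organic, promoted])])
# ['Organic discussion', 'Promoted link']
print([p.title for p in rank([organic, add_fake_upvotes(promoted, 100)])])
# ['Promoted link', 'Organic discussion']
```

Because the score depends only on vote counts and age, a hundred fake upvotes are enough to push the weaker post past the organic one; nothing in the function can tell authentic votes from inauthentic ones.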

The Tactics and Psychology of Internet Trolling

Internet trolling involves deliberate provocation to elicit emotional responses and disrupt online communities, leveraging anonymity and social media algorithms that amplify controversial content. Trolls exploit psychological triggers such as outrage, curiosity, and social validation to manipulate user engagement and spread misinformation rapidly. Understanding the motives behind trolling, including attention-seeking, social influence, and entertainment, is crucial for developing effective moderation strategies and fostering healthier digital environments.

How Vote Manipulation Impacts Online Communities

Vote manipulation undermines the integrity of online communities by distorting genuine user feedback and skewing content visibility. This practice can lead to the promotion of misleading or low-quality information, damaging trust among members. Protecting your online interactions requires vigilance against artificial voting patterns to maintain a fair and authentic digital environment.

Identifying Signs of Vote Manipulation vs Trolling

Vote manipulation on social media is characterized by coordinated activities such as mass upvoting or downvoting, often linked to bot accounts or organized groups aiming to skew public opinion. In contrast, trolling typically involves provocative or inflammatory comments intended to incite reactions without the systematic engagement seen in manipulation. Detecting vote manipulation requires analyzing patterns like sudden vote spikes, account behavior, and IP similarities, whereas trolling is identified through content analysis and user interaction context.
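
Two of these signals, sudden vote spikes and shared IP addresses, can be sketched in a few lines of Python. This minimal example uses a hypothetical `Vote` record and made-up sample data; it flags minutes whose vote count is a statistical outlier (a z-score above a threshold) and groups accounts that voted from the same IP address. The threshold values are assumptions, not settings used by any platform.

```python
from collections import defaultdict
from statistics import mean, pstdev
from typing import NamedTuple


class Vote(NamedTuple):
    account: str
    ip: str
    minute: int     # minutes since the post was created
    direction: int  # +1 for an upvote, -1 for a downvote


def spike_minutes(votes: list[Vote], z_threshold: float = 3.0) -> list[int]:
    """Return the minutes whose vote count is an outlier by z-score."""
    if not votes:
        return []
    counts = [0] * (max(v.minute for v in votes) + 1)
    for v in votes:
        counts[v.minute] += 1
    mu, sigma = mean(counts), pstdev(counts)
    if sigma == 0:  # perfectly uniform activity: nothing stands out
        return []
    return [m for m, c in enumerate(counts) if (c - mu) / sigma > z_threshold]


def shared_ip_groups(votes: list[Vote], min_accounts: int = 3) -> dict[str, set[str]]:
    """Map each IP address to its voting accounts, keeping only crowded IPs."""
    accounts_by_ip: dict[str, set[str]] = defaultdict(set)
    for v in votes:
        accounts_by_ip[v.ip].add(v.account)
    return {ip: accts for ip, accts in accounts_by_ip.items() if len(accts) >= min_accounts}


# Illustrative data: one organic vote per minute for 30 minutes, plus a burst
# of 20 votes at minute 12 from accounts sharing two IP addresses.
votes = [Vote(f"user{m}", f"198.51.100.{m}", m, +1) for m in range(30)]
votes += [
    Vote(f"sock{i}", "203.0.113.7" if i < 10 else "203.0.113.8", 12, +1)
    for i in range(20)
]

print(spike_minutes(votes))             # [12]
print(sorted(shared_ip_groups(votes)))  # ['203.0.113.7', '203.0.113.8']
```

Both functions only surface candidates for human review. Shared IP addresses are common on campus networks, mobile carriers, and VPNs, and legitimate posts can also trend suddenly, so a real system would likely combine several signals, including the account-behavior signals not modeled here, before acting.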

Platform Responses: Policies Against Manipulation and Trolling

Social media platforms implement strict policies against manipulation and trolling to maintain user trust and platform integrity. These policies include automated detection systems, content moderation teams, and user reporting tools aimed at identifying and removing harmful behavior. Enforcement measures often involve account suspensions, shadow banning, and content removal to curb misinformation and harassment.
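
Shadow banning differs from an outright ban: the affected account can keep posting and voting without being told that its activity no longer reaches anyone. As a minimal sketch, assuming a hypothetical data model (`Account`, `Post`, `visible_posts`, and `counted_upvotes` are illustrative names, not any platform's API), the effect can be expressed as two filters:

```python
from dataclasses import dataclass, field


@dataclass
class Account:
    name: str
    shadowbanned: bool = False


@dataclass
class Post:
    post_id: int
    author: Account
    upvoters: list[Account] = field(default_factory=list)


def visible_posts(posts: list[Post], viewer: Account) -> list[Post]:
    """Hide posts by shadowbanned authors from everyone except the author."""
    return [p for p in posts if not p.author.shadowbanned or p.author == viewer]


def counted_upvotes(post: Post) -> int:
    """Count upvotes, silently ignoring those cast by shadowbanned accounts."""
    return sum(1 for a in post.upvoters if not a.shadowbanned)


alice = Account("alice")
troll = Account("troll", shadowbanned=True)
posts = [Post(1, alice, upvoters=[troll]), Post(2, troll, upvoters=[alice])]

print([p.post_id for p in visible_posts(posts, alice)])  # [1]
print([p.post_id for p in visible_posts(posts, troll)])  # [1, 2]
print(counted_upvotes(posts[0]))                         # 0
```

The shadowbanned account's own view is unchanged, which makes the sanction hard to notice and slow to work around; the trade-off is that a legitimate user caught by a false positive also receives no notice.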

Case Studies: Notorious Incidents of Manipulation and Trolling

High-profile incidents of manipulation and trolling reveal a pattern of coordinated misinformation campaigns aimed at influencing elections and public opinion. A prominent example is the interference in the 2016 US presidential election through fake accounts and automated bots. The Cambridge Analytica scandal exposed how personal data harvested from millions of Facebook users was exploited to micro-target voters with manipulated content, highlighting both privacy violations and behavioral-influence tactics. Meanwhile, persistent trolling networks exploit anonymity to spread disinformation, harass individuals, and polarize communities, showing how difficult platform moderation and digital ethics remain.

Protecting Digital Spaces: Strategies to Combat Manipulation and Trolling

Effective protection of digital spaces involves implementing advanced AI-driven content moderation systems that detect and remove harmful manipulation and trolling in real time. Utilizing behavioral analytics helps identify coordinated disinformation campaigns and flag malicious accounts before they impact user interactions. Encouraging community-driven reporting mechanisms and promoting media literacy enhances resilience against online harassment and misinformation.
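
One plausible behavioral signal of coordination is a group of accounts that repeatedly vote on the same items. The following minimal Python sketch compares every pair of accounts by the Jaccard similarity of the posts they voted on and flags pairs above a threshold. The account names and the `threshold` and `min_shared` values are assumptions chosen for illustration; a production system would also weigh vote timing, account age, and other features.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union (0.0 if both empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def coordinated_pairs(
    history: dict[str, set[str]], threshold: float = 0.75, min_shared: int = 3
) -> list[tuple[str, str]]:
    """Flag account pairs whose voting histories overlap suspiciously."""
    flagged = []
    for u, v in combinations(sorted(history), 2):
        shared = history[u] & history[v]
        # Require a minimum overlap so two accounts with a single common vote
        # (Jaccard 1.0 on tiny histories) are not flagged.
        if len(shared) >= min_shared and jaccard(history[u], history[v]) >= threshold:
            flagged.append((u, v))
    return flagged


# Hypothetical voting histories: account -> IDs of posts it voted on.
history = {
    "acct_a": {"p1", "p2", "p3", "p4"},
    "acct_b": {"p1", "p2", "p3", "p4"},
    "acct_c": {"p1", "p2", "p3", "p4", "p5"},
    "reader": {"p2", "p9"},
}

print(coordinated_pairs(history))
# [('acct_a', 'acct_b'), ('acct_a', 'acct_c'), ('acct_b', 'acct_c')]
```

Comparing every pair is quadratic in the number of accounts, so this version only suits a small set such as one post's voters; at larger scale, Jaccard similarity can be approximated with techniques such as MinHash.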

socmedb.com