Photo illustration: AI Bias vs Human Moderation in TikTok

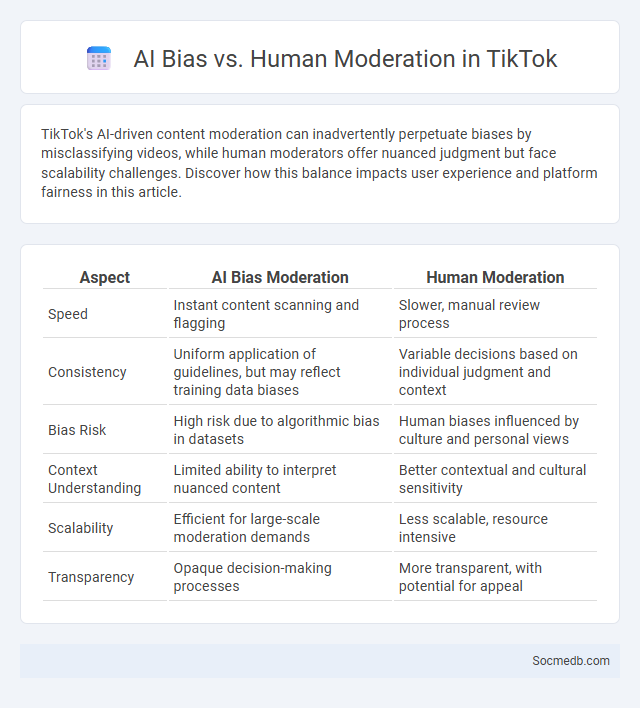

TikTok's AI-driven content moderation can inadvertently perpetuate biases by misclassifying videos, while human moderators offer nuanced judgment but face scalability challenges. Discover how this balance impacts user experience and platform fairness in this article.

Table of Comparison

| Aspect | AI Moderation | Human Moderation |
|---|---|---|
| Speed | Instant content scanning and flagging | Slower, manual review process |
| Consistency | Uniform application of guidelines, but may reflect training data biases | Variable decisions based on individual judgment and context |
| Bias Risk | High risk due to algorithmic bias in datasets | Human biases influenced by culture and personal views |
| Context Understanding | Limited ability to interpret nuanced content | Better contextual and cultural sensitivity |
| Scalability | Efficient for large-scale moderation demands | Less scalable, resource intensive |
| Transparency | Opaque decision-making processes | More transparent, with potential for appeal |

Understanding AI Bias in TikTok Content Moderation

AI bias in TikTok content moderation significantly impacts the platform's ability to fairly regulate videos, often amplifying existing social inequalities by disproportionately targeting marginalized groups. Machine learning algorithms trained on biased datasets can misclassify content, leading to inconsistent enforcement of community guidelines and censorship controversies. Recognizing and addressing these biases is crucial for developing transparent, equitable moderation systems that uphold user trust and promote diverse, inclusive digital spaces.
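One common way to check whether a moderation model targets some groups disproportionately is to compare false positive rates, meaning the share of non-violating videos that were flagged anyway, across creator groups. The sketch below is illustrative only: the record layout, the group names, and the sample data are assumptions made for this example, not TikTok data or TikTok's actual method.

```python
from collections import defaultdict

def false_positive_rates(records):
    """Return the per-group share of non-violating videos that were flagged.

    records: iterable of (group, flagged, violates) tuples (hypothetical layout).
    """
    flagged_benign = defaultdict(int)  # benign videos that were flagged
    total_benign = defaultdict(int)    # all benign videos
    for group, flagged, violates in records:
        if not violates:
            total_benign[group] += 1
            if flagged:
                flagged_benign[group] += 1
    return {g: flagged_benign[g] / total_benign[g] for g in total_benign}

# Made-up sample: (creator group, flagged by model, actually violates guidelines)
sample = [
    ("group_a", True, False),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_b", True, False),
    ("group_b", True, False),
    ("group_b", False, False),
    ("group_b", False, False),
    ("group_b", True, True),   # a true violation, excluded from the rate
]

rates = false_positive_rates(sample)
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.0%} of benign videos flagged")
```

A large gap between groups in a check like this would suggest that enforcement is uneven, even if overall accuracy looks acceptable.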

Human Moderation: Pros and Cons in Social Platforms

Human moderation on social platforms ensures nuanced understanding of context and cultural sensitivity, reducing the risk of misinterpreting content compared to automated systems. However, it involves higher operational costs and slower response times, which can limit scalability and delay content review. Balancing human judgment with AI tools often provides the most effective approach to maintaining community standards and user safety.

Algorithmic Bias: Causes and Consequences on TikTok

Algorithmic bias on TikTok stems from machine learning models trained on skewed data reflecting societal prejudices, resulting in uneven content exposure and engagement rates. This bias disproportionately affects marginalized communities by amplifying stereotypes and limiting diverse representation within TikTok's For You page recommendations. Consequently, algorithmic bias not only hinders user experience but also perpetuates social inequalities through reinforced digital echo chambers.

Comparing Human vs AI Decision-Making in Moderation

Social media moderation involves complex decision-making in which human judgment is crucial for understanding context, tone, and cultural nuances that AI algorithms may miss. AI excels at processing vast amounts of data quickly, identifying patterns, and flagging content based on predefined rules, but it often struggles with ambiguity and evolving social norms. Your social media experience benefits from a hybrid approach that combines the speed and consistency of AI with the empathy and discretion of human moderators to ensure fair and effective content management.
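A hybrid approach of this kind is often implemented as confidence-based routing: the model acts alone only when it is very sure, and ambiguous cases go to a human reviewer. The following is a minimal sketch under stated assumptions; the function name, the score range, and both thresholds are hypothetical and do not describe any platform's real configuration.

```python
def route(violation_score, remove_at=0.95, approve_below=0.20):
    """Decide who handles a video, given a model's violation score in [0, 1].

    The thresholds are illustrative assumptions, not real platform settings.
    """
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= remove_at:
        return "auto_remove"      # model is highly confident of a violation
    if violation_score < approve_below:
        return "auto_approve"     # model is highly confident the video is fine
    return "human_review"         # ambiguous: needs context and judgment

for score in (0.99, 0.60, 0.05):
    print(score, "->", route(score))
```

Widening the band between the two thresholds sends more content to human reviewers, trading speed and cost for contextual judgment.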

Key Examples of Bias in TikTok’s Feed

TikTok's algorithm has been criticized for disproportionately promoting content that aligns with popular trends, often sidelining niche or minority creators. Critics argue that the platform's feed can amplify stereotypes, for example by favoring certain body types, ethnicities, or gender representations, which narrows the range of content users see. These biases in TikTok's feed affect user experience and perpetuate social inequalities by limiting the visibility of underrepresented voices.

The Role of Training Data in Algorithmic Bias

Training data significantly influences social media algorithms, as biased or unrepresentative datasets can perpetuate stereotypes and misinformation. Algorithms trained on skewed data may amplify harmful content, affecting user experience and societal perceptions. Ensuring diverse and balanced training data is essential to minimize algorithmic bias and promote fair content distribution.
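A simple first check for unrepresentative training data is to measure how much of the dataset each group contributes and flag groups that fall below a minimum share. The sketch below assumes each training example carries a single group label (here, a language code); the labels, the sample counts, and the 10% threshold are invented for illustration.

```python
from collections import Counter

def representation_shares(labels):
    """Return each label's share of the dataset as a fraction of the total."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}

def underrepresented(labels, min_share=0.10):
    """List labels whose share is below min_share (an assumed threshold)."""
    shares = representation_shares(labels)
    return sorted(label for label, share in shares.items() if share < min_share)

# Made-up dataset: language label of each training example
training_labels = ["en"] * 80 + ["es"] * 15 + ["sw"] * 5

print(representation_shares(training_labels))
print("Underrepresented:", underrepresented(training_labels))
```

Raw share is only a starting point: a group can be well represented by count and still be mislabeled more often, which is why outcome checks on the trained model remain necessary.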

Transparency and Accountability in Moderation Systems

Transparency and accountability in social media moderation systems are crucial to maintaining user trust and platform integrity. Clear policies and open communication about content rules, enforcement actions, and appeal processes empower you to understand how decisions are made and ensure fair treatment. Regular audits and public reporting of moderation outcomes reinforce accountability and help combat misinformation and harmful content effectively.
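Public reporting of moderation outcomes usually boils down to a few aggregate numbers, such as how many items were removed, how many removals were appealed, and how many appeals succeeded. The sketch below shows one way such a summary could be computed; the record fields (`action`, `appealed`, `overturned`) and the sample log are hypothetical and not taken from any real transparency report.

```python
def transparency_summary(decisions):
    """Aggregate moderation decisions into report-style counts.

    decisions: list of dicts with hypothetical keys
    'action', 'appealed', and 'overturned'.
    """
    removed = [d for d in decisions if d["action"] == "removed"]
    appealed = [d for d in removed if d["appealed"]]
    overturned = [d for d in appealed if d["overturned"]]
    return {
        "total_decisions": len(decisions),
        "removed": len(removed),
        "appealed": len(appealed),
        "overturned": len(overturned),
        # Share of appealed removals that were reversed on review
        "overturn_rate": len(overturned) / len(appealed) if appealed else 0.0,
    }

# Made-up decision log
log = [
    {"action": "removed", "appealed": True, "overturned": True},
    {"action": "removed", "appealed": True, "overturned": False},
    {"action": "removed", "appealed": False, "overturned": False},
    {"action": "kept", "appealed": False, "overturned": False},
]

summary = transparency_summary(log)
print(summary)
```

A high overturn rate is a useful signal for auditors, since it indicates that many initial removals did not hold up under review.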

Impacts of Biased Moderation on Marginalized Users

Biased moderation on social media platforms disproportionately silences marginalized users, limiting their visibility and restricting their ability to participate in public discourse. This unequal enforcement of community guidelines often reinforces existing social inequalities by suppressing diverse perspectives and perpetuating systemic discrimination. As a result, marginalized groups face increased digital exclusion and diminished empowerment in online spaces.

Strategies to Reduce Bias in TikTok’s Moderation

Reducing bias in TikTok's moderation depends on continuously updating moderation policies and retraining the underlying machine learning models on diverse, global content. You can contribute to more equitable moderation by flagging inappropriate content and providing feedback, which helps refine how the AI handles sensitive material. Together, these strategies improve the platform's ability to create a fair, inclusive social media environment that respects varied cultural contexts.

The Future of Fairness: AI, Humans, and Ethical Moderation

Emerging advances in AI-driven content moderation aim to balance algorithmic efficiency with human ethical judgment to promote fairness on social media platforms. Integrating transparent AI systems with diverse human oversight can reduce bias and uphold community standards while respecting freedom of expression. This collaboration fosters accountable moderation frameworks that adapt to evolving societal values and protect user rights in digital spaces.

socmedb.com
