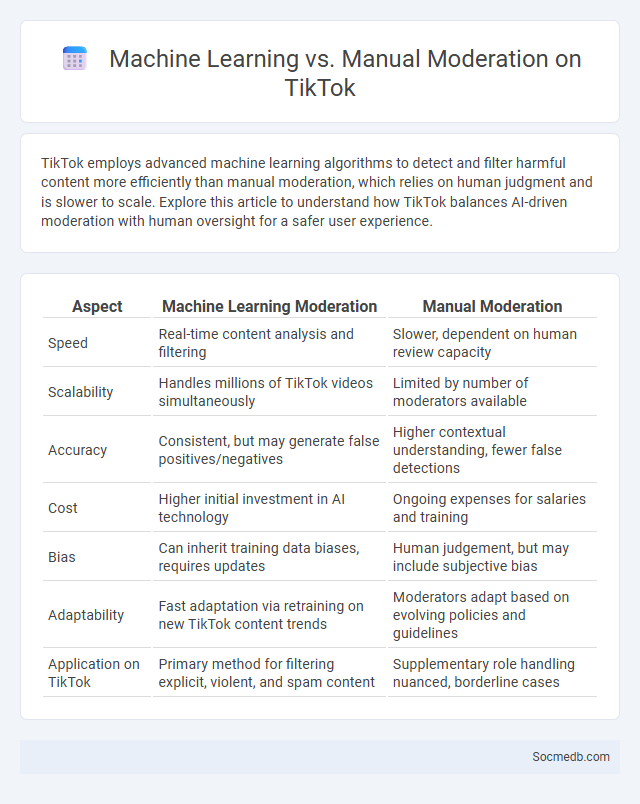

Photo illustration: Machine Learning vs Manual Moderation TikTok

TikTok uses machine learning to detect and filter harmful content faster than manual moderation, which relies on human judgment and is harder to scale. This article explains how TikTok balances AI-driven moderation with human oversight for a safer user experience.

Table of Comparison

| Aspect | Machine Learning Moderation | Manual Moderation |
|---|---|---|
| Speed | Real-time content analysis and filtering | Slower, dependent on human review capacity |
| Scalability | Handles millions of TikTok videos simultaneously | Limited by number of moderators available |
| Accuracy | Consistent, but may generate false positives/negatives | Higher contextual understanding, fewer false detections |
| Cost | Higher initial investment in AI technology | Ongoing expenses for salaries and training |
| Bias | Can inherit training data biases, requires updates | Human judgement, but may include subjective bias |
| Adaptability | Fast adaptation via retraining on new TikTok content trends | Moderators adapt based on evolving policies and guidelines |
| Application on TikTok | Primary method for filtering explicit, violent, and spam content | Supplementary role handling nuanced, borderline cases |

Introduction to Content Moderation on TikTok

Content moderation on TikTok involves the systematic review and management of user-generated content to ensure compliance with community guidelines and legal standards. This process employs a combination of artificial intelligence algorithms and human moderators to detect and remove harmful, inappropriate, or misleading content efficiently. TikTok's content moderation strategy aims to create a safe and enjoyable environment for its diverse global user base by balancing freedom of expression with the necessity of maintaining platform integrity.

The Evolution of Moderation: Manual vs Machine Learning

Social media moderation has evolved from purely manual review to machine learning systems that filter content faster and more consistently. AI-powered tools can flag harmful or inappropriate posts at a volume that human teams could not review alone, which helps platforms maintain a safe and engaging online environment. Combining human judgment with automated systems gives a more balanced way to enforce community standards.
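
One common way to combine automated systems with human judgment is to route each item by model confidence. The sketch below is illustrative only: the `Video` type, the `harm_score` field, and both thresholds are assumptions made for this example, not TikTok's actual pipeline or values.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
REMOVE_THRESHOLD = 0.95   # at or above this, remove automatically
REVIEW_THRESHOLD = 0.60   # at or above this, send to a human moderator


@dataclass(frozen=True)
class Video:
    video_id: str
    harm_score: float  # assumed classifier output in [0, 1]


def route(video: Video) -> str:
    """Return 'remove', 'human_review', or 'allow' for one video."""
    if not 0.0 <= video.harm_score <= 1.0:
        raise ValueError("harm_score must be between 0 and 1")
    if video.harm_score >= REMOVE_THRESHOLD:
        return "remove"
    if video.harm_score >= REVIEW_THRESHOLD:
        return "human_review"
    return "allow"


if __name__ == "__main__":
    queue = [Video("a", 0.99), Video("b", 0.72), Video("c", 0.05)]
    for video in queue:
        print(video.video_id, route(video))
```

Under these assumptions, only the middle band of uncertain scores reaches human moderators, so the automated system handles volume while people handle borderline cases.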

Understanding TikTok’s Algorithm-Driven Moderation

TikTok's algorithm-driven moderation relies on machine learning models that analyze content and user behavior signals and apply community guidelines to identify and remove harmful or inappropriate content quickly. Because the platform also prioritizes personalized content delivery, it uses real-time data to enforce moderation policies while balancing user experience and safety. Continuous updates to the algorithm let TikTok adapt to emerging trends and moderate content at scale.

Human Judgment: Strengths and Weaknesses of Manual Moderation

Human judgment plays a critical role in social media content moderation by effectively interpreting nuances, context, and cultural sensitivities that automated systems often miss. Manual moderation excels in identifying sarcasm, hate speech subtleties, and evolving slang, ensuring community standards are upheld with empathy and flexibility. However, human moderators face limitations such as scalability challenges, psychological stress from exposure to harmful content, and potential inconsistencies in decision-making across diverse cultural contexts.

Machine Learning in TikTok Moderation: Opportunities and Risks

Machine learning in TikTok moderation enhances content filtering by quickly identifying harmful or inappropriate videos using advanced algorithms trained on vast datasets, increasing user safety and platform compliance. This technology also enables real-time detection of emerging trends in misinformation, hate speech, and copyright infringements, allowing TikTok to proactively mitigate risks. However, challenges include algorithmic bias, the risk of over-censorship, and privacy concerns stemming from automated content analysis.

The Reality of Algorithmic Bias on TikTok

Algorithmic bias on TikTok can affect content visibility, favoring certain demographics and perpetuating stereotypes. Some studies suggest that TikTok's recommendation system can amplify biases related to race, gender, and age, influencing user engagement and content diversity. Understanding and addressing these biases is crucial for promoting equitable representation and a more inclusive social media environment.
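
A simple first check for visibility gaps is to compare average exposure across creator groups. The sketch below is a hypothetical audit, not a method attributed to TikTok or to any particular study: the group labels, the `(group, views)` input format, and the function names are all assumptions for illustration.

```python
from collections import defaultdict


def mean_views_by_group(posts):
    """Average views per post for each group.

    `posts` is an iterable of (group_label, view_count) pairs.
    """
    totals = defaultdict(lambda: [0, 0])  # group -> [sum of views, post count]
    for group, views in posts:
        totals[group][0] += views
        totals[group][1] += 1
    return {group: total / count for group, (total, count) in totals.items()}


def exposure_ratios(posts):
    """Each group's mean views divided by the highest group's mean views."""
    means = mean_views_by_group(posts)
    if not means:
        return {}
    top = max(means.values())
    return {group: (mean / top if top else 0.0) for group, mean in means.items()}


if __name__ == "__main__":
    sample = [("A", 100), ("A", 300), ("B", 100)]  # made-up numbers
    print(exposure_ratios(sample))
```

A ratio well below 1.0 is only a signal to investigate. It does not show bias on its own, because groups can differ in posting time, topic, or audience size.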

Comparing Accuracy: Manual Moderation vs. AI Systems

Manual moderation on social media platforms ensures high accuracy in identifying nuanced context and subtle harmful content due to human judgment but is limited by scalability and inconsistency. AI systems leverage machine learning algorithms to efficiently detect and filter large volumes of harmful posts with consistent performance but may struggle with context sensitivity and cultural nuances. Combining manual moderation with AI-driven tools enhances overall accuracy by balancing human insight and automated efficiency in content review.
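
Accuracy comparisons like this are usually expressed through false positives and false negatives. The sketch below computes three standard metrics from confusion counts. The class name and the sample numbers are made up for illustration and do not describe any real moderation system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    true_positives: int   # harmful content that was flagged
    false_positives: int  # benign content that was flagged
    true_negatives: int   # benign content that was left alone
    false_negatives: int  # harmful content that was missed


def _ratio(numerator: int, denominator: int) -> float:
    """Divide safely, returning 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def precision(c: ConfusionCounts) -> float:
    """Share of flagged items that were actually harmful."""
    return _ratio(c.true_positives, c.true_positives + c.false_positives)


def recall(c: ConfusionCounts) -> float:
    """Share of harmful items that were flagged."""
    return _ratio(c.true_positives, c.true_positives + c.false_negatives)


def false_positive_rate(c: ConfusionCounts) -> float:
    """Share of benign items that were wrongly flagged."""
    return _ratio(c.false_positives, c.false_positives + c.true_negatives)


if __name__ == "__main__":
    counts = ConfusionCounts(80, 20, 880, 20)  # hypothetical review sample
    print(precision(counts), recall(counts), false_positive_rate(counts))
```

Computing the same metrics on a shared sample for a human review team and for an automated system gives a like-for-like comparison, rather than a single "accuracy" figure that hides which kind of error dominates.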

Ethical Implications of Automated Moderation

Automated moderation on social media platforms raises critical ethical concerns related to bias, transparency, and accountability, as algorithms can inadvertently censor legitimate content while failing to detect harmful material. Your online experience may be shaped by these opaque systems, which often lack clear guidelines or avenues for appeal. Ensuring fairness and protecting users' rights requires continuous improvement and human oversight to balance technological efficiency with ethical responsibility.

User Experience: Impact of Bias and Errors

Content curation algorithms on social media platforms often exhibit bias and errors, leading to echo chambers and misinformation that degrade user experience. These biases affect engagement metrics and user trust by filtering out diverse perspectives and amplifying misleading information. Identifying and mitigating algorithmic bias is essential for creating fairer, more accurate content delivery that enhances user satisfaction and promotes healthy online interactions.

Future Trends: Balancing AI and Human Oversight on TikTok

TikTok's future direction emphasizes a balance between AI-driven content personalization and human oversight to keep moderation ethical and accurate. Machine learning algorithms analyze user behavior and preferences to deliver tailored content, while human moderators handle context-sensitive decisions and curb misinformation. This dual approach aims to enhance user engagement without compromising platform integrity or community safety.

socmedb.com
