Photo illustration: TikTok banned content vs YouTube community guidelines

TikTok prohibits hate speech, harmful misinformation, and explicit material. YouTube's Community Guidelines cover much of the same ground but place particular emphasis on copyright infringement, violent content, and harmful challenges. This article examines the nuanced differences between TikTok's banned content and YouTube's community standards.

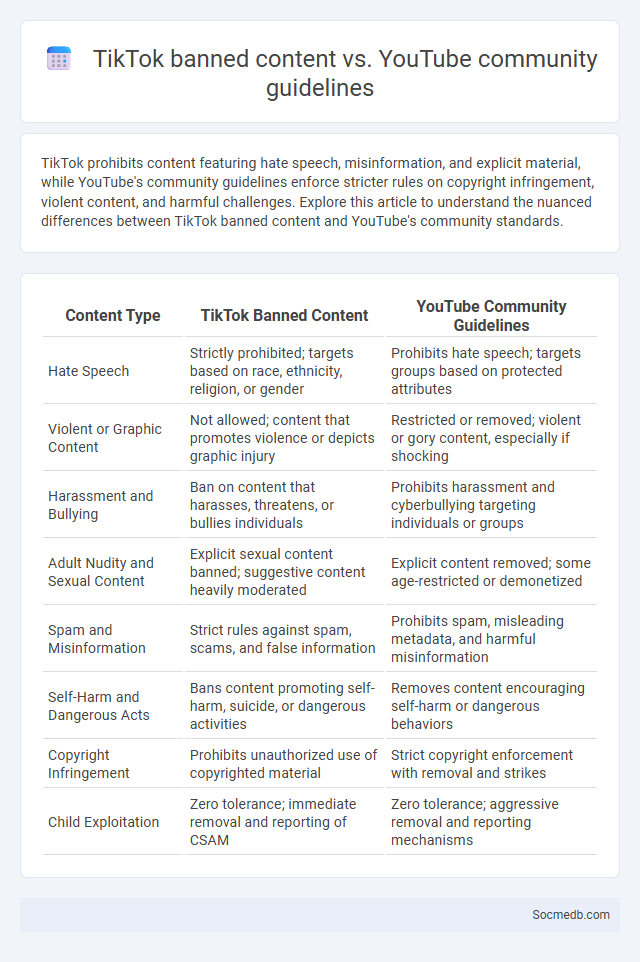

Comparison Table

| Content Type | TikTok Banned Content | YouTube Community Guidelines |
|---|---|---|
| Hate Speech | Strictly prohibited, including attacks based on race, ethnicity, religion, or gender | Prohibited when it targets individuals or groups based on protected attributes |
| Violent or Graphic Content | Not allowed when it promotes violence or depicts graphic injury | Removed or restricted, especially gory content intended to shock |
| Harassment and Bullying | Content that harasses, threatens, or bullies individuals is banned | Harassment and cyberbullying targeting individuals or groups are prohibited |
| Adult Nudity and Sexual Content | Explicit sexual content banned; suggestive content heavily moderated | Explicit content removed; some content age-restricted or demonetized |
| Spam and Misinformation | Strict rules against spam, scams, and false information | Prohibits spam, misleading metadata, and harmful misinformation |
| Self-Harm and Dangerous Acts | Bans content promoting self-harm, suicide, or dangerous activities | Removes content encouraging self-harm or dangerous behavior |
| Copyright Infringement | Prohibits unauthorized use of copyrighted material | Strict enforcement through removals and copyright strikes |
| Child Exploitation | Zero tolerance; immediate removal and reporting of child sexual abuse material (CSAM) | Zero tolerance; aggressive removal and reporting mechanisms |

Overview of Content Regulation on TikTok and YouTube

Both TikTok and YouTube regulate content through policies that target harmful, misleading, or inappropriate material. TikTok combines AI-driven moderation with human reviewers to enforce its Community Guidelines, emphasizing fast removal and user reports. YouTube pairs algorithmic detection with Content ID and manual review to handle copyright claims, hate speech, and misinformation, with the aim of keeping user interactions safe and compliant with platform standards.

What Constitutes Banned Content on TikTok?

Banned content on TikTok includes material that violates community guidelines such as hate speech, harassment, explicit sexual content, and misinformation related to health or safety. Content promoting violence, self-harm, or dangerous behavior is strictly prohibited to ensure user safety and platform integrity. TikTok also removes content that infringes on intellectual property rights or involves illegal activities.

Key Provisions in YouTube’s Community Guidelines

YouTube's Community Guidelines prohibit harmful content, including hate speech, harassment, and violent extremism. Misinformation, spam, and deceptive practices are monitored and removed to protect user trust. Enforcement includes content removal, strikes, and account suspension or termination, and the policies are updated as social media evolves.

Comparative Analysis: TikTok Banned Content vs. YouTube Guidelines

TikTok enforces strict prohibitions on violent extremism, misinformation, and explicit content, with particular emphasis on protecting younger audiences through age-appropriate restrictions and AI-driven automated moderation. YouTube's Community Guidelines similarly ban hate speech, harmful misinformation, and sexually explicit material, but enforce them through a three-strike policy (three strikes within 90 days lead to channel termination), combined with human review and machine learning for takedowns and demonetization. In short, TikTok prioritizes rapid automated removal to maintain a family-friendly environment, whereas YouTube balances automated enforcement with structured appeals and creator monetization policies.

Categories of Prohibited Content on Both Platforms

Both platforms prohibit hate speech, violent or graphic content, harassment, and misinformation to maintain user safety and trust. Content involving child exploitation, illegal activities, or spam is also banned and removed through a combination of automated systems and user reports. These categories broadly mirror the policies of other major platforms, such as Facebook and Twitter, and reflect both legal requirements and community standards.

Enforcement Mechanisms: TikTok vs. YouTube

TikTok and YouTube differ significantly in how they enforce their content rules. TikTok uses a mix of automated algorithms and human moderators to identify and remove violating content quickly, prioritizing real-time intervention against misinformation and harmful videos. YouTube combines machine learning, human review, and Content ID with a three-strike system and a formal appeals process. Understanding these enforcement strategies can help you stay within each platform's rules and have a safer, more positive experience online.

Impact of Banned Content Policies on Creators

Banned content policies affect creators by limiting what they can share, which can reduce engagement and reach. These restrictions can also encourage self-censorship, discouraging creative experimentation. Creators should stay informed about platform guidelines and adapt their content strategy to maintain visibility as the rules evolve.

Regional Differences in Content Moderation

Social media platforms apply different content moderation policies depending on regional laws and cultural norms, so acceptable content varies from country to country. A post or topic that is allowed in one region may be restricted or removed in another. Understanding these regional differences helps users navigate platform restrictions and comply with local regulations.

Notable Cases of Content Removal and Account Bans

Platforms enforce their standards through content removal and account bans. High-profile figures have been suspended for hate speech or misinformation, cases that highlight the importance of digital accountability. Enforcement typically combines AI detection with human moderators to catch violations quickly and protect users from harmful content. Knowing how these mechanisms work helps you use social media responsibly and protect your online experience.

The Future of Content Regulation for Social Media Platforms

Advances in artificial intelligence and machine learning are improving real-time detection of harmful or misleading content on social media platforms. At the same time, regulatory frameworks such as the European Union's Digital Services Act impose stricter requirements for transparency and accountability in content moderation. Some platforms are also experimenting with decentralized moderation models that aim to balance user privacy with effective oversight and curb the spread of disinformation.

socmedb.com