Photo illustration: TikTok content moderation vs Facebook moderation

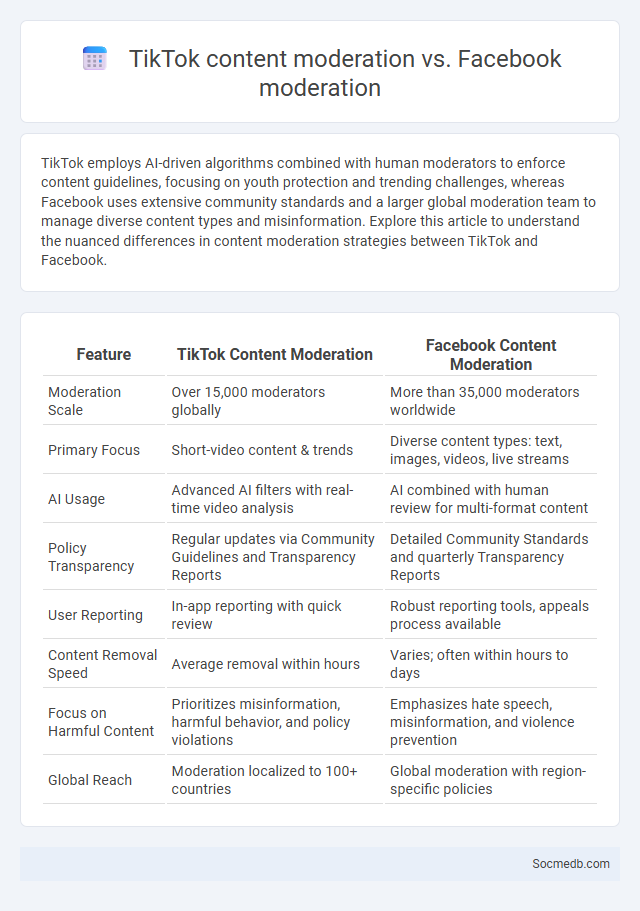

TikTok combines AI-driven algorithms with human moderators to enforce its content guidelines, with a particular focus on youth protection and trending challenges. Facebook relies on extensive community standards and a larger global moderation team to manage diverse content types and misinformation. This article examines the differences between the two platforms' content moderation strategies.

Table of Comparison

| Feature | TikTok Content Moderation | Facebook Content Moderation |
|---|---|---|
| Moderation Scale | Over 15,000 moderators globally | More than 35,000 moderators worldwide |
| Primary Focus | Short-video content & trends | Diverse content types: text, images, videos, live streams |
| AI Usage | Advanced AI filters with real-time video analysis | AI combined with human review for multi-format content |
| Policy Transparency | Regular updates via Community Guidelines and Transparency Reports | Detailed Community Standards and quarterly Transparency Reports |
| User Reporting | In-app reporting with quick review | Robust reporting tools, appeals process available |
| Content Removal Speed | Average removal within hours | Varies; often within hours to days |
| Focus on Harmful Content | Prioritizes misinformation, harmful behavior, and policy violations | Emphasizes hate speech, misinformation, and violence prevention |
| Global Reach | Moderation localized to 100+ countries | Global moderation with region-specific policies |

Introduction to Social Media Content Moderation

Social media content moderation involves the systematic review and management of user-generated content to ensure compliance with platform policies and community standards. This process utilizes a combination of automated algorithms and human moderators to identify and remove harmful, inappropriate, or illegal content such as hate speech, misinformation, and explicit material. Effective moderation balances user freedom of expression with the need to maintain a safe and respectful online environment across platforms like Facebook, Instagram, Twitter, and TikTok.

Overview of TikTok’s Moderation Policies

TikTok's moderation policies combine automated technology with human review to enforce community guidelines against harmful content such as hate speech, misinformation, and explicit material. Machine learning models flag likely violations quickly, and teams of moderators then evaluate the flagged content to make context-sensitive decisions. TikTok also updates its policies regularly and gives users in-app tools to report inappropriate content.

Facebook’s Approach to Content Moderation

Facebook's approach to content moderation relies heavily on artificial intelligence combined with human reviewers to identify and remove harmful or inappropriate content. Its community standards, which address misinformation, hate speech, and graphic violence, are updated continually. This dual system lets Facebook handle billions of posts daily and adjust its enforcement as new threats emerge.

Banned Content: Definitions and Examples

Banned content on social media is material that violates platform guidelines, including hate speech, graphic violence, child exploitation, and misinformation. Examples include posts promoting terrorism, non-consensual intimate images, and false medical advice. Social media companies use content moderation algorithms and human reviewers to identify and remove such prohibited content, both to keep users safe and to comply with legal regulations.

Comparative Analysis of TikTok vs. Facebook Guidelines

TikTok's content guidelines emphasize creative expression and community engagement while maintaining strict policies against misinformation and harmful content. Facebook takes a broader approach, targeting user safety, privacy, and hate speech prevention across many content formats. Because TikTok's algorithm-driven distribution can spread a video widely within a short time, its moderation has to act quickly, whereas Facebook layers manual and AI interventions to monitor its extensive global user base. Both platforms adapt their guidelines to comply with regional laws, but TikTok's rules center on short-form video trends, while Facebook's cover a wider spectrum of text, images, and video interactions.

Automated Moderation Tools: AI and Human Review

Automated moderation tools use AI algorithms to identify and filter harmful content quickly, reducing users' exposure to inappropriate material. These systems analyze text, images, and videos with natural language processing and computer vision, but they often need human review to interpret nuanced context and minimize false positives. The result is a division of labor: AI handles content at scale, while human moderators check accuracy and fairness on the cases machines cannot judge reliably.
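One common way to structure this division of labor is confidence-based routing: a classifier scores each post, the clearest violations are acted on automatically, and the ambiguous middle band goes to people. The sketch below assumes a classifier that outputs a violation score between 0 and 1; the `Post` and `route` names and both threshold values are hypothetical and do not describe either platform's actual system.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration only; real systems tune these per policy area.
REMOVE_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.60


@dataclass
class Post:
    post_id: str
    violation_score: float  # assumed output of an AI classifier, in [0, 1]


def route(post: Post) -> str:
    """Decide what happens to a post based on the classifier's confidence."""
    if not 0.0 <= post.violation_score <= 1.0:
        raise ValueError("violation_score must be in [0, 1]")
    if post.violation_score >= REMOVE_THRESHOLD:
        return "auto_remove"   # high confidence: act without waiting for a human
    if post.violation_score >= REVIEW_THRESHOLD:
        return "human_review"  # ambiguous: a moderator judges the context
    return "allow"             # low risk: publish normally


if __name__ == "__main__":
    for p in [Post("a", 0.99), Post("b", 0.72), Post("c", 0.10)]:
        print(p.post_id, route(p))
```

Lowering `REVIEW_THRESHOLD` sends more borderline posts to humans, trading moderator workload for fewer missed violations; raising `REMOVE_THRESHOLD` reduces automated false positives at the cost of a larger review queue.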

Handling Misinformation Across Platforms

Handling misinformation across social media platforms requires advanced algorithms to detect and flag false content swiftly. Collaboration between tech companies, fact-checking organizations, and governments enhances real-time verification and content moderation. Educating users on digital literacy empowers individuals to discern credible sources and reduce the spread of fake news.
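One technique for catching repeats of claims that fact-checkers have already rated false is near-duplicate text matching, so a single fact-check can be applied to many copies of a post. The sketch below swaps in a simple approach, Jaccard similarity over three-word shingles; it is not either platform's documented method, and the `DEBUNKED_CLAIMS` list, the `threshold` value, and the function names are hypothetical.

```python
from __future__ import annotations

import re
from typing import Optional

# Hypothetical claims already rated false by fact-checkers (illustrative only).
DEBUNKED_CLAIMS = [
    "drinking bleach cures the flu",
    "5g towers spread the virus",
]


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping k-word windows in lowercased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity |A & B| / |A | B|; 0.0 when both sets are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def match_debunked(post: str, threshold: float = 0.5) -> Optional[str]:
    """Return the most similar debunked claim if it meets threshold, else None."""
    post_sh = shingles(post)
    best_claim, best_score = None, 0.0
    for claim in DEBUNKED_CLAIMS:
        score = jaccard(post_sh, shingles(claim))
        if score > best_score:
            best_claim, best_score = claim, score
    return best_claim if best_score >= threshold else None
```

For example, `match_debunked("BREAKING: drinking bleach cures the flu")` shares three of four shingles with the first claim (similarity 0.75) and returns it, while an unrelated post returns `None`. Production systems would likely use more robust representations, since simple shingles miss paraphrases.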

Community Guidelines Enforcement: TikTok vs. Facebook

TikTok enforces community guidelines through AI-driven content moderation combined with human reviewers to swiftly remove harmful or violating content, prioritizing user safety and platform integrity. Facebook employs a multilayered approach integrating automated detection systems, user reports, and fact-checking partnerships to address content violations while balancing free speech concerns. Understanding these platforms' enforcement methods helps you navigate social media responsibly and contributes to a safer online environment.
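User reports feed both platforms' enforcement pipelines, and a backlog of reports has to be ordered somehow. A minimal sketch of triaging reports with a priority queue follows; the category names, the `SEVERITY` weights, and the rule "priority equals severity weight multiplied by report count" are illustrative assumptions, not TikTok's or Facebook's actual policy.

```python
from __future__ import annotations

import heapq
from collections import Counter

# Hypothetical severity weights; real platforms define their own policy tiers.
SEVERITY = {
    "child_safety": 100,
    "violence": 50,
    "hate_speech": 40,
    "misinformation": 30,
    "spam": 5,
}


def triage(reports: list[tuple[str, str]]) -> list[str]:
    """Return post IDs in review order.

    Each report is a (post_id, category) pair. A pair's priority is its
    severity weight times the number of times it was reported; unknown
    categories get weight 1. Ties are broken by post_id.
    """
    counts = Counter(reports)
    heap: list[tuple[int, str]] = []
    for (post_id, category), n in counts.items():
        priority = SEVERITY.get(category, 1) * n
        heapq.heappush(heap, (-priority, post_id))  # negate: heapq is a min-heap

    order: list[str] = []
    seen: set[str] = set()
    while heap:
        _, post_id = heapq.heappop(heap)
        if post_id not in seen:  # a post reported under several categories is reviewed once
            seen.add(post_id)
            order.append(post_id)
    return order
```

With ten spam reports against one post, one violence report against another, and two hate-speech reports against a third, the hate-speech post (priority 80) is reviewed first, showing how severity weights keep a flood of low-severity reports from burying serious ones.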

Regional Variations and Cultural Sensitivities

Social media usage exhibits significant regional variations influenced by local languages, internet infrastructure, and cultural norms, with platforms like WeChat dominating in China while TikTok thrives globally. Cultural sensitivities shape content preferences and user engagement, requiring marketers to tailor messaging to respect traditions, religious beliefs, and social values unique to each region. Understanding these differences enhances targeted communication strategies and fosters authentic connections with diverse audiences worldwide.

Future Trends in Social Media Content Moderation

Future trends in social media content moderation center on more advanced AI-driven algorithms that can identify harmful content with higher accuracy and speed. Users are also likely to see greater transparency and user-controlled moderation settings that let individuals tailor content filters to their own preferences. Expect closer cross-platform coordination on moderation and more real-time response systems to address the growing complexity of online interactions.

socmedb.com