Photo illustration: TikTok Suppression vs Censorship

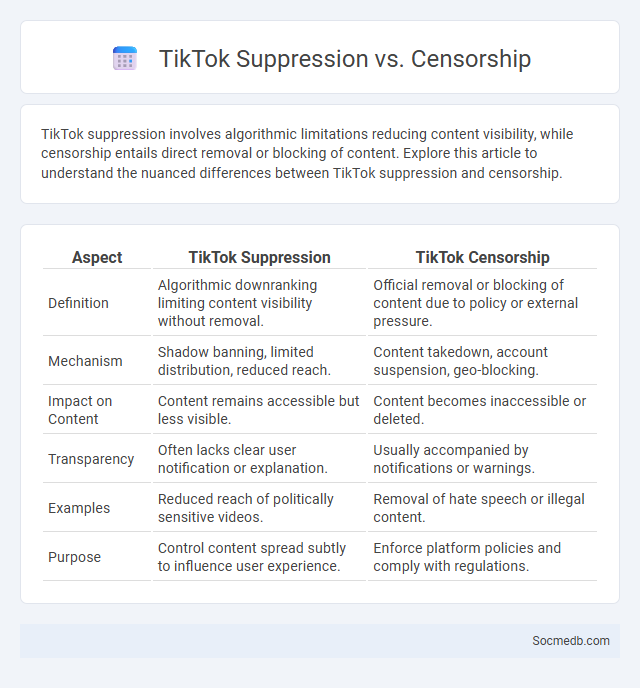

On TikTok, suppression means algorithmic limits that reduce a video's visibility, while censorship means removing or blocking content outright. This article explains the differences between the two.

Table of Comparison

| Aspect | TikTok Suppression | TikTok Censorship |
|---|---|---|
| Definition | Algorithmic downranking limiting content visibility without removal. | Official removal or blocking of content due to policy or external pressure. |
| Mechanism | Shadow banning, limited distribution, reduced reach. | Content takedown, account suspension, geo-blocking. |
| Impact on Content | Content remains accessible but less visible. | Content becomes inaccessible or deleted. |
| Transparency | Often lacks clear user notification or explanation. | Usually accompanied by notifications or warnings. |
| Examples | Reduced reach of politically sensitive videos. | Removal of hate speech or illegal content. |
| Purpose | Control content spread subtly to influence user experience. | Enforce platform policies and comply with regulations. |

Understanding TikTok Suppression: What Does It Mean?

TikTok suppression refers to the platform's algorithm limiting the visibility of certain videos or accounts, often because their content violates community guidelines or has been flagged as misinformation. In practice, this means TikTok's content moderation can shrink your reach and engagement by reducing the audience that sees your posts. Tracking TikTok's policy changes and adjusting your content strategy accordingly can help maintain or improve your account's visibility.

Defining Censorship on Social Media Platforms

Censorship on social media platforms is the restriction or removal of content by platform administrators or automated systems, based on community guidelines or legal requirements. It aims to balance free expression against the prevention of harmful or illegal content, a balance that often fuels debate over transparency and fairness. Defining censorship in this context therefore requires examining platform policies, content moderation practices, and their effects on users' rights and online discourse.

Algorithmic Bias: How TikTok’s Algorithms Influence Content

TikTok's algorithms rank content by user interaction patterns, amplifying popular or highly engaging videos while giving less mainstream perspectives less exposure. This algorithmic bias can create echo chambers that narrow exposure to diverse viewpoints and reinforce existing preferences. As a result, users may see skewed feeds that shape their perceptions and influence their behavior on the platform.

Key Differences Between Suppression, Censorship, and Bias

Suppression on social media means intentionally hiding or downranking content to reduce its visibility, whereas censorship means removing or blocking posts that platform policies deem inappropriate or harmful. Bias occurs when algorithms or moderators disproportionately favor or target certain viewpoints, skewing how fairly content is exposed. Understanding these distinctions helps you evaluate what shapes your feed and recognize potential influences on the information you consume.
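To make the distinction concrete, here is a minimal Python sketch of a toy feed, assuming a single numeric ranking score per post. The names `Post`, `build_feed`, `is_accessible`, and `suppression_factor` are hypothetical and do not describe TikTok's actual system: a suppressed post stays in the ranking with a reduced score, while a censored post is dropped before ranking and cannot be reached at all.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    base_score: float  # hypothetical combined relevance/engagement score


def build_feed(posts, suppressed, removed, suppression_factor=0.1, slots=3):
    """Toy feed: suppression downranks a post, censorship removes it."""
    candidates = []
    for post in posts:
        if post.post_id in removed:
            # Censorship: the post never enters ranking.
            continue
        score = post.base_score
        if post.post_id in suppressed:
            # Suppression: the post stays eligible but with a reduced score.
            score *= suppression_factor
        candidates.append((score, post.post_id))
    # Highest score first; only the top `slots` posts are shown.
    candidates.sort(reverse=True)
    return [post_id for _, post_id in candidates[:slots]]


def is_accessible(post_id, removed):
    """A suppressed post can still be opened directly; a removed one cannot."""
    return post_id not in removed


if __name__ == "__main__":
    posts = [Post("a", 0.9), Post("b", 0.8), Post("c", 0.7), Post("d", 0.6)]
    print(build_feed(posts, suppressed={"a"}, removed={"b"}, slots=2))
    print(is_accessible("a", {"b"}), is_accessible("b", {"b"}))
```

With posts `a` through `d` scored 0.9 down to 0.6, suppressing `a` and removing `b` yields a two-slot feed of `["c", "d"]`. Post `a` is missing from the feed, yet `is_accessible("a", {"b"})` is still `True`; post `b` is gone entirely.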

Case Studies: Evidence of TikTok Suppression

TikTok suppression has been documented in multiple case studies that report patterns of content throttling and algorithmic filtering aimed at specific creators and topics. According to this research, TikTok's moderation policies often disproportionately affect political activists, minority groups, and controversial subjects, reducing the visibility and engagement of their content. These analyses underscore ongoing concerns about transparency and fairness in TikTok's content curation and suggest that users should stay alert to potential platform biases.

The Impact of Algorithmic Decisions on User Visibility

Algorithmic decisions on social media platforms significantly influence user visibility by prioritizing content based on engagement metrics, relevancy, and personalized data signals. These algorithms often favor posts with higher interaction rates, thereby amplifying popular voices while potentially marginalizing niche or emerging creators. The resultant visibility disparity shapes online discourse, affecting reach, user experience, and content diversity across platforms like Facebook, Instagram, and TikTok.
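The amplification effect can be illustrated with a minimal Python sketch of an engagement feedback loop. It assumes, purely for illustration, that a feed has a fixed number of slots, that creators are ranked only by accumulated interactions, and that every shown creator gains the same fixed number of interactions per round; `simulate_engagement_loop` and its parameters are hypothetical, not any platform's real ranking logic.

```python
def simulate_engagement_loop(initial, slots=2, gain_per_round=5, rounds=3):
    """Toy rich-get-richer loop: only ranked-in creators gain new interactions."""
    totals = dict(initial)
    shown_counts = {creator: 0 for creator in totals}
    for _ in range(rounds):
        # Rank by accumulated interactions; break ties by name so results are deterministic.
        ranked = sorted(totals, key=lambda creator: (-totals[creator], creator))
        for creator in ranked[:slots]:
            # Assumption: being shown yields a fixed number of new interactions.
            totals[creator] += gain_per_round
            shown_counts[creator] += 1
    return totals, shown_counts


if __name__ == "__main__":
    totals, shown = simulate_engagement_loop({"big": 50, "mid": 40, "niche": 38})
    print(totals)
    print(shown)
```

Starting from `{"big": 50, "mid": 40, "niche": 38}` with two slots, three rounds give `big` 65 and `mid` 55 interactions, while `niche` stays at 38 and is never shown. A two-point starting gap between `mid` and `niche` becomes a seventeen-point gap purely because ranking decides who gets the chance to earn more engagement.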

TikTok’s Official Policies on Moderation and Content Guidelines

TikTok's official moderation policies and community guidelines aim to maintain a safe and supportive community by prohibiting hate speech, misinformation, and harmful content. The platform enforces these rules with a combination of AI-based detection and human reviewers, with the goal of quickly removing policy-violating videos and accounts. Transparency reports and a user appeals process are intended to reinforce TikTok's stated commitment to responsible content moderation and user protection.

User Experiences: Stories of Shadowbanning and Content Removal

Shadowbanning on social media platforms reduces a post's visibility without notifying the user, often leaving creators confused and frustrated. Users report sudden drops in engagement metrics despite consistent activity, which may signal algorithmic filtering or content removal. These experiences highlight how opaque moderation practices can undermine freedom of expression and community trust.

Global Perspectives: Suppression and Censorship Across Regions

Social media platforms face varying degrees of suppression and censorship worldwide, with governments in countries and regions such as China, Russia, and parts of the Middle East imposing strict controls that limit content and restrict access. These measures often target political dissent, misinformation, and cultural content deemed sensitive, affecting both the free flow of information and the user experience. Understanding these regional differences helps you navigate social media more effectively and stay aware of the diverse challenges facing global communication.

Solutions and Reforms: Making Algorithms More Transparent

Improving transparency in social media algorithms involves implementing clear guidelines that disclose how content is prioritized and recommended to users. Incorporating algorithmic audits and user control features allows individuals to customize their feed experience and understand content relevance. These reforms enhance trust, reduce misinformation spread, and promote fair content distribution across diverse online communities.
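One way to picture these transparency and user-control reforms is a linear scoring function that reports each signal's contribution alongside the total and lets a user supply their own weights. This is a minimal Python sketch under assumed signal names (`engagement`, `relevance`, `recency`) and assumed default weights; `explain_score`, `rank_posts`, and `DEFAULT_WEIGHTS` are hypothetical and do not come from any real platform.

```python
DEFAULT_WEIGHTS = {"engagement": 0.6, "relevance": 0.3, "recency": 0.1}  # hypothetical


def explain_score(signals, weights=None):
    """Return (total, per-signal contributions) for a linear ranking score."""
    weights = DEFAULT_WEIGHTS if weights is None else weights
    # Each contribution is weight * signal value, so the breakdown sums to the total.
    contributions = {name: weights[name] * signals[name] for name in weights}
    return sum(contributions.values()), contributions


def rank_posts(posts, weights=None):
    """Rank posts by score, keeping each post's breakdown for display."""
    scored = []
    for post_id, signals in posts.items():
        total, breakdown = explain_score(signals, weights)
        scored.append((total, post_id, breakdown))
    # Sort on the score only, so breakdown dicts are never compared.
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(post_id, total, breakdown) for total, post_id, breakdown in scored]


if __name__ == "__main__":
    posts = {
        "viral": {"engagement": 0.9, "relevance": 0.2, "recency": 0.5},
        "niche": {"engagement": 0.2, "relevance": 0.9, "recency": 0.5},
    }
    print([post_id for post_id, _, _ in rank_posts(posts)])
    # A user who prefers relevance over popularity can reweight their own feed.
    custom = {"engagement": 0.2, "relevance": 0.7, "recency": 0.1}
    print([post_id for post_id, _, _ in rank_posts(posts, custom)])
```

With the default weights the high-engagement post ranks first; with a relevance-heavy weighting the order flips, and each returned breakdown shows which signal drove the change.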

socmedb.com