Photo illustration: Trolls vs Disinformation

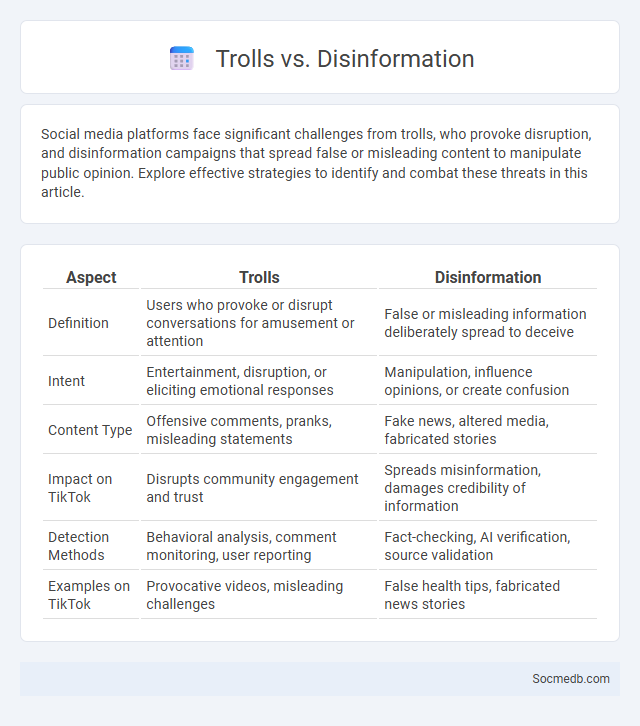

Social media platforms face two distinct but overlapping threats: trolls, who deliberately provoke and disrupt conversations, and disinformation campaigns, which spread false or misleading content to manipulate public opinion. This article explains how to tell them apart and outlines effective strategies for identifying and countering each.

Table of Comparison

| Aspect | Trolls | Disinformation |
|---|---|---|
| Definition | Users who provoke or disrupt conversations for amusement or attention | False or misleading information deliberately spread to deceive |
| Intent | Entertainment, disruption, or eliciting emotional responses | Manipulating or influencing opinions, or creating confusion |
| Content Type | Offensive comments, pranks, misleading statements | Fake news, altered media, fabricated stories |
| Impact on TikTok | Disrupts community engagement and trust | Spreads false information and damages the credibility of information on the platform |
| Detection Methods | Behavioral analysis, comment monitoring, user reporting | Fact-checking, AI verification, source validation |
| Examples on TikTok | Provocative videos, misleading challenges | False health tips, fabricated news stories |

Understanding Trolls: Definition and Types

Trolls on social media are individuals who deliberately provoke, deceive, or disrupt online conversations to elicit emotional responses or cause conflict. These online antagonists fall into several types: flame trolls, who incite arguments; spammers, who flood platforms with irrelevant content; and impersonators, who spread misinformation by assuming false identities. Understanding these distinct behaviors helps you recognize and manage such interactions and maintain a positive, safe digital environment.

What is Disinformation? Key Concepts Explained

Disinformation is false or misleading information deliberately created and spread to deceive people, often for political, financial, or social gain. It differs from misinformation, which is false information shared without intent to deceive, for example by a user who genuinely believes a fabricated story and reposts it. You can recognize disinformation by examining sources critically, checking facts, and learning the manipulation techniques commonly used on social media platforms.

The Overlap: When Trolls Spread Disinformation

Social media platforms often become breeding grounds for trolls who deliberately spread disinformation to manipulate public opinion and sow discord. These malicious actors exploit algorithmic amplification and viral sharing mechanisms to maximize the reach of false narratives. Understanding the overlap between trolling behavior and disinformation campaigns is critical for developing effective moderation policies and enhancing digital literacy.

Motivations Behind Trolling vs Disinformation Campaigns

Trolling on social media is primarily motivated by the desire for attention, disruption, or amusement, often stemming from individual users seeking instant gratification or social validation. In contrast, disinformation campaigns are strategically driven by political, economic, or ideological objectives aiming to manipulate public opinion, destabilize institutions, or influence elections. Understanding these distinct motivations is essential for developing targeted content moderation policies and improving digital literacy initiatives.

Tactics Used by Trolls and Disinformation Agents

Trolls and disinformation agents exploit social media algorithms by amplifying polarizing content and creating fake accounts to manipulate public opinion. They employ strategies such as spreading inflammatory comments, using bots to increase engagement, and coordinating misinformation campaigns to erode trust in legitimate sources. Targeted harassment and deepfake technology further enhance their ability to disrupt online communities and influence discourse.
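
Coordination is often easier to spot than any single false claim. As a rough illustration, the sketch below groups posts whose text is identical after light normalization and flags any message that several distinct accounts publish within a short time window. The `Post` type, the `find_coordinated_clusters` function, and the thresholds (`min_accounts=3`, `window_seconds=600`) are hypothetical choices for this example, not any platform's actual method; real systems may also use fuzzy text matching, account age, and network signals.

```python
import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Post:
    account_id: str
    text: str
    timestamp: float  # seconds since the Unix epoch


def normalize(text: str) -> str:
    """Lowercase, drop URLs and punctuation, collapse whitespace so near-copies match."""
    text = text.lower()
    text = re.sub(r"https?://\S+", "", text)
    text = re.sub(r"[^\w\s]", "", text)
    return " ".join(text.split())


def find_coordinated_clusters(posts, min_accounts=3, window_seconds=600):
    """Hypothetical heuristic: map each normalized text to the accounts in the
    first time window (at most `window_seconds` long) in which at least
    `min_accounts` distinct accounts posted it."""
    by_text = defaultdict(list)
    for post in posts:
        by_text[normalize(post.text)].append(post)

    clusters = {}
    for text, group in by_text.items():
        group.sort(key=lambda p: p.timestamp)
        start = 0
        for end in range(len(group)):
            # Slide the left edge forward until the window spans <= window_seconds.
            while group[end].timestamp - group[start].timestamp > window_seconds:
                start += 1
            accounts = {p.account_id for p in group[start:end + 1]}
            if len(accounts) >= min_accounts:
                clusters[text] = sorted(accounts)
                break  # one qualifying window is enough to flag this text
    return clusters


posts = [
    Post("a1", "Vote is RIGGED! http://x.example", 0),
    Post("a2", "vote is rigged", 120),
    Post("a3", "Vote is rigged!!", 300),
    Post("a4", "Nice weather today", 50),
]
print(find_coordinated_clusters(posts))  # {'vote is rigged': ['a1', 'a2', 'a3']}
```

Exact matching after normalization is deliberately simple: paraphrased copies slip past it, which is why larger systems typically add near-duplicate techniques such as MinHash.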

Identifying Troll Behavior vs Disinformation Tactics

Troll behavior typically involves provocative comments designed to incite emotional reactions and disrupt online communities, whereas disinformation tactics deliberately spread false information to manipulate public opinion and obscure facts. Recognizing trolls means spotting patterns such as repetitive inflammatory remarks and off-topic posts; identifying disinformation requires assessing the credibility of sources, cross-referencing claims, and detecting coordinated campaigns. Moderation tools increasingly use machine-learning models, typically alongside human reviewers, to help distinguish between these behaviors and safeguard information integrity.
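
The troll signals described above, repeated remarks and inflammatory wording, can be approximated with simple per-user counts. The sketch below is a toy heuristic under stated assumptions: the `INFLAMMATORY_TERMS` list, the `troll_score` function, and the 0.5 review cutoff are all hypothetical, and it covers only repetition and wording, not off-topic detection. Keyword lists produce false positives and are easy to evade, so a score like this would at most route a user to human review rather than trigger automatic action.

```python
from collections import Counter

# Hypothetical, deliberately tiny word list for illustration only.
INFLAMMATORY_TERMS = {"idiot", "liar", "pathetic", "clown"}


def troll_score(comments, repeat_threshold=3, review_cutoff=0.5):
    """Toy heuristic over one user's recent comments.

    repeat_ratio: share of comments whose normalized text appears at least
                  `repeat_threshold` times.
    inflammatory_ratio: share of comments containing a listed term.
    """
    normalized = [" ".join(c.lower().split()) for c in comments]
    counts = Counter(normalized)
    repeated = sum(n for n in counts.values() if n >= repeat_threshold)
    inflammatory = sum(
        1
        for c in normalized
        if INFLAMMATORY_TERMS & {w.strip(".,!?") for w in c.split()}
    )
    total = max(len(comments), 1)  # avoid division by zero for empty input
    repeat_ratio = repeated / total
    inflammatory_ratio = inflammatory / total
    # Flag for human review only, never for automatic removal.
    flag = repeat_ratio >= review_cutoff or inflammatory_ratio >= review_cutoff
    return {
        "repeat_ratio": repeat_ratio,
        "inflammatory_ratio": inflammatory_ratio,
        "flag_for_review": flag,
    }


history = ["You're a LIAR!", "you're a liar!", "You're a liar!", "Source?"]
print(troll_score(history))
# {'repeat_ratio': 0.75, 'inflammatory_ratio': 0.75, 'flag_for_review': True}
```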

The Impact on Online Communities and Social Discourse

Social media platforms have transformed online communities by enabling instant communication and fostering diverse interactions across global audiences. These digital spaces shape social discourse through algorithm-driven content curation, often amplifying polarizing viewpoints and influencing public opinion. The dynamics of online communities reflect evolving societal norms, highlighting both the empowerment of marginalized voices and the challenges of misinformation and echo chambers.

Case Studies: Troll Operations vs Disinformation Campaigns

Case studies on troll operations versus disinformation campaigns reveal distinct tactics and objectives used to manipulate social media audiences. Troll operations typically exploit emotional reactions through provocative content, whereas disinformation campaigns systematically spread false information to influence public opinion and policy. Understanding these strategies helps you identify and mitigate the impact of malicious online behavior on your digital environment.

Strategies for Combating Trolls and Disinformation

Effective strategies for combating trolls and disinformation on social media include automated detection systems, often AI-based, that flag or remove harmful content in near real time. Platforms combine enforcement of community guidelines with user reporting mechanisms to address malicious behavior and false information quickly. Collaborating with fact-checking organizations and promoting digital literacy among users further limit the spread of misinformation and toxic interactions.
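
User reporting works best when it resists abuse. One straightforward design, sketched here under assumptions, counts distinct reporters per post, so that a single account filing repeated reports cannot push content into review on its own, and escalates the post to human moderators once a threshold is reached. The `ReportQueue` class and its threshold are hypothetical; real platforms may also weight reports, for example by a reporter's track record or the severity of the alleged violation.

```python
from collections import defaultdict


class ReportQueue:
    """Hypothetical report aggregator: escalates a post to human review once
    `escalation_threshold` distinct users have reported it."""

    def __init__(self, escalation_threshold: int = 5):
        self.escalation_threshold = escalation_threshold
        self._reporters = defaultdict(set)  # post_id -> set of reporter ids
        self.escalated = []                 # post_ids, in escalation order

    def report(self, post_id: str, reporter_id: str) -> bool:
        """Record a report; return True only if this report triggered escalation."""
        reporters = self._reporters[post_id]
        if reporter_id in reporters:
            return False  # ignore duplicate reports from the same user
        reporters.add(reporter_id)
        # Use == so a post is escalated exactly once, when it crosses the threshold.
        if len(reporters) == self.escalation_threshold:
            self.escalated.append(post_id)
            return True
        return False


q = ReportQueue(escalation_threshold=3)
for reporter in ["u1", "u1", "u2", "u3"]:
    q.report("post-42", reporter)
print(q.escalated)  # ['post-42']
```

Counting distinct reporters blocks the simplest abuse, but coordinated mass reporting by many accounts, itself a troll tactic, would still trigger escalation; routing escalated posts to humans rather than removing them automatically limits that risk.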

Building Digital Resilience: Tools and Resources

Building digital resilience requires utilizing tools such as secure password managers, two-factor authentication apps, and privacy-focused browsers to protect personal data on social media platforms. Resources like online cybersecurity courses, digital literacy workshops, and updated guidelines from organizations such as the Cybersecurity and Infrastructure Security Agency (CISA) empower users to recognize phishing attempts and manage privacy settings effectively. Engaging with these tools and educational materials enhances the ability to maintain privacy, prevent cyber threats, and recover from digital attacks in social media environments.
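
Two-factor authentication apps of the kind mentioned above typically generate time-based one-time passwords (TOTP, standardized in RFC 6238): the app and the service share a secret key, and each independently derives a short code from that key and the current 30-second time step. The sketch below implements the standard HMAC-SHA1 variant to show why a stolen password alone is not enough to log in. It is illustrative only; the function name `totp` is chosen for this example, and production code should rely on a vetted library and compare codes in constant time.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret_b32: str, timestamp: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 time-based one-time password (HMAC-SHA1 variant)."""
    # Authenticator secrets are usually shared as unpadded Base32 text.
    padded = secret_b32.upper() + "=" * (-len(secret_b32) % 8)
    key = base64.b32decode(padded)
    if timestamp is None:
        timestamp = time.time()
    # Moving factor: number of whole time steps since the Unix epoch.
    counter = int(timestamp // step)
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low 4 bits of the last byte pick an offset.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


# RFC 6238 test secret: the ASCII bytes "12345678901234567890".
secret = base64.b32encode(b"12345678901234567890").decode()
print(totp(secret, timestamp=59, digits=8))  # 94287082
```

Because each code is tied to a time step, a phished code expires quickly, although a real-time phishing relay can still capture and replay it within that window.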

socmedb.com