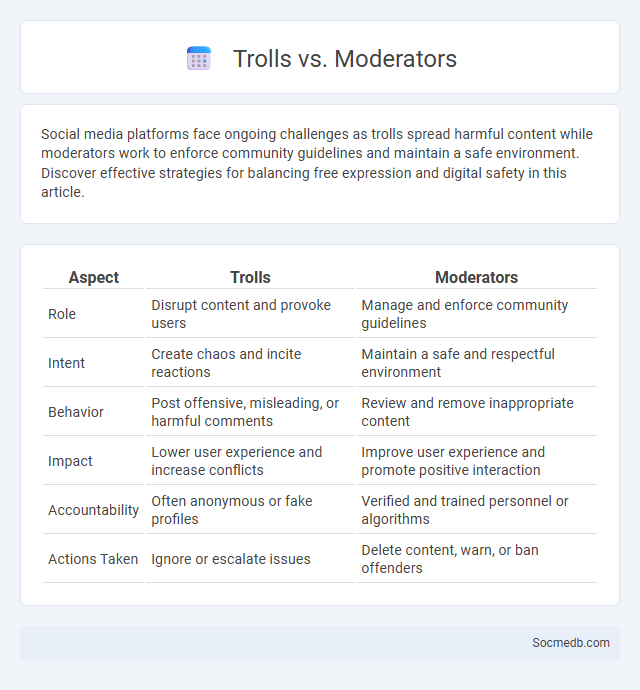

Photo illustration: Trolls vs Moderators

Social media platforms face ongoing challenges as trolls spread harmful content while moderators work to enforce community guidelines and maintain a safe environment. Discover effective strategies for balancing free expression and digital safety in this article.

Table of Comparison

| Aspect | Trolls | Moderators |
|---|---|---|
| Role | Disrupt content and provoke users | Manage and enforce community guidelines |
| Intent | Create chaos and incite reactions | Maintain a safe and respectful environment |
| Behavior | Post offensive, misleading, or harmful comments | Review and remove inappropriate content |
| Impact | Lower user experience and increase conflicts | Improve user experience and promote positive interaction |
| Accountability | Often anonymous or fake profiles | Verified and trained personnel or algorithms |
| Actions Taken | Ignore or escalate issues | Delete content, warn, or ban offenders |

Understanding the Troll Phenomenon

Social media platforms have become breeding grounds for internet trolls who deliberately provoke and disrupt conversations by posting inflammatory or off-topic messages. Understanding the troll phenomenon means analyzing both the psychological motivations behind it, such as a desire for attention, power, or entertainment, and its impact on online communities, including decreased engagement and emotional distress among users. Effective strategies to counteract trolling combine platform moderation policies, community guidelines, and user education on digital resilience.

The Essential Role of Moderators

Moderators play an essential role in maintaining safe and respectful environments across social media platforms by filtering harmful content, enforcing community guidelines, and preventing the spread of misinformation. Their work ensures user interactions remain constructive and protects against cyberbullying, hate speech, and spam, fostering healthier online communities. Effective moderation directly impacts user trust and platform reputation, making it a critical component of social media management strategies.

Trolls vs Moderators: The Ongoing Battle

Social media platforms are the site of a constant battle between trolls, who spread harmful and disruptive content, and moderators, who are tasked with maintaining safe and respectful online environments. Advanced AI tools and human oversight work together to detect and remove abusive posts so that community guidelines are enforced. The dynamic tension between trolling behavior and moderation strategies shapes user experience and platform reputation.

Motivations Behind Trolling Behavior

Trolling behavior on social media is often driven by motivations such as seeking attention, expressing frustration, or gaining a sense of power and control over others. Many trolls exploit the anonymity of online platforms to provoke emotional reactions and disrupt conversations for personal amusement or social influence. Psychological factors like social dominance, boredom, and the desire for notoriety also play significant roles in perpetuating trolling activities.

Effective Moderation Strategies

Effective moderation strategies on social media involve proactive content filtering using AI-powered tools combined with human oversight to accurately identify and remove harmful content, such as hate speech, misinformation, and spam. Implementing transparent community guidelines and empowering users with reporting mechanisms enhances trust and encourages responsible behavior. Regular updates to moderation policies aligned with platform growth and emerging threats ensure a safe and engaging online environment.
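As a rough illustration of a user reporting mechanism, the sketch below escalates a post to human review once enough distinct users have reported it. The class name `ReportTracker`, the `REPORT_THRESHOLD` value, and the post and user IDs are all hypothetical and chosen only for this example; a real platform would tune the threshold and likely weigh reports by reporter reliability.

```python
from collections import defaultdict

# Hypothetical default: number of distinct reporters needed to escalate.
REPORT_THRESHOLD = 3


class ReportTracker:
    """Tracks user reports per post and signals when review is warranted."""

    def __init__(self, threshold: int = REPORT_THRESHOLD) -> None:
        self.threshold = threshold
        # Maps each post ID to the set of users who have reported it.
        self._reporters: dict[str, set[str]] = defaultdict(set)

    def report(self, post_id: str, reporter_id: str) -> bool:
        """Record a report; return True if the post should go to human review."""
        # Using a set means repeat reports from the same user are not
        # counted twice, which blunts mass-reporting by a single account.
        self._reporters[post_id].add(reporter_id)
        return len(self._reporters[post_id]) >= self.threshold
```

Counting distinct reporters rather than raw reports is one simple design choice here: it keeps a single persistent user from forcing a post into the review queue alone.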

The Impact of Trolls on Online Communities

Trolls disrupt online communities by spreading misinformation, provoking conflicts, and diminishing user engagement and trust. Their negative behavior can lead to toxic environments where constructive discussions decline, affecting community growth and retention. Effective moderation and community guidelines are essential to mitigate the harmful effects of trolls and maintain a healthy social media ecosystem.

Escalation: When Trolls Target Moderators

Social media platforms face significant challenges when trolls target moderators, escalating conflicts and disrupting community management. This targeted harassment undermines moderators' authority, leading to increased stress and reduced effectiveness in content regulation. Effective escalation protocols and support systems are crucial in maintaining a safe and respectful online environment.

Tools and Technologies for Moderation

Advanced tools and technologies for social media moderation include AI-powered content analysis algorithms that detect harmful speech, spam, and hate content in real-time. Machine learning models are increasingly employed to understand context and sentiment, improving accuracy in filtering inappropriate posts while minimizing false positives. Integration of automated moderation platforms with human review systems enhances scalability and ensures compliance with community guidelines and legal regulations.
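One common way to combine automated analysis with human review is score-based triage: content the system is confident about is removed automatically, borderline content is queued for a moderator, and the rest is allowed. The sketch below is a minimal illustration under stated assumptions. The keyword weights in `FLAGGED_TERMS` are a toy stand-in for a trained model, and the two thresholds are hypothetical values, not recommendations.

```python
# Hypothetical thresholds; real values would be tuned per platform.
REMOVE_THRESHOLD = 0.9
REVIEW_THRESHOLD = 0.5

# Toy stand-in for a machine learning model: a few weighted keywords.
FLAGGED_TERMS = {"idiot": 0.6, "scam": 0.5, "hate": 0.7}


def harm_score(text: str) -> float:
    """Return a score in [0, 1]; a placeholder for a real classifier."""
    words = (w.strip(".,!?") for w in text.lower().split())
    score = sum(FLAGGED_TERMS.get(w, 0.0) for w in words)
    return min(score, 1.0)


def triage(text: str) -> str:
    """Route a post to 'remove', 'human_review', or 'allow' by its score."""
    score = harm_score(text)
    if score >= REMOVE_THRESHOLD:
        return "remove"
    if score >= REVIEW_THRESHOLD:
        # Borderline cases go to a human to limit false positives.
        return "human_review"
    return "allow"
```

Widening the gap between the two thresholds sends more content to human reviewers, which trades moderator workload for fewer wrongful automatic removals.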

Balancing Free Speech and Community Safety

Social media platforms face the challenge of balancing free speech with community safety by implementing clear content moderation policies that protect users from harmful or violent content while respecting individual expression. Your experience on these platforms depends on sophisticated algorithms and community guidelines designed to prevent misinformation, hate speech, and harassment without over-censoring legitimate discourse. Effective moderation fosters a safer online environment that encourages healthy dialogue and diversity of opinions.

Building Resilient Online Spaces

Building resilient online spaces requires implementing robust moderation policies and leveraging AI-powered tools to detect and remove harmful content promptly. Protecting your digital community involves fostering inclusive interactions, promoting respectful communication, and ensuring transparent enforcement of platform rules. Prioritizing user safety and emotional well-being creates a trustworthy environment where social media users can engage confidently.

socmedb.com
