Photo illustration: Community Notes vs Reddit Community Moderation

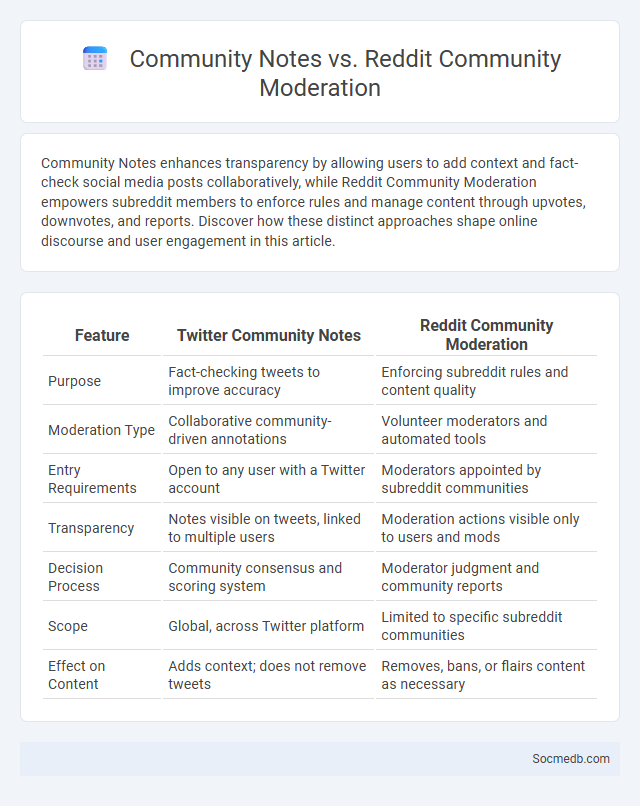

Community Notes enhances transparency by allowing users to add context and fact-check social media posts collaboratively, while Reddit community moderation relies on volunteer moderators to enforce each subreddit's rules, with members shaping content visibility through upvotes, downvotes, and reports. This article compares how these two approaches shape online discourse and user engagement.

Table of Comparison

| Feature | Twitter/X Community Notes | Reddit Community Moderation |
|---|---|---|
| Purpose | Adding context and fact-checks to tweets to improve accuracy | Enforcing subreddit rules and content quality |
| Moderation Type | Collaborative community-driven annotations | Volunteer moderators and automated tools |
| Entry Requirements | Open to users who sign up as contributors and meet eligibility criteria | Moderators appointed by the subreddit's creator or existing moderators |
| Transparency | Notes displayed publicly on tweets once rated helpful | Moderation actions visible mainly to moderators and the affected users |
| Decision Process | Community consensus and scoring system | Moderator judgment and community reports |
| Scope | Global, across the Twitter/X platform | Limited to specific subreddit communities |
| Effect on Content | Adds context; does not remove tweets | Removes content, bans users, or applies flair as necessary |

Introduction to Community Moderation Models

Community moderation models in social media platforms are designed to maintain healthy online environments by regulating user interactions and content. These models include centralized moderation by platform authorities, decentralized moderation involving community members, and hybrid systems combining both approaches. Effective community moderation enhances user engagement, reduces harmful behavior, and supports platform trust and safety.

Understanding Twitter/X Community Notes

Twitter/X Community Notes lets contributors collaboratively add context to tweets that may be misleading or false. This crowdsourced fact-checking system draws on raters with differing perspectives: a note is displayed only after enough contributors who tend to disagree with one another rate it helpful. By combining user contributions with an algorithmic scoring step, Community Notes supports informed discussion and helps reduce misinformation.

The Structure of Reddit Community Moderation

Reddit community moderation is decentralized: volunteer moderators manage individual subreddits and enforce rules tailored to each community. Moderators have tools to remove content, ban users, and curate discussions in line with subreddit policies. Understanding these practices helps you participate effectively, since they shape the quality and safety of interactions across Reddit's diverse forums.
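The report-then-review flow described here can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Reddit's implementation: the `Post` and `ModQueue` classes, the status strings, and the `REPORT_THRESHOLD` value are all hypothetical names invented for this example.

```python
from dataclasses import dataclass, field

# Hypothetical threshold for this sketch; not a Reddit default.
REPORT_THRESHOLD = 3


@dataclass
class Post:
    post_id: str
    reports: list = field(default_factory=list)
    status: str = "visible"  # "visible", "queued", "approved", or "removed"


class ModQueue:
    """Holds reported posts until a moderator makes a decision."""

    def __init__(self, threshold=REPORT_THRESHOLD):
        self.threshold = threshold
        self.pending = []

    def report(self, post, reason):
        # Members only flag content; they do not remove it themselves.
        post.reports.append(reason)
        if post.status == "visible" and len(post.reports) >= self.threshold:
            post.status = "queued"
            self.pending.append(post)

    def review(self, post, remove):
        # The final call rests with a moderator's judgment.
        self.pending.remove(post)
        post.status = "removed" if remove else "approved"


queue = ModQueue()
post = Post("abc123")
for reason in ["spam", "spam", "off-topic"]:
    queue.report(post, reason)
print(post.status)  # the post is now waiting in the queue

queue.review(post, remove=True)
print(post.status)
```

The split between `report` and `review` mirrors the division of labor in the text: community members surface problems, while moderators decide the outcome.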

Core Principles: Transparency and Accountability

Transparency on social media involves clearly disclosing data usage, advertising practices, and content algorithms to foster user trust and informed decision-making. Accountability requires platforms to implement robust mechanisms for addressing misinformation, harmful content, and user privacy violations, ensuring responsible digital interaction. Upholding these core principles enhances platform credibility and supports a safer, more ethical online environment.

How Fact-Checking Works in Community Notes

Community Notes takes a collaborative approach in which volunteer contributors write notes on posts and rate the helpfulness of notes written by others, helping to identify misinformation. You contribute by submitting factual corrections and context, which other contributors then evaluate before a note is displayed. This process improves the platform's trustworthiness by surfacing corroborated information and reducing the spread of false narratives.
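To make the peer-evaluation step concrete, here is a deliberately simplified Python sketch of cross-perspective agreement. It is not the platform's scoring algorithm, which infers rater perspectives from rating history rather than from labels. In this sketch the viewpoint groups are given explicitly, and the function name `note_is_shown` and both thresholds are assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical thresholds chosen for illustration only.
MIN_RATINGS_PER_GROUP = 2
MIN_HELPFUL_SHARE = 0.6


def note_is_shown(ratings):
    """Return True if every viewpoint group independently finds the note helpful.

    `ratings` is a list of (viewpoint_group, is_helpful) pairs. Explicit
    group labels are an assumption of this sketch.
    """
    by_group = defaultdict(list)
    for group, is_helpful in ratings:
        by_group[group].append(is_helpful)

    # Agreement must span at least two groups, or nothing is being bridged.
    if len(by_group) < 2:
        return False

    for votes in by_group.values():
        if len(votes) < MIN_RATINGS_PER_GROUP:
            return False
        if sum(votes) / len(votes) < MIN_HELPFUL_SHARE:
            return False
    return True


# Helpful ratings from both groups versus support from one group only.
cross_group = [("A", True), ("A", True), ("B", True), ("B", True), ("B", False)]
one_sided = [("A", True), ("A", True), ("A", True), ("B", False), ("B", False)]

print(note_is_shown(cross_group))  # True
print(note_is_shown(one_sided))    # False
```

The design point is that raw vote counts are not enough: the `one_sided` note has more helpful ratings than unhelpful ones overall, yet it is withheld because one group rejects it.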

Democratic Participation: Voting and Reporting

Social media platforms play a crucial role in enhancing democratic participation by providing accessible channels for voting information and real-time election reporting. You can stay informed about candidates, polling locations, and election deadlines through official social media accounts, which promote transparency and accountability. These platforms also enable citizens to share election news, report irregularities, and engage in public discourse, strengthening the democratic process.

Preventing Bias: Moderation Safeguards

Effective social media moderation safeguards employ algorithmic bias detection and human review to prevent discriminatory content and ensure fair representation. Incorporating diverse training data and real-time monitoring helps minimize algorithmic biases that may skew content visibility or promote harmful stereotypes. These measures foster an inclusive online environment while enhancing platform credibility and user trust.

Effectiveness in Combating Misinformation

Social media platforms combine automated detection with fact-checking partnerships to slow the spread of false content. You can benefit from real-time alerts and verified information tags that make it easier to identify credible sources. Following trusted accounts and using reporting tools further helps users maintain a safer and more accurate online environment.

Challenges Faced by Each Moderation System

Content moderation on social media faces significant challenges, including the difficulty of detecting nuanced hate speech or misinformation with automated systems, which can lead to both false positives and negatives. Human moderators grapple with emotional burnout and inconsistency in enforcing guidelines, impacting platform trust and user safety. Your experience can be affected as platforms struggle to balance freedom of expression with effective removal of harmful content across diverse cultural contexts.
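The trade-off between false positives and false negatives can be made concrete with a small calculation. The counts below are invented purely for illustration and do not come from any platform; `error_rates` is a hypothetical helper name.

```python
def error_rates(true_pos, false_pos, true_neg, false_neg):
    """Return (false positive rate, false negative rate) for a classifier."""
    # Share of acceptable posts that were wrongly flagged.
    fpr = false_pos / (false_pos + true_neg)
    # Share of harmful posts that slipped through.
    fnr = false_neg / (false_neg + true_pos)
    return fpr, fnr


# Made-up example: 1,000 posts reviewed, 100 of them actually harmful.
fpr, fnr = error_rates(true_pos=80, false_pos=30, true_neg=870, false_neg=20)
print(f"false positive rate: {fpr:.3f}")
print(f"false negative rate: {fnr:.3f}")
```

Tightening an automated filter usually lowers one of these rates at the expense of the other, which is why human review remains part of most moderation systems.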

Future Prospects for Community-Driven Moderation

Community-driven moderation is likely to play a growing role in social media by letting users collectively manage content quality and enforce platform guidelines. Advances in AI and machine learning may improve real-time detection of harmful posts, helping communities flag and resolve issues faster. This approach can foster greater transparency, accountability, and inclusivity, strengthening trust and user engagement across social media platforms.

socmedb.com
