Photo illustration: Troll vs Spam

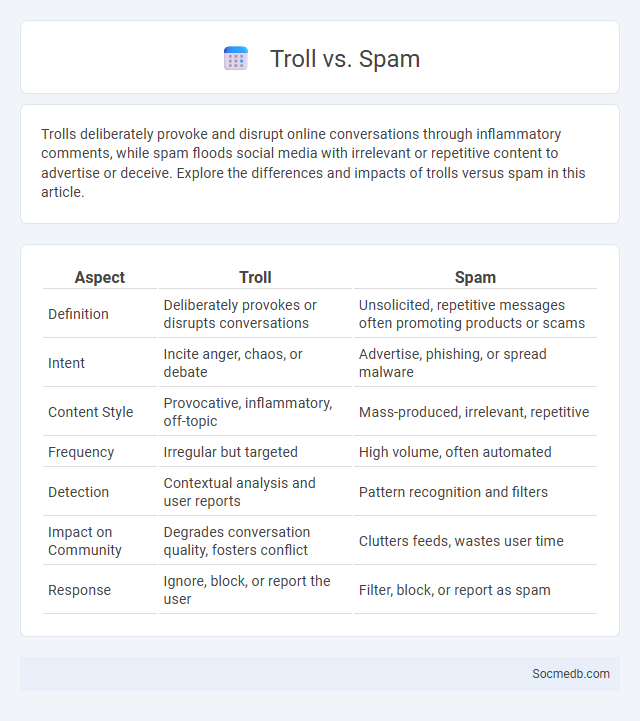

Trolls deliberately provoke and disrupt online conversations through inflammatory comments, while spam floods social media with irrelevant or repetitive content to advertise or deceive. Explore the differences and impacts of trolls versus spam in this article.

Comparison Table

| Aspect | Troll | Spam |
|---|---|---|
| Definition | A user who deliberately provokes or disrupts conversations | Unsolicited, repetitive messages, often promoting products or scams |
| Intent | Incite anger, chaos, or debate | Advertise, phish, or spread malware |
| Content Style | Provocative, inflammatory, off-topic | Mass-produced, irrelevant, repetitive |
| Frequency | Irregular but targeted | High volume, often automated |
| Detection | Contextual analysis and user reports | Pattern recognition and filters |
| Impact on Community | Degrades conversation quality, fosters conflict | Clutters feeds, wastes user time |
| Response | Ignore, block, or report the user | Filter, block, or report as spam |

Understanding Internet Trolling

Internet trolling involves deliberately posting provocative, offensive, or misleading content on social media platforms to elicit strong emotional reactions or disrupt conversations. Recognizing trolling behavior helps you avoid engaging with harmful content and protects your online experience from negativity. Effective strategies include ignoring trolls, reporting abusive posts, and promoting respectful digital communication.

What is Online Spam?

Online spam refers to unsolicited, irrelevant messages sent over the internet, often in bulk, that clutter social media platforms and disrupt the user experience. These messages may include promotional content, phishing links, or malicious software intended to deceive or exploit users. Protecting your social media accounts from spam improves security and preserves the quality of your digital interactions.

Troll vs. Spam: Key Differences

Trolls deliberately provoke and manipulate social media users by posting inflammatory or off-topic messages to incite emotional responses and disrupt conversations, often thriving on controversy and attention. Spam involves mass posting of irrelevant or repetitive content, usually promoting products or scams, aiming to deceive or overwhelm users without engaging in genuine dialogue. Understanding these differences helps moderators develop targeted strategies that protect community integrity and the user experience.

Common Behaviors of Trolls

Trolls frequently engage in provocative comments, aim to incite emotional responses, and disrupt online conversations across social media platforms. They often use inflammatory language, spread misinformation, and target individuals or groups to create discord. Understanding these common behaviors helps you recognize and avoid falling victim to their manipulative tactics.

How Spammers Operate

Spammers typically deploy automated bots to flood social media platforms with unsolicited content, often targeting popular hashtags and trending topics to maximize visibility. They exploit weak security measures and user trust, creating fake profiles to send phishing messages or malicious links that aim to steal personal information or spread malware. Understanding how spammers operate helps you recognize suspicious activity and protect your online presence from scams and data breaches.
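The repetitive flooding described above can be caught with simple pattern recognition. The sketch below is a minimal, hypothetical example, assuming each post arrives as an `(account, timestamp, text)` tuple: it normalizes the message text and flags any account that repeats the same message more than a set number of times within a sliding time window. The names `normalize` and `find_repeat_posters`, the 10-minute window, and the limit of 3 repeats are illustrative assumptions, not values taken from any real platform.

```python
import re
from collections import defaultdict, deque

# Hypothetical tuning values, chosen only for illustration.
WINDOW_SECONDS = 600  # look-back window (10 minutes)
MAX_REPEATS = 3       # identical posts allowed per window before flagging


def normalize(text: str) -> str:
    """Lowercase, drop URLs and punctuation, collapse whitespace so trivial edits still match."""
    text = text.lower()
    text = re.sub(r"https?://\S+", "", text)  # spammers often vary only the link
    text = re.sub(r"[^\w\s#]", "", text)      # keep letters, digits, spaces, hashtags
    return " ".join(text.split())


def find_repeat_posters(posts):
    """Return accounts that posted the same normalized text more than MAX_REPEATS
    times within any WINDOW_SECONDS span. `posts` holds (account, unix_ts, text) tuples."""
    recent = defaultdict(deque)  # (account, normalized text) -> timestamps inside the window
    flagged = set()
    for account, ts, text in sorted(posts, key=lambda p: p[1]):
        times = recent[(account, normalize(text))]
        times.append(ts)
        # Drop timestamps that have slid out of the window.
        while times and ts - times[0] > WINDOW_SECONDS:
            times.popleft()
        if len(times) > MAX_REPEATS:
            flagged.add(account)
    return flagged


# Usage: a bot posts the same pitch five times in two minutes, with a different link each time.
posts = [("bot42", i * 30, "BUY cheap followers!!! http://x.example/" + str(i)) for i in range(5)]
posts.append(("alice", 100, "Great game last night"))
print(find_repeat_posters(posts))  # {'bot42'}
```

Normalizing before comparing means trivial variations (capitalization, punctuation, a different tracking link) still count as repeats; real systems typically go further with fuzzy or near-duplicate matching.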

Impact of Trolls on Online Communities

Trolls significantly disrupt online communities by fostering hostility and diminishing user engagement through the spread of inflammatory and misleading content. Their persistent negativity often leads to decreased trust among members and compromises the quality of discourse on platforms like Facebook, Twitter, and Reddit. Effective moderation and community guidelines are critical in mitigating these impacts to maintain healthy digital environments.

Spam Threats: Security and Annoyance

Spam threats on social media platforms compromise user security by spreading malicious links, phishing attempts, and malware, which can lead to data breaches and identity theft. These unwanted messages also disrupt the user experience and erode trust in the platform, causing significant annoyance and fostering a hostile digital environment. Advanced spam detection algorithms and user education are crucial to mitigating these risks and protecting social media communities.
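One of the simplest building blocks of spam detection is screening links against a list of known-bad domains. The following is a minimal sketch under stated assumptions: `BLOCKED_DOMAINS` is a hypothetical hard-coded set using reserved `.example` names, whereas production systems would consult maintained threat-intelligence feeds and combine this check with many other signals. Subdomains of a blocked domain are treated as blocked too.

```python
import re
from urllib.parse import urlparse

# Hypothetical blocklist for illustration; real systems pull from maintained threat feeds.
BLOCKED_DOMAINS = {"free-prizes.example", "login-verify.example"}

URL_PATTERN = re.compile(r"https?://\S+", re.IGNORECASE)


def extract_domains(message: str) -> list[str]:
    """Return the lowercase host name of every http(s) link in the message."""
    domains = []
    for url in URL_PATTERN.findall(message):
        host = urlparse(url).hostname  # lowercased by urllib; None if no host can be parsed
        if host:
            domains.append(host)
    return domains


def is_blocked(domain: str) -> bool:
    """True if the domain or any parent domain is blocklisted (sub.bad.example matches bad.example)."""
    parts = domain.split(".")
    return any(".".join(parts[i:]) in BLOCKED_DOMAINS for i in range(len(parts)))


def screen_message(message: str) -> str:
    """Return 'block' if any link points at a blocklisted domain, else 'allow'."""
    if any(is_blocked(d) for d in extract_domains(message)):
        return "block"
    return "allow"


print(screen_message("Claim your reward: https://account.free-prizes.example/claim"))  # block
print(screen_message("Match report: https://news.example.org/story"))  # allow
```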

Identifying Trolls vs. Spammers

Identifying trolls involves analyzing behavior patterns such as provocative comments, persistent negativity, and attempts to incite emotional responses, whereas spammers typically produce repetitive, irrelevant posts aimed at advertising or phishing. Machine learning models use natural language processing to detect sentiment anomalies and keyword patterns characteristic of troll or spam content. Social media platforms apply these detection methods to maintain community integrity by reducing harmful interactions and limiting unsolicited promotional material.
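To make the machine-learning idea concrete, here is a toy multinomial naive Bayes classifier written in plain Python that sorts short posts into `spam`, `troll`, or `normal` using word frequencies. It is an illustrative sketch, not how any particular platform works: the nine training sentences are invented, there is no separate sentiment model, and a usable system would need a large labelled dataset and richer features (account history, posting rate, links).

```python
import math
import re
from collections import Counter, defaultdict

# Tiny hypothetical training set, invented for illustration only.
TRAINING = [
    ("spam", "buy cheap followers now click link"),
    ("spam", "win free prize click here now"),
    ("spam", "limited offer buy now discount link"),
    ("troll", "you are all idiots this take is garbage"),
    ("troll", "only an idiot would believe this garbage"),
    ("troll", "cry more losers nobody cares"),
    ("normal", "great analysis thanks for sharing"),
    ("normal", "i disagree but this is a fair point"),
    ("normal", "thanks for the link very helpful"),
]


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Multinomial naive Bayes over word counts with add-one (Laplace) smoothing."""

    def fit(self, examples):
        self.class_counts = Counter()            # label -> number of training posts
        self.word_counts = defaultdict(Counter)  # label -> word -> occurrences
        self.vocab = set()
        for label, text in examples:
            self.class_counts[label] += 1
            for word in tokenize(text):
                self.word_counts[label][word] += 1
                self.vocab.add(word)
        return self

    def predict(self, text):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            # log P(label) + sum of log P(word | label), computed in log space to avoid underflow
            score = math.log(n_docs / total_docs)
            n_words = sum(self.word_counts[label].values())
            for word in tokenize(text):
                count = self.word_counts[label][word]  # 0 for unseen words
                score += math.log((count + 1) / (n_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


model = NaiveBayes().fit(TRAINING)
print(model.predict("click now to buy followers"))  # spam
print(model.predict("what garbage, you idiots"))    # troll
print(model.predict("thanks, fair point"))          # normal
```

Add-one smoothing keeps a single unseen word from driving a class's probability to zero, and working in log space avoids numeric underflow when many small probabilities are multiplied.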

Techniques for Handling Trolls and Spam

Effective techniques for handling trolls and spam on social media include AI-powered content moderation tools that detect and remove harmful messages quickly. Establishing clear community guidelines and empowering users to report abusive behavior enhances platform safety and the user experience. Using automated filters to block suspicious accounts and requiring CAPTCHA challenges during account creation reduce the influx of spam and bot activity.
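A per-account rate limit is one common kind of automated filter against bot activity. The sketch below assumes a hypothetical `SlidingWindowLimiter` class that accepts at most `limit` actions per account in any `window`-second span; the limit of 5 posts per 60 seconds in the usage lines is an arbitrary illustrative value, not any platform's real policy.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class SlidingWindowLimiter:
    """Allow at most `limit` actions per account within any `window`-second span (hypothetical helper)."""

    def __init__(self, limit: int, window: float):
        self.limit = limit
        self.window = window
        self.events = defaultdict(deque)  # account -> timestamps of accepted actions

    def allow(self, account: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        q = self.events[account]
        # Forget accepted actions that are older than the window.
        while q and now - q[0] >= self.window:
            q.popleft()
        if len(q) >= self.limit:
            return False  # over the limit: reject (a platform might instead show a CAPTCHA)
        q.append(now)
        return True


limiter = SlidingWindowLimiter(limit=5, window=60)
results = [limiter.allow("new_account_17", now=t) for t in range(7)]
print(results)  # [True, True, True, True, True, False, False]
print(limiter.allow("new_account_17", now=61))  # True
```

Rejected attempts are not recorded, so a blocked account regains capacity as soon as its earlier posts age out of the window. A stricter variant could count rejected attempts too, or escalate repeated violations to a CAPTCHA or manual review.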

Preventing Troll and Spam Attacks Online

Effective prevention of troll and spam attacks involves robust moderation tools powered by AI algorithms that detect and filter harmful content in real time. Platforms enhance user security by deploying CAPTCHA systems, multi-factor authentication, and spam detection filters that monitor suspicious account activity and message patterns. Community-driven reporting mechanisms combined with machine learning enable platforms to identify and remove malicious users swiftly, maintaining a safe and engaging social media environment.
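Community reporting is often weighted so that one account cannot hide a post by reporting it repeatedly, and reports from users with a good track record count for more. The sketch below illustrates that idea under its own assumptions: `ReportQueue`, the trust weights, and the hide threshold of 3.0 are hypothetical, and the machine-learning side mentioned above is left out entirely.

```python
from collections import defaultdict

# Hypothetical policy values for illustration.
HIDE_THRESHOLD = 3.0  # weighted report score at which a post is hidden pending review
DEFAULT_TRUST = 1.0   # weight for reporters with no recorded track record


class ReportQueue:
    """Collects user reports; each reporter counts once per post, weighted by trust."""

    def __init__(self, trust=None):
        self.trust = trust or {}           # reporter -> weight (e.g. based on past report accuracy)
        self.reporters = defaultdict(set)  # post_id -> reporters who flagged it
        self.hidden = set()                # post_ids hidden pending moderator review

    def report(self, post_id, reporter):
        self.reporters[post_id].add(reporter)  # a set ignores duplicate reports
        score = sum(self.trust.get(r, DEFAULT_TRUST) for r in self.reporters[post_id])
        if score >= HIDE_THRESHOLD:
            self.hidden.add(post_id)
        return score


queue = ReportQueue(trust={"veteran_mod": 2.0, "throwaway": 0.25})
queue.report("post-9", "throwaway")
queue.report("post-9", "throwaway")  # duplicate, ignored
queue.report("post-9", "alice")
print("post-9" in queue.hidden)  # False (score 1.25)
queue.report("post-9", "veteran_mod")
print("post-9" in queue.hidden)  # True (score 3.25)
```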

socmedb.com