Photo illustration: Twitter vs Clubhouse ban evasion

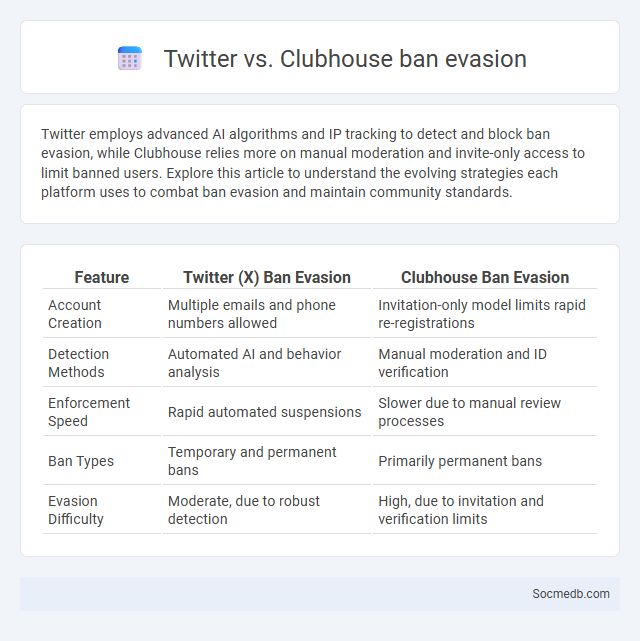

Twitter employs advanced AI algorithms and IP tracking to detect and block ban evasion, while Clubhouse relies more on manual moderation and invite-only access to limit banned users. Explore this article to understand the evolving strategies each platform uses to combat ban evasion and maintain community standards.

Table of Comparison

| Feature | Twitter (X) Ban Evasion | Clubhouse Ban Evasion |
|---|---|---|
| Account Creation | Multiple emails and phone numbers allowed | Invitation-only model limits rapid re-registrations |
| Detection Methods | Automated AI and behavior analysis | Manual moderation and ID verification |
| Enforcement Speed | Rapid automated suspensions | Slower due to manual review processes |
| Ban Types | Temporary and permanent bans | Primarily permanent bans |
| Evasion Difficulty | Moderate, due to robust detection | High, due to invitation and verification limits |

Understanding Ban Evasion: Twitter vs. Clubhouse

Ban evasion occurs when users circumvent an account suspension, typically by creating a new profile. Twitter applies stricter automated detection, while Clubhouse faces the added challenge of moderating live audio. On Twitter, ban evasion is identified mainly through automated AI filters and account link analysis, whereas Clubhouse relies more on real-time human moderation and user reporting for enforcement. Recognizing these differences is crucial for effective platform management and compliance strategies.

Defining Ban Evasion on Social Media Platforms

Ban evasion on social media platforms refers to the deliberate actions taken by individuals or entities to circumvent account suspensions or bans imposed by platform policies. This behavior often includes creating new accounts with altered personal information or using proxy IP addresses to regain access after being restricted for violations such as harassment or misinformation. Understanding ban evasion is crucial for protecting the integrity of online communities and ensuring your interactions remain within platform guidelines.

Detection Methods: Twitter’s vs. Clubhouse’s Approaches

Twitter employs advanced machine learning algorithms and natural language processing techniques for content moderation, enabling real-time detection of harmful or misleading posts through keyword scanning and user behavior analysis. Clubhouse relies heavily on real-time human moderation paired with automated flagging systems to monitor audio conversations, focusing on identifying policy violations during live interactions. Both platforms prioritize proactive detection but differ in modality, with Twitter optimizing for text-based content while Clubhouse emphasizes monitoring ephemeral audio streams.

Common Tactics Users Employ to Evade Bans

Users often employ tactics like creating multiple accounts, using VPNs to mask their IP addresses, and frequently changing profile details to evade social media bans. By exploiting platform weaknesses and automated moderation gaps, they bypass restrictions and continue engaging despite enforcement efforts. Understanding these tactics can help you better navigate and comply with social media guidelines.

Policy Enforcement: How Twitter Handles Ban Evasion

Twitter employs machine learning algorithms and behavioral analysis to detect ban evasion by identifying patterns such as reused IP addresses, similar usernames, and coordinated activity. Enforcement includes automatic suspension of accounts that violate the ban evasion rules, combined with manual review by Twitter's Trust and Safety team to ensure accuracy. These measures aim to maintain platform integrity by preventing banned users from circumventing restrictions and spreading harmful content.
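
To make the idea of pattern-based detection more concrete, here is a minimal Python sketch that compares a new account against previously banned accounts using two of the signals mentioned above: a shared IP address and a similar username. It is not Twitter's actual system. The `Account` class, the `evasion_score` and `flag_for_review` functions, the equal weights, and the 0.75 threshold are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass(frozen=True)
class Account:
    # Hypothetical record; real platforms track far more signals.
    username: str
    signup_ip: str


def username_similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity ratio between two usernames, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def evasion_score(candidate: Account, banned: Account) -> float:
    """Combine two illustrative signals into a score between 0 and 1."""
    ip_signal = 1.0 if candidate.signup_ip == banned.signup_ip else 0.0
    name_signal = username_similarity(candidate.username, banned.username)
    # The 0.5 / 0.5 weights are arbitrary placeholders, not tuned values.
    return 0.5 * ip_signal + 0.5 * name_signal


def flag_for_review(candidate, banned_accounts, threshold=0.75):
    """Return the banned accounts whose score against the candidate meets the threshold."""
    return [b for b in banned_accounts if evasion_score(candidate, b) >= threshold]


banned_accounts = [Account("troll_king", "203.0.113.7")]
suspect = Account("troll_king2", "203.0.113.7")
unrelated = Account("gardener42", "198.51.100.9")

print(flag_for_review(suspect, banned_accounts))    # one match: shared IP and similar name
print(flag_for_review(unrelated, banned_accounts))  # no matches
```

In this sketch a match only flags the account for review rather than suspending it, which mirrors the combination of automated detection and manual review described above. A shared IP address alone is a weak signal, since households, offices, and VPN users often share one.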

Clubhouse’s Measures Against Repeat Offenders

Clubhouse employs a system that identifies and restricts repeat offenders through automated detection tools and user reporting mechanisms to maintain a safe community environment. Violators who frequently breach content guidelines face temporary suspensions or permanent bans, reinforcing platform accountability. The moderation team collaborates with AI algorithms to review flagged content promptly, ensuring consistent enforcement of community standards.
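
The escalation from temporary suspension to permanent ban can be pictured as a simple strike counter. The following Python sketch is an assumption-laden illustration, not Clubhouse's implementation: the `StrikeTracker` class, the initial warning step, and the thresholds of 2 and 4 confirmed violations are all hypothetical.

```python
from collections import Counter
from enum import Enum


class Action(Enum):
    WARNING = "warning"  # assumed first step; not described in the article
    TEMPORARY_SUSPENSION = "temporary_suspension"
    PERMANENT_BAN = "permanent_ban"


class StrikeTracker:
    """Counts confirmed violations per user and maps the count to an action."""

    def __init__(self, suspend_at: int = 2, ban_at: int = 4):
        # Thresholds are illustrative assumptions, not published policy.
        self.suspend_at = suspend_at
        self.ban_at = ban_at
        self.strikes = Counter()

    def record_violation(self, user_id: str) -> Action:
        """Register one confirmed violation and return the resulting action."""
        self.strikes[user_id] += 1
        count = self.strikes[user_id]
        if count >= self.ban_at:
            return Action.PERMANENT_BAN
        if count >= self.suspend_at:
            return Action.TEMPORARY_SUSPENSION
        return Action.WARNING


tracker = StrikeTracker()
for _ in range(4):
    print(tracker.record_violation("user_123").value)
```

Counting only confirmed violations, rather than raw reports, keeps the escalation tied to reviewed decisions, which fits the pairing of user reports with moderator review described above.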

Comparing User Verification Systems

User verification systems on social media platforms vary significantly in security and user experience, with Twitter implementing a blue checkmark system to confirm public figures' identities, while Instagram uses two-factor authentication and AI-driven flagging to reduce impersonation. Facebook combines government ID verification with facial recognition technology to enhance account authenticity, whereas TikTok relies heavily on phone number verification and machine learning algorithms to detect suspicious behavior. Each platform balances privacy concerns and verification rigor differently, impacting the effectiveness of combating fake accounts and boosting trust among users.

Legal Implications and Platform Accountability

Social media platforms face growing legal exposure related to content moderation, user privacy, and misinformation control, and must comply with regulations such as the EU's General Data Protection Regulation (GDPR) and the U.S. Communications Decency Act. Platform accountability involves implementing transparent policies and effective mechanisms to address harmful content and protect user rights without compromising freedom of expression. You should stay informed about platform terms and legal updates to navigate your online presence responsibly and safeguard your digital interactions.

The Impact of Ban Evasion on Community Safety

Ban evasion on social media platforms undermines community safety by allowing harmful content and malicious actors to reemerge despite restrictions. Users who bypass bans can spread misinformation, harass others, and disrupt the online environment, increasing risks for your digital well-being. Effective detection and enforcement strategies are essential to preserving trust and security within social media communities.

Future Solutions: Strengthening Ban Policies

Future solutions for social media focus on strengthening ban policies to curb harmful content and misinformation effectively. Enhanced AI-driven detection systems and transparent appeal processes can improve enforcement fairness and accuracy. Collaborations between platforms, regulators, and experts aim to create unified standards that balance free speech with user safety.

socmedb.com
