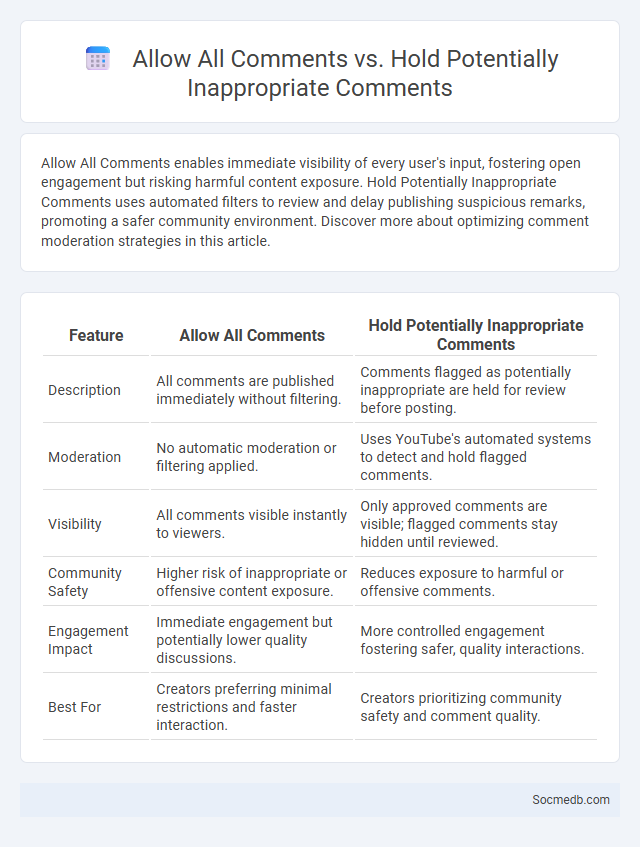

Photo illustration: Allow All Comments vs Hold Potentially Inappropriate Comments

Allow All Comments publishes every comment immediately, which encourages open engagement but risks exposing viewers to harmful content. Hold Potentially Inappropriate Comments uses automated filters to hold suspicious comments for review before they are published, promoting a safer community. Read on to learn how to optimize your comment moderation strategy.

Comparison Table

| Feature | Allow All Comments | Hold Potentially Inappropriate Comments |
|---|---|---|
| Description | All comments are published immediately without filtering. | Comments flagged as potentially inappropriate are held for review before posting. |
| Moderation | No automatic moderation or filtering applied. | Uses YouTube's automated systems to detect and hold flagged comments. |
| Visibility | All comments are visible to viewers instantly. | Unflagged comments appear immediately; flagged comments stay hidden until reviewed. |
| Community Safety | Higher risk of exposure to inappropriate or offensive content. | Reduces exposure to harmful or offensive comments. |
| Engagement Impact | Immediate engagement, but potentially lower-quality discussions. | More controlled engagement that fosters safer, higher-quality interactions. |
| Best For | Creators who prefer minimal restrictions and faster interaction. | Creators who prioritize community safety and comment quality. |

Introduction to Comment Moderation Strategies

Effective comment moderation strategies are essential for maintaining a positive and engaging social media environment. By implementing clear guidelines, automated filtering tools, and timely human review, you can minimize spam, offensive content, and misinformation on your platforms. These measures help foster constructive interactions and protect your brand's reputation.

Understanding "Allow All Comments" Policy

The "Allow All Comments" policy publishes every comment immediately, without holding any for review, with the aim of fostering open communication and a diversity of opinions. Because nothing is screened before it goes live, you remain responsible for handling spam, offensive remarks, and off-topic content after the fact, while still benefiting from authentic engagement with your audience. Using the platform's moderation tools and clearly defining community guidelines helps keep your comment section respectful and constructive.

Benefits and Risks of Allowing All Comments

Allowing all comments on social media platforms fosters open dialogue and enhances user engagement by encouraging diverse opinions and real-time feedback. However, it also increases the risk of harmful content such as spam, hate speech, and misinformation, which can damage community trust and brand reputation. Effective moderation tools and clear commenting guidelines are essential to balance inclusivity with safety and maintain a positive user experience.

Exploring "Hold Potentially Inappropriate Comments" Option

The "Hold Potentially Inappropriate Comments" option uses automated detection to temporarily withhold comments that may contain offensive language, hate speech, or spam until the creator reviews them. This gives creators more control over what viewers see and reduces exposure to harmful interactions. By filtering comments proactively, creators can maintain a positive environment while still encouraging respectful engagement.
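The difference between the two settings comes down to a single routing decision for each new comment. The minimal Python sketch below models that decision. `CommentSetting`, `Comment`, and `route` are hypothetical names, and the `flagged` field stands in for whatever automated classifier the platform actually uses, which this sketch does not model.

```python
from dataclasses import dataclass
from enum import Enum


class CommentSetting(Enum):
    """Hypothetical names for the two settings compared in this article."""
    ALLOW_ALL = "allow_all"
    HOLD_POTENTIALLY_INAPPROPRIATE = "hold_potentially_inappropriate"


@dataclass
class Comment:
    author: str
    text: str
    flagged: bool  # assumed output of some automated classifier


def route(comment: Comment, setting: CommentSetting) -> str:
    """Return 'published' or 'held' for a new comment under the given setting."""
    if setting is CommentSetting.ALLOW_ALL:
        # Every comment goes live immediately; there is no automated hold step.
        return "published"
    # Hold mode: flagged comments wait for review, the rest go live.
    return "held" if comment.flagged else "published"


if __name__ == "__main__":
    spam = Comment("bot123", "Free giveaway, click my link!", flagged=True)
    normal = Comment("viewer", "Great explanation, thanks!", flagged=False)
    for setting in CommentSetting:
        print(setting.value, route(spam, setting), route(normal, setting))
```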

How Automated Moderation Filters Work

Automated moderation filters use machine learning and natural language processing to identify harmful or inappropriate content, which the platform can then remove or hold for review. They analyze text, images, and videos in real time, scanning for keywords, patterns, and contextual cues associated with spam, hate speech, and misinformation. Because these tools are continually updated with new data, including user reports and platform policy changes, their accuracy can improve and their false-positive rate can fall over time.
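As a simplified illustration of the pattern cues these filters look for, the sketch below scores a comment with a few hand-written rules: links, heavy use of capital letters, and long runs of a repeated character. It is a rule-based stand-in, not the machine learning models platforms actually run; the weights, the 0.5 threshold, and the names `spam_score` and `should_hold` are assumptions chosen for illustration.

```python
import re

# Hypothetical rules and weights, chosen only for illustration.
URL_PATTERN = re.compile(r"https?://\S+|www\.\S+", re.IGNORECASE)
REPEATED_CHAR_PATTERN = re.compile(r"(.)\1{5,}")  # one character 6+ times in a row


def spam_score(text: str) -> float:
    """Combine a few simple pattern cues into a score between 0 and 1."""
    score = 0.0

    links = URL_PATTERN.findall(text)
    if links:
        # Each link adds risk, capped so links alone cannot exceed 0.5.
        score += min(0.2 * len(links), 0.5)

    # Measure "shouting" on the text with links stripped out.
    letters = [c for c in URL_PATTERN.sub("", text) if c.isalpha()]
    if len(letters) >= 10:
        caps_ratio = sum(c.isupper() for c in letters) / len(letters)
        if caps_ratio > 0.7:
            score += 0.3

    if REPEATED_CHAR_PATTERN.search(text):
        score += 0.2  # e.g. "!!!!!!" or "sooooooo"

    return min(score, 1.0)


def should_hold(text: str, threshold: float = 0.5) -> bool:
    """Hold the comment for review when its score reaches the threshold."""
    return spam_score(text) >= threshold


if __name__ == "__main__":
    print(should_hold("Great video, thanks for explaining this!"))       # False
    print(should_hold("FREE GIFT CARDS HERE www.example.com !!!!!!!"))   # True
```

Rules like these are easy to audit but brittle, which is one reason platforms pair them with trained models and learn from user reports.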

What Is Manual Comment Moderation?

Manual comment moderation is the deliberate review and management of user comments by human moderators. It helps ensure that content follows community guidelines, keeps out spam, hate speech, and inappropriate language, and fosters positive engagement. Human reviewers can interpret nuance and context, making judgments that automated systems may miss.
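In practice, manual moderation often revolves around a queue of held comments that a person works through. The sketch below assumes a first-in, first-out order and a simple approve-or-remove decision; `HeldComment` and `ReviewQueue` are hypothetical names, not part of any platform's API.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class HeldComment:
    comment_id: int
    text: str
    reason: str  # why it was held, e.g. "possible spam"


@dataclass
class ReviewQueue:
    """A minimal first-in, first-out queue of comments awaiting human review."""
    pending: deque = field(default_factory=deque)
    published: list = field(default_factory=list)
    removed: list = field(default_factory=list)

    def hold(self, comment: HeldComment) -> None:
        """Add a comment to the back of the review queue."""
        self.pending.append(comment)

    def review_next(self, approve: bool) -> HeldComment:
        """Pop the oldest held comment and record the moderator's decision."""
        comment = self.pending.popleft()  # raises IndexError if the queue is empty
        (self.published if approve else self.removed).append(comment)
        return comment


if __name__ == "__main__":
    queue = ReviewQueue()
    queue.hold(HeldComment(1, "This is sick!", "keyword match"))
    queue.hold(HeldComment(2, "Buy followers cheap", "possible spam"))
    queue.review_next(approve=True)   # slang, so the moderator approves it
    queue.review_next(approve=False)  # spam, so the moderator removes it
    print([c.comment_id for c in queue.published], [c.comment_id for c in queue.removed])
```

The first held comment shows why human review matters: a keyword filter may flag slang that a person recognizes as harmless.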

Pros and Cons of Each Comment Moderation Strategy

Comment moderation strategies on social media include automated filtering, manual review, and community moderation, each with distinct pros and cons. Automated filtering provides efficient, real-time control over inappropriate content but may wrongly censor legitimate comments, reducing your engagement. Manual review allows nuanced decisions based on human judgment but can be time-consuming and costly, while community moderation draws on user involvement to maintain quality yet risks bias and inconsistent enforcement.

Impact on User Engagement and Community Building

Social media platforms can boost user engagement through real-time interactions, personalized content, and instant feedback, which foster closer connections between brands and their audiences. Your active participation in these networks supports community building, as shared interests and collaborative discussions strengthen loyalty and trust. Platforms also use algorithms and user data to tailor experiences that encourage ongoing involvement and meaningful relationships.

Choosing the Best Moderation Approach for Your Platform

Selecting the right social media moderation approach depends on your platform's size, community guidelines, and content volume. Automated tools using AI can efficiently filter harmful content, while human moderators provide nuanced judgment for complex cases. Balancing these methods ensures your platform maintains a safe and engaging environment tailored to your users' needs.
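One possible way to balance automated and human moderation is to let a classifier handle clear-cut cases and send ambiguous ones to people. The sketch below assumes a scoring function that returns a harm score between 0 and 1; the two thresholds and the name `route_comment` are hypothetical and would need tuning for a real platform.

```python
from typing import Callable

# Hypothetical thresholds; a real platform would tune these on its own data.
AUTO_PUBLISH_BELOW = 0.3
AUTO_REMOVE_AT_OR_ABOVE = 0.9


def route_comment(text: str, score_fn: Callable[[str], float]) -> str:
    """Let automation handle clear cases and send ambiguous ones to people.

    score_fn is assumed to return a harm score between 0 (clearly fine)
    and 1 (clearly harmful), for example from an ML classifier.
    """
    score = score_fn(text)
    if score < AUTO_PUBLISH_BELOW:
        return "publish"
    if score >= AUTO_REMOVE_AT_OR_ABOVE:
        return "remove"
    return "human_review"


if __name__ == "__main__":
    # Toy stand-in scorer for demonstration: treats "giveaway" as suspicious.
    def toy_score(text: str) -> float:
        return 0.6 if "giveaway" in text.lower() else 0.1

    print(route_comment("Loved this tutorial", toy_score))     # publish
    print(route_comment("Join my GIVEAWAY today", toy_score))  # human_review
```

Widening the gap between the two thresholds sends more comments to human reviewers, trading moderator time for fewer automated mistakes.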

Best Practices for Effective Comment Moderation

Effective comment moderation on social media requires timely responses to user interactions, clear community guidelines, and consistent enforcement to maintain a positive environment. Automated moderation tools that combine keyword filters with AI-driven content analysis make it easier to identify and remove inappropriate or spam comments. Encouraging constructive dialogue through transparency and respectful engagement strengthens community trust and improves the overall user experience.
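Keyword filters are a common source of false positives when they match substrings, for example blocking "scampi" because it contains "scam". Below is a minimal sketch of whole-word, case-insensitive matching with Python's `re` module. The function names and sample terms are hypothetical, and it assumes each blocked term starts and ends with a letter or digit so that `\b` word boundaries apply.

```python
import re


def build_blocklist_pattern(words: list[str]) -> re.Pattern:
    """Compile blocked terms into one case-insensitive, whole-word regex."""
    if not words:
        # An empty alternation would match the empty string everywhere.
        raise ValueError("blocklist must contain at least one term")
    # re.escape keeps characters such as '.' from acting as regex syntax.
    # \b assumes each term begins and ends with a letter or digit.
    alternation = "|".join(re.escape(w) for w in words)
    return re.compile(r"\b(?:" + alternation + r")\b", re.IGNORECASE)


def matched_terms(text: str, pattern: re.Pattern) -> list[str]:
    """Return every blocked term found in the comment, lowercased."""
    return [m.lower() for m in pattern.findall(text)]


if __name__ == "__main__":
    blocklist = build_blocklist_pattern(["scam", "free followers"])
    print(matched_terms("This giveaway is a SCAM!", blocklist))  # ['scam']
    print(matched_terms("I ordered the scampi.", blocklist))      # []
```

Whole-word matching avoids flagging "scampi" for the term "scam", but it also misses deliberately obfuscated spellings such as "sc4m", so keyword lists work best alongside other signals.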

socmedb.com
