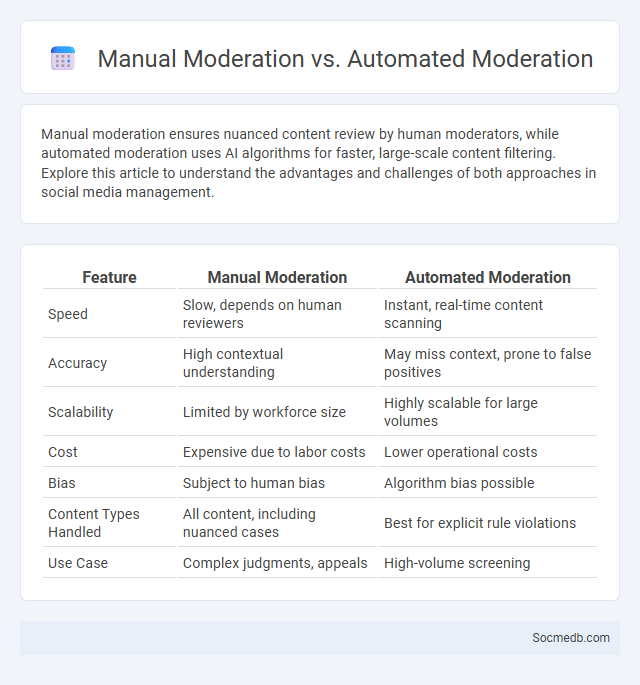

Photo illustration: Manual Moderation vs Automated Moderation

Manual moderation ensures nuanced content review by human moderators, while automated moderation uses AI algorithms for faster, large-scale content filtering. Explore this article to understand the advantages and challenges of both approaches in social media management.

Table of Comparison

| Feature | Manual Moderation | Automated Moderation |
|---|---|---|
| Speed | Slow, depends on human reviewers | Instant, real-time content scanning |
| Accuracy | High contextual understanding | May miss context, prone to false positives |
| Scalability | Limited by workforce size | Highly scalable for large volumes |
| Cost | Expensive due to labor costs | Lower operational costs |
| Bias | Subject to human bias | Algorithm bias possible |
| Content Types Handled | All content, including nuanced cases | Best for explicit rule violations |
| Use Case | Complex judgments, appeals | High-volume screening |

Introduction to Content Moderation

Content moderation involves the review and management of user-generated content on social media platforms to ensure compliance with community guidelines and legal standards. It employs a combination of artificial intelligence tools and human moderators to detect and remove harmful, offensive, or inappropriate material. Effective moderation helps maintain a safe online environment, fosters positive user interactions, and protects platform reputation.

Manual Moderation: Definition and Process

Manual moderation involves human reviewers who evaluate and manage user-generated content to ensure compliance with community guidelines and legal standards. The process includes scrutinizing text, images, and videos for harmful, inappropriate, or offensive material, categorizing content for removal, approval, or further review. Effective manual moderation relies on trained moderators equipped with clear policies and tools to maintain platform safety and foster positive user interaction.

Automated Moderation: Technology and Tools

Automated moderation in social media leverages advanced artificial intelligence algorithms and natural language processing to detect and filter inappropriate content in real time. Tools such as machine learning classifiers, image recognition software, and sentiment analysis systems optimize the identification of spam, hate speech, and misinformation across platforms like Facebook, Twitter, and Instagram. This technology enhances user safety, reduces the burden on human moderators, and ensures compliance with community guidelines efficiently.
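
As a rough illustration of what rule-based automated screening can look like, the sketch below checks a comment against two hypothetical rules: a link limit and a short spam-phrase pattern. The names `screen_comment`, `Verdict`, and `max_links`, as well as the patterns themselves, are assumptions made for this example. Production systems typically rely on trained classifiers rather than hand-written rules like these.

```python
import re
from dataclasses import dataclass, field

# Hypothetical rules for illustration only; not taken from any real platform.
LINK_RE = re.compile(r"https?://\S+", re.IGNORECASE)
SPAM_PHRASE_RE = re.compile(r"\b(free money|click here)\b", re.IGNORECASE)


@dataclass
class Verdict:
    allowed: bool
    reasons: list = field(default_factory=list)


def screen_comment(text: str, max_links: int = 1) -> Verdict:
    """Return a verdict listing every rule the comment violates."""
    reasons = []
    # Rule 1: more links than the (assumed) limit suggests spam.
    if len(LINK_RE.findall(text)) > max_links:
        reasons.append("too_many_links")
    # Rule 2: known spam phrases, matched case-insensitively on word boundaries.
    if SPAM_PHRASE_RE.search(text):
        reasons.append("spam_phrase")
    return Verdict(allowed=not reasons, reasons=reasons)
```

A check like this runs in well under a millisecond per comment, which is where the speed and scalability of automated moderation come from. It also only catches what its rules explicitly describe.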

Comment Moderation: Focus and Importance

Effective comment moderation on social media platforms is essential for maintaining a positive user experience and protecting community standards. It helps prevent the spread of harmful content such as hate speech, spam, and misinformation while fostering constructive conversations among users. AI-driven moderation tools improve scalability and help ensure timely review and enforcement of platform policies.

Human Touch vs Algorithmic Precision

Human touch in social media fosters authentic connections and emotional engagement, enhancing user loyalty and trust. Algorithmic precision customizes content delivery, increasing relevance and user retention through data-driven insights and behavior analysis. Balancing empathetic communication with advanced algorithms optimizes user experience and maximizes platform effectiveness.

Advantages of Manual Moderation

Manual moderation offers precise control over content quality, ensuring that sensitive or nuanced posts are accurately assessed for appropriateness and relevance. It enables personalized responses and a deeper understanding of community context, fostering trust and positive engagement within your social media platform. Human moderators can identify subtle violations and cultural nuances that automated systems may overlook, enhancing overall user safety and satisfaction.

Benefits of Automated Moderation

Automated moderation helps social media platforms filter harmful content efficiently, reduce the spread of misinformation, and enforce community guidelines. Machine learning algorithms and natural language processing enable real-time detection of spam, hate speech, and inappropriate posts, fostering safer user interactions. This automation also reduces the need for extensive human intervention, allowing faster response times and improving overall platform trustworthiness.

Challenges in Comment Moderation

Comment moderation on social media platforms faces challenges such as detecting harmful content, managing large volumes of comments, and addressing diverse cultural contexts. AI-driven moderation tools often struggle with understanding nuanced language, sarcasm, and evolving slang, impacting the accuracy of filtering inappropriate remarks. Your experience as a user depends on effective moderation strategies that balance safety, free expression, and timely response.
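
The difficulty with nuanced language can be seen even in a very simple filter. The sketch below uses a hypothetical one-word blocklist and compares two matching strategies. The function names and the blocklist are assumptions for illustration, not a description of any real moderation tool.

```python
import re

# Hypothetical blocklist with a single term, for illustration only.
BLOCKED_TERMS = ["scam"]


def substring_flag(text: str) -> bool:
    """Flag text if a blocked term appears anywhere, even inside another word."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def word_flag(text: str) -> bool:
    """Flag text only if a blocked term appears as a whole word."""
    return any(
        re.search(rf"\b{re.escape(term)}\b", text, re.IGNORECASE)
        for term in BLOCKED_TERMS
    )


print(substring_flag("I love scampi"))  # True: false positive on a harmless word
print(word_flag("I love scampi"))       # False: whole-word matching avoids it
print(word_flag("This is a scam"))      # True
print(word_flag("This is a sc4m"))      # False: a simple misspelling evades the filter
```

Whole-word matching removes the false positive, but neither version handles deliberate misspellings, sarcasm, or new slang, which is why such cases tend to need human judgment or more capable models.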

Hybrid Moderation Approaches

Hybrid moderation approaches combine automated algorithms and human reviewers to effectively manage social media content, ensuring accurate detection of harmful posts while minimizing censorship errors. Machine learning models analyze vast amounts of data in real time, flagging potential violations, whereas human moderators provide contextual judgment for ambiguous cases. This synergy improves platform safety, user experience, and compliance with community guidelines and legal regulations.
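
One common way to picture this division of labor is threshold-based routing: an automated model assigns each post a violation score, clear cases are handled automatically, and everything in between goes to a human queue. The sketch below assumes a score between 0 and 1 from some upstream classifier. The `route` function, the `Action` names, and the threshold values 0.9 and 0.2 are hypothetical choices for this example.

```python
from enum import Enum


class Action(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


def route(score: float, remove_at: float = 0.9, approve_below: float = 0.2) -> Action:
    """Route a post based on a model's violation score in [0, 1].

    The thresholds are illustrative assumptions; a platform would tune them
    against its own error rates and reviewer capacity.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= remove_at:
        return Action.REMOVE        # confident violation: act automatically
    if score < approve_below:
        return Action.APPROVE       # confident non-violation: publish
    return Action.HUMAN_REVIEW      # ambiguous: send to a human moderator


for s in (0.05, 0.5, 0.95):
    print(s, route(s).value)
```

Widening the gap between the two thresholds sends more content to human reviewers, trading cost and speed for fewer automated mistakes.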

Choosing the Best Moderation Strategy

Selecting the best social media moderation strategy involves balancing proactive content filtering with responsive community management to maintain a safe and engaging environment. Your approach should leverage AI-driven tools for real-time threat detection while incorporating human moderators for nuanced judgment, ensuring accuracy and fairness. Understanding platform-specific risks and user behavior patterns enhances the effectiveness of your chosen moderation techniques.

socmedb.com
