Photo illustration: YouTube age restriction vs mature content

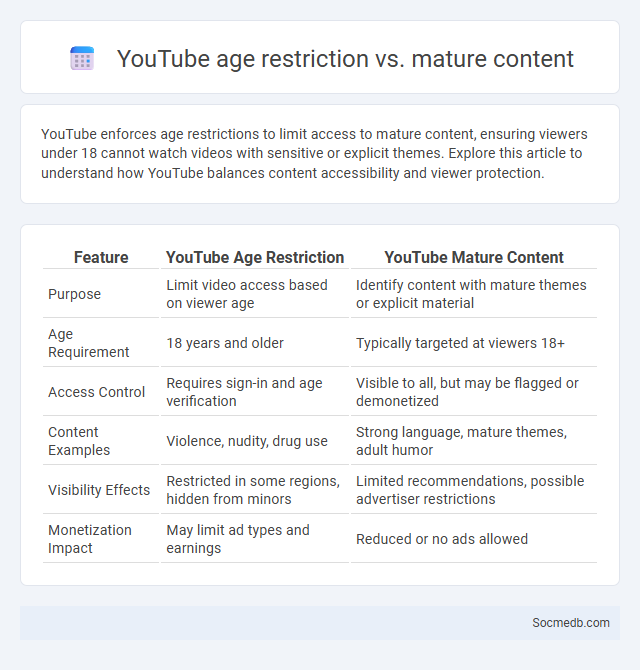

YouTube enforces age restrictions to limit access to mature content, so that viewers who are under 18 or not signed in cannot watch videos with sensitive or explicit themes. This article explains how YouTube balances content accessibility and viewer protection.

Table of Comparison

| Feature | YouTube Age Restriction | YouTube Mature Content |
|---|---|---|
| Purpose | Limit video access based on viewer age | Identify content with mature themes or explicit material |
| Age Requirement | 18 years and older | Typically targeted at viewers 18+ |
| Access Control | Requires sign-in and age verification | Visible to all, but may be flagged or demonetized |
| Content Examples | Violence, nudity, drug use | Strong language, mature themes, adult humor |
| Visibility Effects | Restricted in some regions, hidden from minors | Limited recommendations, possible advertiser restrictions |
| Monetization Impact | May limit ad types and earnings | Reduced or no ads allowed |

Understanding YouTube’s Age Restriction Policy

YouTube's age restriction policy limits access to videos that may contain mature content to users aged 18 and above, in line with legal standards and the platform's Community Guidelines. Understanding this policy helps you plan your content strategy, target the appropriate audience, and avoid penalties such as video removal or demonetization. Knowing how age restrictions work can also improve engagement and protect your channel's reputation across diverse demographics.

What Qualifies as Mature Content on YouTube?

Mature content on YouTube includes videos featuring explicit language, graphic violence, sexual content, or drug use, all of which can require an age restriction to protect younger audiences. Content depicting nudity, sexual acts, or highly suggestive themes falls under this category and is often flagged by YouTube's automated systems under its Community Guidelines. Content that violates those guidelines can result in removal, demonetization, or channel suspension.

Key Differences: Age Restriction vs. Mature Content Labels

An age restriction strictly limits access based on the viewer's age, typically requiring verification so that underage users cannot view the content. A mature content label, by contrast, serves as a warning: it indicates that the material may contain sensitive themes or explicit language, allowing adults to make informed viewing choices. Platforms use both measures to comply with legal regulations and promote safer digital environments for diverse user demographics.

How YouTube Implements Age Restrictions

YouTube enforces age restrictions by checking the age on a viewer's account and showing an age-gate warning before restricted videos play. Videos deemed inappropriate for younger audiences are marked as age-restricted, which limits access to viewers who are signed in and at least 18 years old. This system helps protect minors from exposure to sensitive or mature content on the platform.
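The access rule described above can be written as a small decision function. This is only an illustrative sketch of the rule as this article states it, not YouTube's actual implementation; the `Viewer` class, the `can_watch` function, and the `ADULT_AGE` constant are hypothetical names introduced for the example.

```python
from dataclasses import dataclass
from typing import Optional

# Threshold taken from the article; hypothetical constant name.
ADULT_AGE = 18


@dataclass
class Viewer:
    """Hypothetical model of a viewer, for illustration only."""
    signed_in: bool
    age: Optional[int]  # None when the age is unknown or unverified


def can_watch(viewer: Viewer, age_restricted: bool) -> bool:
    """Apply the rule from the text: restricted videos need a signed-in adult."""
    if not age_restricted:
        # Unrestricted videos are open to everyone.
        return True
    return (
        viewer.signed_in
        and viewer.age is not None
        and viewer.age >= ADULT_AGE
    )


if __name__ == "__main__":
    print(can_watch(Viewer(signed_in=True, age=21), age_restricted=True))
    print(can_watch(Viewer(signed_in=False, age=21), age_restricted=True))
```

Treating an unknown age as a failed check is a conservative choice made for this sketch: a viewer whose age cannot be confirmed is handled the same way as a minor.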

Impacts of Age Restrictions on Content Creators

Age restrictions on social media platforms significantly influence content creators by limiting their audience reach and monetization opportunities, often resulting in reduced visibility and engagement metrics. Younger creators face challenges in platform compliance, while older creators may experience content oversight that stifles creative expression. Your ability to grow and connect with diverse demographics can be hindered, making strategic navigation of these restrictions essential for sustained success.

Viewer Experience: Navigating Age-Restricted and Mature Content

Navigating age-restricted and mature content on social media platforms requires careful attention to content warnings and privacy settings designed to protect younger audiences. You can enhance your viewer experience by customizing filters and parental controls that limit exposure to sensitive material while ensuring access to relevant and engaging content. Social media algorithms prioritize user preferences and compliance with regulatory standards to provide a safer and more tailored browsing environment.

Avoiding False Age Restrictions on Your Videos

A false age restriction, where a video suitable for general audiences is gated anyway, limits audience reach and engagement. Accurate metadata analysis and AI-driven content classification improve the precision of age-appropriate labeling and reduce unwarranted content blocking. Regularly updated platform policies and moderation algorithms help maintain compliance with legal standards while keeping access to video content fair.
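Before disputing a restriction, it helps to know which videos currently carry one. The sketch below assumes a response shaped like the YouTube Data API v3 `videos.list` result requested with `part=contentDetails`, where an age-restricted video reports `contentDetails.contentRating.ytRating` as `ytAgeRestricted`. The function name `age_restricted_ids` and the sample IDs are hypothetical, and the sample dictionary is hand-written rather than real API output.

```python
from typing import Any, Dict, List


def age_restricted_ids(response: Dict[str, Any]) -> List[str]:
    """Return the IDs of videos whose content rating marks them age-restricted.

    Assumes the videos.list response shape with part=contentDetails.
    """
    flagged = []
    for item in response.get("items", []):
        # Either key may be missing or empty on unrestricted videos.
        rating = item.get("contentDetails", {}).get("contentRating", {})
        if rating.get("ytRating") == "ytAgeRestricted":
            flagged.append(item["id"])
    return flagged


# Hand-written sample for illustration; IDs are placeholders.
sample_response = {
    "items": [
        {"id": "vid_a", "contentDetails": {"contentRating": {"ytRating": "ytAgeRestricted"}}},
        {"id": "vid_b", "contentDetails": {"contentRating": {}}},
        {"id": "vid_c"},
    ]
}

if __name__ == "__main__":
    print(age_restricted_ids(sample_response))  # prints ['vid_a']
```

A list like this gives you a concrete set of videos to review against the guidelines and, where a restriction looks unwarranted, to appeal.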

The Role of Community Guidelines in Content Classification

Community guidelines critically shape how social media platforms classify and manage content by establishing clear rules that define acceptable behavior and content types. Your interactions and contributions are continually evaluated against these standards to prevent misinformation, hate speech, and harmful material, ensuring a safer online environment. Effective guideline enforcement helps maintain platform integrity by promoting positive engagement and limiting content that violates established norms.

Appeals and Disputes over Age Restriction Decisions

Social media platforms enforce age restriction policies to comply with legal standards and protect younger users, which often leads to appeals when your age verification is contested or incorrectly flagged. Users frequently dispute these decisions by submitting identification or parental consent in order to restore access and maintain account functionality. Understanding the appeal process and providing accurate documentation are crucial to resolving age-related restrictions efficiently.

Best Practices for Labeling and Managing Mature Content

Implement clear and consistent labeling of mature content on social media platforms by using age verification tools and explicit content warnings to protect younger audiences. Employ algorithmic moderation combined with human review to accurately identify and flag sensitive material while minimizing false positives. Establish transparent content guidelines and provide users with easy access to reporting mechanisms to maintain a safe and respectful online environment.

socmedb.com
