Photo illustration: YouTube Flagging vs YouTube Moderation

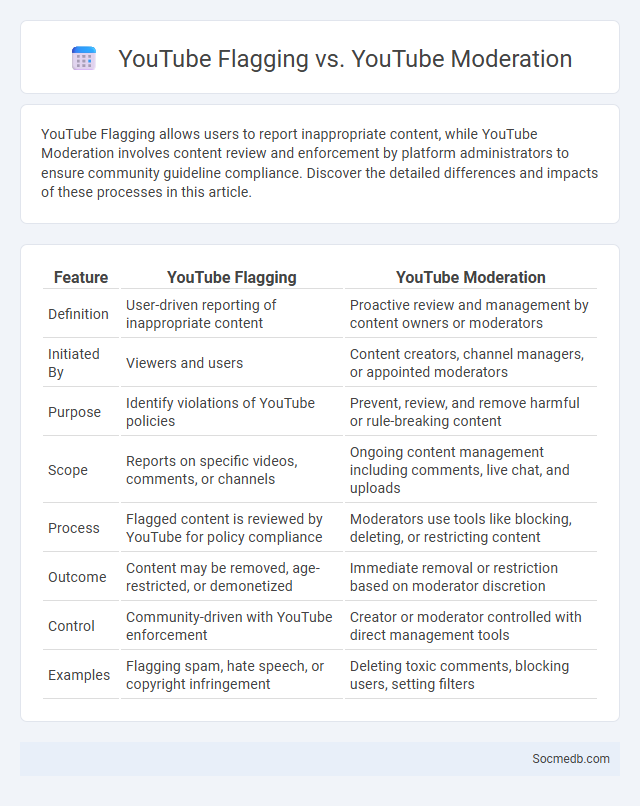

YouTube flagging lets users report content they believe breaks YouTube's policies. YouTube moderation is the review and enforcement that follows. YouTube's own reviewers and automated systems act on reported content, and creators and their appointed moderators manage comments and live chat on their own channels. This article compares the two processes and their effects.

Table of Comparison

| Feature | YouTube Flagging | YouTube Moderation |
|---|---|---|
| Definition | User-driven reporting of inappropriate content | Proactive review and management by content owners or moderators |
| Initiated By | Viewers and users | Content creators, channel managers, or appointed moderators |
| Purpose | Identify violations of YouTube policies | Prevent, review, and remove harmful or rule-breaking content |
| Scope | Reports on specific videos, comments, or channels | Ongoing content management including comments, live chat, and uploads |
| Process | Flagged content is reviewed by YouTube for policy compliance | Moderators use tools like blocking, deleting, or restricting content |
| Outcome | Content may be removed, age-restricted, or demonetized | Immediate removal or restriction based on moderator discretion |
| Control | Community-driven with YouTube enforcement | Creator or moderator controlled with direct management tools |
| Examples | Flagging spam, hate speech, or copyright infringement | Deleting toxic comments, blocking users, setting filters |

Understanding YouTube Flagging: What It Is and How It Works

YouTube flagging is a community-driven reporting system. Users report videos, comments, or channels they believe violate YouTube's policies, for example because of inappropriate content, copyright infringement, or misleading information. A flag does not remove anything by itself. It sends the content to YouTube's reviewers, who check it against the guidelines and decide whether removal or restriction is warranted. Reports are anonymous, so the uploader is not told who flagged the content.

YouTube Moderation Explained: Processes and Policies

YouTube moderation combines automated systems with human reviewers to enforce the Community Guidelines and remove harmful content such as hate speech, misinformation, and violent material. Machine-learning classifiers screen uploads for likely violations at a scale no human team could match. User reports and human reviewers supply the context that automated systems miss. When content is removed or a channel receives a strike, the creator can appeal the decision, and YouTube publishes enforcement figures in its transparency reporting.

Comparing YouTube Flagging and Moderation: Key Differences

Flagging and moderation are two stages of the same process. Flagging is the input: any user can report content, which sends it for review. Moderation is the enforcement. YouTube's automated systems and human reviewers evaluate context and severity, then remove content or issue penalties. On individual channels, creators and their appointed moderators also manage comments and live chat. The key difference is that flagging is a user-initiated reporting tool, while moderation is the action taken to keep content compliant.

The Role of Flagging in YouTube’s Content Safety

Flagging gives YouTube a large, distributed source of reports on videos that may violate the Community Guidelines, such as hate speech, misinformation, or inappropriate content. These reports help YouTube find and review potentially harmful material that automated detection may have missed. Acting on them protects viewers and the integrity of the platform. Each accurate flag you submit contributes to a safer environment for other viewers.

How YouTube Moderation Teams Handle Reported Content

YouTube moderation teams use machine-learning classifiers alongside human reviewers to identify and evaluate reported content against the Community Guidelines. Reported videos enter a review queue. There, moderators consider context, intent, and potential harm before taking action, such as removal, age restriction, or a strike against the channel. User reports surface content that may break policies on hate speech, harassment, or misinformation, which helps reviewers decide where to focus.
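The review-queue idea can be illustrated with a toy model. This sketch assumes that reports are triaged by a fixed severity weight and that ties are handled in arrival order. YouTube does not publish how its review queue is ordered. `SEVERITY`, `ReviewQueue`, and the reason names are all hypothetical.

```python
import heapq
import itertools
from typing import List, Optional, Tuple

# Hypothetical severity weights per report reason (illustrative assumptions,
# not YouTube's real report categories or scoring).
SEVERITY = {"spam": 1, "harassment": 3, "hate_speech": 4, "violent_extremism": 5}


class ReviewQueue:
    """Toy triage queue: more severe reports first, ties in arrival order."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str, str]] = []
        self._counter = itertools.count()  # increasing arrival number for FIFO ties

    def add_report(self, content_id: str, reason: str) -> None:
        # heapq is a min-heap, so severity is negated to pop the most severe first.
        severity = SEVERITY.get(reason, 1)  # unknown reasons get the lowest weight
        heapq.heappush(self._heap, (-severity, next(self._counter), content_id, reason))

    def next_for_review(self) -> Optional[Tuple[str, str]]:
        # Return (content_id, reason) for the highest-priority report, or None if empty.
        if not self._heap:
            return None
        _, _, content_id, reason = heapq.heappop(self._heap)
        return content_id, reason

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    queue = ReviewQueue()
    queue.add_report("video-a", "spam")
    queue.add_report("video-b", "hate_speech")
    queue.add_report("video-c", "spam")
    queue.add_report("video-d", "harassment")
    while len(queue):
        print(queue.next_for_review())
```

In this model, the hate-speech report is reviewed first, then harassment, then the two spam reports in the order they arrived. A real system would also weigh signals such as classifier scores or view counts, which this sketch deliberately leaves out.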

Community Flagging vs Automated Moderation Systems

Community flagging relies on users to spot and report problems. It draws on the attention of a large audience and on human judgment about context. Automated moderation uses machine-learning models to detect likely violations across far more content than human reviewers could examine, applying the same rules to every upload. Combined, the two approaches cover each other's gaps. Automation provides scale, while user reports catch context-dependent violations that models miss.

Impact of Flagging on YouTube Creators

Flagging affects creators indirectly. A flag starts a review but does not, by itself, remove, restrict, or demonetize a video. If reviewers confirm a violation, the video may be removed or age-restricted. Age restriction limits where a video can appear and can reduce its ad revenue. The channel may also receive a Community Guidelines strike, and three strikes within 90 days leads to channel termination. Knowing the guidelines helps creators protect their content and audience, as does using the appeals process when a decision seems wrong.

Best Practices for Flagging Content on YouTube

When flagging content on YouTube, choose the report reason that most closely matches the violation, such as hate speech, spam, or explicit material. Where the report form allows it, add a timestamp or a short description so reviewers can find the problem quickly. Flag content because you believe it breaks the guidelines, not simply because you disagree with it. Accurate, responsible reports make review more effective and improve content quality across the platform.

Challenges and Limitations of YouTube Moderation

YouTube moderation faces significant challenges. The sheer volume of video uploaded every minute makes it difficult to detect and remove harmful videos promptly. Automated systems can misread context, such as satire, news reporting, or educational footage. This leads to false positives, where legitimate videos are removed, and false negatives, where harmful content is missed. Because of these limits, moderation always involves a trade-off between freedom of expression and community safety.

Future Trends: Innovations in YouTube Flagging and Moderation

YouTube continues to develop its flagging and moderation systems, using AI-powered tools to improve detection accuracy and reduce false positives. Areas of development include real-time video analysis and broader multilingual support, so that harmful content can be identified in more languages and regions. These improvements aim to make communities safer without unduly restricting creators' freedom of expression.

socmedb.com