Photo illustration: YouTube Flagging vs YouTube Removal

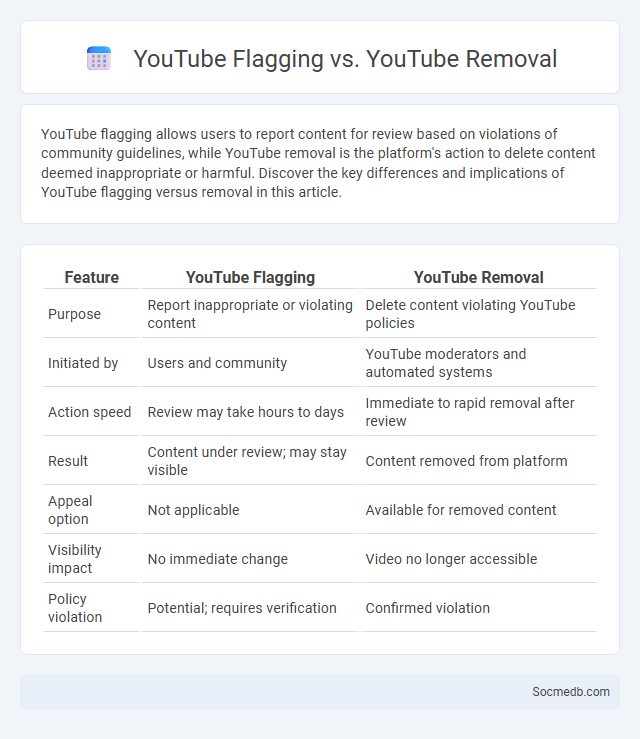

YouTube flagging allows users to report content for review based on violations of community guidelines, while YouTube removal is the platform's action to delete content deemed inappropriate or harmful. Discover the key differences and implications of YouTube flagging versus removal in this article.

Table of Comparison

| Feature | YouTube Flagging | YouTube Removal |
|---|---|---|
| Purpose | Report inappropriate or violating content | Delete content violating YouTube policies |
| Initiated by | Users and community | YouTube moderators and automated systems |
| Action speed | Review may take hours to days | Immediate to rapid removal after review |
| Result | Content under review; may stay visible | Content removed from platform |
| Appeal option | Not applicable | Available for removed content |
| Visibility impact | No immediate change | Video no longer accessible |
| Policy violation | Potential; requires verification | Confirmed violation |

Understanding YouTube Flagging: Definition and Purpose

YouTube flagging is a system that allows users to report content violating community guidelines such as hate speech, spam, or inappropriate material, helping maintain platform integrity. The purpose of flagging is to alert YouTube moderators for review and potential removal, ensuring a safer and more enjoyable experience for all users. By understanding how flagging works, you can actively contribute to a respectful and secure online environment.

What Happens After a Video is Flagged on YouTube?

When a video is flagged on YouTube, it is reviewed by YouTube's content moderation team or automated systems to determine whether it violates the platform's policies on, for example, hate speech, misinformation, or copyright. If a violation is found, the video may be removed, age-restricted, or demonetized, and repeated offenses can lead to channel strikes or termination. The creator can appeal the decision, which prompts a second review of the case.
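The review flow described above can be sketched as a small state model. This is only an illustration under stated assumptions: the names `Status`, `Video`, `flag`, `review`, and `appeal` are hypothetical, the rules are simplified, and none of it reflects YouTube's actual internal systems or API.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    VISIBLE = "visible"
    AGE_RESTRICTED = "age_restricted"
    REMOVED = "removed"


@dataclass
class Video:
    video_id: str
    status: Status = Status.VISIBLE
    flags: list = field(default_factory=list)  # reasons reported by users


def flag(video: Video, reason: str) -> None:
    """Record a user report. Flagging alone never changes visibility."""
    video.flags.append(reason)


def review(video: Video, violation_found: bool, severe: bool = True) -> Status:
    """Apply a reviewer's decision to a flagged video (hypothetical rules)."""
    if not video.flags:
        raise ValueError("nothing to review: video has no flags")
    if violation_found:
        video.status = Status.REMOVED if severe else Status.AGE_RESTRICTED
    video.flags.clear()  # the reports have been handled either way
    return video.status


def appeal(video: Video, appeal_upheld: bool) -> Status:
    """Second review requested by the creator; may reverse the decision."""
    if video.status is Status.VISIBLE:
        raise ValueError("nothing to appeal: video is still visible")
    if appeal_upheld:
        video.status = Status.VISIBLE
    return video.status
```

In this sketch a flagged video stays visible until `review` is called, which mirrors the "No immediate change" row in the comparison table.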

YouTube Removal: Criteria and Process

YouTube removes content primarily for policy violations, including hate speech, harassment, copyright infringement, and explicit content. Automated systems and human moderators review the content, including material reported by users, before taking down videos or channels. Creators can appeal a removal, which triggers a second review of whether the content actually broke YouTube's policies.

Key Differences Between Flagging and Removal on YouTube

Flagging on YouTube allows users to report content they find inappropriate or in violation of community guidelines, which starts a review by the platform's moderators. A flag by itself does not take a video down: flagged content is removed only after YouTube evaluates it and determines that it breaches policy. Understanding this difference helps you participate effectively in maintaining a safe and respectful online environment.

Community Guidelines: How They Impact Flagging and Removal

Social media platforms enforce Community Guidelines to maintain a safe and respectful environment, directly influencing the flagging and removal of content that violates these rules. Your reports help identify inappropriate material, prompting automated systems and moderators to evaluate and, if necessary, remove posts that spread misinformation, hate speech, or harmful behavior. Understanding these guidelines empowers you to navigate social media more responsibly and contribute to a positive online community.

User Reporting vs Automated Detection Systems

User reporting on social media allows individuals to flag inappropriate content based on personal judgment, providing a human perspective that can identify context-specific nuances often missed by algorithms. Automated detection systems utilize machine learning models to scan vast amounts of data for patterns indicative of violations such as hate speech, misinformation, or spam, enabling real-time moderation at scale. Combining user reporting with automated detection systems creates a more robust approach by balancing human insight with technological efficiency.
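One way to picture the combination of user reports and automated detection is a triage step that blends both signals into a single review priority. The sketch below is hypothetical: the weights, thresholds, and function names (`priority`, `triage`) are assumptions chosen for illustration and do not describe how any real platform scores content.

```python
import heapq

REPORT_WEIGHT = 0.1       # assumed contribution of each user report
AUTO_ACTION_SCORE = 0.95  # assumed classifier score that triggers automatic action


def priority(classifier_score: float, report_count: int) -> float:
    """Blend the model score with user reports, capped at 1.0."""
    return min(1.0, classifier_score + REPORT_WEIGHT * report_count)


def triage(items, review_threshold=0.5):
    """Split items into auto-actioned ids and a human review queue.

    items: iterable of (video_id, classifier_score, report_count).
    Returns (auto_actioned, review_queue), where review_queue is ordered
    from highest to lowest priority.
    """
    auto_actioned, heap = [], []
    for video_id, score, reports in items:
        if score >= AUTO_ACTION_SCORE:
            auto_actioned.append(video_id)
            continue
        p = priority(score, reports)
        if p >= review_threshold:
            # heapq is a min-heap, so push the negated priority
            heapq.heappush(heap, (-p, video_id))
    review_queue = [heapq.heappop(heap)[1] for _ in range(len(heap))]
    return auto_actioned, review_queue
```

With this design, a video the model scores low can still reach a human reviewer if enough users report it, which is the "human insight" half of the balance the paragraph describes.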

Consequences of Flagged vs Removed Content

Flagged content on social media can lead to reduced visibility and restrictions on your account once it is reviewed, affecting engagement and reach without complete deletion. Removed content means immediate loss of the post and may trigger algorithmic penalties or account suspension, depending on platform policies. Understanding the difference helps you follow content guidelines, minimize negative consequences, and maintain a positive online presence.

Appeal Process: Challenging Flagging and Removals

When your content is flagged or removed on social media, understanding the appeal process is crucial to protect your online presence. Platforms like Facebook, Instagram, and Twitter offer structured procedures allowing you to challenge moderation decisions by submitting detailed explanations and evidence. Engaging promptly with these appeals can help restore your posts while ensuring compliance with community guidelines and algorithmic standards.

Best Practices: Avoiding Flags and Content Removal

To avoid flags and content removal on social media, strictly adhere to platform community guidelines, ensuring all posts respect copyright laws and refrain from hate speech or misinformation. Use accurate tagging and provide clear context to reduce misinterpretations that can trigger automated moderation systems. Regularly update privacy settings and monitor audience engagement to maintain compliance and prevent account suspensions.

The Future of Content Moderation on YouTube

The future of content moderation on YouTube is increasingly driven by AI and machine learning systems designed to detect and remove harmful or misleading content quickly. Human moderators will still play a crucial role in nuanced decisions, especially in complex cases involving context and cultural sensitivity. Greater transparency, through expanded community guidelines and real-time feedback mechanisms, aims to balance free expression with a safer platform for users worldwide.

socmedb.com
