Photo illustration: YouTube Flagging vs YouTube Reporting

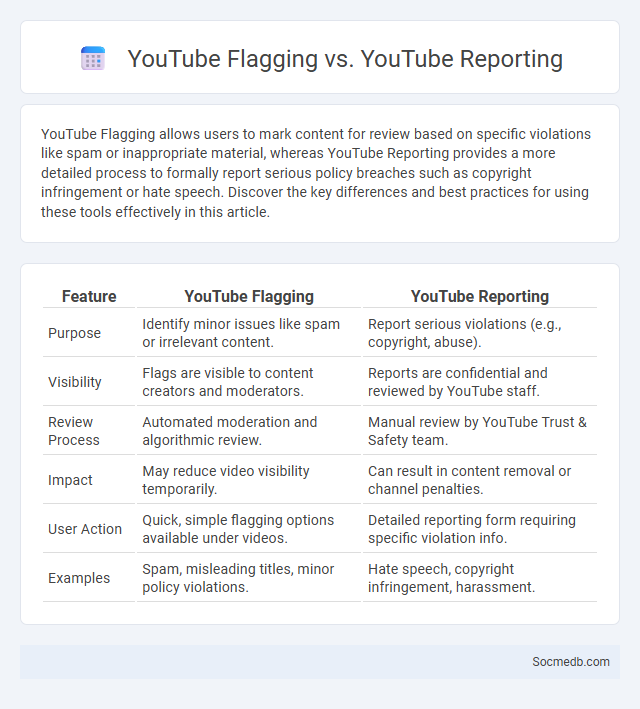

YouTube Flagging allows users to mark content for review based on specific violations like spam or inappropriate material, whereas YouTube Reporting provides a more detailed process to formally report serious policy breaches such as copyright infringement or hate speech. Discover the key differences and best practices for using these tools effectively in this article.

Table of Comparison

| Feature | YouTube Flagging | YouTube Reporting |
|---|---|---|
| Purpose | Identify minor issues like spam or irrelevant content. | Report serious violations (e.g., copyright, abuse). |
| Visibility | Flags are visible to content creators and moderators. | Reports are confidential and reviewed by YouTube staff. |
| Review Process | Automated moderation and algorithmic review. | Manual review by YouTube Trust & Safety team. |
| Impact | May reduce video visibility temporarily. | Can result in content removal or channel penalties. |
| User Action | Quick, simple flagging options available under videos. | Detailed reporting form requiring specific violation info. |
| Examples | Spam, misleading titles, minor policy violations. | Hate speech, copyright infringement, harassment. |

Understanding YouTube’s Flagging System

YouTube's flagging system allows users to report content that violates community guidelines, such as hate speech, misinformation, or copyright infringement. Your report triggers an automated review process combined with human moderation to evaluate whether the flagged content should be removed or restricted. Recognizing how this system functions helps you navigate content responsibly and contributes to a safer online environment.

What is YouTube Reporting?

In the context of content moderation, YouTube Reporting is the formal process of submitting a suspected policy violation, such as copyright infringement, harassment, or hate speech, for review. It should not be confused with the YouTube Reporting API, a separate analytics tool that lets creators and businesses retrieve bulk performance data such as watch time, traffic sources, and audience demographics. That analytics data helps optimize content strategy and channel growth, but it plays no part in reporting violations.

Flagging vs Reporting: Key Differences

Flagging marks content as inappropriate or suspicious to alert platform moderators, while reporting formally submits a violation for review against the community guidelines. Use flagging to draw quick attention to a potential issue and reporting for a detailed complaint that requires action. Understanding these differences helps you respond effectively to harmful or misleading content on YouTube.

How YouTube Flags Content

YouTube uses algorithms and machine learning models to scan videos for content that violates its community guidelines, such as hate speech, misinformation, or copyright infringement. The platform also relies on user reports and human reviewers to identify and flag inappropriate or harmful content. By analyzing metadata, video frames, and audio transcripts, YouTube aims to keep your viewing experience safe and compliant with its policies.

The User Process: How to Report Videos

To report a video on YouTube, open the video and tap the options menu, usually represented by three dots. Select the "Report" option, then choose the reason for the report from a predefined list such as harmful content, misinformation, or inappropriate behavior. Where the form allows it, add specific details about your concern, such as a timestamp, so the moderation team can review the video and act quickly.
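The reporting steps described above boil down to three pieces of information: which video, which reason, and what details. The following Python sketch models that as a simple record and checks whether a report is specific enough to be useful. It is only an illustration: `VideoReport`, `REPORT_REASONS`, the 20-character minimum, and the example URL are all hypothetical, and the reason list simply reuses the examples mentioned in this article rather than YouTube's actual menu.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical reason list based on the examples in this article;
# it is not YouTube's actual report menu.
REPORT_REASONS = {"harmful content", "misinformation", "inappropriate behavior"}

# Assumed minimum length for the free-text details (illustrative only).
MIN_DETAILS_LENGTH = 20


@dataclass
class VideoReport:
    """A hypothetical record of what a user submits when reporting a video."""
    video_url: str
    reason: str
    details: str = ""

    def problems(self) -> List[str]:
        """Return the issues that would make this report hard to review."""
        issues = []
        if self.reason not in REPORT_REASONS:
            issues.append(f"unknown reason: {self.reason!r}")
        if len(self.details.strip()) < MIN_DETAILS_LENGTH:
            issues.append("details are too short to give reviewers context")
        return issues


report = VideoReport(
    video_url="https://example.com/watch?v=abc123",  # placeholder URL
    reason="misinformation",
    details="At 02:15 the video claims a recalled product is safe to use.",
)
print(report.problems())  # an empty list means the report looks complete
```

A report with a recognized reason and a concrete detail, such as a timestamp, passes the check; a vague one-word complaint would not.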

Types of Content Flagged on YouTube

YouTube flags various types of content including hate speech, violent or graphic content, misinformation, and sexually explicit material to maintain platform safety and compliance with community guidelines. Content promoting harmful or dangerous acts, spam, and deceptive practices is also subject to removal or demonetization. Understanding these flagged categories helps you create content that adheres to YouTube's policies and avoids penalties or account suspension.

Community Guidelines and Policy Enforcement

Social media platforms, YouTube included, enforce Community Guidelines to maintain safe and respectful online environments, addressing issues such as hate speech, misinformation, and harassment. Policy enforcement relies on automated systems and human moderators to detect and remove content that violates these rules. Guidelines are updated regularly to reflect evolving societal norms and legal requirements, which supports transparency and accountability across digital communities.

Impact of Flagging and Reporting on Creators

Flagging and reporting mechanisms on social media directly influence creators by affecting content visibility and monetization opportunities. A high volume of flags can trigger algorithmic restrictions, leading to reduced reach and engagement for creators. This system incentivizes adherence to platform guidelines while posing challenges related to content censorship and creator accountability.

Automated Detection vs Manual Review

Automated detection uses AI algorithms to identify potentially harmful content quickly across social media platforms, improving scalability and response times. Manual review adds the nuanced, context-sensitive judgment that machines often miss, which helps keep decisions accurate and fair. Combining both methods makes moderation more efficient while protecting the user experience.
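One common way to combine the two approaches is to let an automated score decide how each item is routed: very confident detections are actioned automatically, uncertain ones go to a human reviewer, and the rest are left alone. The Python sketch below illustrates that idea only. The `route_content` function, the `Route` names, and the 0.95 and 0.30 thresholds are hypothetical and do not describe how YouTube actually configures its systems.

```python
from enum import Enum


class Route(Enum):
    AUTO_ACTION = "auto_action"    # confident enough to act without a human
    HUMAN_REVIEW = "human_review"  # uncertain, so a person decides
    NO_ACTION = "no_action"        # score too low to act on


def route_content(score: float, high: float = 0.95, low: float = 0.30) -> Route:
    """Route an item by a classifier confidence score in [0, 1].

    The thresholds are illustrative assumptions, not real platform values.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= high:
        return Route.AUTO_ACTION
    if score >= low:
        return Route.HUMAN_REVIEW
    return Route.NO_ACTION


# Example scores a hypothetical classifier might assign to three videos.
for score in (0.99, 0.60, 0.05):
    print(score, route_content(score).value)
```

Widening the gap between the two thresholds sends more items to human reviewers, trading speed for context-sensitive judgment.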

Best Practices for Using Flagging and Reporting Tools

Using flagging and reporting tools effectively on social media platforms helps maintain community safety and content quality. Users should provide clear, specific information when reporting violations such as hate speech, harassment, or misinformation to ensure swift moderation. Regularly reviewing platform guidelines and encouraging responsible reporting supports accurate enforcement and minimizes misuse of these tools.

socmedb.com
