Photo illustration: Facebook AI vs Human Moderators

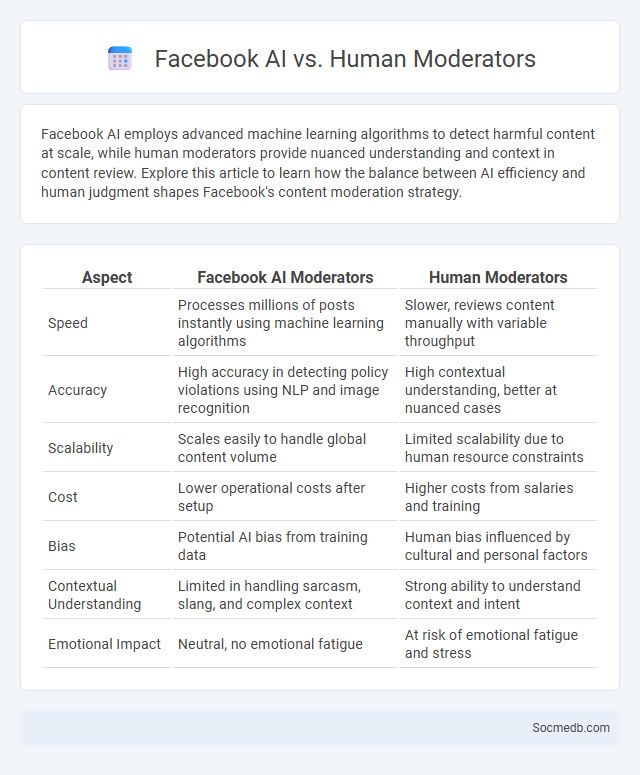

Facebook AI employs advanced machine learning algorithms to detect harmful content at scale, while human moderators provide nuanced understanding and context in content review. Explore this article to learn how the balance between AI efficiency and human judgment shapes Facebook's content moderation strategy.

Table of Comparison

| Aspect | Facebook AI Moderators | Human Moderators |
|---|---|---|
| Speed | Processes millions of posts instantly using machine learning algorithms | Slower, reviews content manually with variable throughput |
| Accuracy | High accuracy in detecting policy violations using NLP and image recognition | High contextual understanding, better at nuanced cases |
| Scalability | Scales easily to handle global content volume | Limited scalability due to human resource constraints |
| Cost | Lower operational costs after setup | Higher costs from salaries and training |
| Bias | Potential AI bias from training data | Human bias influenced by cultural and personal factors |
| Contextual Understanding | Limited in handling sarcasm, slang, and complex context | Strong ability to understand context and intent |
| Emotional Impact | Neutral, no emotional fatigue | At risk of emotional fatigue and stress |

Introduction to Facebook's Content Moderation Landscape

Facebook's content moderation landscape relies on advanced artificial intelligence algorithms combined with human reviewers to identify and remove harmful or inappropriate content promptly. The platform enforces strict community standards aimed at preventing misinformation, hate speech, and violent content while allowing diverse voices to express themselves freely. Your experience on Facebook is shaped by ongoing efforts to balance safety, authenticity, and engagement across its global user base.

Understanding AI-Powered Moderation Tools

AI-powered moderation tools analyze user-generated content using natural language processing and image recognition to detect harmful or inappropriate material. These tools help social media platforms maintain community guidelines by automatically flagging or removing content that violates policies, reducing the need for extensive human oversight. Machine learning algorithms continuously improve accuracy by learning from moderator feedback and evolving social trends.
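The flag-or-remove behavior described above can be pictured as a thresholding step on a classifier score. The sketch below is a minimal illustration, not Facebook's actual pipeline: the threshold values, the `Decision` type, and the `route` function are all hypothetical, and the score is assumed to come from some upstream model that returns a violation probability between 0 and 1.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
REMOVE_THRESHOLD = 0.95   # at or above this, remove automatically
REVIEW_THRESHOLD = 0.60   # at or above this, send to a human moderator


@dataclass
class Decision:
    post_id: str
    score: float
    action: str  # "remove", "human_review", or "allow"


def route(post_id: str, score: float) -> Decision:
    """Map a violation score (0.0 to 1.0) to a moderation action."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0.0 and 1.0")
    if score >= REMOVE_THRESHOLD:
        action = "remove"
    elif score >= REVIEW_THRESHOLD:
        action = "human_review"
    else:
        action = "allow"
    return Decision(post_id, score, action)


if __name__ == "__main__":
    # Made-up scores standing in for model output.
    for post_id, score in [("a", 0.98), ("b", 0.72), ("c", 0.10)]:
        print(route(post_id, score))
```

Sending mid-confidence items to human review is one way to pair automated speed with human judgment on borderline cases. Where the thresholds sit is a policy choice, not something the code can decide.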

The Role of Human Moderators on Facebook

Human moderators on Facebook play a crucial role in reviewing complex content that automated systems cannot accurately assess, ensuring adherence to community standards. They evaluate context, intent, and nuance in posts, comments, and images to prevent misinformation, hate speech, and harmful behaviors from spreading. Your online safety and experience depend significantly on these moderators' judgment and decision-making in maintaining a healthy digital environment.

Accuracy: AI vs Human Judgment

AI-driven social media analysis leverages algorithms that process vast amounts of data almost instantly, offering high accuracy in detecting patterns and trends that human judgment might miss. Human judgment excels at interpreting context, sarcasm, and emotional nuance, which AI often struggles to comprehend. Balancing AI's data precision with your own critical thinking leads to more reliable conclusions about what you see on social media.

Speed and Scalability of Content Review

Social media platforms utilize advanced AI algorithms and machine learning models to accelerate content review processes, enabling the analysis of millions of posts in real time. Cloud-based infrastructure and distributed computing systems provide scalability, handling spikes in user-generated content without compromising review speed. Automated moderation tools combined with human oversight enhance accuracy and efficiency, maintaining platform safety as content volume grows.

Handling Nuance and Context in Moderation

Effective social media moderation requires understanding the nuance and context behind user-generated content to accurately distinguish between harmful behavior and free expression. Your moderation strategies should incorporate advanced AI tools combined with human judgment to interpret sarcasm, cultural references, and slang, preventing over-censorship or misjudgment. Prioritizing context-aware moderation enhances user trust and fosters respectful online communities while minimizing false positives and negatives.
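False positives (acceptable posts wrongly flagged) and false negatives (violating posts that slip through) can be measured once a sample of decisions has been checked by a trusted reviewer. The following is a minimal sketch under that assumption; the function name `error_rates` and the sample data are hypothetical and not drawn from any platform.

```python
def error_rates(predicted, actual):
    """Return (false_positive_rate, false_negative_rate).

    predicted[i] is True if the system flagged item i as violating.
    actual[i] is True if a trusted reviewer judged item i as violating.
    """
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")

    pairs = list(zip(predicted, actual))
    false_pos = sum(1 for p, a in pairs if p and not a)
    false_neg = sum(1 for p, a in pairs if a and not p)
    negatives = sum(1 for _, a in pairs if not a)
    positives = sum(1 for _, a in pairs if a)

    # Guard against division by zero when a class is absent from the sample.
    fpr = false_pos / negatives if negatives else 0.0
    fnr = false_neg / positives if positives else 0.0
    return fpr, fnr


if __name__ == "__main__":
    # Made-up sample: five items, system decision vs. reviewer judgment.
    predicted = [True, True, False, False, True]
    actual = [True, False, False, True, True]
    fpr, fnr = error_rates(predicted, actual)
    print(f"false positive rate: {fpr:.2f}, false negative rate: {fnr:.2f}")
```

A high false positive rate points toward over-censorship, while a high false negative rate means harmful content is being missed, so the two numbers are usually read together.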

Bias and Fairness in AI vs Human Decisions

Bias in AI-driven social media algorithms can amplify existing societal prejudices by promoting content that aligns with user profiles, potentially creating echo chambers and limiting diverse perspectives. Human decisions in content moderation may introduce subjective biases of their own, and AI systems must likewise be continuously audited and trained on diverse datasets to enhance fairness. Your awareness of these challenges is crucial for advocating transparent and equitable social media environments.
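One simple form of audit is to compare how often acceptable content is wrongly flagged across groups, for example by language. The sketch below assumes each audited record carries a group label, the system's decision, and a reviewer's judgment; the function name, record layout, and sample data are hypothetical.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Compute the false positive rate for each group.

    records is an iterable of (group, flagged, violating) tuples, where
    flagged is the system's decision and violating is the reviewer's judgment.
    Only non-violating items count toward the rate.
    """
    benign = defaultdict(int)
    flagged_benign = defaultdict(int)
    for group, flagged, violating in records:
        if not violating:
            benign[group] += 1
            if flagged:
                flagged_benign[group] += 1
    return {group: flagged_benign[group] / count for group, count in benign.items()}


if __name__ == "__main__":
    # Made-up audit sample with two language groups.
    sample = [
        ("en", True, False), ("en", False, False),
        ("en", False, False), ("en", False, False),
        ("es", True, False), ("es", True, False),
        ("es", False, False), ("es", False, False),
        ("es", True, True),  # correctly flagged, so not a false positive
    ]
    for group, rate in false_positive_rate_by_group(sample).items():
        print(group, rate)
```

A large gap between groups does not by itself prove bias, but it is a signal that the training data or the policy wording deserves a closer look.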

Privacy and Ethical Considerations

Social media platforms collect vast amounts of personal data, raising critical privacy concerns regarding user consent and data security. Ethical considerations include the responsible handling of sensitive information, prevention of misinformation, and ensuring algorithmic transparency to avoid bias. Users must be aware of privacy settings and platform policies to protect their digital footprint effectively.

Challenges and Limitations of Current Approaches

Current social media platforms face significant challenges including misinformation proliferation, privacy breaches, and algorithmic bias that can deepen societal divisions. Content moderation struggles to balance free expression with the need to curb harmful content, often resulting in inconsistent enforcement and user dissatisfaction. Scalability issues also hinder real-time detection of malicious activities, limiting the effectiveness of existing solutions in rapidly evolving digital environments.

The Future of Content Moderation on Facebook

Facebook's future content moderation will increasingly rely on artificial intelligence and machine learning to detect harmful content with greater accuracy and speed. Your online safety will benefit from advanced algorithms designed to identify misinformation, hate speech, and violent content while respecting user privacy. Enhanced transparency and user control features are expected to empower you in managing what appears on your feed.

socmedb.com
