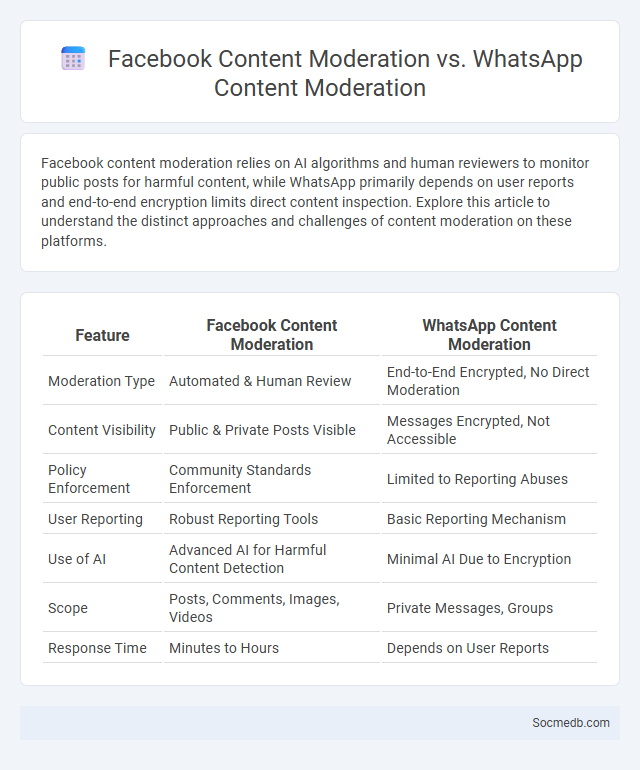

Photo illustration: Facebook Content Moderation vs WhatsApp Content Moderation

Facebook content moderation relies on AI algorithms and human reviewers to monitor public posts for harmful content, while WhatsApp depends primarily on user reports because end-to-end encryption limits direct content inspection. Explore this article to understand the distinct approaches and challenges of content moderation on these platforms.

Table of Comparison

| Feature | Facebook Content Moderation | WhatsApp Content Moderation |
|---|---|---|
| Moderation Type | Automated & Human Review | End-to-End Encrypted, No Direct Moderation |
| Content Visibility | Public & Private Posts Visible | Messages Encrypted, Not Accessible |
| Policy Enforcement | Community Standards Enforcement | Limited to Reporting Abuses |
| User Reporting | Robust Reporting Tools | Basic Reporting Mechanism |
| Use of AI | Advanced AI for Harmful Content Detection | Minimal AI Due to Encryption |
| Scope | Posts, Comments, Images, Videos | Private Messages, Groups |
| Response Time | Minutes to Hours | Depends on User Reports |

Introduction to Content Moderation

Content moderation is essential for maintaining safe and respectful interactions across social media platforms by filtering inappropriate, harmful, or offensive material. Effective moderation employs a combination of AI algorithms and human reviewers to identify and remove content that violates community guidelines or legal standards. Your experience on social media improves when platforms consistently enforce rules that protect users from harassment, misinformation, and harmful behavior.

Overview: Facebook vs WhatsApp Content Moderation

Facebook employs advanced AI algorithms combined with human reviewers to monitor billions of posts, images, and videos daily, focusing on hate speech, misinformation, and graphic content. WhatsApp utilizes end-to-end encryption, limiting content visibility and relying more on user reports and automated detection of mass forwarding to combat misinformation and harmful content. Your experience on these platforms depends on their distinct moderation strategies designed to balance privacy with user safety.

Key Goals of Platform-Specific Moderation

Platform-specific moderation aims to protect community standards by addressing the content challenges unique to each platform, such as hate speech in public Facebook posts or mass-forwarded misinformation on WhatsApp. You benefit from tailored strategies that enhance user safety, promote authentic interactions, and ensure compliance with legal regulations. Effective moderation reduces toxicity, supports positive user engagement, and upholds the platform's reputation.

Content Moderation Techniques on Facebook

Facebook employs advanced AI-driven content moderation techniques, including machine learning algorithms and natural language processing, to identify and remove harmful content such as hate speech, misinformation, and graphic violence. Human moderators review flagged posts to ensure nuanced understanding and contextual accuracy, balancing automated efficiency with ethical oversight. Ongoing updates to Facebook's moderation policies address evolving online behaviors and emerging threats, enhancing platform safety and user experience.

WhatsApp’s Approach to Content Moderation

WhatsApp employs end-to-end encryption to prioritize user privacy, so its content moderation runs through automated detection tools and user reporting mechanisms rather than direct inspection. Because message contents are encrypted, its automated systems work from signals other than the message text, such as mass-forwarding patterns and user reports. WhatsApp's approach balances maintaining secure communications with minimizing the spread of misinformation and harmful behaviors.
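
The idea of limiting harmful spread without reading message text can be illustrated with a forwarding limit that looks only at metadata. The following is a minimal sketch, not WhatsApp's actual implementation: the `MessageMeta` record, the function names, and all three thresholds are assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
HIGHLY_FORWARDED_AT = 5     # forward hops after which a message counts as highly forwarded
NORMAL_CHAT_LIMIT = 5       # chats a normal message may be forwarded to at once
HIGHLY_FORWARDED_LIMIT = 1  # chats a highly forwarded message may be forwarded to at once


@dataclass(frozen=True)
class MessageMeta:
    """Metadata only: the message body is never part of this record."""
    message_id: str
    forward_count: int  # how many times this message has already been forwarded


def max_forward_targets(meta: MessageMeta) -> int:
    """Return how many chats this message may be forwarded to in one action."""
    if meta.forward_count >= HIGHLY_FORWARDED_AT:
        return HIGHLY_FORWARDED_LIMIT
    return NORMAL_CHAT_LIMIT


def allow_forward(meta: MessageMeta, target_chats: int) -> bool:
    """Allow the forward only if the number of target chats is within the limit."""
    return 0 < target_chats <= max_forward_targets(meta)
```

In this sketch the decision depends on the forward counter alone, so the limit slows mass forwarding without any component needing access to the encrypted content.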

Privacy and Encryption Challenges

Social media platforms face significant privacy and encryption challenges due to the vast amount of personal data shared by users, making them prime targets for cyberattacks and data breaches. Strong encryption methods often conflict with regulatory demands for data access, complicating efforts to protect user information while complying with law enforcement requests. Balancing effective encryption with user privacy is critical to maintaining trust and preventing unauthorized data exposure in the evolving social media landscape.

Automated vs Manual Moderation Methods

Automated moderation uses AI algorithms and machine learning to filter inappropriate content quickly, which allows near real-time protection at a scale human teams cannot match. Manual moderation relies on human reviewers to assess context and nuance, offering higher accuracy in detecting subtle violations and maintaining community standards. Balancing both methods improves efficiency and content quality, enhancing user experience and trust.
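
One common way to combine the two methods is to let an automated score decide the clear cases and send the uncertain middle band to human reviewers. The sketch below assumes a classifier that returns a violation score between 0 and 1; the `route` function, the `Decision` names, and both thresholds are hypothetical and do not describe any specific platform's system.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


# Hypothetical thresholds; a real system would tune these per policy area.
REMOVE_AT = 0.9  # at or above this score, content is removed automatically
REVIEW_AT = 0.5  # at or above this score (but below REMOVE_AT), a human decides


def route(score: float) -> Decision:
    """Map a classifier's violation score in [0, 1] to a moderation decision."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= REMOVE_AT:
        return Decision.REMOVE
    if score >= REVIEW_AT:
        return Decision.HUMAN_REVIEW
    return Decision.ALLOW
```

Lowering `REVIEW_AT` sends more content to human reviewers, which favors accuracy over speed; raising it does the opposite.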

Misinformation and Hate Speech Management

Effective misinformation and hate speech management on social media platforms relies on advanced AI-driven content moderation systems that detect and flag harmful posts in real-time. Collaboration with fact-checking organizations and implementation of clear community guidelines significantly reduce the spread of false information and offensive language. Continuous user education and transparency in enforcement policies enhance trust and promote safer online environments.

Regulatory and Ethical Considerations

Regulatory frameworks such as the GDPR and CCPA enforce strict data privacy and user consent protocols on social media platforms, ensuring compliance and protecting user information. Ethical considerations include transparency in advertising, combating misinformation, and preventing algorithmic bias to maintain platform integrity and user trust. Social media companies must implement robust content moderation policies and AI ethics guidelines to address user safety and misinformation effectively.

Future Trends in Content Moderation Platforms

Future trends in content moderation platforms emphasize the integration of advanced AI technologies, such as natural language processing and machine learning, to improve accuracy and scalability in identifying harmful or misleading content. Growing user demand for real-time moderation pushes platforms to adopt automated systems that reduce human error while safeguarding user experience. Enhanced transparency and user empowerment through customizable moderation settings are also expected to shape the next generation of content moderation solutions.

socmedb.com
