Photo illustration: Facebook Censorship vs Freedom of Speech

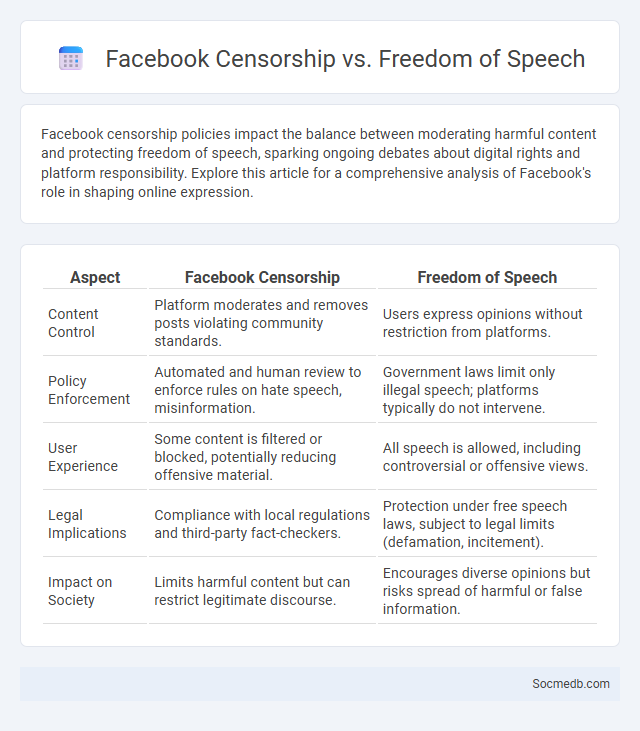

Facebook censorship policies impact the balance between moderating harmful content and protecting freedom of speech, sparking ongoing debates about digital rights and platform responsibility. Explore this article for a comprehensive analysis of Facebook's role in shaping online expression.

Table of Comparison

| Aspect | Facebook Censorship | Freedom of Speech |
|---|---|---|
| Content Control | Platform moderates and removes posts violating community standards. | Users express opinions without restriction from platforms. |
| Policy Enforcement | Automated and human review to enforce rules on hate speech, misinformation. | Government laws limit only illegal speech; platforms typically do not intervene. |
| User Experience | Some content is filtered or blocked, potentially reducing offensive material. | All speech is allowed, including controversial or offensive views. |
| Legal Implications | Compliance with local regulations and third-party fact-checkers. | Protection under free speech laws, subject to legal limits (defamation, incitement). |
| Impact on Society | Limits harmful content but can restrict legitimate discourse. | Encourages diverse opinions but risks spread of harmful or false information. |

Understanding Facebook’s Censorship Policies

Facebook's censorship policies prioritize removing content that violates community standards, including hate speech, misinformation, and harmful behavior, ensuring a safer platform for users. You should familiarize yourself with Facebook's guidelines and reporting tools to understand how content is moderated and what types of posts may be restricted or removed. Understanding these policies helps maintain compliance and effectively manage your presence on the platform.

Defining Freedom of Speech in the Digital Age

Freedom of speech in the digital age encompasses the right to express opinions and share information across social media platforms without undue censorship or restriction, aligning with international human rights standards like Article 19 of the Universal Declaration of Human Rights. Social media companies balance content moderation policies with preserving open dialogue, navigating challenges related to misinformation, hate speech, and platform governance. As digital communication evolves, defining freedom of speech involves ongoing legal, ethical, and technological considerations to protect expression while ensuring user safety and accountability.

The Role of Content Moderation on Social Platforms

Content moderation on social platforms plays a critical role in maintaining safe and respectful online communities by filtering harmful or inappropriate content. Effective algorithms combined with human oversight help protect users from misinformation, hate speech, and cyberbullying, enhancing your digital experience. Ensuring transparency and consistency in moderation policies builds trust and encourages positive interactions across social media networks.

Facebook’s Algorithms: Balancing Safety and Expression

Facebook's algorithms prioritize content that fosters meaningful interactions while filtering out harmful or misleading information. Your experience on the platform is shaped by machine learning models that assess posts based on relevance, engagement, and community standards to maintain a safe yet open environment. Continuous updates aim to strike a balance between protecting users and supporting free expression across diverse communities.

Case Studies of Facebook Content Removal

Facebook's content removal policies have evolved through high-profile case studies involving misinformation, hate speech, and harmful content, highlighting its commitment to community standards enforcement. In 2023, Facebook removed over 20 million posts related to COVID-19 misinformation, demonstrating the platform's proactive approach to safeguarding public health. These actions reflect the complexity and scale of content moderation challenges faced by social media giants worldwide.

The Legal Landscape: Rights and Responsibilities

Navigating the legal landscape of social media requires understanding your rights to privacy, intellectual property, and freedom of expression alongside your responsibilities to avoid defamation, harassment, and copyright infringement. Laws such as the Digital Millennium Copyright Act (DMCA) and General Data Protection Regulation (GDPR) directly impact your content sharing and data handling practices. Staying informed about platform-specific policies and legal statutes helps protect your online presence and ensures compliance with evolving regulations.

Public Backlash and Accusations of Bias

Public backlash on social media often arises from perceived bias in content moderation and algorithmic prioritization, impacting user trust and platform credibility. Accusations of bias typically target political, cultural, or ideological preferences, leading to widespread debates about fairness and transparency. Your engagement on these platforms can be affected as communities demand accountability and more balanced representation of diverse viewpoints.

Navigating Misinformation vs. Censorship

Navigating misinformation on social media requires balancing the removal of harmful false content with protecting free expression, so that your digital experience is informed and respectful. Advanced algorithms and human moderation work together to identify misleading posts while avoiding overreach that could lead to unjust censorship. Transparency in platform policies and user education are essential for fostering trust and promoting a healthy online environment.

User Experiences: Voices Silenced or Protected?

Social media platforms shape user experiences by balancing the fine line between silencing voices and protecting individuals from harmful content. Algorithms and moderation policies influence whose voices are amplified or muted, impacting public discourse and personal expression. Your engagement on these platforms can either challenge or reinforce these dynamics, highlighting the importance of transparent and fair content regulation.

Towards Ethical and Transparent Moderation Practices

Social media platforms are increasingly implementing ethical and transparent moderation practices to combat misinformation and protect user rights. These initiatives prioritize clear content guidelines, algorithmic accountability, and user participation in decision-making processes. Transparency reports and third-party audits play a crucial role in fostering trust and ensuring fair enforcement of community standards.

socmedb.com
