Photo illustration: Facebook Community Standards vs Instagram Community Guidelines

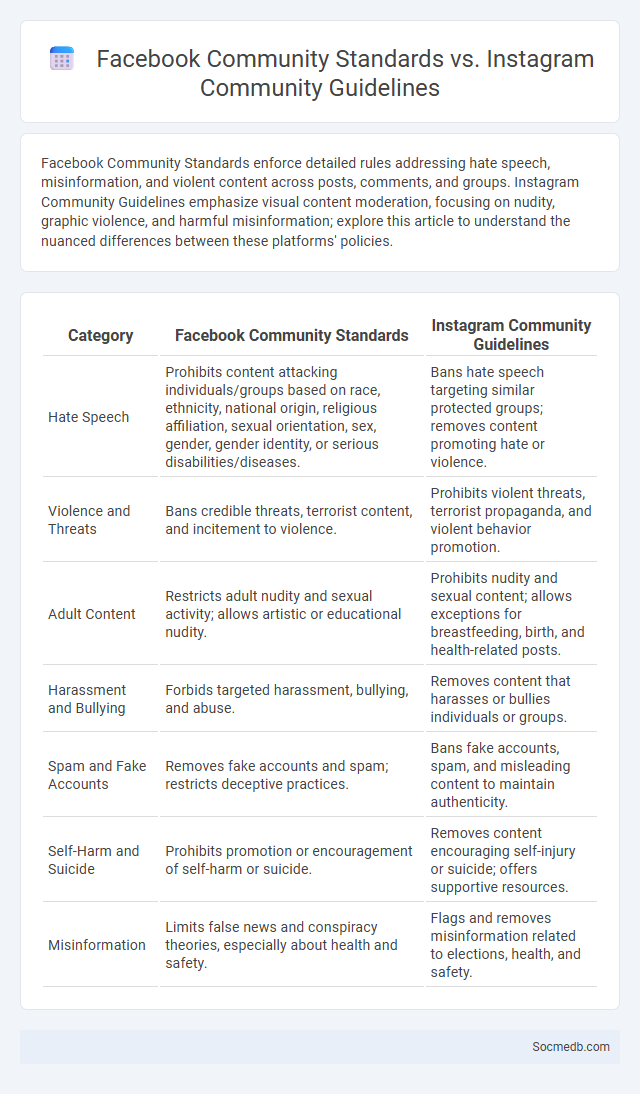

Facebook Community Standards set detailed rules on hate speech, misinformation, and violent content across posts, comments, and groups. Instagram Community Guidelines emphasize moderation of visual content, focusing on nudity, graphic violence, and harmful misinformation. This article explains the nuanced differences between the two platforms' policies.

Table of Comparison

| Category | Facebook Community Standards | Instagram Community Guidelines |
|---|---|---|
| Hate Speech | Prohibits content attacking individuals/groups based on race, ethnicity, national origin, religious affiliation, sexual orientation, sex, gender, gender identity, or serious disabilities/diseases. | Bans hate speech targeting similar protected groups; removes content promoting hate or violence. |
| Violence and Threats | Bans credible threats, terrorist content, and incitement to violence. | Prohibits violent threats, terrorist propaganda, and violent behavior promotion. |
| Adult Content | Restricts adult nudity and sexual activity; allows artistic or educational nudity. | Prohibits nudity and sexual content; allows exceptions for breastfeeding, birth, and health-related posts. |
| Harassment and Bullying | Forbids targeted harassment, bullying, and abuse. | Removes content that harasses or bullies individuals or groups. |
| Spam and Fake Accounts | Removes fake accounts and spam; restricts deceptive practices. | Bans fake accounts, spam, and misleading content to maintain authenticity. |
| Self-Harm and Suicide | Prohibits promotion or encouragement of self-harm or suicide. | Removes content encouraging self-injury or suicide; offers supportive resources. |
| Misinformation | Limits false news and conspiracy theories, especially about health and safety. | Flags and removes misinformation related to elections, health, and safety. |

Introduction to Social Media Community Guidelines

Social media community guidelines establish clear rules and standards to foster respectful, safe, and inclusive online environments for users. These guidelines address issues such as hate speech, harassment, misinformation, and content moderation to ensure compliance with legal and ethical standards. Platforms like Facebook, Twitter, and Instagram continuously update their policies to adapt to evolving digital behaviors and enhance user experience.

Overview of Facebook Community Standards

Facebook Community Standards establish clear guidelines to maintain a safe and respectful environment for all users by prohibiting hate speech, harassment, and harmful misinformation. These standards outline specific content restrictions affecting posts, comments, and media shared within Facebook's network, ensuring compliance with legal requirements and promoting positive interactions. Understanding Facebook's rules helps you navigate the platform responsibly, protecting your account and fostering a supportive online community.

Key Points in Instagram Community Guidelines

Instagram Community Guidelines emphasize safety by prohibiting hate speech, harassment, and harmful content to create a respectful environment. Users must avoid posting graphic violence, nudity, or misinformation to maintain platform integrity. Intellectual property rights are protected, requiring users to share original content and respect others' creations.

General Principles of Community Standards

Community standards on social media are designed to create a safe, respectful, and inclusive environment for all users by prohibiting hate speech, harassment, and misinformation. Platforms enforce guidelines that balance freedom of expression with the need to protect users from harmful content, ensuring your interactions remain positive and constructive. Clear rules regarding content sharing and behavior help maintain trust and accountability within online communities.

Content Moderation: Facebook vs Instagram

Content moderation on Facebook emphasizes extensive algorithms and human review teams to manage diverse content types, including text, images, and videos, ensuring compliance with community standards across global user bases. Instagram employs similar moderation techniques but prioritizes visual content, using AI tools to detect inappropriate images and videos swiftly while fostering safer interactions through comment filtering and user reporting features. Both platforms continuously update policies to address misinformation, hate speech, and harmful content, leveraging machine learning and user feedback to enhance detection accuracy and response times.
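
To make the idea of comment filtering concrete, here is a minimal sketch in Python. It is not how Facebook or Instagram implement filtering, which relies on far larger, machine-learning-assisted systems. The blocklist `BLOCKED_TERMS` and the function names `should_hide` and `filter_comments` are hypothetical, chosen only for illustration.

```python
import re

# Hypothetical blocklist for illustration only; not taken from any platform.
BLOCKED_TERMS = {"spamlink", "buy followers"}


def should_hide(comment, blocked_terms=BLOCKED_TERMS):
    """Return True if the comment contains a blocked term as a whole word or phrase."""
    text = comment.lower()
    return any(
        re.search(r"\b" + re.escape(term) + r"\b", text)
        for term in blocked_terms
    )


def filter_comments(comments):
    """Split comments into (visible, hidden) lists, preserving order."""
    visible, hidden = [], []
    for comment in comments:
        (hidden if should_hide(comment) else visible).append(comment)
    return visible, hidden
```

A simple keyword match like this one is easy to evade and prone to false positives, which is one reason the platforms described above combine automated detection with human review and user reports.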

Differences in Hate Speech Policies

Social media platforms enforce varying hate speech policies that reflect distinct definitions, enforcement mechanisms, and tolerance levels toward controversial content. Understanding these differences matters because they determine what speech remains visible and what consequences follow violations on platforms like Facebook, Twitter, and TikTok. Each company calibrates its approach based on factors such as user base demographics, legal obligations, and corporate values, which shapes the intensity and transparency of its content moderation.

Approach to Misinformation and Fake News

Effective strategies to combat misinformation and fake news on social media involve robust fact-checking algorithms and the promotion of credible sources. Your engagement with verified content and critical evaluation of shared information play a crucial role in minimizing the spread of false narratives. Collaborations between platforms, fact-checkers, and users enhance transparency and trust in online communities.

Handling Personal and Sensitive Data

Handling personal and sensitive data on social media platforms requires robust encryption protocols and strict compliance with data protection regulations such as GDPR and CCPA. Implementing multi-factor authentication and regular security audits minimizes the risk of unauthorized access and data breaches. Users should be educated on privacy settings and the importance of limiting the sharing of sensitive information to safeguard their digital identity.

Enforcement Mechanisms and Appeals

Social media platforms implement enforcement mechanisms such as content removal, account suspensions, and temporary restrictions to uphold community guidelines and combat violations like misinformation and hate speech. Users can appeal enforcement actions through structured processes, often involving review teams that reassess flagged content or account status to ensure fairness and transparency. Understanding these mechanisms empowers you to navigate and address enforcement issues effectively on social media networks.
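
The escalation from content removal to restrictions and suspensions, followed by an appeal, can be pictured with a small Python sketch. Everything here is an assumption made for illustration: the thresholds `RESTRICTION_AT` and `SUSPENSION_AT`, the `Account` class, and the `enforce` and `appeal` functions do not reflect the actual rules or systems of any platform.

```python
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    CONTENT_REMOVED = "content_removed"
    TEMPORARY_RESTRICTION = "temporary_restriction"
    ACCOUNT_SUSPENDED = "account_suspended"


# Hypothetical thresholds; real platforms do not publish rules in this form.
RESTRICTION_AT = 2
SUSPENSION_AT = 4


@dataclass
class Account:
    name: str
    violations: int = 0
    history: list = field(default_factory=list)


def enforce(account):
    """Record a violation and return an action that escalates with the count."""
    account.violations += 1
    if account.violations >= SUSPENSION_AT:
        action = Action.ACCOUNT_SUSPENDED
    elif account.violations >= RESTRICTION_AT:
        action = Action.TEMPORARY_RESTRICTION
    else:
        action = Action.CONTENT_REMOVED
    account.history.append(action)
    return action


def appeal(account, upheld):
    """Apply a reviewer's decision: if the action is not upheld, undo the latest one."""
    if not upheld and account.history:
        account.history.pop()
        account.violations -= 1
    return account.violations
```

In this sketch a successful appeal simply reverses the most recent action, which mirrors the idea of a review team reassessing a decision; real appeal outcomes can be more varied.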

Impacts on User Experience and Online Safety

Social media platforms influence user experience by personalizing content through advanced algorithms that increase engagement but can also create echo chambers, affecting mental well-being. Online safety concerns arise from widespread issues such as cyberbullying, data breaches, and misinformation, necessitating robust privacy settings and proactive moderation. Enhanced user controls and AI-driven threat detection contribute to safer interactions and a more secure digital environment.

socmedb.com
