Photo illustration: Facebook Content Moderation vs Reddit Content Moderation

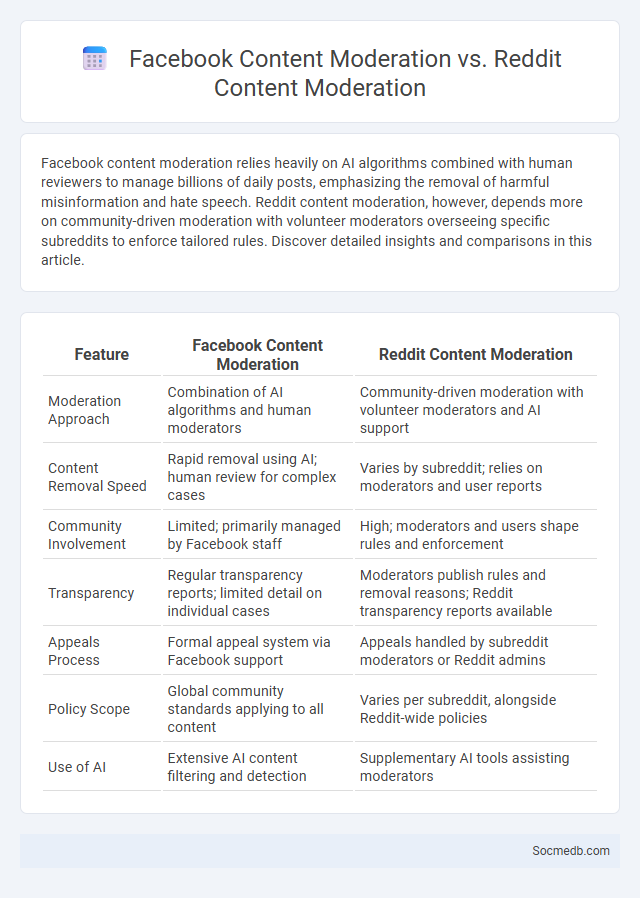

Facebook content moderation relies heavily on AI algorithms combined with human reviewers to manage billions of daily posts, with an emphasis on removing harmful misinformation and hate speech. Reddit content moderation, by contrast, depends more on community-driven moderation, with volunteer moderators overseeing individual subreddits and enforcing rules tailored to each one. This article compares the two approaches in detail.

Table of Comparison

| Feature | Facebook Content Moderation | Reddit Content Moderation |
|---|---|---|
| Moderation Approach | Combination of AI algorithms and human moderators | Community-driven moderation by volunteer moderators, with AI support |
| Content Removal Speed | Rapid removal using AI; human review for complex cases | Varies by subreddit; depends on moderators and user reports |
| Community Involvement | Limited; primarily managed by Facebook staff | High; moderators and users shape rules and enforcement |
| Transparency | Regular transparency reports; limited detail on individual cases | Moderators publish rules and removal reasons; Reddit also publishes transparency reports |
| Appeals Process | Formal appeal system via Facebook support | Handled by subreddit moderators or Reddit admins |
| Policy Scope | Global Community Standards applying to all content | Subreddit-specific rules alongside Reddit-wide policies |
| Use of AI | Extensive AI content filtering and detection | Supplementary AI tools that assist moderators |

Introduction to Content Moderation

Content moderation helps online platforms maintain safe, respectful environments by monitoring and managing user-generated content. Common techniques include automated algorithms, human review, and enforcement of community guidelines to detect and remove harmful, offensive, or inappropriate posts. Effective moderation balances freedom of expression with protection against misinformation, hate speech, and cyberbullying.

Overview of Facebook Content Moderation

Facebook content moderation combines AI algorithms with a global team of human reviewers to identify and remove harmful or inappropriate content quickly. Its policies cover hate speech, misinformation, violent content, and harassment, with the aim of making your interactions on the platform safer. The moderation guidelines are updated regularly so the platform can respond to emerging threats and changes in online behavior.

Overview of Reddit Content Moderation

Reddit combines community-driven moderation with site-wide policies enforced by Reddit administrators (admins). Automated tools work alongside human oversight to detect and remove content that breaks the rules, such as hate speech, spam, and misinformation. Within each subreddit, volunteer moderators can enforce additional guidelines tailored to that community, so each community can set its own balance between free expression and safety within Reddit's site-wide limits.
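The layering described above, with site-wide policy applied everywhere and community rules applied only inside their own subreddit, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `Post` type, the rule lists, the `moderate` function, the subreddit names, and the report threshold are all hypothetical, and simple keyword checks stand in for real detection. It is not how Reddit's own tools, such as AutoModerator, are implemented.

```python
from dataclasses import dataclass
from typing import Callable

# A rule pairs a name with a predicate over post text (True = violation).
Rule = tuple[str, Callable[[str], bool]]

@dataclass
class Post:
    subreddit: str
    text: str
    reports: int = 0  # user reports received so far

# Hypothetical site-wide rules, enforced in every community (admin level).
SITE_WIDE_RULES: list[Rule] = [
    ("spam", lambda t: "buy followers" in t.lower()),
]

# Hypothetical per-community rules set by volunteer moderators.
SUBREDDIT_RULES: dict[str, list[Rule]] = {
    "r/example_science": [("no_jokes", lambda t: "lol" in t.lower())],
    "r/example_memes": [],
}

REPORT_THRESHOLD = 3  # assumed value for illustration

def moderate(post: Post) -> str:
    """Return 'removed:<rule>', 'mod_queue', or 'approved'."""
    # 1. Site-wide policy applies first, regardless of subreddit.
    for name, violates in SITE_WIDE_RULES:
        if violates(post.text):
            return f"removed:{name}"
    # 2. Community rules apply only inside their own subreddit.
    for name, violates in SUBREDDIT_RULES.get(post.subreddit, []):
        if violates(post.text):
            return f"removed:{name}"
    # 3. Enough user reports send the post to human moderators.
    if post.reports >= REPORT_THRESHOLD:
        return "mod_queue"
    return "approved"

print(moderate(Post("r/example_science", "lol nice")))        # removed:no_jokes
print(moderate(Post("r/example_memes", "lol nice")))          # approved
print(moderate(Post("r/example_memes", "Buy followers now"))) # removed:spam
print(moderate(Post("r/example_memes", "hot take", reports=5)))  # mod_queue
```

Checking site-wide rules first mirrors the precedence described in the text: a subreddit can add its own restrictions, but it cannot opt out of Reddit-wide policy. The same post can therefore be removed in one community and approved in another.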

General Principles of Content Moderation

Effective content moderation on social media platforms relies on clear guidelines that balance free expression with community safety, ensuring harmful or illegal content is promptly addressed. Automated tools combined with human review help detect and remove spam, hate speech, and misinformation while respecting user rights. Transparency in policies and consistent enforcement foster trust and maintain a healthy online environment for all users.

Key Differences: Facebook vs Reddit Moderation

Facebook moderation relies heavily on automated algorithms combined with human reviewers to enforce community standards across a vast and diverse user base, often prioritizing content removal to comply with legal regulations. Reddit employs a decentralized moderation model where community-specific volunteer moderators enforce rules tailored to their subreddits, fostering niche discussions with flexible guidelines. Understanding these key differences helps you navigate platform policies effectively and engage responsibly within each social media environment.

Automated Moderation Tools and AI Use

Automated moderation tools use AI algorithms to detect and filter harmful content, spam, and misinformation at a scale that manual review alone cannot handle. These systems analyze text, images, and videos in real time, reducing the volume of content that needs manual review while still enforcing community standards. Facebook relies on such tools extensively, while Reddit uses them mainly to support its human moderators. Used well, AI-driven moderation can make a platform safer and more engaging for its users.
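One simple form of automated filtering is flagging a user who floods a platform with the same message. The sketch below, a minimal example under stated assumptions, keeps a sliding time window of identical posts per user; the class name `DuplicateSpamFilter` and its default limits are hypothetical, timestamps are assumed to arrive in non-decreasing order, and a production system would likely combine many such signals with machine-learned classifiers rather than rely on one rule.

```python
from collections import defaultdict, deque

class DuplicateSpamFilter:
    """Flag a user who posts the same text more than max_repeats times
    within window_seconds. The limits are assumed values, not real settings."""

    def __init__(self, max_repeats: int = 3, window_seconds: float = 60.0) -> None:
        self.max_repeats = max_repeats
        self.window = window_seconds
        # (user, normalized text) -> timestamps of recent identical posts
        self.history: dict[tuple[str, str], deque] = defaultdict(deque)

    @staticmethod
    def normalize(text: str) -> str:
        # Ignore case and extra whitespace so trivial variations still match.
        return " ".join(text.lower().split())

    def is_spam(self, user: str, text: str, timestamp: float) -> bool:
        times = self.history[(user, self.normalize(text))]
        # Slide the window: forget identical posts older than window_seconds.
        while times and timestamp - times[0] > self.window:
            times.popleft()
        times.append(timestamp)
        return len(times) > self.max_repeats

# Example: the fourth identical post within a minute is flagged.
f = DuplicateSpamFilter(max_repeats=3, window_seconds=60)
print([f.is_spam("u1", "Great deal!!  click here", t) for t in (0, 10, 20, 30)])
# [False, False, False, True]
print(f.is_spam("u1", "great deal!! click here", 200))  # False: window has passed
```

A rule like this is cheap and easy to explain, which is why it suits the "real time" setting, but it only catches exact repeats after normalization; lightly reworded spam would need the kind of pattern-based AI detection the paragraph describes.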

Community Guidelines and Policy Enforcement

Social media platforms publish community guidelines to protect users and keep interactions respectful. Enforcing those policies involves both automated systems and human moderators, who detect and remove content that violates the terms, such as hate speech, misinformation, and harassment. Clear, consistent enforcement helps foster a positive digital environment while balancing free expression and platform integrity.

Human Moderators vs AI Moderation

Human moderators bring nuanced, context-based judgment to content review, which matters most for culturally sensitive and ethical decisions. AI moderation processes vast amounts of data quickly, detecting patterns and flagging harmful content such as hate speech or misinformation consistently and at scale. Combining human expertise with AI efficiency produces a balanced moderation framework that can improve accuracy and help reduce bias on social media platforms.
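One common way to combine the two is confidence-based routing: a model scores each item, the clearest cases are handled automatically, and ambiguous ones go to people. This sketch assumes a classifier that outputs a probability of violation; the thresholds, the `route` function, and the `ReviewQueue` class are hypothetical and are not Facebook's or Reddit's actual settings.

```python
import heapq
from dataclasses import dataclass

# Assumed thresholds, for illustration only.
REMOVE_AT = 0.95  # at or above: remove automatically
REVIEW_AT = 0.60  # at or above (but below REMOVE_AT): send to a human

@dataclass
class Decision:
    action: str  # "remove", "human_review", or "allow"
    score: float

def route(score: float) -> Decision:
    """Route one item by a classifier's estimated probability of violation."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be a probability in [0, 1]")
    if score >= REMOVE_AT:
        return Decision("remove", score)        # clear-cut: automate
    if score >= REVIEW_AT:
        return Decision("human_review", score)  # ambiguous: needs context
    return Decision("allow", score)

class ReviewQueue:
    """Max-priority queue so reviewers see the highest-scoring items first."""

    def __init__(self) -> None:
        self._heap: list[tuple[float, int, str]] = []
        self._count = 0  # tie-breaker keeps insertion order for equal scores

    def push(self, item_id: str, score: float) -> None:
        # heapq is a min-heap, so store the negated score.
        heapq.heappush(self._heap, (-score, self._count, item_id))
        self._count += 1

    def pop(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)

# Example usage with made-up scores.
scores = {"a": 0.99, "b": 0.70, "c": 0.10, "d": 0.85}
queue = ReviewQueue()
for item_id, s in scores.items():
    if route(s).action == "human_review":
        queue.push(item_id, s)
print(queue.pop(), queue.pop())  # d b
```

Ordering the review queue by score puts the most likely violations in front of human reviewers first. Where the two thresholds sit is the key design choice: lowering `REVIEW_AT` sends more items to people (more workload, fewer misses), while lowering `REMOVE_AT` automates more removals at a higher risk of wrongly removing legitimate posts.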

Challenges and Controversies in Content Moderation

Content moderation on social media platforms faces significant challenges, such as balancing free speech against the removal of harmful content, addressing misinformation, and avoiding biased enforcement. Platforms regularly face controversies involving accusations of censorship, algorithmic bias, and inconsistent application of rules across diverse cultural contexts. These issues complicate efforts to maintain safe, respectful online communities while protecting users' rights.

Future Trends in Social Media Content Moderation

Future trends in social media content moderation emphasize the integration of artificial intelligence and machine learning to enhance the accuracy and speed of detecting harmful or inappropriate content. You can expect increased reliance on real-time analysis tools that adapt to evolving language patterns, images, and video formats to maintain safer online environments. Regulatory frameworks worldwide are also pushing platforms to implement more transparent and accountable moderation practices to protect user rights and data privacy.

socmedb.com