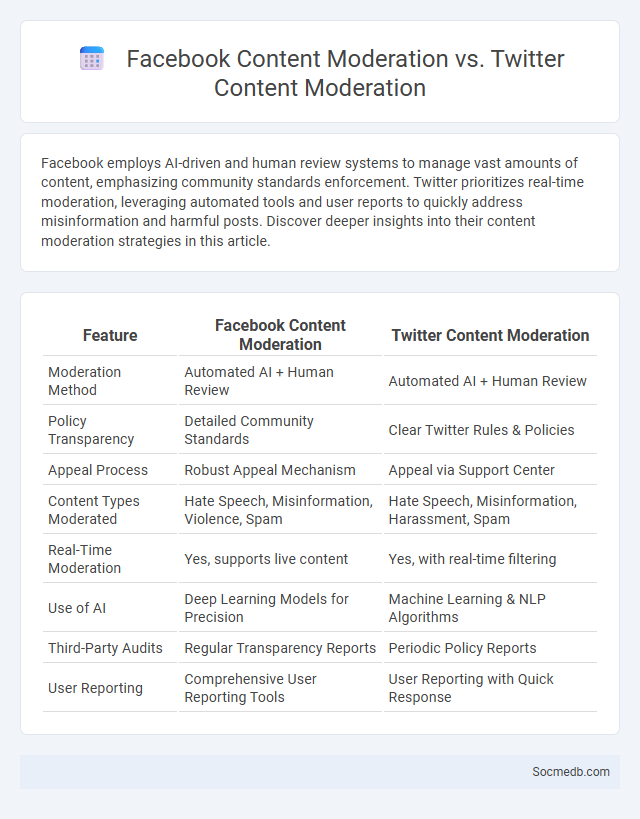

Photo illustration: Facebook Content Moderation vs Twitter Content Moderation

Facebook combines AI-driven detection with human review to enforce its community standards across a vast volume of content. Twitter emphasizes real-time moderation, using automated tools and user reports to act quickly on misinformation and harmful posts. This article compares the two platforms' content moderation strategies.

Comparison Table

| Feature | Facebook Content Moderation | Twitter Content Moderation |
|---|---|---|
| Moderation Method | Automated AI + Human Review | Automated AI + Human Review |
| Policy Transparency | Detailed Community Standards | Clear Twitter Rules & Policies |
| Appeal Process | Robust Appeal Mechanism | Appeal via Support Center |
| Content Types Moderated | Hate Speech, Misinformation, Violence, Spam | Hate Speech, Misinformation, Harassment, Spam |
| Real-Time Moderation | Yes, supports live content | Yes, with real-time filtering |
| Use of AI | Deep Learning Models | Machine Learning & NLP Algorithms |
| Transparency Reporting | Regular Transparency Reports | Periodic Policy Reports |
| User Reporting | Comprehensive User Reporting Tools | User Reporting with Quick Response |

Introduction to Content Moderation

Content moderation keeps social media platforms safe and respectful by filtering harmful, inappropriate, or misleading content. It combines automated algorithms with human reviewers to identify and remove content that violates community guidelines, protecting users from harassment, misinformation, and offensive material. These practices significantly shape what you see on each platform and how your interactions there unfold.

Overview of Facebook’s Content Moderation Policies

Facebook's content moderation policies aim to keep the platform safe by removing harmful content such as hate speech, misinformation, and violent threats, while permitting free expression within its established community standards. The policies are updated regularly to reflect evolving social norms and legal requirements, and they are enforced through AI-driven detection and human review teams. Transparency reports and an appeals process are intended to keep enforcement accountable and fair.

Overview of Twitter’s Content Moderation Policies

Twitter's content moderation policies prohibit hate speech, harassment, and misinformation in order to keep the platform safe and encourage respectful discourse. Enforcement relies on user reporting, automated detection systems, and human review teams that act against abuse and harmful content. Transparency reports and an appeals process support accountability, and the rules are refined over time as new challenges emerge.
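User reports feed human review, but the policies above do not say how reports are prioritized. The following Python class is a purely hypothetical sketch and is not Twitter's actual system. It counts distinct reporters per post and surfaces the most-reported posts first. The class name `ReportQueue` and its methods are illustrative assumptions.

```python
from __future__ import annotations

import heapq
from collections import defaultdict


class ReportQueue:
    """Hypothetical review queue: posts with more distinct reporters are reviewed first."""

    def __init__(self) -> None:
        # Maps post_id -> set of user IDs that reported it; using a set
        # means repeat reports from the same account are counted once.
        self._reporters: dict[str, set[str]] = defaultdict(set)

    def report(self, post_id: str, reporter_id: str) -> None:
        self._reporters[post_id].add(reporter_id)

    def next_batch(self, k: int) -> list[str]:
        # Up to k post IDs, ordered by number of distinct reporters (highest first).
        return heapq.nlargest(k, self._reporters, key=lambda pid: len(self._reporters[pid]))

    def resolve(self, post_id: str) -> None:
        # Drop a post from the queue once a human reviewer has acted on it.
        self._reporters.pop(post_id, None)


q = ReportQueue()
q.report("post-a", "user-1")
q.report("post-b", "user-1")
q.report("post-b", "user-2")
q.report("post-b", "user-2")  # duplicate report from the same user, ignored
print(q.next_batch(2))  # ['post-b', 'post-a']
```

The sketch counts distinct reporters instead of raw reports, so a single account cannot push a post to the front of the queue by reporting it repeatedly. A production system might also weigh the severity of the reported category. That refinement is outside this sketch.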

Key Differences Between Facebook and Twitter Content Moderation

Facebook combines artificial intelligence and human reviewers to enforce detailed community standards across many content types, weighing context and user reports to reach nuanced decisions. Twitter relies heavily on real-time automated detection paired with rapid human intervention, concentrating on fast-moving public conversations and the spread of misinformation. The main distinction is scope versus speed. Facebook applies a comprehensive policy framework to diverse user-generated content, whereas Twitter emphasizes quickly regulating the visibility of tweets to limit harm and misinformation.

Similarities in Facebook and Twitter Moderation Approaches

Facebook and Twitter both combine automated algorithms with human reviewers to identify and remove harmful or inappropriate content. Each platform enforces community guidelines against hate speech, misinformation, and harassment, aiming to protect users while preserving free expression. Knowing these shared foundations makes it easier to understand how moderation works on either network.

Challenges in Social Media Content Moderation

Content moderation on social media faces several significant challenges. Misinformation spreads quickly, hate speech is hard to detect across languages and cultures, and platforms must balance free expression against community safety. Automated algorithms often struggle with context, producing both false positives (acceptable posts flagged or removed) and false negatives (harmful content that is missed). The growing volume of user-generated content demands scalable systems that pair artificial intelligence with human oversight, both to enforce platform policies and to comply with legal regulations.
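A short calculation shows the trade-off between false positives and missed harmful content. The following minimal Python sketch assumes a hypothetical classifier that gives each post a harm score between 0 and 1. It also assumes a set of human-reviewed labels. The scores and labels are made-up toy values, not platform data.

```python
from __future__ import annotations


def errors_at_threshold(scores: list[float], labels: list[bool], threshold: float) -> tuple[int, int]:
    """Return (false_positives, missed_harmful) when posts scoring >= threshold are flagged.

    scores: hypothetical classifier estimates that a post is harmful, in [0, 1]
    labels: True where a human reviewer judged the post harmful
    """
    false_positives = sum(1 for s, harmful in zip(scores, labels) if s >= threshold and not harmful)
    missed_harmful = sum(1 for s, harmful in zip(scores, labels) if s < threshold and harmful)
    return false_positives, missed_harmful


# Toy data for illustration only, not real platform measurements.
scores = [0.95, 0.80, 0.65, 0.40, 0.30, 0.10]
labels = [True, False, True, True, False, False]

for t in (0.2, 0.5, 0.9):
    print(t, errors_at_threshold(scores, labels, t))
# 0.2 (2, 0)
# 0.5 (1, 1)
# 0.9 (0, 2)
```

A stricter threshold removes fewer acceptable posts but lets more harmful ones through. No single threshold eliminates both kinds of error, which is one reason human oversight remains necessary.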

Automated vs. Human Moderation Techniques

Automated moderation uses algorithms and artificial intelligence to scan and filter large volumes of social media content quickly, flagging spam, hate speech, and inappropriate material. Human moderators provide nuanced judgment and contextual understanding. These are essential for subtle cases such as sarcasm, cultural sensitivities, and complex violations that machines may miss. Combining the two approaches balances speed with careful decision-making, yielding moderation that is accurate, timely, and context-aware.
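Confidence-based routing is one way to combine automated and human moderation. The model acts on its own only when it is very confident and sends borderline cases to people. The following Python sketch illustrates the idea. The function name `route` and the threshold values are illustrative assumptions, not settings used by Facebook, Twitter, or any other specific platform.

```python
from enum import Enum


class Decision(Enum):
    AUTO_REMOVE = "auto_remove"    # model is confident the post violates policy
    HUMAN_REVIEW = "human_review"  # uncertain: needs a moderator's judgment
    ALLOW = "allow"                # model is confident the post is acceptable


def route(score: float, remove_at: float = 0.95, review_at: float = 0.60) -> Decision:
    """Route a post based on a hypothetical classifier's harm score in [0, 1].

    The default thresholds are illustrative placeholders, not values from any platform.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    if not 0.0 <= review_at <= remove_at <= 1.0:
        raise ValueError("thresholds must satisfy 0 <= review_at <= remove_at <= 1")
    if score >= remove_at:
        return Decision.AUTO_REMOVE
    if score >= review_at:
        return Decision.HUMAN_REVIEW
    return Decision.ALLOW


for s in (0.98, 0.72, 0.15):
    print(s, route(s).value)
# 0.98 auto_remove
# 0.72 human_review
# 0.15 allow
```

Widening the band between `review_at` and `remove_at` sends more posts to human moderators. This trades speed and cost for context-aware judgment.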

Impact of Content Moderation on User Experience

Content moderation significantly shapes the user experience by filtering harmful or inappropriate material, creating a safer and more welcoming environment on social media platforms. Effective moderation improves content relevance and user trust, which encourages more meaningful interactions and sustained engagement. Your exposure to diverse and respectful conversations depends largely on each platform's moderation policies and how effectively they are enforced.

Legal and Ethical Considerations in Moderation

Effective social media moderation must balance legal frameworks with ethical considerations such as user privacy and freedom of expression. Relevant laws include the U.S. Communications Decency Act, whose Section 230 largely shields platforms from liability for user-posted content and for good-faith moderation decisions. They also include the EU's General Data Protection Regulation (GDPR), which governs how personal data is processed. A platform should adopt clear policies against hate speech, misinformation, and harassment while respecting diverse viewpoints and enforcing those policies transparently. Pairing AI tools with human moderators helps keep content decisions fair and accountable.

The Future of Content Moderation on Social Platforms

The future of content moderation on social platforms will likely depend on advanced AI algorithms that detect harmful content more accurately and quickly, reducing reliance on human moderators. Machine learning models that adapt to evolving linguistic patterns and context could make real-time filtering of misinformation, hate speech, and explicit material more effective. Transparent moderation policies and user feedback mechanisms are expected to strengthen accountability and trust in social networks.

socmedb.com