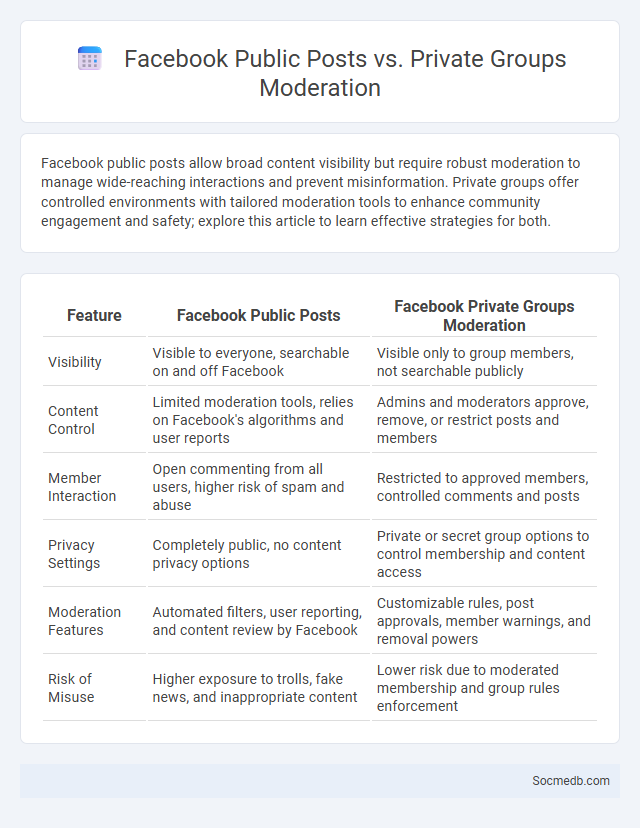

Photo illustration: Facebook Public Posts vs Private Groups Moderation

Facebook public posts give content broad visibility but require robust moderation to manage wide-reaching interactions and curb misinformation. Private groups offer controlled environments with tailored moderation tools that support community engagement and safety. This article compares how moderation works in each and outlines effective strategies for both.

Comparison Table

| Feature | Facebook Public Posts | Facebook Private Groups |
|---|---|---|
| Visibility | Visible to anyone on or off Facebook; can appear in search results | Posts visible only to members; the group itself can be findable in search or hidden |
| Content Control | Authors can delete comments on their own posts; beyond that, enforcement relies on Facebook's automated systems and user reports | Admins and moderators approve, decline, or remove posts and members |
| Member Interaction | Anyone can comment by default, which raises the risk of spam and abuse | Limited to approved members; admins control who can post and comment |
| Privacy Settings | Audience set to Public; the author can change it to a narrower audience at any time | Private groups can be visible or hidden ("hidden" replaced the former "secret" setting) to control who can find and join them |
| Moderation Features | Automated filters, user reporting, and content review by Facebook | Customizable group rules, post approvals, member warnings, and removal powers |
| Risk of Misuse | Higher exposure to trolls, fake news, and inappropriate content | Lower exposure because membership is screened and group rules are enforced |

Understanding Facebook Public Posts

Understanding Facebook public posts is essential for managing your online privacy and digital presence. A post whose audience is set to Public is visible to anyone on or off Facebook, including people who are not your friends, so it is important to review your privacy settings carefully. By choosing the audience for each post, you can make sure your content reaches the right people while keeping sensitive information away from unwanted viewers.

Overview of Facebook Private Groups

Facebook private groups give members a space to share content only with approved participants, which improves privacy and fosters closer online communities. Administrators control membership, post approval, and visibility settings, keeping discussions limited to members and relevant to the group's purpose. Features such as events, file sharing, and group insights support collaboration while protecting member privacy.

Defining Content Moderation on Facebook

Content moderation on Facebook involves systematically reviewing and filtering user-generated content to ensure it complies with Facebook's Community Standards and applicable laws. The process combines automated systems with human reviewers to identify harmful or inappropriate material, such as hate speech, misinformation, and explicit content, and to remove it or limit its spread. These measures are intended to keep the platform safe and respectful for its users.

Key Differences Between Public Posts and Private Groups

Public posts on social media platforms are accessible to a wide audience: anyone can view, comment on, and share them, which increases visibility and engagement potential. Private groups restrict access to approved members, so conversations and content stay within a specific community. This distinction affects privacy settings, content control, and how users interact on platforms like Facebook, Instagram, and LinkedIn.

Privacy and Accessibility in Public vs Private Spaces

Social media users need to manage their privacy settings carefully to protect personal data in both public and private online spaces, where information can quickly reach unintended audiences. Public posts are often visible to a broad range of users, which increases exposure risk, whereas private groups and accounts offer more control over who can see shared content. Accessibility is a separate concern: content should be usable by everyone, including people with disabilities (for example, by adding alt text to images), while clear boundaries between public visibility and private interactions are maintained.

Content Moderation Policies: Public Posts

Content moderation policies for public posts define what you may share, and platforms enforce them to keep harmful or inappropriate content from spreading. These policies cover hate speech, misinformation, and explicit material, and are enforced through automated filters and human review. Understanding the rules helps you post responsibly while protecting the platform's integrity and other users' experience.

Content Moderation Policies: Private Groups

Content moderation policies for private groups enforce the platform's community standards while respecting user privacy and group autonomy. On Facebook, the Community Standards apply inside private groups just as they do to public posts, and administrators can add group-specific rules, monitor posts and member behavior, and act on reports that members send to them. Effective moderation balances preventing harmful content with enabling free expression within closed, interest-based communities.

Tools and Features for Moderators

Social media platforms give moderators tools such as AI-powered content filtering, real-time flagging, and user behavior analytics to identify and manage inappropriate content efficiently. These include customizable keyword filters, automated moderation rules, and reporting dashboards; Facebook group admins, for example, can set keyword alerts and use Admin Assist to automatically decline posts that meet criteria they choose. Role-based permissions for admins and moderators let teams share the workload and respond quickly to violations, helping keep communities safer and more inclusive.
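The keyword filters described above can be illustrated with a minimal sketch. This is a hypothetical Python example, not Facebook's API or the actual logic behind Admin Assist or keyword alerts: `KeywordRules`, `tokenize`, `review_post`, and the three outcomes are illustrative names, assuming a moderator maintains one list of terms that decline a post outright and another that routes it to a human for review.

```python
import re
from dataclasses import dataclass


@dataclass
class KeywordRules:
    """Hypothetical moderator-defined keyword lists (not a Facebook API)."""
    decline: set[str]  # terms that cause a pending post to be declined automatically
    alert: set[str]    # terms that send a pending post to a human moderator


def tokenize(text: str) -> set[str]:
    # Lowercase and split into words so "CRYPTO!" matches "crypto".
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def review_post(text: str, rules: KeywordRules) -> str:
    """Return 'decline', 'review', or 'approve' for a pending post."""
    words = tokenize(text)
    if words & rules.decline:  # any blocked term declines the post
        return "decline"
    if words & rules.alert:    # any alert term escalates to a person
        return "review"
    return "approve"


if __name__ == "__main__":
    rules = KeywordRules(decline={"crypto", "giveaway"}, alert={"election"})
    for post in ["Free CRYPTO giveaway, click now!",
                 "Where can I find election results?",
                 "Photos from Saturday's meetup"]:
        print(review_post(post, rules), "|", post)
```

Splitting rules into "decline" and "review" reflects the mix of automation and human oversight discussed in this article: clear-cut terms are handled automatically, while sensitive topics go to a person. Because the sketch matches whole words only, misspellings, multi-word phrases, and sarcasm slip through, which illustrates why automated filters struggle with context.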

Challenges in Enforcing Community Standards

Social media platforms face significant challenges in enforcing community standards because of the sheer volume of user-generated content and because meaning often depends on cultural and linguistic context. Automated moderation tools struggle with nuanced judgments such as sarcasm or slang, which leads to inconsistent enforcement: sometimes over-removal, sometimes under-enforcement. Ongoing improvement of AI models, combined with human oversight, is needed to balance free expression against the prevention of harmful content across networks like Facebook, X (formerly Twitter), and Instagram.

Future Trends in Facebook Content Moderation

Future trends in Facebook content moderation point to wider use of artificial intelligence and machine learning to detect harmful content faster and more accurately. Transparency tools and user-facing controls are also expected to expand, giving users clearer explanations of enforcement decisions and easier ways to report content. External accountability is evolving as well: the Oversight Board reviews contested moderation decisions, and in January 2025 Meta announced it would replace third-party fact-checking in the United States with a Community Notes model.

socmedb.com