Photo illustration: Facebook Reported Content vs Proactively Detected Content

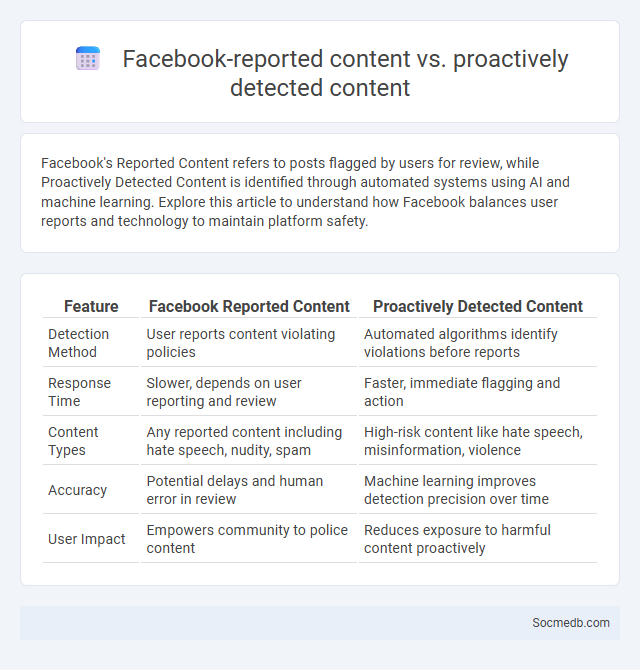

Facebook's Reported Content refers to posts flagged by users for review, while Proactively Detected Content is identified through automated systems using AI and machine learning. Explore this article to understand how Facebook balances user reports and technology to maintain platform safety.

Table of Comparison

| Feature | Facebook Reported Content | Proactively Detected Content |
|---|---|---|
| Detection Method | Users report content they believe violates policies | Automated systems flag likely violations before anyone reports them |
| Response Time | Slower; depends on users reporting and on review capacity | Faster; content can be flagged at or soon after upload |
| Content Types | Any reported content, including hate speech, nudity, and spam | High-risk content such as hate speech, misinformation, and violence |
| Accuracy | Subject to user judgment and to human error in review | Machine learning can improve detection precision over time, but models can still miss context |
| User Impact | Empowers the community to police content | Reduces users' exposure to harmful content proactively |

Introduction to Facebook Content Regulation

Facebook content regulation rests on a framework of community standards designed to address harmful behavior, misinformation, and inappropriate content. Enforcement combines artificial intelligence with human moderators to identify and remove content that violates policies on hate speech, violence, and misinformation. Enforcement actions include content removal and account suspension, and Facebook publishes transparency reports describing how these rules are applied.

Defining Reported Content on Facebook

Reported content on Facebook refers to posts, comments, or profiles that users flag for violating community standards, for example hate speech, harassment, misinformation, nudity, or violent content. Each report enters Facebook's review process, where AI systems and human moderators assess it and, if a violation is confirmed, remove or restrict the material.
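
One way to picture the review process is as a queue that moderators pull from. The sketch below is a minimal illustration, assuming a hypothetical `SEVERITY` table and a hypothetical `ReviewQueue` class; Facebook does not publish how it orders reports, so the weights and the "most severe first, then oldest first" rule are assumptions chosen only to show the data structure.

```python
import heapq
from itertools import count

# Hypothetical severity weights for illustration only; Facebook does not
# publish how it orders its review queues.
SEVERITY = {
    "violence": 3,
    "hate_speech": 3,
    "harassment": 2,
    "nudity": 2,
    "misinformation": 1,
    "spam": 1,
}


class ReviewQueue:
    """Priority queue of user reports: higher severity first, then oldest first."""

    def __init__(self):
        self._heap = []
        self._arrival = count()  # monotonically increasing tie-breaker

    def submit(self, content_id, category):
        # heapq is a min-heap, so negate severity to pop the most severe first.
        severity = SEVERITY.get(category, 0)
        heapq.heappush(self._heap, (-severity, next(self._arrival), content_id, category))

    def next_for_review(self):
        """Return (content_id, category) for the next report, or None if empty."""
        if not self._heap:
            return None
        _, _, content_id, category = heapq.heappop(self._heap)
        return content_id, category
```

With this ordering, a violence report submitted after two spam reports is reviewed first, while the two spam reports keep their arrival order.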

What is Proactively Detected Content?

Proactively detected content is material that a platform identifies, and then removes or flags, before any user reports it, using automated technologies such as artificial intelligence and machine learning. It includes harmful posts such as hate speech, misinformation, or violent imagery, detected through algorithmic scanning and behavioral analysis. Because detection does not wait for a report, violating posts can be addressed quickly, often before many users have seen them.
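
A common way to act on an automated score before anyone reports a post is to route it through confidence thresholds. The sketch below assumes a classifier that outputs a violation probability between 0 and 1; the function `route_upload` and both threshold values are hypothetical, not Facebook's actual settings.

```python
from enum import Enum


class Action(Enum):
    REMOVE = "remove"
    HUMAN_REVIEW = "human_review"
    ALLOW = "allow"


# Hypothetical thresholds chosen for illustration only.
REMOVE_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.60


def route_upload(violation_score: float) -> Action:
    """Decide what happens to a new post before any user has reported it.

    violation_score is assumed to be a classifier's estimated probability
    that the post violates policy.
    """
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= REMOVE_THRESHOLD:
        return Action.REMOVE          # very confident: act automatically
    if violation_score >= REVIEW_THRESHOLD:
        return Action.HUMAN_REVIEW    # uncertain: let a person decide
    return Action.ALLOW
```

The middle band is the design choice that matters: it keeps automatic removal for high-confidence cases and sends borderline ones to human moderators.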

Facebook’s Content Moderation Policies Explained

Facebook's content moderation policies enforce community standards designed to prevent hate speech, misinformation, and violent content. The platform employs AI algorithms alongside human reviewers to identify and remove posts that violate these guidelines. Transparency reports reveal ongoing efforts to refine these policies in response to emerging threats and user feedback.

The Role of User Reports in Identifying Harmful Content

User reports play a critical role in identifying harmful content on social media platforms by surfacing offensive posts, hate speech, and misinformation that automated systems have not caught. Algorithms alone often miss nuanced context, such as sarcasm or cultural references, which makes user feedback essential for effective content moderation and for upholding community standards. This collaborative approach improves platform safety and reduces the spread of harmful material.
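
To illustrate how user reports can cover cases a model misses, the sketch below escalates a post when either the model is suspicious or enough different people report it. The function name `needs_human_review` and both default thresholds are hypothetical; they show the logic, not any platform's real policy.

```python
from typing import Iterable


def needs_human_review(model_score: float, reporter_ids: Iterable[str],
                       model_threshold: float = 0.6, min_reporters: int = 3) -> bool:
    """Escalate a post if the classifier is suspicious OR enough distinct users report it.

    The thresholds are hypothetical placeholders, not Facebook's real values.
    """
    # Count each reporter once so a single account cannot force escalation alone.
    distinct_reporters = len(set(reporter_ids))
    return model_score >= model_threshold or distinct_reporters >= min_reporters
```

The `or` is the point: a post the model scores as benign (say 0.2) still reaches a reviewer once three different people report it.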

AI and Automation in Proactive Content Detection

AI-driven algorithms enable proactive content detection by analyzing patterns and context in social media posts to identify harmful or inappropriate content before it spreads. Automated systems leverage natural language processing (NLP) and machine learning to detect hate speech, misinformation, and explicit material in real time. These technologies enhance platform safety by reducing manual moderation efforts and enabling faster response to policy violations.
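
As a toy illustration of machine learning applied to text, the sketch below implements a multinomial Naive Bayes classifier with add-one smoothing using only the standard library. It is not how Facebook's systems work (production moderation relies on far larger models); the class name, the `tokenize` helper, and the spam/ok training examples are hypothetical.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesTextClassifier:
    """Multinomial Naive Bayes with Laplace (add-one) smoothing."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # label -> word -> count
        self.doc_counts = Counter()              # label -> number of documents
        self.vocab = set()

    def fit(self, texts, labels):
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def predict(self, text):
        total_docs = sum(self.doc_counts.values())
        vocab_size = len(self.vocab)
        best_label, best_score = None, -math.inf
        for label, n_docs in self.doc_counts.items():
            score = math.log(n_docs / total_docs)  # log prior
            total_words = sum(self.word_counts[label].values())
            for token in tokenize(text):
                if token not in self.vocab:
                    continue  # ignore words never seen in training
                count = self.word_counts[label][token]
                score += math.log((count + 1) / (total_words + vocab_size))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


clf = NaiveBayesTextClassifier().fit(
    [
        "buy cheap followers now click link",
        "click this link to win free money",
        "lovely photos from our family trip",
        "happy birthday hope you have a great day",
    ],
    ["spam", "spam", "ok", "ok"],
)
```

Calling `clf.predict("click the link for free followers")` returns `"spam"`, and `clf.predict("great photos from the trip")` returns `"ok"`.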

Challenges in Content Moderation on Facebook

Content moderation on Facebook faces significant challenges because of the vast volume of user-generated content posted every day, which makes it difficult to accurately identify and remove harmful or misleading posts. The platform struggles to balance freedom of expression with enforcing community standards, which often leads to controversies over censorship and bias. Advanced AI tools are used to detect violations, but they frequently miss context or nuance, resulting in both false positives and false negatives.
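
False positives (acceptable posts removed) and false negatives (violating posts missed) are usually summarized as precision and recall. The sketch below computes both from hypothetical counts; the numbers are invented for illustration and do not come from any Facebook report.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Return (precision, recall) for a moderation classifier.

    precision = share of flagged posts that really violated policy
    recall    = share of violating posts that were actually flagged
    """
    flagged = true_positives + false_positives
    violating = true_positives + false_negatives
    precision = true_positives / flagged if flagged else 0.0
    recall = true_positives / violating if violating else 0.0
    return precision, recall


# Hypothetical counts: 90 correct flags, 10 wrongful flags, 30 missed violations.
precision, recall = precision_recall(90, 10, 30)
```

Here precision is 90 / 100 = 0.9 and recall is 90 / 120 = 0.75: raising the flagging threshold tends to trade recall for precision, and lowering it does the reverse.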

Comparing Reported vs Proactively Detected Content

Comparing reported and proactively detected content shows how each approach affects platform safety and user experience. Reported content relies on users identifying and flagging inappropriate posts, which can lead to delayed responses and underreporting. Proactive detection uses algorithms and AI to identify harmful content in real time, often before anyone reports it. For users, proactive monitoring can reduce exposure to offensive material faster than reporting alone.
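
A simple way to quantify this comparison is the share of actioned content that was found before any user reported it; Meta's enforcement reports describe a figure of this kind as a "proactive rate". The sketch below computes it from hypothetical counts; the function name and the example numbers are illustrative assumptions, not published data.

```python
def proactive_rate(found_proactively: int, found_via_reports: int) -> float:
    """Percentage of actioned items that were detected before any user report."""
    total = found_proactively + found_via_reports
    if total == 0:
        raise ValueError("no actioned content to measure")
    return 100.0 * found_proactively / total


# Hypothetical example: 950 items caught automatically, 50 only after user reports.
rate = proactive_rate(950, 50)
```

In this example the rate is 95.0, meaning user reports accounted for the remaining 5 percent of actioned items.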

Impact of Content Moderation on Community Standards

Content moderation significantly influences community standards by ensuring that online interactions remain respectful and safe, thereby fostering a positive digital environment. Effective moderation reduces the spread of harmful content such as hate speech, misinformation, and harassment, which can otherwise degrade the quality of social discourse. As a user, your experience is shaped by these policies, which aim to balance freedom of expression with the need for maintaining a supportive and inclusive community.

Future Trends in Facebook Content Management

Future trends in Facebook content management emphasize AI-driven personalization, enabling you to deliver highly targeted and engaging posts by analyzing user behavior and preferences. Enhanced video content, including live streaming and AR filters, is poised to dominate, increasing interaction and retention on your page. Automation tools will streamline content scheduling and performance tracking, maximizing efficiency and audience reach.

socmedb.com