Photo illustration: Facebook Misinformation Policy vs Twitter Misinformation Policy

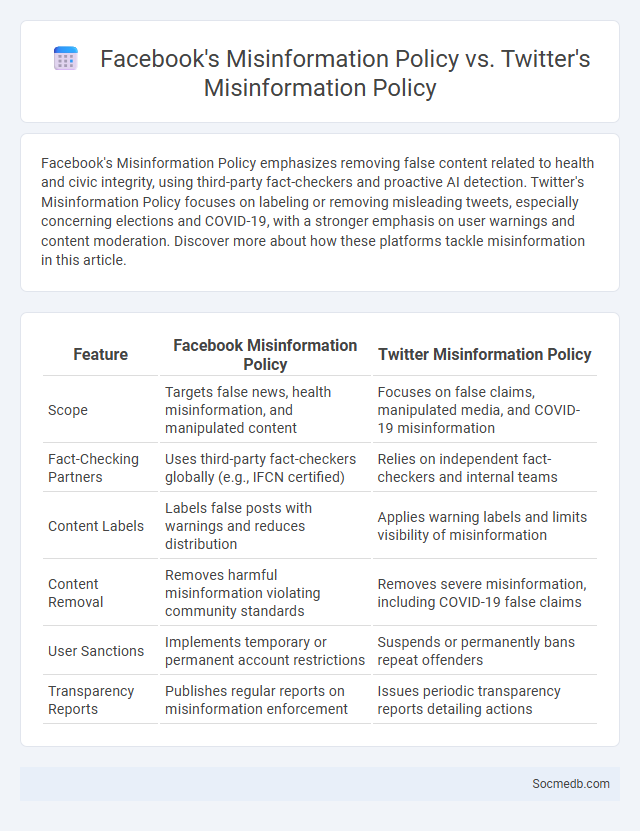

Facebook's Misinformation Policy emphasizes removing false content related to health and civic integrity, using third-party fact-checkers and proactive AI detection. Twitter's Misinformation Policy focuses on labeling or removing misleading tweets, especially concerning elections and COVID-19, with a stronger emphasis on user warnings and content moderation. Discover more about how these platforms tackle misinformation in this article.

Table of Comparison

| Feature | Facebook Misinformation Policy | Twitter Misinformation Policy |
|---|---|---|
| Scope | Targets false news, health misinformation, and manipulated content | Focuses on false claims, manipulated media, and COVID-19 misinformation |
| Fact-Checking Partners | Uses third-party fact-checkers globally (e.g., IFCN certified) | Relies on independent fact-checkers and internal teams |
| Content Labels | Labels false posts with warnings and reduces distribution | Applies warning labels and limits visibility of misinformation |
| Content Removal | Removes harmful misinformation violating community standards | Removes severe misinformation, including COVID-19 false claims |
| User Sanctions | Implements temporary or permanent account restrictions | Suspends or permanently bans repeat offenders |
| Transparency Reports | Publishes regular reports on misinformation enforcement | Issues periodic transparency reports detailing actions |

Overview of Misinformation Policies

Social media platforms implement comprehensive misinformation policies designed to curb the spread of false information and protect public discourse. These policies typically include fact-checking partnerships, content warnings, and removal of harmful or misleading posts to maintain the integrity of information shared on their networks. Understanding these measures helps you evaluate content critically and promotes a safer online environment.

Facebook’s Approach to Misinformation

Facebook employs advanced AI algorithms and a team of fact-checkers to identify and reduce the spread of misinformation on its platform. Your content is subjected to stringent checks, with false information being flagged, demoted in news feeds, or removed entirely to protect users from inaccurate news. Collaboration with third-party organizations enhances Facebook's ability to maintain a safer and more reliable information ecosystem.

Twitter’s Misinformation Policy Explained

Twitter's Misinformation Policy addresses the spread of false information by targeting content that could cause harm, such as misleading health claims, election-related falsehoods, and manipulated media. You can expect prompt labeling, reduction in visibility, or removal of tweets that violate these standards to protect public discourse. The policy prioritizes transparency and user safety while collaborating with fact-checkers to maintain accurate information on the platform.

Key Differences Between Facebook and Twitter Policies

Facebook's policies emphasize comprehensive content regulation through detailed community standards that address hate speech, misinformation, and user privacy. Twitter prioritizes moderation of real-time public conversation, with a stronger focus on combating harassment and misinformation through prompt intervention. Facebook also enforces stricter data privacy controls and detailed user data management policies, whereas Twitter emphasizes transparency and accountability in tweet visibility and content takedowns. Each platform takes its own approach to content moderation, reflecting its distinct user engagement model and regulatory challenges.

Content Moderation: Definition and Importance

Content moderation involves reviewing and managing user-generated content on social media platforms to ensure it complies with community guidelines and legal standards. Effective content moderation protects users from harmful material such as hate speech, misinformation, and explicit content, fostering a safer online environment. Your experience on social media is enhanced when platforms maintain a balanced approach to free expression and user safety through rigorous moderation practices.

Enforcement Mechanisms on Facebook

Facebook employs advanced enforcement mechanisms to uphold community standards by utilizing AI-powered content detection systems and a robust reporting infrastructure. Its automated algorithms identify and remove harmful content, such as hate speech, misinformation, and violent material, while human moderators review flagged posts for contextual accuracy. Enforcement effectiveness is enhanced through user feedback loops and periodic transparency reports detailing actions taken against policy violations.
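The combination of automated detection, user reports, and human review described above can be pictured as a simple triage step. The sketch below is purely illustrative and is not Facebook's actual system: the `Post` fields, the `triage` function, and every threshold value are hypothetical assumptions made up for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


@dataclass
class Post:
    post_id: str
    classifier_score: float  # hypothetical model output in [0, 1]
    user_reports: int = 0    # hypothetical count of user reports


# Hypothetical thresholds, chosen only for illustration.
REMOVE_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.60
REPORTS_FOR_REVIEW = 3


def triage(post: Post) -> Action:
    """Route a post to automatic removal, human review, or no action."""
    # Very high automated confidence: remove without waiting for review.
    if post.classifier_score >= REMOVE_THRESHOLD:
        return Action.REMOVE
    # Uncertain score or enough user reports: send to a human moderator.
    if (post.classifier_score >= REVIEW_THRESHOLD
            or post.user_reports >= REPORTS_FOR_REVIEW):
        return Action.HUMAN_REVIEW
    return Action.ALLOW
```

In this sketch, only high-confidence cases are handled automatically, while borderline scores and heavily reported posts go to a person who can judge context.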

Enforcement Strategies on Twitter

Effective enforcement strategies on Twitter involve a combination of automated detection tools and human moderation to identify and address policy violations such as hate speech, misinformation, and harassment. Twitter uses algorithms to flag harmful content quickly, while enforcement teams review reports from users to ensure accurate action. Your account safety depends on these robust enforcement practices designed to maintain a respectful and secure online environment.

Challenges in Content Moderation

Content moderation on social media faces challenges such as detecting harmful misinformation, hate speech, and graphic violence at scale while balancing user privacy and free expression. Automated systems struggle with context and nuance, leading to both false positives and negatives that impact user experience and platform integrity. Ensuring transparency and consistent enforcement of community standards remains a critical issue for maintaining trust and safety online.
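To make the trade-off between false positives and false negatives concrete, the following minimal sketch computes both error rates from a confusion matrix. The function name `error_rates` and the counts in the usage lines are invented for illustration and do not come from any platform's reported data.

```python
def error_rates(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Compute error rates for a hypothetical misinformation classifier.

    tp: violating posts correctly flagged
    fp: acceptable posts wrongly flagged (false positives)
    fn: violating posts that were missed (false negatives)
    tn: acceptable posts correctly left alone
    """
    acceptable = fp + tn
    violating = fn + tp
    return {
        # Share of acceptable posts that were wrongly actioned.
        "false_positive_rate": fp / acceptable if acceptable else 0.0,
        # Share of violating posts that slipped through.
        "false_negative_rate": fn / violating if violating else 0.0,
    }


# Made-up counts for illustration only.
rates = error_rates(tp=80, fp=20, fn=40, tn=860)
print(rates)
```

Lowering the flagging threshold usually reduces missed violations but increases wrongful flags, which is why neither rate can be driven to zero without affecting the other.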

Impact on User Experience and Public Discourse

Social media platforms significantly shape user experience by personalizing content through algorithms that analyze your interactions and preferences, enhancing engagement but sometimes creating echo chambers. Public discourse is transformed as these platforms facilitate rapid information exchange and diverse viewpoints, yet they also contribute to misinformation and polarization due to the speed and volume of shared content. Balancing algorithmic curation with content moderation is essential to improve social media's impact on both individual experience and societal conversations.

Future Trends in Platform Misinformation Policies

Social media platforms are increasingly adopting advanced AI-driven technologies to detect and mitigate misinformation, enhancing real-time content verification and user reporting systems. You can expect stricter enforcement of transparency guidelines and collaborative efforts with fact-checking organizations to curb the spread of false information. These future trends emphasize user trust and platform accountability to create a safer digital environment.

socmedb.com
