Photo illustration: Instagram Community Guidelines vs Twitter Rules

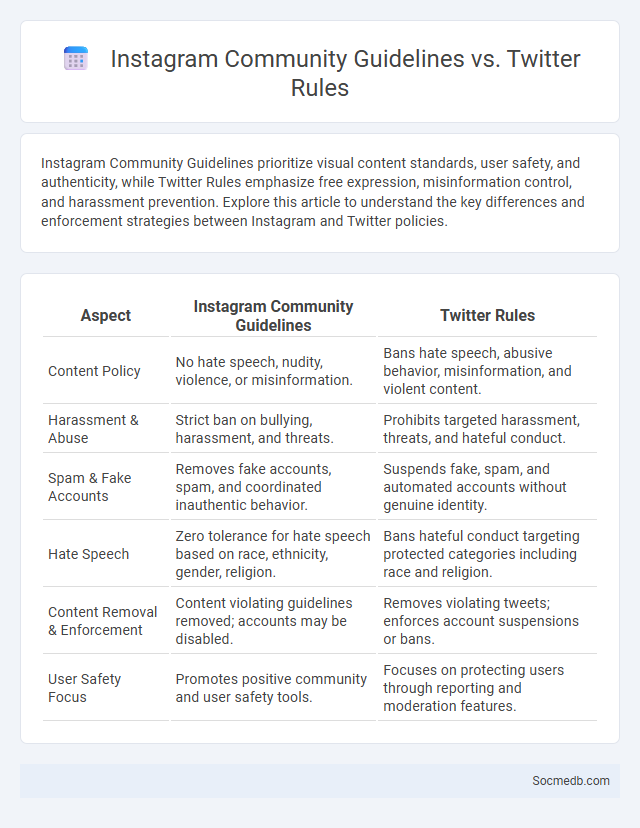

Instagram Community Guidelines prioritize visual content standards, user safety, and authenticity, while Twitter Rules emphasize free expression, misinformation control, and harassment prevention. Explore this article to understand the key differences and enforcement strategies between Instagram and Twitter policies.

Table of Comparison

| Aspect | Instagram Community Guidelines | Twitter Rules |
|---|---|---|
| Content Policy | No hate speech, nudity, violence, or misinformation. | Bans hate speech, abusive behavior, misinformation, and violent content. |
| Harassment & Abuse | Strict ban on bullying, harassment, and threats. | Prohibits targeted harassment, threats, and hateful conduct. |
| Spam & Fake Accounts | Removes fake accounts, spam, and coordinated inauthentic behavior. | Suspends fake, spam, and automated accounts without genuine identity. |
| Hate Speech | Zero tolerance for hate speech based on race, ethnicity, gender, religion. | Bans hateful conduct targeting protected categories including race and religion. |
| Content Removal & Enforcement | Content violating guidelines removed; accounts may be disabled. | Removes violating tweets; enforces account suspensions or bans. |
| User Safety Focus | Promotes positive community and user safety tools. | Focuses on protecting users through reporting and moderation features. |

Overview of Social Media Community Guidelines

Social media community guidelines are a set of rules designed to promote respectful, safe, and positive interactions among users across platforms like Facebook, Instagram, Twitter, and LinkedIn. These guidelines typically address issues such as hate speech, harassment, misinformation, and content moderation to ensure compliance with legal standards and platform policies. Understanding and following these rules helps protect your account from suspension and fosters a constructive online environment.

Instagram Community Guidelines: Key Principles

Instagram Community Guidelines emphasize respect, safety, and authenticity to foster a positive environment. Users must avoid hate speech, harassment, and misinformation while sharing genuine content that aligns with Instagram's policies. Enforcement involves content moderation through AI tools and user reporting to maintain platform integrity.

Twitter Rules: Essential Policies

Twitter's essential policies regulate user behavior by prohibiting hate speech, harassment, and misinformation to maintain a safe platform. Violations of Twitter Rules can result in account suspension, content removal, or permanent bans. Understanding and following these guidelines ensures your Twitter experience remains secure and respectful for all users.

Commonalities Among Social Media Guidelines

Social media guidelines commonly emphasize maintaining respectful communication, protecting user privacy, and adhering to legal regulations. They promote transparency by advising clear disclosure of affiliations and discourage sharing of misleading or harmful content. Consistent enforcement of these rules helps foster a safe and trustworthy online environment across platforms like Facebook, Twitter, and LinkedIn.

Differences Between Instagram and Twitter Policies

Instagram emphasizes visual content and enforces strict guidelines on nudity and violent imagery, while Twitter prioritizes text-based communication, allowing a broad range of opinions but prohibiting hate speech and misinformation. On Instagram, your posts must adhere to community standards that protect personal privacy and promote positive interactions, whereas Twitter's policies focus heavily on preventing harassment and promoting transparency through real-time content moderation. Understanding these platform-specific rules helps you navigate posting restrictions and content visibility effectively.

Enforcement Mechanisms: Instagram vs Twitter

Instagram enforces its content policies through automated AI detection systems combined with user flagging, prioritizing the prompt removal of misinformation, hate speech, and graphic content. Twitter relies on a hybrid approach that combines machine learning algorithms with human review teams to moderate tweets, focusing especially on harassment, disinformation, and election-related content. Both platforms use escalating penalties such as warnings, temporary suspensions, and permanent bans to ensure policy compliance and maintain community standards.

Content Moderation Approaches

Content moderation approaches on social media platforms include automated algorithms, human review, and community flagging systems, each designed to identify and manage harmful or inappropriate content. Machine learning models analyze text, images, and videos to detect hate speech, misinformation, and spam with increasing accuracy. Human moderators intervene in complex cases requiring context-sensitive judgment to maintain platform safety and compliance with legal standards.
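
The three-way split described above (automated detection, human review, community flagging) can be sketched as a simple routing function. This is a minimal illustration, not either platform's actual system: the `Post` type, the `triage` function, and every threshold value are hypothetical and chosen only to show how the pieces might fit together.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen for illustration only.
AUTO_REMOVE_THRESHOLD = 0.95   # model is near-certain the post violates policy
HUMAN_REVIEW_THRESHOLD = 0.60  # model is unsure; context-sensitive judgment needed
FLAG_ESCALATION_COUNT = 3      # user flags needed to force a human review


@dataclass
class Post:
    post_id: str
    model_score: float  # assumed classifier confidence in [0, 1] that the post violates policy
    user_flags: int = 0  # number of community reports received


def triage(post: Post) -> str:
    """Route a post to 'remove', 'human_review', or 'allow'."""
    # Automated path: very high model confidence leads to removal.
    if post.model_score >= AUTO_REMOVE_THRESHOLD:
        return "remove"
    # Ambiguous model score or enough community flags goes to a human moderator.
    if (post.model_score >= HUMAN_REVIEW_THRESHOLD
            or post.user_flags >= FLAG_ESCALATION_COUNT):
        return "human_review"
    # Otherwise the post stays up.
    return "allow"
```

In this sketch, community flags can only escalate a post to human review, never remove it directly, which reflects the idea that complex or contested cases need context-sensitive judgment.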

User Privacy and Data Protection

User privacy and data protection are critical concerns on social media platforms, where personal information is often collected, shared, and stored. Implementing robust encryption, strict access controls, and transparent privacy policies can significantly reduce the risk of data breaches and unauthorized use. To safeguard your digital identity, regularly review privacy settings and stay informed about how your data is being utilized.

Penalties for Violations: Instagram vs Twitter

Instagram and Twitter penalize violations in broadly similar, tiered ways. Instagram primarily uses content removal, temporary account suspensions, and permanent bans for repeated infractions of its Community Guidelines. Twitter likewise applies tweet deletion, temporary account locks, or permanent suspensions, often tied to policy breaches such as hate speech or misinformation. Understanding each platform's enforcement mechanisms helps you navigate its community standards and minimize the risk of penalties.
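
A tiered penalty scheme of this kind can be sketched as a lookup on an ordered ladder. The ladder below, the `next_penalty` function, and the one-step-per-violation rule are assumptions made for illustration; neither platform publishes its enforcement logic in this form.

```python
# Hypothetical penalty ladder, ordered from least to most severe.
PENALTY_LADDER = ["content_removal", "temporary_suspension", "permanent_ban"]


def next_penalty(prior_violations: int) -> str:
    """Return the penalty for a new violation, given the count of earlier ones."""
    if prior_violations < 0:
        raise ValueError("prior_violations must be non-negative")
    # Each earlier violation moves one step up the ladder, capped at the top tier.
    index = min(prior_violations, len(PENALTY_LADDER) - 1)
    return PENALTY_LADDER[index]
```

For example, under these assumptions a first violation yields `next_penalty(0)`, which is content removal, while any account with two or more earlier violations reaches the permanent ban tier.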

Future Trends in Social Media Regulation

Future trends in social media regulation point toward stronger data privacy measures and stricter content moderation policies, driven by legislative frameworks such as the EU's Digital Services Act and proposed U.S. transparency bills. Artificial intelligence and machine learning will play a critical role in identifying harmful content and ensuring compliance with evolving regulations. Platforms are expected to adopt more transparent algorithms and give users greater control over their data and online experience, addressing concerns around misinformation and user safety.

socmedb.com
