Photo illustration: Reddit Troll Accounts vs Main Accounts

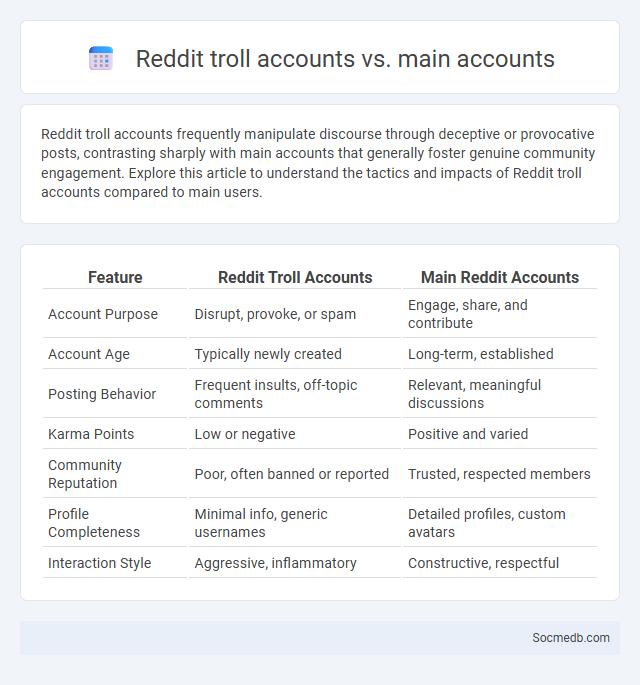

Reddit troll accounts frequently manipulate discourse through deceptive or provocative posts, in sharp contrast to main accounts, which generally foster genuine community engagement. Explore this article to understand the tactics and impact of Reddit troll accounts compared with main accounts.

Table of Comparison

| Feature | Reddit Troll Accounts | Main Reddit Accounts |
|---|---|---|
| Account Purpose | Disrupt, provoke, or spam | Engage, share, and contribute |
| Account Age | Typically newly created | Long-term, established |
| Posting Behavior | Frequent insults, off-topic comments | Relevant, meaningful discussions |
| Karma Points | Low or negative | Positive and varied |
| Community Reputation | Poor, often banned or reported | Trusted, respected members |
| Profile Completeness | Minimal info, generic usernames | Detailed profiles, custom avatars |
| Interaction Style | Aggressive, inflammatory | Constructive, respectful |

Understanding Reddit’s Account Types

Reddit does not split users into formally separate account types: everyone signs up for the same kind of account. What differs is how an account is used and which roles it holds. Moderator status is a role granted within a specific subreddit, bringing moderation tools for that community, rather than a distinct account type, and businesses typically participate through an ordinary account or through Reddit's advertising tools. Understanding these roles helps you interpret what a given account can do and how much weight its activity carries on the platform.

Defining Troll Accounts: Intent and Impact

Troll accounts are social media profiles created with the intent to provoke, disrupt, or manipulate conversations by posting inflammatory, off-topic, or false information. These accounts often amplify misinformation, fuel online harassment, and degrade the quality of digital discourse, impacting user experience and community trust. Understanding troll behavior is essential for platforms to implement effective moderation strategies and protect genuine user engagement.

Characteristics of Main Accounts on Reddit

Main accounts on Reddit typically have high post and comment karma, indicating active engagement and community trust. These accounts often participate consistently across several related subreddits, building expertise and influence within niche topics. Their profile history shows a balance of content creation and meaningful interaction, which underpins credibility and a sustained community presence.

What Counts as Trolling on Reddit?

Trolling on Reddit involves posting inflammatory, off-topic, or disruptive comments with the intent to provoke emotional responses or derail discussions. Examples include deliberately posting misleading information, attacking users personally, or spamming threads with irrelevant content. Reddit's site-wide rules prohibit harassment and spam, and many subreddits add their own rules against trolling and bad-faith participation, so what counts as trolling can vary from one community to another.

Differences Between Troll and Main Accounts

Troll accounts on social media often use fake identities to spread misinformation, provoke conflicts, or manipulate discussions, while main accounts represent real users sharing authentic experiences and personal content. Your main account is tied to your genuine online identity and interactions, promoting trust and meaningful connections. Troll accounts lack credibility and are primarily designed to disrupt or deceive within online communities.

Motivations Behind Creating Troll Accounts

Creating troll accounts is often motivated by a desire to provoke reactions, spread misinformation, or influence public opinion on social media platforms. Some individuals seek anonymity to express controversial or unpopular views without personal repercussions, while others aim to disrupt communities for entertainment or political gain. Understanding these motivations can help you identify troll accounts and mitigate their impact.

Community Responses to Trolling Behavior

Community responses to trolling behavior on social media significantly impact user experience and platform culture. Effective strategies include collective reporting, supportive comment threads, and moderation interventions that discourage negativity and reinforce positive interactions. Your engagement in these responses can help cultivate a safer, more respectful online environment.

Detecting Troll Accounts: Signals and Red Flags

Detecting troll accounts on social media involves analyzing behavior patterns such as high-frequency posting, use of inflammatory language, and repetitive sharing of controversial content. Key signals include anonymous or newly created profiles, disproportionate activity during coordinated campaigns, and consistent targeting of specific individuals or groups with harassment. Advanced tools leverage AI algorithms to spot unnatural engagement metrics and linguistic cues, helping platforms mitigate troll-driven misinformation and toxicity.
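The signals above can be combined into a simple rule-based check. The following is a minimal sketch, not how Reddit or any real detection tool works: the `AccountSnapshot` fields, the thresholds (30 days, 50 posts per day, a 0.5 flagged ratio), and the function names are all hypothetical and chosen only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class AccountSnapshot:
    """Hypothetical summary of an account; not a Reddit API schema."""
    age_days: int
    total_karma: int
    posts_last_24h: int
    flagged_comment_ratio: float  # share of recent comments flagged as inflammatory, 0.0 to 1.0


def red_flags(acct: AccountSnapshot) -> list[str]:
    """Return the names of the red flags this account trips (illustrative thresholds)."""
    flags = []
    if acct.age_days < 30:
        flags.append("new_account")
    if acct.total_karma < 0:
        flags.append("negative_karma")
    if acct.posts_last_24h > 50:
        flags.append("high_frequency_posting")
    if acct.flagged_comment_ratio > 0.5:
        flags.append("inflammatory_language")
    return flags


def needs_review(acct: AccountSnapshot, min_flags: int = 2) -> bool:
    """Suggest human review only when several signals coincide."""
    return len(red_flags(acct)) >= min_flags


suspect = AccountSnapshot(age_days=3, total_karma=-40, posts_last_24h=120, flagged_comment_ratio=0.8)
established = AccountSnapshot(age_days=2000, total_karma=15000, posts_last_24h=4, flagged_comment_ratio=0.02)
print(red_flags(suspect), needs_review(suspect))
print(red_flags(established), needs_review(established))
```

Requiring at least two flags before review is a deliberate choice in this sketch: any single signal, such as a new account, is common among legitimate users and would produce many false positives on its own.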

Moderation Strategies for Trolling on Reddit

Effective moderation strategies for trolling on Reddit include implementing automated filters that detect offensive language and spam, empowering community moderators to enforce clear rules consistently, and encouraging users to report harmful behavior promptly. Utilizing AI-driven tools can help identify patterns of troll activity while fostering constructive discussions within subreddits. Your proactive engagement combined with these measures enhances the overall user experience and maintains a positive platform environment.
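An automated filter of the kind described above might look roughly like the following. This is a simplified sketch under stated assumptions: the blocked-term list, the link limit, and the `filter_comment` function are hypothetical placeholders, and the code does not reproduce Reddit's AutoModerator or any real moderation tool.

```python
import re

# Hypothetical placeholders; a real community would maintain its own lists.
BLOCKED_TERMS = ["idiot", "moron"]
SPAM_LINK_LIMIT = 3

# Match blocked terms as whole words, ignoring case.
_term_pattern = re.compile(
    r"\b(" + "|".join(re.escape(term) for term in BLOCKED_TERMS) + r")\b",
    re.IGNORECASE,
)
_link_pattern = re.compile(r"https?://\S+")


def filter_comment(text: str) -> str:
    """Return 'remove', 'hold_for_review', or 'approve' for a comment."""
    # Many links in a single comment is treated as likely spam.
    if len(_link_pattern.findall(text)) > SPAM_LINK_LIMIT:
        return "remove"
    # Offensive terms are held for a human moderator rather than auto-removed.
    if _term_pattern.search(text):
        return "hold_for_review"
    return "approve"


print(filter_comment("Great point, thanks for sharing!"))
print(filter_comment("You are an IDIOT"))
```

Holding flagged comments for review instead of deleting them keeps a human moderator in the loop, which matters because keyword matching cannot tell quotation or sarcasm apart from a genuine attack.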

Ethical and Legal Implications of Trolling

Trolling on social media platforms raises significant ethical concerns regarding harassment, misinformation, and emotional harm to individuals or groups. Legally, conduct associated with trolling, such as defamation, harassment, or threats, can lead to civil lawsuits or, in some jurisdictions, criminal charges under harassment, stalking, or cyberbullying statutes. In the United States, Section 230 of the Communications Decency Act generally shields platforms from liability for user-posted content; it does not protect the individuals who post it. Platforms often implement content moderation policies to mitigate trolling, balancing free speech with the protection of users.

socmedb.com
