Photo illustration: vote manipulation vs shadowbanning

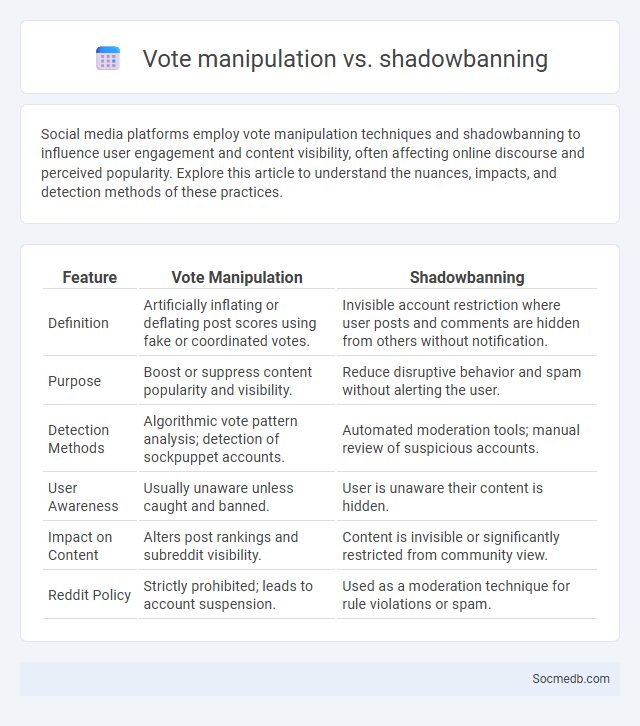

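Vote manipulation and shadowbanning both shape engagement and content visibility on social media, but they come from opposite directions: vote manipulation is carried out by users or coordinated groups to distort rankings, while shadowbanning is a moderation measure applied by platforms. Both affect online discourse and perceived popularity. Explore this article to understand the nuances, impacts, and detection methods of these practices.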

Table of Comparison

| Feature | Vote Manipulation | Shadowbanning |
|---|---|---|
| Definition | Artificially inflating or deflating post scores using fake or coordinated votes. | Invisible account restriction where user posts and comments are hidden from others without notification. |
| Purpose | Boost or suppress content popularity and visibility. | Reduce disruptive behavior and spam without alerting the user. |
| Detection Methods | Algorithmic vote pattern analysis; detection of sockpuppet accounts. | Automated moderation tools; manual review of suspicious accounts. |
| User Awareness | Usually unaware unless caught and banned. | User is unaware their content is hidden. |
| Impact on Content | Alters post rankings and subreddit visibility. | Content is invisible or significantly restricted from community view. |
| Reddit Policy | Strictly prohibited; leads to account suspension. | Used as a moderation technique for rule violations or spam. |

Understanding Vote Manipulation: Definition and Types

Vote manipulation on social media involves artificially influencing post scores, rankings, or poll outcomes through tactics such as fake accounts, bots, or coordinated voting campaigns. Common types include ballot stuffing, where one actor casts many votes through duplicate or fake accounts, and vote buying, which entails incentivizing users to vote a certain way. Identifying these manipulations is crucial for maintaining the integrity of online polls and social engagement metrics.

What is Shadowbanning? How It Works in Online Communities

Shadowbanning is a technique used in online communities and social media platforms to limit the visibility of a user's content without their knowledge. When you are shadowbanned, your posts or comments become hidden or less visible to others, reducing interaction and reach without an outright ban or any notification. This method helps moderators control spam, inappropriate behavior, or violations of community guidelines without prompting the offender to simply create a new account.

Vote Manipulation vs Shadowbanning: Key Differences

Vote manipulation involves artificially inflating or deflating engagement metrics like likes, upvotes, or downvotes to mislead algorithms and users about content popularity. Shadowbanning discreetly limits the visibility of your posts or comments without notifying you, effectively reducing organic reach and interaction. Understanding these key differences helps you identify tactics undermining authentic social media influence and engagement.

The Impact of Vote Manipulation on Online Discourse

Vote manipulation on social media platforms disrupts authentic online discourse by artificially inflating or deflating the popularity of content, skewing public perception and engagement metrics. This manipulation undermines the integrity of democratic participation in digital spaces, leading to misinformation proliferation and reduced trust in user-generated feedback mechanisms. Platforms grappling with vote manipulation face challenges in maintaining unbiased algorithms and fostering genuine community interactions.

How Shadowbanning Affects User Engagement

Shadowbanning significantly reduces user engagement by limiting the visibility of posts without notifying the user, causing content to reach fewer followers and diminishing interaction rates. This hidden suppression leads to decreased likes, comments, and shares, which can lower motivation to create content and stifle community growth. Platforms employing shadowbanning disrupt organic reach, ultimately impacting user satisfaction and platform loyalty.

Common Signs of Vote Manipulation and Detection Methods

Common signs of vote manipulation on social media include sudden spikes in engagement, repetitive posting patterns, and an unusually high number of similar comments or reactions from new or inactive accounts. Detection methods leverage machine learning algorithms to identify anomalous activity, analyze network patterns for coordinated behavior, and use sentiment analysis to spot unnatural content trends. Platforms often employ real-time monitoring tools combined with user reports to mitigate the impact of fraudulent voting and maintain integrity.
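Two of the signs above, sudden spikes and votes concentrated in new accounts, can be checked with very simple statistics. The following is a minimal sketch, not any platform's actual detector: the function names, the threshold of 5, and the 7-day account-age cutoff are all assumptions chosen for illustration.

```python
from statistics import median


def find_vote_spikes(hourly_votes, threshold=5.0):
    """Return indices of hours whose vote count sits far above the median.

    Uses the median absolute deviation (MAD), which is distorted less by the
    spike itself than a mean and standard deviation test would be.
    The threshold value is an illustrative assumption.
    """
    med = median(hourly_votes)
    mad = median(abs(v - med) for v in hourly_votes)
    if mad == 0:
        mad = 1.0  # flat series: fall back to a unit scale to avoid dividing by zero
    return [i for i, v in enumerate(hourly_votes) if (v - med) / mad > threshold]


def new_account_share(voter_ages_days, max_age_days=7):
    """Fraction of voters whose accounts are younger than max_age_days."""
    if not voter_ages_days:
        return 0.0
    young = sum(1 for age in voter_ages_days if age < max_age_days)
    return young / len(voter_ages_days)


# Hypothetical data: votes per hour on one post, and voter account ages in days.
hourly = [12, 15, 11, 14, 13, 240, 16, 12]
spikes = find_vote_spikes(hourly)
share = new_account_share([1, 2, 400, 3, 900, 1])
print(spikes, round(share, 2))
```

Neither signal is conclusive by itself, since a post can spike for legitimate reasons. A real system would likely combine signals like these with network analysis and user reports before acting.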

Strategies Platforms Use to Combat Vote Manipulation

Social media platforms implement advanced algorithms and AI-driven tools to detect and remove fake accounts, disinformation, and coordinated inauthentic behavior aimed at vote manipulation. These strategies include real-time monitoring, partnership with fact-checkers, and transparency reports to ensure election integrity. Your engagement can be safeguarded by these proactive efforts designed to maintain authentic and reliable discourse during election periods.

Identifying and Preventing Shadowbanning

Shadowbanning on social media can significantly reduce your content's visibility without an official notice, and it is often triggered by violating platform guidelines or engaging in spam-like behavior. Identifying shadowbanning involves checking for engagement drops, using diagnostic tools, or viewing your posts from a different account to verify limited reach. Prevent shadowbanning by adhering to community rules, avoiding excessive hashtags, and maintaining authentic interactions to ensure consistent audience engagement.
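The two manual checks described above, comparing what a second account can see and watching for an engagement drop, can be sketched as plain functions. This is an illustrative sketch only: it assumes you have already collected the lists of post IDs and engagement counts by hand, and the function names are hypothetical rather than part of any platform API.

```python
def hidden_from_public(author_visible_ids, public_visible_ids):
    """Post IDs the author can see but a second (or logged-out) viewer cannot."""
    return sorted(set(author_visible_ids) - set(public_visible_ids))


def engagement_drop(baseline, recent):
    """Relative drop of mean recent engagement versus the baseline mean.

    Returns a value from 0.0 (no drop) to 1.0 (engagement fell to zero).
    """
    if not baseline or not recent:
        raise ValueError("both baseline and recent need at least one value")
    base_mean = sum(baseline) / len(baseline)
    if base_mean == 0:
        return 0.0  # nothing to drop from
    recent_mean = sum(recent) / len(recent)
    return max(0.0, 1 - recent_mean / base_mean)


# Hypothetical observations gathered manually.
missing = hidden_from_public(["a1", "a2", "a3"], ["a1"])
drop = engagement_drop(baseline=[40, 50, 60], recent=[4, 6, 5])
print(missing, round(drop, 2))
```

A large drop combined with posts that a second account cannot see is a stronger hint than either observation alone, though ordinary ranking changes can also produce an engagement drop.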

Ethical Implications of Vote Manipulation and Shadowbanning

Vote manipulation and shadowbanning on social media platforms raise significant ethical concerns by undermining democratic processes and silencing user voices without transparency. These practices distort genuine user engagement, erode trust, and can disproportionately affect marginalized communities by limiting their online visibility. Protecting your digital rights requires advocating for accountability and clearer policies against such covert moderation tactics.

Building Transparent Moderation Policies for Fair Voting Systems

Building transparent moderation policies enhances trust in social media voting systems by clearly defining rules for content review and user participation. Incorporating audit trails and community feedback mechanisms ensures accountability and reduces bias in decision-making processes. Transparent moderation fosters fair voting environments that encourage user engagement and uphold platform integrity.
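One way to make an audit trail tamper-evident is to chain each moderation record to the previous one with a hash, so that editing an old entry breaks verification. The sketch below assumes a simple in-memory list and hypothetical field names (`action`, `target`, `reason`); it illustrates the idea and is not a description of how any particular platform stores moderation logs.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry
FIELDS = ("action", "target", "reason", "prev")


def _digest(body):
    """SHA-256 over a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log, action, target, reason):
    """Append a moderation record that commits to the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"action": action, "target": target, "reason": reason, "prev": prev_hash}
    log.append({**body, "hash": _digest(body)})
    return log


def verify_log(log):
    """Return True only if every entry is unmodified and correctly chained."""
    prev_hash = GENESIS
    for entry in log:
        body = {key: entry[key] for key in FIELDS}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical moderation actions.
audit_log = []
append_entry(audit_log, "remove_post", "post_123", "spam")
append_entry(audit_log, "restrict_account", "user_456", "vote manipulation")
print(verify_log(audit_log))
```

Publishing only the latest hash would let outside reviewers confirm that the log they are later shown has not been rewritten, without exposing the entries themselves.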

socmedb.com
