Photo illustration: TikTok banned content vs Reddit rules

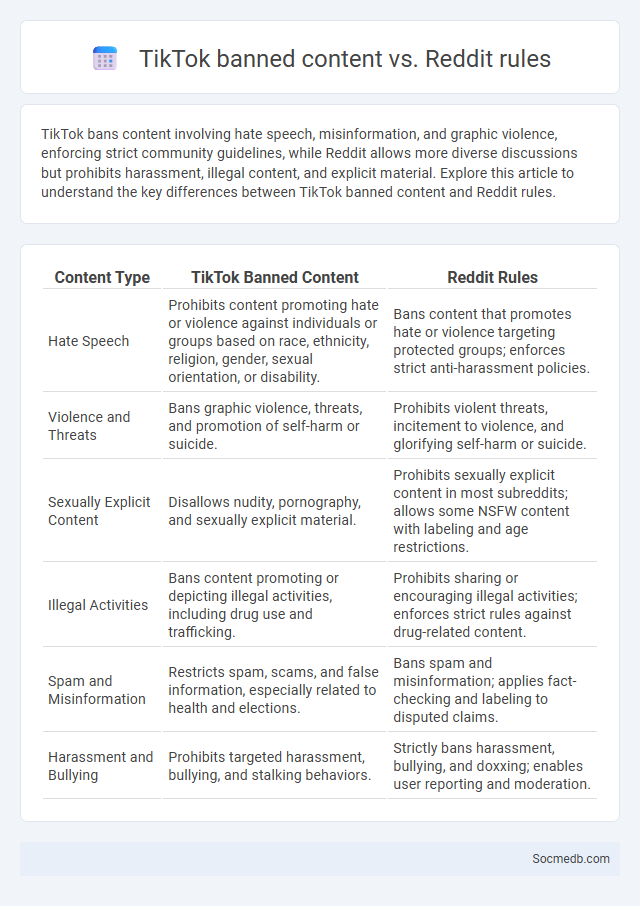

TikTok bans content involving hate speech, misinformation, and graphic violence under strict community guidelines, while Reddit allows a wider range of discussion but prohibits harassment and illegal content and requires explicit material to be labeled NSFW. This article covers the key differences between TikTok's banned content and Reddit's rules.

Table of Comparison

| Content Type | TikTok Banned Content | Reddit Rules |
|---|---|---|
| Hate Speech | Prohibits content promoting hate or violence against individuals or groups based on race, ethnicity, religion, gender, sexual orientation, or disability. | Bans content that promotes hate or violence targeting protected groups; enforces strict anti-harassment policies. |
| Violence and Threats | Bans graphic violence, threats, and promotion of self-harm or suicide. | Prohibits violent threats, incitement to violence, and glorifying self-harm or suicide. |
| Sexually Explicit Content | Disallows nudity, pornography, and sexually explicit material. | Allows adult content when it is labeled NSFW and age-restricted; many subreddits prohibit it under their own rules. |
| Illegal Activities | Bans content promoting or depicting illegal activities, including drug use and trafficking. | Prohibits illegal content and using the site to arrange prohibited transactions, including drug sales. |
| Spam and Misinformation | Restricts spam, scams, and false information, especially related to health and elections. | Bans spam and content manipulation; disputed claims are handled largely through subreddit rules and moderator action. |
| Harassment and Bullying | Prohibits targeted harassment, bullying, and stalking behaviors. | Strictly bans harassment, bullying, and doxxing; enables user reporting and moderation. |

Overview of Platform Policies: TikTok vs Reddit

TikTok enforces strict, platform-wide content guidelines that emphasize community safety and copyright protection and ban behaviors such as hate speech and misinformation. Reddit's policies center on diverse, community-driven content: a sitewide content policy sets the baseline, and each subreddit adds its own rules, with a focus on transparency and user moderation. Understanding these policies helps you create and engage with content on each platform without committing violations.

Defining Banned Content on TikTok

Banned content on TikTok includes material that violates community guidelines such as hate speech, graphic violence, harassment, nudity, and misinformation. TikTok enforces strict policies to remove content promoting self-harm, illegal activities, and extremist behavior to maintain a safe platform environment. Understanding these restrictions is crucial for creators to avoid account suspension and ensure compliance with TikTok's content moderation standards.

Reddit’s Rule Enforcement and Content Guidelines

Reddit enforces its content guidelines to maintain community standards and user safety across the platform. These guidelines prohibit harassment, hate speech, and the sharing of illegal content, with moderators actively reviewing reports and applying removals or bans as necessary. Compliance with Reddit's policies supports a respectful environment while promoting open discussion within diverse subreddits.

Key Differences in Prohibited Content

TikTok and Reddit differ most in how they treat adult content and in where rules are set. TikTok disallows nudity and sexually explicit material across the whole platform, while Reddit permits adult content that is labeled NSFW and leaves many further restrictions to individual subreddits. TikTok also places strong emphasis on removing content related to dangerous challenges and health misinformation. Understanding these differences helps you follow each platform's community guidelines and keep your content compliant.

Community Moderation on Reddit vs TikTok’s Automated Systems

Reddit incorporates community moderation through a network of volunteer moderators who enforce subreddit-specific rules, fostering tailored and context-aware content control. TikTok relies heavily on automated systems powered by AI algorithms to detect and remove violating content, enabling rapid but sometimes less nuanced moderation at scale. The contrast highlights Reddit's human-centric approach versus TikTok's technology-driven model in managing community standards and user behavior.
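The contrast between automated and human moderation can be pictured as a routing decision. The sketch below is illustrative only: the thresholds, the `Post` type, and the `violation_score` field are assumptions made for this example, not details of either platform's actual system.

```python
from dataclasses import dataclass

# Hypothetical thresholds; neither platform publishes real values.
AUTO_REMOVE_THRESHOLD = 0.9
HUMAN_REVIEW_THRESHOLD = 0.5


@dataclass
class Post:
    post_id: str
    violation_score: float  # assumed classifier output between 0.0 and 1.0


def route_post(post: Post) -> str:
    """Pick a moderation path from the (assumed) classifier score."""
    if post.violation_score >= AUTO_REMOVE_THRESHOLD:
        return "auto_remove"   # fast automated path, little context
    if post.violation_score >= HUMAN_REVIEW_THRESHOLD:
        return "human_review"  # slower path with a person judging context
    return "allow"


posts = [Post("a", 0.97), Post("b", 0.62), Post("c", 0.08)]
actions = {p.post_id: route_post(p) for p in posts}
print(actions)
```

Lowering the review threshold sends more posts to people, which moves a system toward the context-aware end of the spectrum; raising it leans on automation for speed.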

Case Studies: Viral Bans on TikTok and Reddit

High-profile bans involving TikTok and Reddit show how hard it is to moderate content while keeping users engaged. India's government banned TikTok nationwide in June 2020, removing the app from one of its largest markets; this was a regulatory decision rather than a platform moderation action. On Reddit, r/WallStreetBets briefly went private during the January 2021 GameStop stock surge as its moderators coped with an unprecedented influx of new users, illustrating the tension between keeping a community manageable and preserving open discussion. These cases underscore the balance platforms must strike between regulatory compliance and community trust to sustain long-term growth.

Appeals and Content Restoration Processes

Both platforms let you appeal content removals and account suspensions, which helps maintain fairness and user trust. An appeal triggers a structured review in which the original decision is checked against platform policy and reversed if it was made in error. Efficient appeals and content restoration processes are essential for protecting legitimate expression while still combating misinformation and harmful content.
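The structured review described above can be pictured as a small state machine. This is a minimal sketch under stated assumptions: the state names, the `TRANSITIONS` table, and the `review` helper are hypothetical and do not reflect the internal workflow of TikTok or Reddit.

```python
from enum import Enum


class AppealState(Enum):
    SUBMITTED = "submitted"
    UNDER_REVIEW = "under_review"
    RESTORED = "restored"  # removal reversed, content comes back
    UPHELD = "upheld"      # removal stands


# Allowed transitions in this hypothetical workflow.
TRANSITIONS = {
    AppealState.SUBMITTED: {AppealState.UNDER_REVIEW},
    AppealState.UNDER_REVIEW: {AppealState.RESTORED, AppealState.UPHELD},
    AppealState.RESTORED: set(),
    AppealState.UPHELD: set(),
}


def advance(current: AppealState, target: AppealState) -> AppealState:
    """Move an appeal to the target state, rejecting invalid jumps."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target


def review(violates_policy: bool) -> AppealState:
    """Run one appeal through the workflow given the reviewer's finding."""
    state = advance(AppealState.SUBMITTED, AppealState.UNDER_REVIEW)
    outcome = AppealState.UPHELD if violates_policy else AppealState.RESTORED
    return advance(state, outcome)


print(review(violates_policy=False).value)
```

Making the transitions explicit means an appeal cannot be marked as restored without passing through review, which is the property a structured process is meant to guarantee.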

Cultural Impact of Content Restrictions

Content restrictions on social media shape cultural expression: when they limit diverse voices and creative freedoms, they can suppress minority perspectives and cultural innovation. Algorithms that enforce these rules often favor mainstream content, which can homogenize cultural narratives and reduce the richness of global digital discourse. The tension between regulation and free expression feeds ongoing debates about censorship, cultural preservation, and the democratization of online spaces.

User Reactions to Content Bans

User reactions to content bans on social media vary widely and include frustration, support, and calls for transparency. Content removals often prompt debates about censorship, freedom of speech, and platform responsibility. Platforms must respond to these reactions carefully to maintain community trust and adherence to their policies.

Future Trends in Content Moderation for Social Platforms

Emerging technologies like artificial intelligence and machine learning are changing content moderation by enabling faster detection of harmful or inappropriate material. Social platforms will increasingly rely on automated tools combined with human oversight to balance free expression and safety. Privacy-focused algorithms and transparent moderation policies are likely to become industry standards, enhancing user trust and compliance with global regulations.

socmedb.com
