Photo illustration: Trolls vs Comment Section

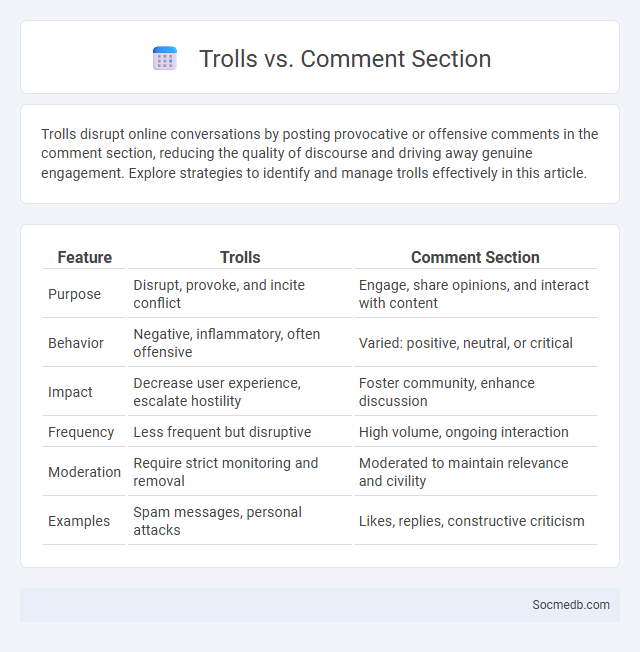

Trolls disrupt online conversations by posting provocative or offensive comments, lowering the quality of discourse and driving away genuine engagement. This article explores strategies to identify and manage trolls effectively.

Comparison Table

| Feature | Trolls | Comment Section |
|---|---|---|
| Purpose | Disrupt, provoke, and incite conflict | Engage, share opinions, and interact with content |
| Behavior | Negative, inflammatory, often offensive | Varied: positive, neutral, or critical |
| Impact | Degrade user experience and escalate hostility | Foster community and enhance discussion |
| Frequency | Less frequent but disruptive | High volume, ongoing interaction |
| Moderation | Require strict monitoring and removal | Moderated to maintain relevance and civility |
| Examples | Spam messages, personal attacks | Likes, replies, constructive criticism |

Understanding Internet Trolls: Definition and Motivation

Internet trolls are individuals who deliberately provoke, disrupt, or upset online communities by posting inflammatory or off-topic messages to elicit emotional responses. Dealing with trolls starts with recognizing their motivations, which often include a desire for attention, boredom, or a need to exert control. Effective ways to manage trolls include refusing to engage and using platform tools to report or block disruptive users.

Anatomy of a Comment Section: Purpose and Pitfalls

The comment section on social media platforms serves as a critical space for user engagement, enabling conversations, feedback, and community building. It also carries pitfalls, such as misinformation, cyberbullying, and off-topic discussion, that can undermine constructive interaction. Moderation tools and clear community guidelines help maintain a positive and purposeful comment environment.

Trolls vs Commenters: How Interactions Escalate

Online interactions often escalate when trolls deliberately provoke others by posting inflammatory or off-topic comments to elicit emotional responses. Commenters who engage with trolls may unintentionally fuel conflicts, leading to more aggressive exchanges and polarizing discussions. Managing your responses and recognizing trolls' tactics helps prevent escalation and promotes healthier social media environments.

The Evolution of Online Trolling Behavior

Online trolling has evolved from simple prank-like comments into complex, coordinated campaigns that target individuals and communities across multiple platforms. Recommendation algorithms and anonymity features on social media let trolls spread misinformation rapidly, influencing public opinion and destabilizing social discourse. The rise of deepfake technology and AI-driven bots is widely seen as further expanding the scope and impact of trolling, making detection and prevention increasingly difficult.

Psychological Impact of Trolls in Comment Sections

Trolls in social media comment sections can cause significant psychological distress, fostering anxiety, self-doubt, and lowered self-esteem among users. Persistent exposure to hostile comments can raise stress levels and worsen symptoms of depression or social withdrawal. Understanding this psychological impact is crucial for developing effective moderation strategies and healthier online communities.

Moderation Strategies: Managing Trolls Effectively

Effective moderation of trolls on social media starts with clear community guidelines that define unacceptable behavior, enforced consistently. Automated tools, such as keyword filters and machine-learning classifiers, help detect and remove harmful content quickly while reducing manual effort. You can maintain a positive online environment by combining proactive monitoring with transparent communication, which deters trolls and protects genuine user engagement.
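The automated filtering described above can be sketched as a simple rule-based filter. This is a minimal sketch under stated assumptions. The `RULES` categories and patterns, the `moderate` function, and the allow/hold/remove actions are all hypothetical. Keyword matching is also a much simpler technique than the machine-learning classifiers mentioned above. The sketch shows how written guidelines might be turned into checks that pass a comment, queue it for human review, or remove it.

```python
import re
from dataclasses import dataclass

# Hypothetical guideline rules: each category maps to regex patterns.
# Real platforms use far larger rule sets and ML classifiers; these are placeholders.
RULES = {
    "spam": [r"\bbuy now\b", r"https?://\S+"],
    "harassment": [r"\bidiot\b", r"\bloser\b"],
}


@dataclass
class Verdict:
    action: str    # "allow", "hold" (queue for human review), or "remove"
    matched: list  # guideline categories whose patterns matched


def moderate(comment: str, rules: dict = RULES) -> Verdict:
    """Apply the hypothetical rules to one comment and pick an action."""
    text = comment.lower()
    matched = [category for category, patterns in rules.items()
               if any(re.search(p, text) for p in patterns)]
    if "harassment" in matched:
        return Verdict("remove", matched)  # clear guideline violation
    if matched:
        return Verdict("hold", matched)    # ambiguous: let a human decide
    return Verdict("allow", matched)
```

For example, `moderate("You are an idiot")` returns a `Verdict` with action `"remove"`, while a comment that only contains a link is held for review. Keyword lists are easy to evade and prone to false positives, which is why this sketch puts a human review step between allowing and removing.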

Free Speech vs Toxicity: A Delicate Balance

Social media platforms struggle to balance protecting free speech against curbing toxicity, since unrestricted expression often leads to harassment and misinformation. Algorithms and moderation policies play a critical role in filtering harmful content while respecting user rights, but the challenge is to do so without censorship that undermines democratic discourse. Platforms that use transparent, community-driven guidelines are often reported to see higher user trust and engagement alongside fewer toxic interactions.

Community Guidelines: Setting Boundaries for Engagement

Community guidelines establish clear boundaries for respectful and constructive interactions on social media platforms, ensuring a safe environment for all users. These rules address content standards, including prohibitions on hate speech, harassment, and misinformation, which help maintain the integrity of online communities. Enforcing consistent moderation policies supports positive engagement and protects users from harmful behaviors, fostering trust and inclusivity.

Real-Life Examples: Trolls Disrupting Comment Sections

Trolls frequently disrupt comment sections on social media platforms such as Twitter, Facebook, and Instagram by posting inflammatory or off-topic messages designed to provoke others. Real-life examples include organized attacks during political debates, where trolls flood comments to spread misinformation or harass users, significantly impacting genuine conversations. You can protect your online community by using moderation tools and encouraging positive engagement to minimize the effects of these disruptive behaviors.
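Flooding of the kind described above can be approximated with two simple signals: one account posting too many comments in a short time, and many accounts posting the same text. The sketch below assumes both signals. `FloodDetector`, `coordinated_duplicates`, and their thresholds are hypothetical illustrations, not how Twitter, Facebook, or Instagram actually detect coordinated attacks.

```python
from collections import defaultdict, deque


class FloodDetector:
    """Sliding-window check: flags a user who posts more than `max_posts`
    comments within `window` seconds. Thresholds are illustrative assumptions."""

    def __init__(self, max_posts: int = 5, window: float = 60.0):
        self.max_posts = max_posts
        self.window = window
        self._history = defaultdict(deque)  # user_id -> timestamps of recent posts

    def is_flooding(self, user_id: str, timestamp: float) -> bool:
        posts = self._history[user_id]
        # Discard timestamps that have slid out of the window.
        while posts and timestamp - posts[0] >= self.window:
            posts.popleft()
        posts.append(timestamp)
        return len(posts) > self.max_posts


def coordinated_duplicates(comments, min_accounts: int = 3):
    """Return normalized texts posted by at least `min_accounts` distinct users.

    `comments` is an iterable of (user_id, text) pairs. Normalization here is
    only lowercasing and collapsing whitespace (a hypothetical choice).
    """
    authors = defaultdict(set)
    for user_id, text in comments:
        key = " ".join(text.lower().split())
        authors[key].add(user_id)
    return [text for text, users in authors.items() if len(users) >= min_accounts]


if __name__ == "__main__":
    detector = FloodDetector(max_posts=3, window=10)
    print([detector.is_flooding("u1", t) for t in (0, 1, 2, 3)])
    # prints [False, False, False, True]
    batch = [("a", "Vote NO!"), ("b", "vote  no!"), ("c", "Vote no!"), ("d", "Nice post")]
    print(coordinated_duplicates(batch))  # prints ['vote no!']
```

A per-user rate limit alone misses attacks spread across many accounts, which is why the sketch pairs it with a cross-account duplicate check. Real campaigns often vary their wording, so exact-match grouping like this catches only the crudest cases.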

Building Healthy Online Discussions Amid Troll Activity

Effective strategies for building healthy online discussions amid troll activity include establishing clear community guidelines that promote respectful dialogue and addressing disruptive behavior quickly with moderation tools. Encouraging users to engage with evidence-based content and fostering digital literacy helps curb the spread of misinformation and defuse inflammatory comments. Platforms such as Reddit and Twitter use automated moderation, including AI-driven systems, to detect and mitigate trolling and create safer environments for meaningful conversations.

socmedb.com