Photo illustration: Community Notes vs Block

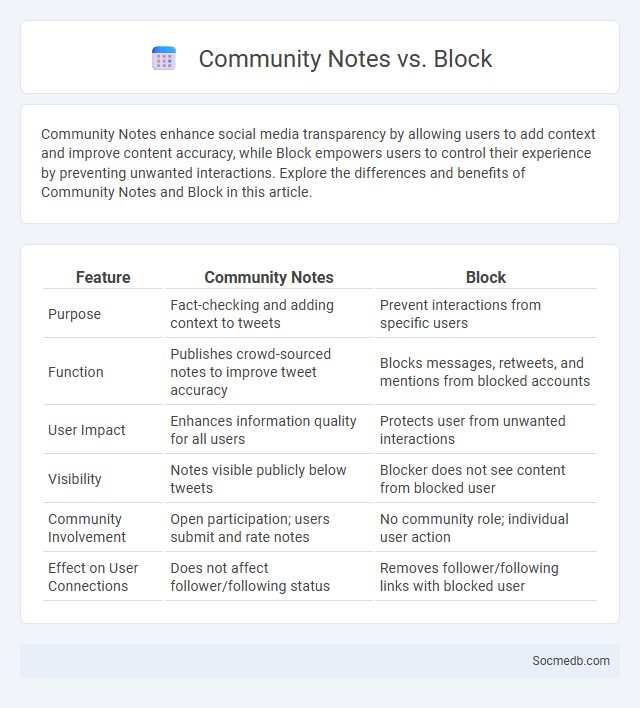

Community Notes improve transparency on social media by letting users add context that clarifies or corrects posts, while Block lets users control their own experience by shutting out unwanted interactions. This article compares what each tool does and where each is most useful.

Table of Comparison

| Feature | Community Notes | Block |
|---|---|---|
| Purpose | Fact-checking and adding context to posts | Preventing interactions with specific users |
| Function | Publishes crowdsourced notes that add context to a post | Restricts messages, reposts, and mentions from blocked accounts |
| User Impact | Improves information quality for everyone who sees the post | Protects the blocker from unwanted interactions |
| Visibility | Notes appear publicly below a post once enough contributors rate them helpful | The blocker no longer sees content from the blocked account |
| Community Involvement | Enrolled contributors write and rate notes | None; an individual action by one user |
| Effect on User Connections | Does not affect follower/following status | Removes follower/following links between the two accounts |

Introduction to Community Notes

Community Notes is a collaborative fact-checking feature, originally launched on Twitter (now X), that lets users add context to posts. It relies on crowdsourced contributions to identify and counter misinformation, so corrections come from users rather than from the platform alone. Taking part, whether by writing notes or rating them, can also encourage readers to examine claims more critically and helps build a better-informed community.

What is a Block in Social Platforms?

A block is a privacy tool on social media platforms that stops a specific user from interacting with your profile, viewing your posts, or contacting you; the exact restrictions vary by platform. Blocking someone lets you control your online space and avoid unwanted interactions or harassment, making your experience safer and more personalized.
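To make the effects of a block concrete, here is a minimal sketch of how a platform might model blocks alongside follows. The `SocialGraph` class and its methods are hypothetical and do not reflect any real platform's API; the sketch assumes a simplified, symmetric rule in which a block removes follow links in both directions and hides posts and messages across the block either way.

```python
from dataclasses import dataclass, field


@dataclass
class SocialGraph:
    """Hypothetical in-memory model of follows and blocks (not a real platform API)."""

    follows: set[tuple[str, str]] = field(default_factory=set)  # (follower, followee)
    blocks: set[tuple[str, str]] = field(default_factory=set)   # (blocker, blocked)

    def is_blocked_between(self, a: str, b: str) -> bool:
        # Simplifying assumption: a block in either direction restricts both accounts.
        return (a, b) in self.blocks or (b, a) in self.blocks

    def follow(self, follower: str, followee: str) -> bool:
        # A new follow is refused if either account has blocked the other.
        if self.is_blocked_between(follower, followee):
            return False
        self.follows.add((follower, followee))
        return True

    def block(self, blocker: str, blocked: str) -> None:
        # Record the block and drop follow links in both directions.
        self.blocks.add((blocker, blocked))
        self.follows.discard((blocker, blocked))
        self.follows.discard((blocked, blocker))

    def can_message(self, sender: str, recipient: str) -> bool:
        return not self.is_blocked_between(sender, recipient)

    def visible_posts(self, viewer: str, posts: list[tuple[str, str]]) -> list[tuple[str, str]]:
        # Posts are (author, text) pairs; hide any post whose author is across a block.
        return [post for post in posts if not self.is_blocked_between(viewer, post[0])]


if __name__ == "__main__":
    g = SocialGraph()
    g.follow("alice", "bob")
    g.follow("bob", "alice")
    g.block("alice", "bob")
    print(g.follows)                      # set()
    print(g.can_message("bob", "alice"))  # False
    print(g.follow("bob", "alice"))       # False
```

Real platforms apply finer-grained rules (for example, what a blocked user can see while logged out), so treat the symmetric check as an illustration of the idea rather than a description of any specific service.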

Community Notes vs Block: Key Differences

Community Notes foster transparency by allowing users to add context to posts, improving information accuracy and reducing misinformation. Block features directly prevent interactions from specific users, enhancing your online safety by limiting exposure to unwanted content. Understanding these differences helps you effectively manage your social media experience for both information quality and personal security.

How Community Notes Enhance User Discourse

Community Notes improve discourse by letting a diverse group of contributors add context to content and fact-check it collaboratively on platforms such as X (formerly Twitter). Because a note is shown only when contributors who have tended to disagree in the past both rate it helpful, the system favors context that holds up across perspectives rather than the view of one side. This crowdsourced approach can improve accuracy, reduce the spread of misinformation, and create a more informed, constructive environment through collective moderation.
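The "agreement across perspectives" requirement can be illustrated with a deliberately simplified rule. X's published approach uses matrix factorization over rater and note ratings; the sketch below is not that algorithm. It assumes raters are already sorted into hypothetical perspective groups and uses made-up thresholds (`MIN_RATINGS_PER_GROUP`, `MIN_HELPFUL_SHARE`) purely to show why one-sided support is not enough for a note to appear.

```python
from collections import defaultdict

# Hypothetical thresholds, chosen only for illustration.
MIN_RATINGS_PER_GROUP = 2
MIN_HELPFUL_SHARE = 0.6


def note_status(ratings: list[tuple[str, bool]]) -> str:
    """Classify a note from (rater_group, rated_helpful) pairs.

    Simplified "bridging" rule (an assumption, not X's real algorithm):
    a note is shown only if raters in at least two different groups
    each rate it helpful often enough.
    """
    by_group: dict[str, list[bool]] = defaultdict(list)
    for group, helpful in ratings:
        by_group[group].append(helpful)

    agreeing_groups = 0
    for votes in by_group.values():
        enough_votes = len(votes) >= MIN_RATINGS_PER_GROUP
        if enough_votes and sum(votes) / len(votes) >= MIN_HELPFUL_SHARE:
            agreeing_groups += 1

    return "shown" if agreeing_groups >= 2 else "needs more ratings"


if __name__ == "__main__":
    cross_group = [("A", True), ("A", True), ("B", True), ("B", False), ("B", True)]
    one_sided = [("A", True)] * 5
    print(note_status(cross_group))  # shown
    print(note_status(one_sided))    # needs more ratings
```

The one-sided example fails even with five helpful ratings, which is the point of the design: volume from a single group cannot push a note into public view.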

The Purpose and Limitations of Blocking

Blocking on social media serves mainly to protect users from harassment, unwanted interactions, and privacy invasions by restricting specific accounts from viewing or contacting them. It supports mental well-being and online safety by giving individuals control over their digital environment. However, blocking has limits: it cannot prevent indirect harm, such as third parties sharing your content or the blocked person creating a new account, and it can sometimes lead to social isolation or misunderstandings among users.

Impact on Transparency: Community Notes vs Block

Community Notes increase transparency on social media by letting users collaboratively fact-check posts and add context, which raises accountability and reduces misinformation. Blocking, by contrast, does nothing for transparency: it only changes what the blocker and the blocked account see of each other, and relying on it heavily can create echo chambers and reduce exposure to diverse perspectives. You can help build a better-informed social media environment by engaging with Community Notes rather than relying solely on blocking.

Moderation: Community-driven vs Platform-controlled

Social media moderation balances community-driven approaches, in which users report and regulate content (as with Community Notes), against platform-controlled systems that rely on automated algorithms and internal policies. Your experience and safety on a platform depend on how well these methods work together to filter harmful content, maintain respectful dialogue, and uphold platform standards. Effective moderation requires a deliberate blend of human judgment and technological enforcement to foster a trustworthy online environment.

User Experience: Notes Versus Blocking

The user experience on social media improves when platforms offer options short of outright blocking. A Community Note, for example, lets a user add context to or correct a post without severing any connection, which can reduce negative emotions and encourage constructive dialogue. Used alongside blocking, this approach supports more open communication and can increase engagement and user satisfaction.

Case Studies: Community Notes and Block in Action

In practice, Community Notes improve transparency by attaching context to misleading posts, making content more accurate and trustworthy. Blocking, by contrast, improves your own experience by restricting interactions with unwanted accounts, creating a safer and more personalized environment. Together, the two tools show user-driven moderation at two scales: one protects the integrity of shared content, the other protects the individual user.

Choosing the Best Moderation Method

Choosing the best social media moderation method depends on factors such as the size of the platform's user base, the type of content, and the level of community engagement. AI-powered automated tools handle large volumes of content quickly but need human oversight for nuanced or context-sensitive cases. A hybrid approach that combines machine learning with dedicated human moderators tends to be more accurate and helps create a safer, more positive online environment.

socmedb.com