Photo illustration: Sockpuppet vs Spambot

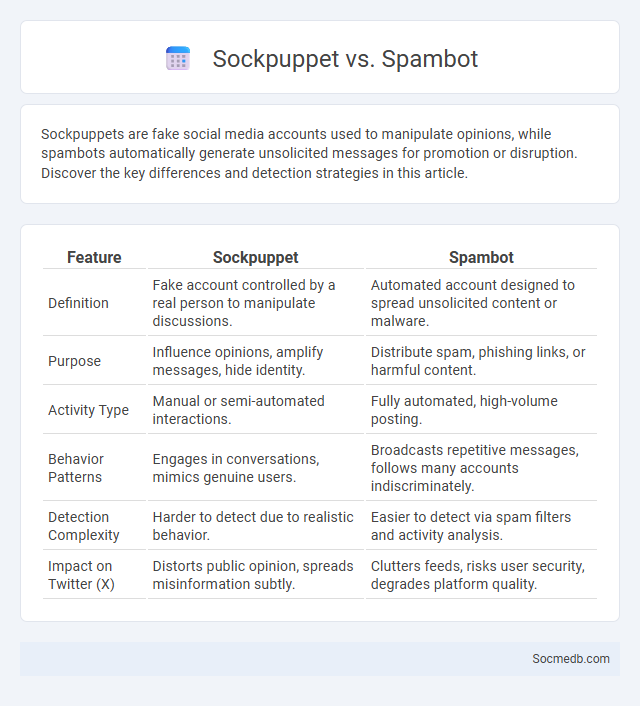

Sockpuppets are fake social media accounts used to manipulate opinions, while spambots automatically generate unsolicited messages for promotion or disruption. Discover the key differences and detection strategies in this article.

Comparison Table

| Feature | Sockpuppet | Spambot |
|---|---|---|
| Definition | Fake account controlled by a real person to manipulate discussions. | Automated account designed to spread unsolicited content or malware. |
| Purpose | Influence opinions, amplify messages, hide identity. | Distribute spam, phishing links, or harmful content. |
| Activity Type | Manual or semi-automated interactions. | Fully automated, high-volume posting. |
| Behavior Patterns | Engages in conversations, mimics genuine users. | Broadcasts repetitive messages, follows many accounts indiscriminately. |
| Detection Complexity | Harder to detect due to realistic behavior. | Easier to detect via spam filters and activity analysis. |
| Impact on Twitter (X) | Distorts public opinion, spreads misinformation subtly. | Clutters feeds, risks user security, degrades platform quality. |

Introduction: Defining Sockpuppets and Spambots

Sockpuppets are fake online identities created to manipulate social media discussions, often to spread misinformation or to amplify opinions dishonestly. Spambots are automated programs that post spam, pose as fake followers, and distort engagement metrics. Understanding how each works helps you protect your online presence from deceptive interactions and keep your social media experience authentic.

Understanding Sockpuppets: Hidden Agendas Online

Sockpuppets on social media are fake identities created to manipulate opinions, spread misinformation, or amplify certain viewpoints without revealing the true source. Recognizing these hidden agendas helps safeguard your online interactions and promotes a more authentic digital environment. Identifying patterns such as repetitive posting from new accounts or excessive praise for specific ideas can expose sockpuppetry.
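As a rough illustration of the first pattern, the sketch below flags recently created accounts whose posts largely repeat themselves. The `Account` record, the 30-day age cutoff, and the 50% repetition threshold are hypothetical choices made for this example, not values used by any platform.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Account:
    # Hypothetical minimal account record for this sketch.
    handle: str
    created: datetime
    posts: list[str] = field(default_factory=list)


def repetition_ratio(posts: list[str]) -> float:
    """Share of posts that repeat an earlier post, ignoring case and extra whitespace."""
    if not posts:
        return 0.0
    seen = set()
    repeats = 0
    for post in posts:
        key = " ".join(post.lower().split())  # normalize case and spacing
        if key in seen:
            repeats += 1
        else:
            seen.add(key)
    return repeats / len(posts)


def flag_possible_sockpuppets(accounts: list[Account], now: datetime,
                              max_age_days: int = 30,
                              min_repetition: float = 0.5) -> list[str]:
    """Return handles of recently created accounts whose posts are highly repetitive."""
    flagged = []
    for acct in accounts:
        is_new = now - acct.created < timedelta(days=max_age_days)
        if is_new and repetition_ratio(acct.posts) >= min_repetition:
            flagged.append(acct.handle)
    return flagged


now = datetime(2024, 6, 1)
accounts = [
    Account("fresh_fan", datetime(2024, 5, 25),
            ["Great idea!", "great idea!", "Great  idea!", "Love it"]),
    Account("longtime_user", datetime(2019, 1, 1), ["Great idea!", "great idea!"]),
    Account("new_but_varied", datetime(2024, 5, 28), ["Morning run done", "New book"]),
]
print(flag_possible_sockpuppets(accounts, now))  # ['fresh_fan']
```

Exact matching after normalization is deliberately crude: real sockpuppets often paraphrase, so a check like this would only catch the least careful operators.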

What Are Spambots? Automation in Digital Deception

Spambots are automated programs designed to mimic human behavior on social media platforms, generating spam and fraudulent interactions to manipulate user engagement. They exploit ranking algorithms by mass-producing fake comments, likes, and messages that distort the apparent state of public opinion and inflate follower counts. Increasingly, AI-driven spambots evade detection, complicating efforts to keep online conversations authentic and digital ecosystems safe.

Comparing Sockpuppets and Spambots: Key Differences

Sockpuppets are fake online identities controlled by a single person to deceive others, often used to manipulate opinions and create a false consensus on social media platforms. Spambots, by contrast, are automated programs that rapidly spread spam, promote scams, or distribute malicious content across social networks. The core difference is human control versus automation: sockpuppets rely on a person's judgment to blend in, while spambots trade subtlety for scale. Knowing this distinction helps you recognize each kind of deceptive behavior and navigate social media more safely.

Common Motivations Behind Sockpuppet Accounts

Sockpuppet accounts on social media are frequently created to manipulate public opinion, amplify certain viewpoints, or fabricate an artificial consensus. The people behind them often aim to evade bans or other restrictions, hide their identity, or covertly promote products, ideologies, or individuals. Political agendas, marketing campaigns, and online harassment are among the most commonly cited motivations for creating sockpuppet profiles.

Spambot Tactics: How Bots Manipulate Platforms

Spambots exploit social media algorithms through mass automated posting, networks of fake accounts, and keyword stuffing to manipulate visibility and engagement metrics. They often flood platforms with repetitive messages, misleading information, or malicious links, distorting what users see and amplifying misinformation. Platforms such as Facebook and Twitter (X) continue to refine detection methods, including machine learning models and behavioral analysis, to combat spambot activity and protect authentic user interactions.
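The behavioral signals described above (posting volume, link flooding, keyword stuffing) can be approximated with simple per-account statistics. This is a minimal sketch assuming we already have an account's posts over a known time window; the thresholds and the "two of three red flags" rule are illustrative assumptions, not any platform's actual policy.

```python
import re
from collections import Counter

URL_RE = re.compile(r"https?://\S+")
TOKEN_RE = re.compile(r"[a-z0-9#]+")


def spam_signals(posts: list[str], window_hours: float) -> dict[str, float]:
    """Volume, link, and keyword-stuffing signals for one account over a time window."""
    n = len(posts)
    if n == 0 or window_hours <= 0:
        return {"posts_per_hour": 0.0, "link_share": 0.0, "top_token_share": 0.0}
    link_posts = sum(1 for p in posts if URL_RE.search(p))
    # Tokenize with URLs stripped so links are not counted as keywords.
    tokens = [t for p in posts for t in TOKEN_RE.findall(URL_RE.sub(" ", p.lower()))]
    top_share = Counter(tokens).most_common(1)[0][1] / len(tokens) if tokens else 0.0
    return {
        "posts_per_hour": n / window_hours,
        "link_share": link_posts / n,
        "top_token_share": top_share,  # how much one word dominates the text
    }


def looks_like_spambot(posts: list[str], window_hours: float, max_rate: float = 20.0,
                       max_link_share: float = 0.8, max_token_share: float = 0.3) -> bool:
    """Flag the account when at least two of the three hypothetical thresholds are exceeded."""
    s = spam_signals(posts, window_hours)
    red_flags = sum([
        s["posts_per_hour"] > max_rate,
        s["link_share"] > max_link_share,
        s["top_token_share"] > max_token_share,
    ])
    return red_flags >= 2


bot_posts = ["Cheap deals deals deals http://x.example/a"] * 50
human_posts = ["Had a great lunch today",
               "Check out my blog post https://blog.example/p1",
               "Watching the game tonight"]
print(looks_like_spambot(bot_posts, window_hours=1))     # True
print(looks_like_spambot(human_posts, window_hours=24))  # False
```

Requiring several signals at once is a design choice meant to reduce false positives: an enthusiastic human may post often or share many links, but rarely does both while repeating the same keywords.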

Identifying Sockpuppets: Signs and Red Flags

Networks of sockpuppets often exhibit telltale patterns such as identical posting styles, repetitive content, and synchronized activity times across multiple accounts. Red flags include uncanny similarities in language, profile images, and interaction patterns that artificially boost engagement. More advanced detection techniques analyze metadata inconsistencies and network behavior to uncover hidden connections between fake personas.
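One way to look for hidden connections is to compare accounts pairwise on both what they say and when they are active. This sketch uses Jaccard similarity of word sets as a crude stand-in for language similarity, and Jaccard similarity of active hour-of-day buckets as a stand-in for synchronized timing. The input format and both 0.6 thresholds are assumptions made for illustration.

```python
from itertools import combinations


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union; 0.0 for two empty sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def word_set(posts: list[str]) -> set:
    """Lowercased, whitespace-split vocabulary of an account's posts."""
    return {w for p in posts for w in p.lower().split()}


def linked_pairs(accounts: dict, min_text_sim: float = 0.6, min_time_sim: float = 0.6):
    """Return account pairs that are similar in both wording and active hours.

    `accounts` maps handle -> (list of posts, iterable of active hours 0-23);
    this input format is an assumption of the sketch.
    """
    pairs = []
    for (h1, (p1, t1)), (h2, (p2, t2)) in combinations(sorted(accounts.items()), 2):
        text_sim = jaccard(word_set(p1), word_set(p2))
        time_sim = jaccard(set(t1), set(t2))
        if text_sim >= min_text_sim and time_sim >= min_time_sim:
            pairs.append((h1, h2))
    return pairs


accounts = {
    "alpha": (["vote yes on measure 5", "measure 5 is great"], [9, 10, 21]),
    "beta": (["Vote yes on measure 5", "Measure 5 is great"], [9, 10, 21]),
    "gamma": (["what a sunny day", "new recipe tonight"], [7, 13, 18]),
}
print(linked_pairs(accounts))  # [('alpha', 'beta')]
```

Comparing every pair grows quadratically with the number of accounts, so on a large platform a check like this would only be practical within a smaller set of candidate accounts.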

Automated vs Human Deception: Challenges in Detection

Detecting deception on social media presents significant challenges due to the nuanced differences between automated bots and human-generated falsehoods. Automated deception often follows identifiable patterns and repetitive behavior, while human deception leverages complex language and emotional cues. Your ability to discern these subtleties is crucial for maintaining accurate information and trust in digital interactions.
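One identifiable pattern of automated accounts is clockwork timing. A minimal sketch, assuming post timestamps in seconds: compute the coefficient of variation (standard deviation divided by mean) of the gaps between consecutive posts. Scheduled posting yields a value near zero, while human activity tends to be bursty. The 0.1 cutoff is a hypothetical choice, and bots that add random jitter to their schedule would slip past this check.

```python
from statistics import mean, pstdev


def interval_cv(timestamps):
    """Coefficient of variation (std / mean) of gaps between consecutive posts.

    Returns None when there are fewer than two gaps or the mean gap is zero.
    """
    ts = sorted(timestamps)
    gaps = [b - a for a, b in zip(ts, ts[1:])]
    if len(gaps) < 2 or mean(gaps) == 0:
        return None
    return pstdev(gaps) / mean(gaps)


def looks_scheduled(timestamps, max_cv=0.1):
    """True when posting intervals are suspiciously regular (hypothetical cutoff)."""
    cv = interval_cv(timestamps)
    return cv is not None and cv < max_cv


bot = [i * 600 for i in range(20)]        # a post every 10 minutes
human = [0, 40, 3600, 3700, 9000, 20000]  # bursty activity
print(looks_scheduled(bot), looks_scheduled(human))  # True False
```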

The Impact of Sockpuppets and Spambots on Online Communities

Sockpuppets and spambots severely disrupt online communities by amplifying misinformation and skewing public opinion, resulting in reduced trust and engagement among users. These deceptive practices manipulate social media algorithms, inflating follower counts and artificially boosting content visibility, which undermines authentic interactions. Effective detection methods and platform policies are crucial to preserving the integrity and health of digital ecosystems.

Prevention and Mitigation Strategies for Digital Manipulation

Preventing and mitigating digital manipulation on social media involves AI-driven detection systems that identify deepfakes, misinformation, and bot-generated content in real time. Platforms such as Facebook and Twitter (X) use machine learning algorithms to analyze user behavior patterns and flag suspicious accounts or posts, while user education campaigns teach people to verify sources and recognize deceptive content. Regulatory measures, combined with cross-industry collaboration, improve transparency and accountability and reduce the spread and impact of digital manipulation online.
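To make the machine-learning idea concrete, here is a toy logistic regression trained with plain gradient descent on a handful of invented, hand-labeled feature vectors. The three features (a scaled posting rate, link share, and duplicate-post ratio) and every data point are made up for illustration; real systems use far richer features and training data, and nothing here reflects how Facebook or X actually score accounts.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.5, epochs=2000):
    """Fit logistic regression weights with full-batch gradient descent on log-loss."""
    w = [0.0] * len(X[0])
    b = 0.0
    n = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for xi, yi in zip(X, y):
            # Prediction error for this example: predicted probability minus label.
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def suspicion_score(w, b, x):
    """Model's estimated probability that feature vector x belongs to a suspicious account."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Invented toy data: [scaled posting rate, link share, duplicate-post ratio].
X = [
    [0.90, 0.90, 0.80], [0.80, 1.00, 0.90], [0.95, 0.70, 0.85],  # labeled suspicious
    [0.10, 0.10, 0.00], [0.20, 0.30, 0.10], [0.05, 0.00, 0.05],  # labeled genuine
]
y = [1, 1, 1, 0, 0, 0]
w, b = train_logistic(X, y)
print(round(suspicion_score(w, b, [0.85, 0.9, 0.7]), 3))
print(round(suspicion_score(w, b, [0.15, 0.2, 0.05]), 3))
```

In practice a score like this would typically feed a review queue rather than trigger automatic bans, since human moderators can weigh context the features miss.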

socmedb.com