Photo illustration: WhatsApp moderation vs Telegram moderation

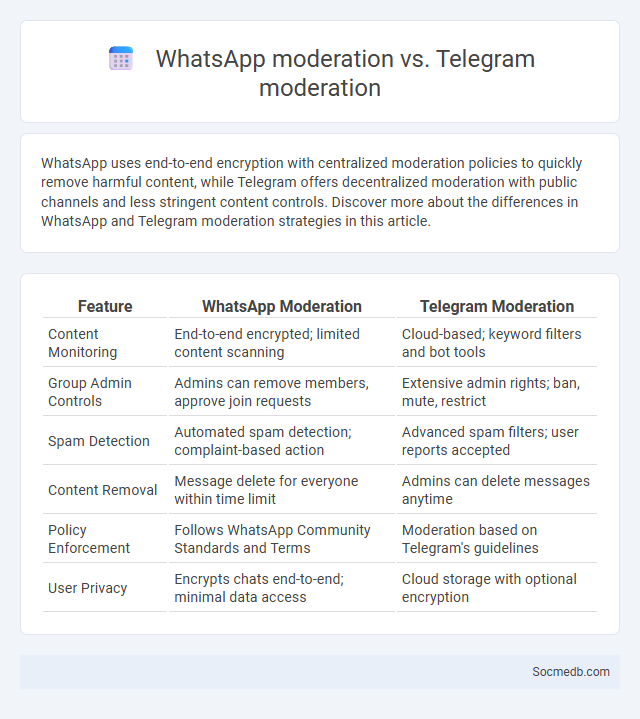

WhatsApp pairs end-to-end encryption with centrally enforced policies. Because it cannot read encrypted messages, it removes harmful content mainly on the basis of user reports and unencrypted signals such as account behavior. Telegram leaves much of its day-to-day moderation to the administrators of groups and channels and applies less stringent platform-wide content controls, particularly in public channels. This article compares the two moderation strategies.

Comparison Table

| Feature | WhatsApp Moderation | Telegram Moderation |
|---|---|---|
| Content Monitoring | End-to-end encrypted; limited content scanning | Cloud-based; keyword filters and bot tools |
| Group Admin Controls | Admins can remove members and approve join requests | Extensive admin rights: ban, mute, restrict |
| Spam Detection | Automated spam detection; action on user complaints | Automated spam filters; user reports accepted |
| Content Removal | Senders can delete for everyone within a time limit; group admins can delete members' messages | Admins can delete messages at any time |
| Policy Enforcement | Enforces WhatsApp's Terms of Service | Enforces Telegram's Terms of Service |
| User Privacy | Chats end-to-end encrypted; minimal data access | Cloud chats stored on Telegram's servers; end-to-end encryption only in optional Secret Chats |

Introduction to Messaging App Moderation

Messaging app moderation involves monitoring and managing user-generated content to keep communication on digital platforms safe and respectful. Techniques include automated filtering, AI-powered content analysis, and human review to detect spam, harassment, and inappropriate language. Effective moderation improves the user experience, reduces harmful interactions, and helps platforms comply with regulatory requirements across global markets.
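The automated-filtering step can be illustrated with a minimal rule-based sketch. The patterns and the names `keyword_filter` and `FilterResult` are hypothetical. Neither WhatsApp nor Telegram publishes its filter lists, and the AI-powered analysis mentioned above would supplement or replace rules like these with trained classifiers.

```python
import re
from dataclasses import dataclass

# Hypothetical blocklist for illustration only; production systems use much
# larger, language-specific lists alongside machine-learning classifiers.
BLOCKED_PATTERNS = (
    r"\bfree\s+crypto\b",
    r"\bclick\s+here\s+to\s+claim\b",
)


@dataclass
class FilterResult:
    flagged: bool        # True if any pattern matched
    matches: list[str]   # which patterns matched, shown to a reviewer


def keyword_filter(text: str, patterns=BLOCKED_PATTERNS) -> FilterResult:
    """Case-insensitively match a message against blocklist patterns.

    A match only flags the message; the decision to remove it can be
    left to a human reviewer.
    """
    matches = [p for p in patterns if re.search(p, text, flags=re.IGNORECASE)]
    return FilterResult(flagged=bool(matches), matches=matches)
```

For example, `keyword_filter("Click HERE to claim your free crypto!")` flags the message on both patterns, while an ordinary message passes through unflagged. Flagging instead of deleting outright keeps the false positives that simple keyword rules produce out of automatic enforcement.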

Overview of WhatsApp Moderation Policies

WhatsApp's moderation policies aim to keep communication safe and respectful by prohibiting spam, misinformation, hate speech, and illegal content. Personal messages are end-to-end encrypted, so WhatsApp cannot scan their content. Its automated systems instead rely on unencrypted information, such as account behavior and profile or group details, together with user reports. The policies are designed to meet legal obligations and enforce WhatsApp's terms while preserving the privacy that encryption provides.

Telegram’s Approach to Moderation

Telegram takes a largely decentralized, community-driven approach to moderation while emphasizing user privacy. The administrators of groups and channels set their own rules, remove content, and ban members, and users report violations to Telegram. The platform itself intervenes less often than traditional social media services, typically acting on reported public content such as channels, bots, and public groups instead of on private chats. Telegram presents this model as a balance between free speech and targeted action against harmful content.

Comparison of WhatsApp and Telegram Moderation Tools

WhatsApp combines end-to-end encryption with basic moderation features focused on spam and abuse reporting. It relies heavily on user reports and automated detection systems, and group admins can remove members and approve join requests. Telegram gives administrators more granular tools, including fine-grained admin rights, customizable bots built on its Bot API, and automated anti-spam filtering, which allow detailed control over content and user behavior. Your choice between the two depends on how much control and automation you need to manage an online community.
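Telegram's bot-based moderation can be sketched as a bot that escalates from deleting a message to muting and then banning a repeat offender. `deleteMessage`, `restrictChatMember`, and `banChatMember` are real Telegram Bot API methods. The escalation policy, the thresholds, and the `ModerationBot` class are hypothetical. This sketch only plans the calls. Sending them would require an HTTP request to `https://api.telegram.org/bot<TOKEN>/<method>` with JSON-encoded parameters, and the bot must be a group admin with the matching rights.

```python
from dataclasses import dataclass, field

# Hypothetical escalation policy: thresholds are illustrative assumptions.
MUTE_AFTER = 2  # strikes before the user is muted
BAN_AFTER = 3   # strikes before the user is banned


@dataclass
class ModerationBot:
    chat_id: int
    strikes: dict[int, int] = field(default_factory=dict)  # user_id -> count

    def handle_violation(self, user_id: int, message_id: int) -> list[tuple[str, dict]]:
        """Return the Bot API calls (method name, parameters) to issue."""
        self.strikes[user_id] = self.strikes.get(user_id, 0) + 1
        count = self.strikes[user_id]
        # Always remove the offending message first.
        calls = [("deleteMessage", {"chat_id": self.chat_id, "message_id": message_id})]
        if count >= BAN_AFTER:
            calls.append(("banChatMember", {"chat_id": self.chat_id, "user_id": user_id}))
        elif count >= MUTE_AFTER:
            # Muting = restricting the member's permission to send messages.
            calls.append((
                "restrictChatMember",
                {
                    "chat_id": self.chat_id,
                    "user_id": user_id,
                    "permissions": {"can_send_messages": False},
                },
            ))
        return calls
```

Keeping the decision logic separate from the HTTP layer makes the policy easy to test and to change without touching credentials or network code.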

User Reporting Mechanisms on WhatsApp and Telegram

User reporting mechanisms on WhatsApp and Telegram let the platforms identify and act on harmful content or abusive behavior quickly. WhatsApp users can report a contact, a group, or an individual message directly from the chat interface. Because chats are end-to-end encrypted, a report sends WhatsApp the most recent messages from that chat so its moderation team can review them. Telegram users can likewise report messages, users, groups, and channels from within the app. These reports help both apps detect spam, misinformation, and other violating content.
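A report-and-review flow can be sketched as a queue of reports, each carrying a short tail of the chat as evidence. WhatsApp describes its reports as including recent messages from the reported chat. The five-message window, the class names, and the first-in, first-out review order are assumptions, since neither app publishes its internal review pipeline.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional, Sequence

# Assumption: number of recent messages attached to a report (illustrative only).
RECENT_MESSAGES_ATTACHED = 5


@dataclass
class Report:
    reporter_id: str
    reported_id: str
    reason: str
    evidence: list[str]  # copy of the most recent messages from the reported chat


class ReportQueue:
    """First-in, first-out queue of user reports awaiting human review."""

    def __init__(self) -> None:
        self._pending: deque = deque()

    def submit(self, reporter_id: str, reported_id: str, reason: str,
               chat_history: Sequence[str]) -> Report:
        # Only the tail of the reporter's own copy of the chat is shared,
        # so moderators never need access to the rest of the conversation.
        evidence = list(chat_history[-RECENT_MESSAGES_ATTACHED:])
        report = Report(reporter_id, reported_id, reason, evidence)
        self._pending.append(report)
        return report

    def next_for_review(self) -> Optional[Report]:
        """Hand the oldest pending report to a reviewer, or None if empty."""
        return self._pending.popleft() if self._pending else None
```

Attaching only a bounded slice of the conversation is what makes user reports compatible with end-to-end encryption. The reporter, who can already read the messages, chooses to share them.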

Effectiveness Against Spam: WhatsApp vs Telegram

WhatsApp uses automated spam detection to limit unsolicited messages. Encryption itself does not filter spam, but detection systems can act on unencrypted signals such as how quickly an account sends messages and to whom. Telegram offers spam controls through automated bots and user reports, but its open API and large public groups can expose users to more unsolicited messages. Both platforms continually update their defenses. WhatsApp's tight integration with phone numbers and contact lists arguably makes it harder for spammers to reach users than Telegram's more open messaging environment.
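Spam detection based on behavior instead of content can be sketched as a sliding-window check on a sender's recent activity. It flags accounts that send too many messages or that message mostly people who do not have them as a contact. The class name, the signals, and every threshold are hypothetical; neither WhatsApp nor Telegram publishes its detection logic.

```python
from collections import deque


class SenderSpamScore:
    """Flag a sender from behaviour alone, without reading message content.

    All thresholds are hypothetical defaults chosen for illustration.
    Timestamps are assumed to arrive in non-decreasing order.
    """

    def __init__(self, window_seconds: float = 60.0, max_messages: int = 20,
                 max_non_contact_ratio: float = 0.8, min_sample: int = 5) -> None:
        self.window_seconds = window_seconds
        self.max_messages = max_messages
        self.max_non_contact_ratio = max_non_contact_ratio
        self.min_sample = min_sample  # messages needed before the ratio counts
        self._events: deque = deque()  # (timestamp, recipient_is_contact)

    def record(self, timestamp: float, recipient_is_contact: bool) -> bool:
        """Record one outgoing message; return True if the sender looks like a spammer."""
        self._events.append((timestamp, recipient_is_contact))
        # Slide the window: forget messages older than window_seconds.
        while timestamp - self._events[0][0] > self.window_seconds:
            self._events.popleft()
        total = len(self._events)
        strangers = sum(1 for _, is_contact in self._events if not is_contact)
        too_fast = total > self.max_messages
        mostly_strangers = (total >= self.min_sample
                            and strangers / total > self.max_non_contact_ratio)
        return too_fast or mostly_strangers
```

The `min_sample` guard stops a single message to a stranger from tripping the ratio check. A real system would also weigh signals such as account age and the number of user reports.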

Automated vs Manual Moderation Techniques

Automated moderation uses AI and machine-learning models to identify and filter inappropriate content quickly, enforcing community guidelines in near real time. Manual moderation relies on human reviewers, whose contextual judgment is essential for complex or sensitive issues that algorithms might miss. Balancing automated tools with human oversight strengthens your platform's ability to maintain a safe, engaging community.
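One common way to balance the two is confidence-based routing. This is assumed here for illustration and is not described by either app. A classifier assigns each item a harm score between 0 and 1. The system removes only the clearest cases automatically and sends uncertain ones to human reviewers. The threshold values and the `triage` function are hypothetical.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


def triage(harm_score: float, remove_threshold: float = 0.95,
           review_threshold: float = 0.6) -> Decision:
    """Route content by a classifier's estimated probability that it is harmful.

    Thresholds are illustrative assumptions, not values used by any platform.
    """
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be between 0 and 1")
    if harm_score >= remove_threshold:
        return Decision.REMOVE        # high confidence: act automatically
    if harm_score >= review_threshold:
        return Decision.HUMAN_REVIEW  # uncertain: needs human judgment
    return Decision.ALLOW
```

Lowering `review_threshold` sends more content to humans, which catches more subtle cases at the cost of reviewer workload. Raising `remove_threshold` reduces wrongful automatic removals.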

Privacy Implications in Moderation Practices

Platforms often collect extensive personal data, which raises significant privacy concerns in their moderation practices. Algorithms and human moderators analyze user content and can sometimes expose sensitive information without explicit consent. End-to-end encryption limits this exposure: in WhatsApp's personal chats, moderators see message content only when a participant reports it. To protect your privacy, check how each app uses and shares your data during content moderation.

Community Safety and Abuse Prevention

Effective social media platforms implement robust community safety measures, including real-time content monitoring and AI-driven abuse detection algorithms, to prevent harassment and harmful behavior. User reporting systems combined with proactive moderation teams help swiftly address violations and maintain respectful online environments. Data privacy protections and transparent enforcement policies further enhance trust and safety within digital communities.

Future Trends in Messaging App Moderation

Future trends in messaging app moderation emphasize advanced AI algorithms capable of detecting nuanced harmful content, including hate speech and misinformation, in real time. Integration of decentralized moderation frameworks is increasing, empowering user communities to participate in content regulation while maintaining privacy and transparency. Enhanced collaboration between platforms and regulatory bodies aims to establish standardized protocols for more effective, scalable, and ethical moderation practices.

socmedb.com
