Photo illustration: YouTube AI Moderation vs Human Moderation

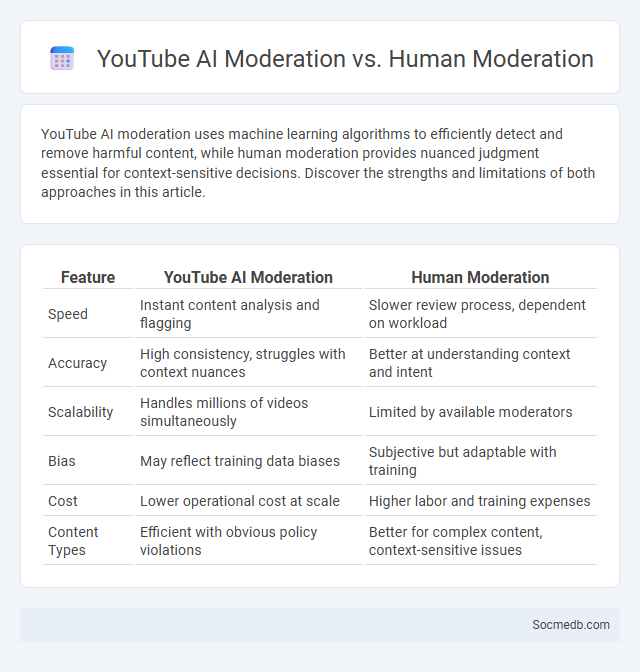

YouTube's AI moderation uses machine learning to detect and remove harmful content at scale, while human moderators supply the nuanced judgment that context-sensitive decisions require. This article compares the strengths and limitations of both approaches.

Table of Comparison

| Feature | YouTube AI Moderation | Human Moderation |
|---|---|---|
| Speed | Instant content analysis and flagging | Slower review process, dependent on workload |
| Accuracy | High consistency, struggles with context nuances | Better at understanding context and intent |
| Scalability | Handles millions of videos simultaneously | Limited by available moderators |
| Bias | May reflect training data biases | Subjective but adaptable with training |
| Cost | Lower operational cost at scale | Higher labor and training expenses |
| Content Types | Efficient with obvious policy violations | Better for complex content, context-sensitive issues |

Introduction to YouTube Content Moderation

YouTube enforces its Community Guidelines and removes harmful or inappropriate videos using a combination of machine learning systems and human reviewers. Machine learning models flag likely spam, hate speech, and misinformation in real time, helping keep the platform safer for its more than 2 billion logged-in monthly users. Effective moderation balances these automated systems with human judgment, which handles complex cases and helps maintain user trust.

What Is YouTube AI Moderation?

YouTube's AI moderation uses machine learning models to automatically detect and act on inappropriate content. These systems analyze video metadata, comments, and visual elements to identify violations of the Community Guidelines, such as hate speech, spam, and graphic content. By automating this first pass, YouTube can oversee far more content than human reviewers alone could cover, helping protect your experience from harmful or misleading material.
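
To make the idea of scanning metadata and comments concrete, here is a minimal sketch in Python. It is not YouTube's system: the category names and phrases in `POLICY_TERMS`, the `Video` type, and the `flag_video` function are all hypothetical, and a simple keyword match stands in for the trained classifiers a real platform would use. Visual analysis is left out.

```python
from dataclasses import dataclass, field

# Hypothetical policy categories and trigger phrases, for illustration only.
# A production system would use trained classifiers rather than keyword lists.
POLICY_TERMS: dict[str, set[str]] = {
    "spam": {"free money", "click here", "sub4sub"},
    "graphic": {"gore"},
}


@dataclass
class Video:
    """Text metadata for one upload (visual analysis is out of scope here)."""
    title: str
    description: str
    comments: list[str] = field(default_factory=list)


def flag_video(video: Video) -> dict[str, list[str]]:
    """Map each matched policy category to the names of the fields that triggered it."""
    fields = {"title": video.title, "description": video.description}
    for i, comment in enumerate(video.comments):
        fields[f"comment[{i}]"] = comment

    hits: dict[str, list[str]] = {}
    for category, terms in POLICY_TERMS.items():
        for name, text in fields.items():
            lowered = text.lower()  # case-insensitive substring match
            if any(term in lowered for term in terms):
                hits.setdefault(category, []).append(name)
    return hits


if __name__ == "__main__":
    upload = Video("Free money giveaway", "Click here now", ["nice video"])
    print(flag_video(upload))  # {'spam': ['title', 'description']}
```

Reporting which field matched, not just a yes/no verdict, is the kind of detail that makes an automated flag easier for a human reviewer to check.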

Human Moderators: Roles and Challenges

Human moderators play a critical role in maintaining the safety and integrity of social media platforms by reviewing user-generated content that automated systems miss or cannot confidently classify. They face challenges such as emotional strain from repeated exposure to disturbing material and the need to make nuanced decisions that account for cultural and contextual differences. Their work helps limit the spread of misinformation, hate speech, and cyberbullying, contributing to a healthier online environment for users worldwide.

Demonetization: Definition and Impact

Demonetization on social media refers to removing or restricting a creator's ability to earn revenue from their content, often because of policy violations or advertiser preferences; on YouTube, for example, a video may be marked as suitable for limited or no ads. This can significantly affect your income and shape your content strategy, since platforms like YouTube and Facebook use automated systems to detect sensitive or inappropriate material. Understanding demonetization helps you adapt your content to stay eligible for monetization while keeping your audience engaged.

AI Moderation vs. Human Moderation: Key Differences

AI moderation on social media processes vast amounts of content using machine learning and natural language processing to identify harmful or inappropriate material in real time. Human moderation, by contrast, relies on contextual understanding, empathy, and nuanced judgment to assess complex cases and ambiguous content that AI might misinterpret. Combining AI's scalability with human moderators' critical thinking can make content decisions more accurate, reducing false positives while improving user safety.
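
One common way to combine the two is confidence-based routing: the model acts alone only when it is very sure, and sends the ambiguous middle band to people. The Python sketch below illustrates that pattern under stated assumptions: `violation_score` is assumed to be a classifier's probability that an item violates policy, and the two thresholds are hypothetical values, not YouTube's.

```python
from enum import Enum


class Action(Enum):
    AUTO_REMOVE = "auto_remove"
    HUMAN_REVIEW = "human_review"
    ALLOW = "allow"


# Hypothetical thresholds; a real platform would tune these per policy area.
REMOVE_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.60


def route(violation_score: float) -> Action:
    """Decide what happens to one item given the model's violation probability."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be in [0, 1]")
    if violation_score >= REMOVE_THRESHOLD:
        return Action.AUTO_REMOVE   # model is confident: act automatically
    if violation_score >= REVIEW_THRESHOLD:
        return Action.HUMAN_REVIEW  # ambiguous: a person makes the call
    return Action.ALLOW


def triage(scores: dict[str, float]) -> dict[Action, list[str]]:
    """Sort item IDs into one queue per action."""
    queues: dict[Action, list[str]] = {action: [] for action in Action}
    for item_id, score in scores.items():
        queues[route(score)].append(item_id)
    return queues


if __name__ == "__main__":
    queues = triage({"vid_a": 0.99, "vid_b": 0.70, "vid_c": 0.10})
    for action, items in queues.items():
        print(action.value, items)
```

Raising `REVIEW_THRESHOLD` shrinks the human queue but lets more borderline content through unreviewed; lowering `REMOVE_THRESHOLD` speeds up enforcement at the cost of more false positives.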

Accuracy and Bias in AI Moderation

AI moderation aims to improve accuracy by using algorithms to detect harmful content at scale, but bias remains a problem because of limitations in training data and algorithmic design. As a result, automated decisions can treat some users or communities less fairly than others, for example by flagging posts written in certain dialects more often than comparable posts in others. Greater transparency and continuous refinement of AI models, including regular audits of error rates across groups, are crucial steps toward more accurate and equitable moderation.
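
One simple way to check for this kind of bias is to compare false positive rates (harmless content wrongly flagged) across groups in a human-labeled audit sample. The Python sketch below assumes such a sample exists as `(group, flagged_by_ai, actually_violating)` records; the group labels and data are hypothetical, and a real audit would also look at other metrics and at sample sizes.

```python
from collections import defaultdict
from typing import Iterable

# Each record is (group, flagged_by_ai, actually_violating), e.g. from a
# human-labeled audit sample. Group labels here are hypothetical.
Record = tuple[str, bool, bool]


def false_positive_rates(records: Iterable[Record]) -> dict[str, float]:
    """Share of non-violating items in each group that the AI wrongly flagged."""
    wrongly_flagged: dict[str, int] = defaultdict(int)
    non_violating: dict[str, int] = defaultdict(int)
    for group, flagged, violating in records:
        if violating:
            continue  # false positives are defined only over non-violating items
        non_violating[group] += 1
        if flagged:
            wrongly_flagged[group] += 1
    return {g: wrongly_flagged[g] / n for g, n in non_violating.items()}


def max_fpr_gap(rates: dict[str, float]) -> float:
    """Largest difference in false positive rate between any two groups."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    audit = [
        ("group_a", True, False), ("group_a", False, False),
        ("group_a", False, False), ("group_a", False, False),
        ("group_b", True, False), ("group_b", True, False),
        ("group_b", False, False), ("group_b", False, False),
        ("group_b", True, True),  # a correct flag; ignored by the FPR
    ]
    rates = false_positive_rates(audit)
    print(rates)               # {'group_a': 0.25, 'group_b': 0.5}
    print(max_fpr_gap(rates))  # 0.25
```

In this toy sample, harmless posts from `group_b` are wrongly flagged twice as often as those from `group_a`, which is the kind of gap an audit is meant to surface.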

Emotional Intelligence: Are Humans Superior?

Emotional intelligence plays a crucial role in social media interaction, allowing people to interpret and respond to the emotions behind digital communication. While AI algorithms can analyze patterns and predict behavior, humans excel at empathy, self-awareness, and nuanced emotional understanding that machines cannot fully replicate. In moderation, this advantage helps human reviewers read tone and intent, such as sarcasm or in-group humor, that automated systems often misjudge.

Demonetization Algorithms: How They Work

Demonetization algorithms use machine learning to detect content that may violate advertiser-friendly guidelines, copyright rules, or community standards. They evaluate signals such as keywords, video frames, and user interactions to classify content as eligible or ineligible for monetization, which directly affects your revenue. Understanding how these automated systems work can help you keep your content within platform rules and protect your earnings.
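
As a rough illustration of how several signals might be combined into one eligibility decision, the Python sketch below uses a weighted linear scoring rule with a threshold. Everything in it is an assumption for illustration: the `Signals` fields, the weights, caps, and threshold are hypothetical, and real platforms use learned models whose details are not public.

```python
from dataclasses import dataclass

# Hypothetical weights, caps, and threshold, chosen only for illustration.
WEIGHTS = {"keywords": 0.15, "frames": 0.6, "reports": 0.1}
KEYWORD_CAP = 4        # keyword hits beyond this add nothing
REPORT_CAP = 5.0       # reports per 1,000 views beyond this add nothing
LIMIT_THRESHOLD = 0.5


@dataclass
class Signals:
    keyword_hits: int       # advertiser-sensitive terms in title, description, or transcript
    max_frame_score: float  # highest per-frame "sensitive imagery" score from a vision model, 0 to 1
    report_rate: float      # viewer reports per 1,000 views


def sensitivity_score(s: Signals) -> float:
    """Weighted linear combination of capped signals, clamped to [0, 1]."""
    score = (
        WEIGHTS["keywords"] * min(s.keyword_hits, KEYWORD_CAP)
        + WEIGHTS["frames"] * s.max_frame_score
        + WEIGHTS["reports"] * min(s.report_rate, REPORT_CAP)
    )
    return max(0.0, min(score, 1.0))


def monetization_status(s: Signals) -> str:
    """Return 'limited' if the score reaches the threshold, else 'eligible'."""
    return "limited" if sensitivity_score(s) >= LIMIT_THRESHOLD else "eligible"


if __name__ == "__main__":
    print(monetization_status(Signals(0, 0.1, 0.2)))  # eligible
    print(monetization_status(Signals(2, 0.7, 1.0)))  # limited
```

The caps keep any single noisy signal, such as a burst of keyword matches, from deciding the outcome on its own; a learned model would typically capture such effects from data instead of hand-set limits.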

User Experience: Creator and Viewer Perspectives

Social media platforms prioritize seamless interfaces and engaging features for both creators and viewers. Creators benefit from intuitive content management tools and real-time analytics that help them optimize posts and grow their audience. Viewers get personalized feeds, interactive elements, and responsive design that make for a more dynamic and satisfying viewing experience.

Future Trends in YouTube Moderation Systems

YouTube's moderation systems are evolving quickly as AI and machine learning advance, enabling faster detection and removal of harmful content. Likely trends include more capable real-time video analysis and better automated understanding of context, aimed at improving accuracy without degrading the user experience. These developments, together with more transparent and adaptive moderation policies, should help create safer and more engaging communities.

socmedb.com