Photo illustration: YouTube Flagging vs Age Restriction

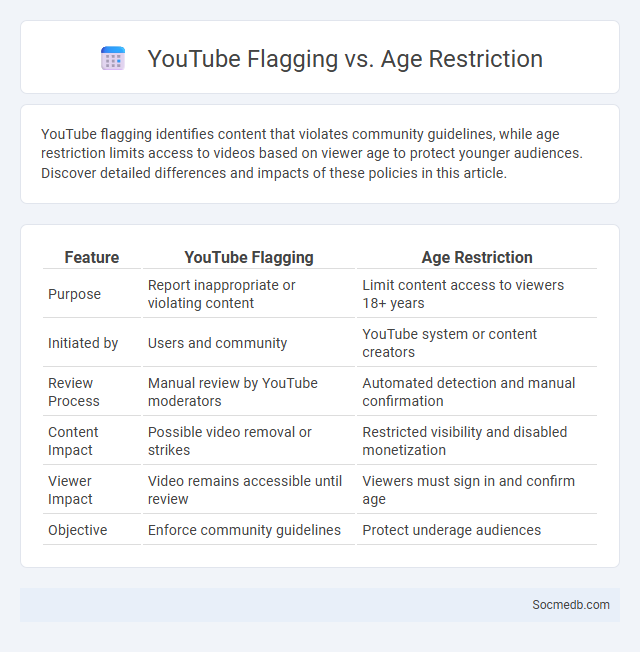

YouTube flagging lets viewers report content that may violate the Community Guidelines, while age restriction limits who can watch a video based on viewer age to protect younger audiences. This article explains how the two policies differ and how each affects viewers and creators.

Comparison Table

| Feature | YouTube Flagging | Age Restriction |
|---|---|---|
| Purpose | Report content that may violate the Community Guidelines | Limit access to viewers 18 and older |
| Initiated by | Viewers (and YouTube's automated systems) | YouTube or the content creator |
| Review process | Review by YouTube moderators | Automated detection or manual review; creators can also self-apply it |
| Content impact | Possible removal, age restriction, or a strike | Restricted visibility; limited or no ad revenue |
| Viewer impact | Video remains accessible until reviewed | Viewers must be signed in and 18 or older |
| Objective | Enforce Community Guidelines | Protect underage audiences |

Understanding YouTube’s Content Moderation Tools

YouTube moderates content with a combination of machine-learning systems and human reviewers. Together they identify and act on videos that violate its Community Guidelines. Copyright issues are handled separately, through tools such as Content ID and legal takedown requests. Automated flagging, age restrictions, and demonetization help keep the platform safe while balancing creator freedom against viewer safety. YouTube also publishes a Community Guidelines enforcement report with figures on removed videos and channels, which promotes accountability in moderating content from around the world.

What is YouTube Flagging?

YouTube flagging is the process by which viewers report content that may violate YouTube's Community Guidelines, such as hate speech or misinformation. Copyright infringement is reported through a separate legal complaint process rather than a standard flag. A flag does not penalize a video by itself. YouTube's reviewers assess the flagged content and decide whether to leave it up, remove it, age-restrict it, or limit its monetization. This lets the community surface harmful or inappropriate content for review.

The Purpose of Age Restriction on YouTube

Age restriction on YouTube limits videos that may be unsuitable for minors, such as violent, sexually suggestive, or otherwise sensitive material, to signed-in viewers who are 18 or older. It is separate from YouTube's COPPA compliance. That is handled through the "made for kids" designation, which limits data collection, personalized advertising, and comments on content aimed at children under 13. Together, these controls reduce minors' exposure to age-inappropriate content, advertising, and interactions.

Key Differences: Flagging vs Age Restriction

Flagging and age restriction are two distinct YouTube tools for protecting viewers and maintaining platform integrity.

Flagging is a reporting mechanism. Any viewer can report a video as inappropriate or harmful, which prompts moderators to evaluate it and, if warranted, remove it or limit its visibility.

Age restriction is an access control. It determines who can watch a video based on viewer age, keeping explicit or unsuitable material away from younger audiences.

The two are connected, since age restriction is one of the outcomes a flag review can produce.

How the Flagging Process Works

The flagging process on social media platforms lets users report content that appears to violate community guidelines or terms of service. Flagged content is then reviewed by automated systems and human moderators. They determine whether it breaches policies on hate speech, harassment, misinformation, or other prohibited content. On YouTube, the video remains available while this review is pending. An effective flagging mechanism improves platform safety by surfacing harmful posts quickly so they can be removed or restricted, which also helps platforms meet their legal obligations.
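The flow described above (report, pending review, decision) can be sketched as a tiny state model. This is a hypothetical illustration, not YouTube's implementation. The names `Video`, `flag`, `review`, and `Outcome` are invented for the example, and the outcomes are simplified to no action, age restriction, or removal.

```python
from dataclasses import dataclass, field
from enum import Enum


class Outcome(Enum):
    """Hypothetical, simplified review outcomes."""
    NO_ACTION = "no_action"
    AGE_RESTRICT = "age_restrict"
    REMOVE = "remove"


@dataclass
class Video:
    video_id: str
    visible: bool = True          # a flagged video stays up until it is reviewed
    age_restricted: bool = False
    pending_flags: list = field(default_factory=list)


def flag(video: Video, reason: str) -> None:
    """Record a viewer report; flagging alone does not change visibility."""
    video.pending_flags.append(reason)


def review(video: Video, outcome: Outcome) -> None:
    """Apply a moderator's decision, then clear the reports that triggered it."""
    if outcome is Outcome.REMOVE:
        video.visible = False
    elif outcome is Outcome.AGE_RESTRICT:
        video.age_restricted = True
    video.pending_flags.clear()


if __name__ == "__main__":
    v = Video("abc123")
    flag(v, "violent content")
    print(v.visible, v.pending_flags)   # True ['violent content']
    review(v, Outcome.AGE_RESTRICT)
    print(v.visible, v.age_restricted)  # True True
```

Keeping `flag` and `review` as separate steps mirrors the key point of the section: a flag is only a request for review, and any penalty comes from the review decision.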

Criteria for Age Restriction Enforcement

Social media platforms enforce age restrictions using several criteria:

- Minimum age requirements set by laws, such as COPPA in the United States, which restricts collecting data from children under 13, and the GDPR in the EU, which lets member states set a digital-consent age between 13 and 16.
- User verification methods, such as ID checks or AI-driven age estimation.
- Each platform's own community guidelines.

These criteria aim to protect minors from inappropriate content, prevent data collection from underage users, and comply with age-related laws in each jurisdiction. Effective enforcement relies on continuous monitoring, reporting mechanisms, and technological tools that verify age and restrict access accordingly.
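The access rule for an age-restricted YouTube video (signed in and 18 or older) can be sketched as a small check. This is a hypothetical model, not YouTube's code. `Viewer`, `can_watch`, and `MINIMUM_AGE` are illustrative names. The different verification methods (account birthdate, ID checks, age estimation) are collapsed into a single `age` field that is `None` when the age is unknown or unverified.

```python
from dataclasses import dataclass
from typing import Optional

MINIMUM_AGE = 18  # age-restricted YouTube videos are limited to viewers 18 and older


@dataclass
class Viewer:
    signed_in: bool
    age: Optional[int] = None  # None means the age is unknown or unverified (assumption)


def can_watch(viewer: Viewer, age_restricted: bool) -> bool:
    """Decide whether a viewer may play a video (hypothetical, simplified rule)."""
    if not age_restricted:
        return True
    # Restricted videos need a signed-in viewer whose age is known to meet the minimum.
    if not viewer.signed_in or viewer.age is None:
        return False
    return viewer.age >= MINIMUM_AGE


if __name__ == "__main__":
    print(can_watch(Viewer(signed_in=False), age_restricted=True))        # False
    print(can_watch(Viewer(signed_in=True, age=21), age_restricted=True))  # True
```

Treating an unknown age as "not allowed" is a deliberately conservative choice. It matches the idea that restricted content should be hidden unless the viewer's age is confirmed.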

Appeals and Review: User Options

Creators who believe a moderation decision was wrong can appeal it. On YouTube, creators can appeal Community Guidelines strikes, video removals, and age restrictions. The decision is then reviewed again and either upheld or reversed. Appeals give creators a way to correct mistakes made by automated systems or reviewers, which helps maintain trust in the moderation process.

Impact on Content Creators’ Monetization

Social media platforms have expanded monetization opportunities for content creators. Revenue streams include sponsored posts, affiliate marketing, and direct fan support, whether through third-party services like Patreon or built-in features like YouTube's Super Chat. Recommendation algorithms favor engaging content, so they strongly influence earnings by determining visibility and audience reach. Moderation decisions matter too: on YouTube, age-restricted videos may earn limited or no ad revenue, and videos removed after a flag review stop earning entirely. This dependence on platform policies and frequent algorithm changes makes income unstable and long-term financial growth harder to sustain.

Community Guidelines and Policy Violations

Social media platforms enforce Community Guidelines to maintain safe, respectful online environments and prevent harmful behavior such as hate speech, harassment, and misinformation. Policy violations, including the spread of false information or inappropriate content, often result in account suspensions or content removal to protect users and promote healthy interactions. These enforcement measures are constantly updated to address emerging threats and comply with legal regulations globally.
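On YouTube, Community Guidelines violations are tracked with strikes. Per YouTube's published policy, each strike expires 90 days after it is issued, and three strikes within the same 90-day period lead to channel termination. The sketch below models only that rule and makes three assumptions:

- The one-time warning YouTube typically gives for a first violation is not modeled.
- The function names are hypothetical.
- A strike is treated as expired exactly at the 90-day mark.

```python
from __future__ import annotations

from datetime import date, timedelta

STRIKE_LIFETIME = timedelta(days=90)  # a strike stops counting 90 days after issue (boundary assumed)
TERMINATION_THRESHOLD = 3             # three active strikes terminate the channel


def active_strikes(strike_dates: list[date], today: date) -> int:
    """Count strikes issued on or before `today` that have not yet expired."""
    return sum(1 for issued in strike_dates
               if issued <= today and today - issued < STRIKE_LIFETIME)


def channel_terminated(strike_dates: list[date]) -> bool:
    """Return True if any new strike brought the active count to the threshold."""
    return any(active_strikes(strike_dates, issued) >= TERMINATION_THRESHOLD
               for issued in strike_dates)


if __name__ == "__main__":
    start = date(2024, 1, 1)
    spaced_out = [start, start + timedelta(days=100), start + timedelta(days=200)]
    clustered = [start, start + timedelta(days=30), start + timedelta(days=60)]
    print(channel_terminated(spaced_out))  # False
    print(channel_terminated(clustered))   # True
```

Checking the active count at the moment each strike is issued captures why timing matters. Three strikes spread over several months never coexist, while three clustered within 90 days do.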

Best Practices to Avoid Flagging and Age Restriction

To avoid flagging and age restrictions, make sure all content complies with community guidelines. Avoid sensitive material such as explicit language, graphic violence, or alcohol promotion aimed at minors. If a video does contain mature material, age-restrict it yourself during upload rather than waiting for a reviewer to do so. Regularly review platform policy updates and use proactive moderation to keep content appropriate and reduce the risk of account penalties.

socmedb.com