Photo illustration: YouTube Flagging vs Automatic Detection

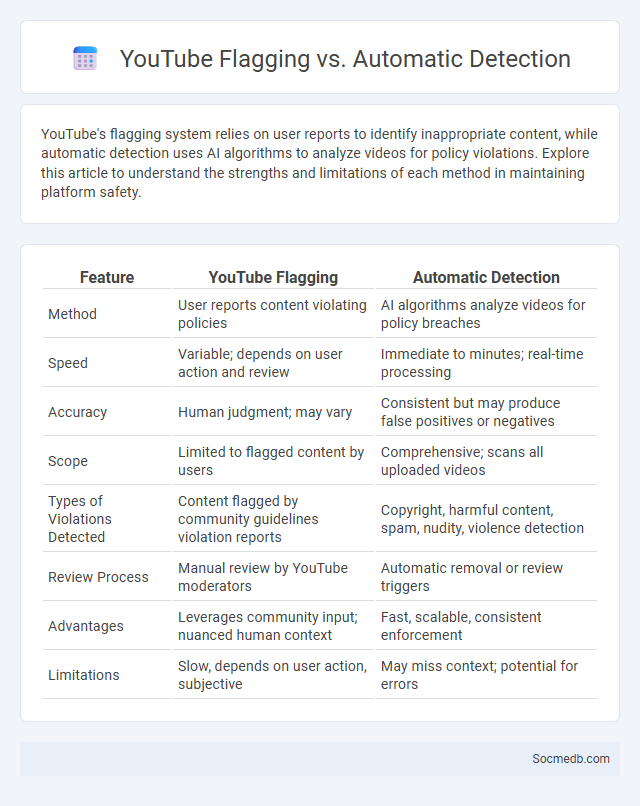

YouTube's flagging system relies on user reports to identify inappropriate content, while automatic detection uses AI algorithms to analyze videos for policy violations. Explore this article to understand the strengths and limitations of each method in maintaining platform safety.

Table of Comparison

| Feature | YouTube Flagging | Automatic Detection |
|---|---|---|
| Method | Users report content that violates policies | AI algorithms analyze videos for policy breaches |
| Speed | Variable; depends on user action and review | Immediate to minutes; near real-time processing |
| Accuracy | Human judgment; may vary | Consistent, but may produce false positives or false negatives |
| Scope | Limited to content that users flag | Comprehensive; scans all uploaded videos |
| Types of Violations Detected | Any community guidelines violation that users report | Copyright, harmful content, spam, nudity, violence |
| Review Process | Manual review by YouTube moderators | Automatic removal or review triggers |
| Advantages | Leverages community input; nuanced human context | Fast, scalable, consistent enforcement |
| Limitations | Slow, depends on user action, subjective | May miss context; potential for errors |

Introduction to YouTube Content Moderation

YouTube content moderation involves monitoring and managing videos, comments, and community interactions to ensure compliance with platform policies and community guidelines. Effective moderation uses AI algorithms combined with human reviewers to detect harmful content such as hate speech, misinformation, and inappropriate material promptly. Your experience on YouTube improves as harmful content is minimized, fostering a safer and more enjoyable environment for creators and viewers alike.

Overview of YouTube Flagging Systems

YouTube's flagging system allows users to report videos that violate community guidelines, including issues like hate speech, harassment, and copyright infringement. Reported content undergoes a review process by YouTube's moderation team and automated systems to determine appropriate actions, such as age restriction, removal, or demonetization. This system plays a critical role in maintaining platform safety and compliance with legal standards while balancing free expression.

What is Manual User Flagging?

Manual user flagging is a process on social media platforms in which users actively report content they find inappropriate, misleading, or harmful. This system relies on community participation to identify violations of platform policies, helping moderators prioritize and address flagged content efficiently. Effective manual flagging supports a safer and more respectful online environment by leveraging user vigilance.
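To picture how user reports could help moderators prioritize, here is a minimal sketch. It assumes each report is just a video ID and that the review queue is ordered by how many reports a video has received. The function name `review_queue` and the sample IDs are hypothetical, and a real platform would presumably weigh many more signals than a raw count.

```python
from collections import Counter

def review_queue(reports):
    """Order reported video IDs by number of reports, most reported first.

    `reports` is a list of video IDs, one entry per user report.
    This ranking rule is an illustrative assumption, not YouTube's method.
    """
    counts = Counter(reports)
    # most_common() sorts by count in descending order.
    return [video_id for video_id, _ in counts.most_common()]

# Hypothetical sample data: six reports across three videos.
reports = ["v1", "v2", "v1", "v3", "v1", "v2"]
print(review_queue(reports))
```

In this toy data, the video with the most reports is reviewed first and the one with a single report is reviewed last.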

How Does Automatic Detection Work on YouTube?

Automatic detection on YouTube uses machine learning algorithms and content identification systems such as Content ID to scan videos for copyrighted material. The platform analyzes audio, video, and metadata to match uploads against a large database of protected content, so potential infringements can be identified quickly. This technology helps protect your content rights by flagging unauthorized use and making it easier for rights holders to file claims. Similar automated checks are applied to other policy areas, including harmful content, spam, nudity, and violence.
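The idea of matching uploads against a database of protected content can be sketched with a toy fingerprinting scheme. Content ID's actual implementation is not public, so everything here is an assumption made for illustration: media is represented as a list of integers, a fingerprint is the set of hashes of overlapping windows, and a match is declared when enough of a reference's windows appear in the upload. The names `fingerprint` and `best_match`, the window size, and the threshold are all hypothetical.

```python
from hashlib import sha1

def fingerprint(samples, window=4):
    """Return the set of hashes of every overlapping window of `samples`."""
    return {
        sha1(repr(tuple(samples[i:i + window])).encode()).hexdigest()
        for i in range(len(samples) - window + 1)
    }

def best_match(upload, references, window=4, threshold=0.5):
    """Return (reference_id, score) for the best match, or None.

    The score is the fraction of a reference's windows found in the upload.
    The threshold is an arbitrary illustrative value.
    """
    upload_fp = fingerprint(upload, window)
    best = None
    for ref_id, ref_samples in references.items():
        ref_fp = fingerprint(ref_samples, window)
        if not ref_fp:
            continue  # reference shorter than one window
        score = len(upload_fp & ref_fp) / len(ref_fp)
        if score >= threshold and (best is None or score > best[1]):
            best = (ref_id, score)
    return best

# Hypothetical reference database and an upload that embeds "song_a".
references = {
    "song_a": [1, 2, 3, 4, 5, 6, 7, 8],
    "song_b": [9, 9, 8, 7, 6, 5, 4, 3],
}
upload = [0, 0, 1, 2, 3, 4, 5, 6, 7, 8, 0]
print(best_match(upload, references))
```

Exact hashing like this only catches verbatim copies. Production systems would need fingerprints that tolerate re-encoding, pitch shifts, or cropping, which this sketch does not attempt.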

Comparing Human Flagging and AI Detection

Human flagging relies on users identifying and reporting inappropriate content based on personal judgment, which can lead to subjective biases and slower response times. AI detection utilizes machine learning algorithms to automatically scan large volumes of data, enabling faster identification of harmful posts but sometimes missing nuanced context. Combining human flagging with AI detection enhances accuracy and efficiency in moderating social media platforms by leveraging the strengths of both approaches.
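One way to combine the two approaches is a simple routing rule, sketched below under stated assumptions. It supposes that an AI model produces a violation score between 0 and 1, that very confident detections are removed automatically, and that uncertain detections or repeatedly flagged videos go to a human reviewer. The function `route` and all threshold values are hypothetical and are not taken from any platform's documentation.

```python
def route(ai_score, user_flags, remove_at=0.95, review_at=0.6, flag_limit=3):
    """Decide what happens to a video given an AI score and a flag count.

    ai_score: model confidence (0.0 to 1.0) that the video violates policy.
    user_flags: number of user reports received.
    All thresholds are illustrative assumptions.
    """
    if ai_score >= remove_at:
        return "auto_remove"    # AI is very confident: act immediately
    if ai_score >= review_at or user_flags >= flag_limit:
        return "human_review"   # uncertain AI or many reports: ask a person
    return "no_action"

print(route(0.97, 0))  # confident AI detection
print(route(0.70, 0))  # uncertain AI detection
print(route(0.10, 5))  # AI sees nothing, but users keep reporting
print(route(0.10, 1))  # single report, low AI score
```

The design choice here is that user flags can escalate a video to review even when the AI score is low, which is where human context can catch what the algorithm misses.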

Strengths of Manual Flagging

Manual flagging leverages human judgment to accurately identify and report inappropriate or harmful content that automated systems might miss. This method enhances content moderation by capturing context, nuance, and cultural sensitivities, ensuring a safer and more respectful social media environment. Your active participation in manual flagging directly contributes to maintaining platform integrity and improving user experience.

Limitations of Automatic Detection Algorithms

Automatic detection algorithms on social media face significant limitations. They have difficulty identifying context, sarcasm, and nuanced language, which leads to both false positives (acceptable content flagged) and false negatives (violating content missed). They also often struggle with evolving slang, multilingual content, and sophisticated manipulation tactics such as deepfakes or coordinated misinformation campaigns. Consequently, relying solely on automatic detection can undermine effective content moderation and user safety.
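False positive and false negative rates can be measured by comparing a detector's decisions with human-verified labels. The following is a minimal sketch, assuming each video has a boolean prediction (flagged or not) and a boolean ground-truth label (violating or not). The function name `error_rates` and the sample data are hypothetical.

```python
def error_rates(predictions, labels):
    """Compute false positive and false negative rates.

    predictions: list of bools, True if the detector flagged the video.
    labels: list of bools, True if the video actually violates policy.
    """
    tp = fp = tn = fn = 0
    for predicted, actual in zip(predictions, labels):
        if predicted and actual:
            tp += 1
        elif predicted and not actual:
            fp += 1   # acceptable video wrongly flagged
        elif not predicted and actual:
            fn += 1   # violating video missed
        else:
            tn += 1
    return {
        # Share of acceptable videos that were flagged.
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
        # Share of violating videos that were missed.
        "false_negative_rate": fn / (fn + tp) if (fn + tp) else 0.0,
    }

# Hypothetical sample: six videos with detector output and verified labels.
predictions = [True, True, False, False, True, False]
labels = [True, False, False, True, True, False]
print(error_rates(predictions, labels))
```

Tracking both rates matters because tuning a detector to lower one usually raises the other.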

Challenges in Balancing Accuracy and Speed

Maintaining accuracy while delivering fast updates on social media presents a significant challenge, as misinformation can easily spread and damage credibility. You must implement rigorous fact-checking processes without compromising the speed essential for timely news and engagement. Leveraging AI tools and trusted sources helps enhance accuracy while meeting the demand for rapid content delivery.

Impact on Creators and Viewers

Social media platforms have revolutionized content creation by providing creators with global reach and direct audience engagement, significantly increasing opportunities for monetization and brand building. Audiences benefit from diverse content tailored to niche interests, fostering interactive communities and real-time feedback loops that shape content trends. The symbiotic relationship enhances creativity and democratizes information sharing, while also raising challenges like content oversaturation and mental health effects for both creators and viewers.

Future Trends in YouTube Content Moderation

Future trends in YouTube content moderation emphasize advanced AI-driven algorithms that enhance the detection of harmful content with greater accuracy and speed. Your experience will benefit from improved transparency tools and user controls enabling more personalized content filtering. Increased collaboration between human moderators and machine learning systems is set to reduce false positives while fostering safer community environments.

socmedb.com
