Photo illustration: YouTube Flagging vs Community Guidelines Strike

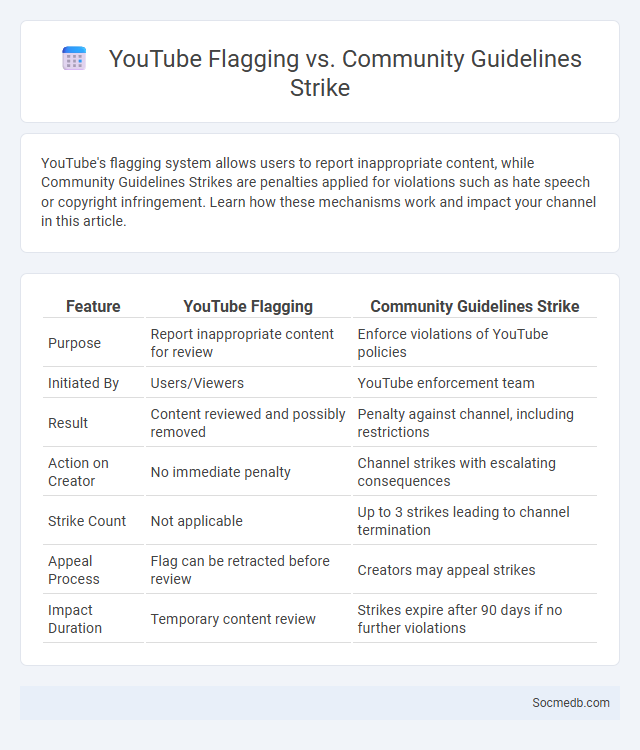

YouTube's flagging system lets users report content they believe is inappropriate, while Community Guidelines strikes are penalties YouTube applies to a channel after confirming a violation such as hate speech or harassment. Copyright infringement is handled through a separate copyright strike system. This article explains how each mechanism works and how it can affect your channel.

Table of Comparison

| Feature | YouTube Flagging | Community Guidelines Strike |
|---|---|---|
| Purpose | Report potentially inappropriate content for review | Enforce YouTube's Community Guidelines after a confirmed violation |
| Initiated By | Users/viewers (plus Trusted Flaggers and automated systems) | YouTube, after reviewing the content |
| Result | Content is reviewed and removed or restricted only if it violates policy | Penalty against the channel, such as temporary upload restrictions |
| Action on Creator | No penalty from the flag itself | Escalating consequences with each additional strike |
| Strike Count | Not applicable | Three strikes within the same 90-day period lead to channel termination |
| Appeal Process | Not applicable (a flag alone carries no penalty) | Creators may appeal strikes |
| Impact Duration | Lasts only as long as the review | Each strike expires 90 days after it was issued |

Understanding YouTube’s Moderation System

YouTube's moderation system combines machine learning models with human reviewers to detect and remove content that violates its Community Guidelines. Automated models analyze videos, comments, and metadata to identify likely hate speech, misinformation, and other harmful content, while human reviewers assess cases that need context. The platform updates its policies and tools over time to address new kinds of abuse and improve safety for its global audience.

What Is YouTube Flagging?

YouTube flagging lets users report content they believe violates the Community Guidelines, such as hate speech, spam, or harassment. Copyright complaints go through a separate legal removal process rather than Community Guidelines review. A flagged video is not taken down automatically: YouTube reviews it and removes or restricts it only if it breaks a policy. Understanding how flagging works helps you protect your channel and contributes to a safer, more compliant platform.

How Community Guidelines Enforce Content Standards

Community guidelines define specific rules that regulate acceptable behavior and content on social media platforms, ensuring a safe and respectful environment for users. These standards are enforced through algorithms and human moderators who review reports, remove violating content, and issue warnings or bans for repeat offenders. Your adherence to these guidelines helps maintain the platform's integrity and promotes positive interaction within the online community.

Flagging vs Community Guidelines Strike: Key Differences

Flagging content on social media involves users reporting posts that may violate platform rules, triggering a review process by moderators. Community Guidelines Strikes are formal penalties applied when verified violations occur, often leading to consequences such as content removal, account restrictions, or suspensions. Understanding the difference helps you navigate platform safety measures and protect your online presence effectively.

The Flagging Process Step-by-Step

On social media, the flagging process starts when a user reports inappropriate or harmful content and selects a specific reason, such as hate speech or misinformation. The flagged content then goes through automated scanning and review by moderation teams to determine whether it violates platform policies. If a violation is confirmed, the content may be removed or restricted, and the account responsible may face temporary or permanent suspension; if no violation is found, the content stays up.
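The review flow described above can be sketched as a toy Python model. Everything in it is hypothetical: the `Flag` and `Outcome` types, the `triage` and `process` functions, and `toy_reviewer` (a stand-in for automated scanning plus human review) are illustrative names, not part of any YouTube API. The sketch shows the key idea that a flag only queues content for review: repeated flags on the same video collapse into a single review item, and the reviewer's judgment, not the flag count, decides the outcome.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Outcome(Enum):
    NO_ACTION = "no action"    # content stays up, no penalty
    RESTRICTED = "restricted"  # e.g. age-restricted or limited features
    REMOVED = "removed"        # content taken down


@dataclass(frozen=True)
class Flag:
    video_id: str
    reason: str  # reason the user selected, e.g. "spam" or "hate speech"


def triage(flags: list[Flag]) -> dict[str, set[str]]:
    """Group flags by video so each video enters the review queue once."""
    queue: dict[str, set[str]] = defaultdict(set)
    for flag in flags:
        queue[flag.video_id].add(flag.reason)
    return dict(queue)


def process(
    flags: list[Flag], reviewer: Callable[[str, set[str]], Outcome]
) -> dict[str, Outcome]:
    """Review each queued video; the number of flags never decides the outcome."""
    return {vid: reviewer(vid, reasons) for vid, reasons in triage(flags).items()}


def toy_reviewer(video_id: str, reasons: set[str]) -> Outcome:
    # Hypothetical stand-in for automated scanning plus human review.
    # In this toy example, only "vid_b" is judged to violate policy.
    return Outcome.REMOVED if video_id == "vid_b" else Outcome.NO_ACTION


# 50 spam flags on vid_a do not remove it; the single flag on vid_b leads
# to removal only because the reviewer found a violation.
flags = [Flag("vid_a", "spam")] * 50 + [Flag("vid_b", "hate speech")]
for video_id, outcome in process(flags, toy_reviewer).items():
    print(video_id, outcome.value)
```

Passing the reviewer in as a function keeps the queueing logic separate from the policy decision, which mirrors the split between collecting reports and judging them.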

How Community Guidelines Strikes Affect Your Channel

Community Guidelines strikes directly limit what you can publish and can also affect your channel's visibility and monetization. On YouTube, a first strike brings a one-week freeze on uploading videos, live streaming, and similar activity; a second strike within the same 90-day period brings a two-week freeze; and a third strike within that period leads to permanent removal of the channel. Staying informed about platform policies helps you avoid violations and keep your channel growing.
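YouTube's published strike rules are that each strike expires 90 days after it is issued, a first active strike brings a one-week freeze, a second a two-week freeze, and a third within 90 days terminates the channel. Under those rules, escalation can be expressed as a small Python model. It is a simplified sketch, not YouTube's implementation. The `Channel` class, `add_strike`, and the `CONSEQUENCES` wording are hypothetical. The model treats a strike as expired exactly 90 days after issue. It also leaves out the one-time warning YouTube generally gives for a first violation, as well as appeals.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta

# Simplified model: a strike stops counting 90 days after it is issued.
STRIKE_LIFETIME = timedelta(days=90)

# Consequence triggered by the Nth active strike (wording is illustrative).
CONSEQUENCES = {
    1: "one-week freeze on uploads and live streams",
    2: "two-week freeze on uploads and live streams",
    3: "channel terminated",
}


@dataclass
class Channel:
    strikes: list[date] = field(default_factory=list)  # issue dates
    terminated: bool = False

    def active_strikes(self, today: date) -> list[date]:
        """Return strikes issued fewer than 90 days before `today`."""
        return [d for d in self.strikes if today - d < STRIKE_LIFETIME]

    def add_strike(self, today: date) -> str:
        """Record a strike issued on `today` and return its consequence."""
        if self.terminated:
            raise ValueError("channel is already terminated")
        self.strikes.append(today)
        count = len(self.active_strikes(today))
        if count >= 3:
            self.terminated = True
        return CONSEQUENCES[min(count, 3)]


demo = Channel()
print(demo.add_strike(date(2024, 1, 1)))   # 1 active strike
print(demo.add_strike(date(2024, 5, 1)))   # January strike expired: 1 active again
print(demo.add_strike(date(2024, 5, 20)))  # 2 active strikes within 90 days
```

Because each strike expires on its own schedule, spacing matters: in this model, three strikes where the first and third are 90 or more days apart never trigger termination.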

Common Reasons for Videos Being Flagged

Videos on social media are commonly flagged for violating community guidelines such as containing hate speech, explicit content, or misinformation. Content promoting violence, harassment, or copyright infringement also frequently triggers flagging mechanisms. Social media platforms utilize automated systems and user reports to identify and review potentially harmful or inappropriate video content.

Resolving and Appealing Community Guideline Strikes

Resolving community guideline strikes on social media involves carefully reviewing the platform's policies and identifying the specific content that triggered the strike. You can appeal these decisions by submitting a detailed request explaining why the content complies with guidelines, often including evidence or context that supports your case. Timely and clear communication with the platform increases the chances of successfully reversing the strike and maintaining your account's standing.

Best Practices to Avoid Flagging and Strikes

To protect your social media account from flagging and strikes, follow each platform's community guidelines consistently and avoid sharing false information or copyrighted material you do not have permission to use. Publish original content and credit any third-party material, keeping in mind that attribution alone does not grant permission to use copyrighted work. Reviewing your account activity regularly helps you address potential violations quickly and keep your online presence secure.

Impact of Repeated Offenses on YouTube Channel Status

Repeated offenses on a YouTube channel can lead to severe consequences, from temporary upload freezes and reduced reach to permanent termination of the account. Under YouTube's strike system, three Community Guidelines strikes within the same 90-day period result in channel termination, and active strikes also diminish your channel's standing and monetization eligibility. Understanding these consequences is crucial for maintaining your channel's integrity and long-term growth.

socmedb.com